April 28th, 2017

You probably know that walking does your body good, but it’s not just your heart and muscles that benefit. Researchers at New Mexico Highlands University (NMHU) found that the foot’s impact during walking sends pressure waves through the arteries that significantly modify and can increase the supply of blood to the brain. The research was presented at the APS annual meeting at Experimental Biology 2017 in Chicago.

Until recently, the blood supply to the brain (cerebral blood flow or CBF) was thought to be involuntarily regulated by the body and relatively unaffected by changes in the blood pressure caused by exercise or exertion. However, advanced imaging techniques such as MRI of the brain have allowed researchers to observe the effects of these changes on CBF, providing new insights into its regulation.

In the current study, the research team used non-invasive ultrasound to measure blood velocity waves and arterial diameters in the internal carotid arteries of 12 healthy young adults, both at rest while standing upright and during steady walking (1 meter/second), and used these measurements to calculate hemispheric CBF to each side of the brain. The researchers found that although walking involves lighter foot impact than running, it still produces pressure waves in the body large enough to significantly increase blood flow to the brain. While the effects of walking on CBF were less dramatic than those of running, they were greater than those seen during cycling, which involves no foot impact at all.
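Ultrasound-based flow estimates conventionally multiply the time-averaged blood velocity by the vessel's cross-sectional area. The sketch below shows only that arithmetic, under stated assumptions: a circular lumen, a velocity already averaged over the cardiac cycle, and illustrative inputs (30 cm/s, 5 mm) that are not measurements from the NMHU study, whose exact processing is not described here. The function name is hypothetical.

```python
import math


# Hypothetical helper illustrating velocity x area; not the study's own code.
def hemispheric_cbf_ml_per_min(mean_velocity_cm_s: float, diameter_cm: float) -> float:
    """Estimate flow in one internal carotid artery as mean velocity x lumen area.

    Assumes a circular lumen and a velocity averaged over the cardiac cycle.
    """
    if mean_velocity_cm_s < 0 or diameter_cm <= 0:
        raise ValueError("velocity must be non-negative and diameter positive")
    area_cm2 = math.pi * (diameter_cm / 2) ** 2    # cross-sectional area of the vessel
    flow_ml_per_s = mean_velocity_cm_s * area_cm2  # 1 cm^3 equals 1 mL
    return flow_ml_per_s * 60.0                    # convert to mL/min


# Illustrative inputs only: 30 cm/s mean velocity through a 5 mm (0.5 cm) artery.
print(round(hemispheric_cbf_ml_per_min(30.0, 0.5), 1))  # 353.4
```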

“New data now strongly suggest that brain blood flow is very dynamic and depends directly on cyclic aortic pressures that interact with retrograde pressure pulses from foot impacts,” the researchers wrote. “There is a continuum of hemodynamic effects on human brain blood flow within pedaling, walking and running. Speculatively, these activities may optimize brain perfusion, function, and overall sense of wellbeing during exercise.”

“What is surprising is that it took so long for us to finally measure these obvious hydraulic effects on cerebral blood flow,” first author Ernest Greene explained. “There is an optimizing rhythm between brain blood flow and ambulating. Stride rates and their foot impacts are within the range of our normal heart rates (about 120/minute) when we are briskly moving along.”

Ernest R. Greene, PhD, a researcher at New Mexico Highlands University, presented “Acute Effects of Walking on Human Internal Carotid Blood Flow” in a poster session on Monday, April 24, at the McCormick Place Convention Center.


April 28th, 2017

VTT has developed artificial intelligence (AI)-based data analysis methods used in a smartphone application of Odum Ltd. The application can estimate its users’ health risks and, if necessary, guide them towards a healthier lifestyle.

“Based on an algorithm developed by VTT, we can predict the risk of illness-related absence from work among members of the working population over the next 12 months, with a sensitivity of up to 80 percent,” says VTT’s Mark van Gils, the scientific coordinator of the project.

“This is a good example of how we can find new information insights that are concretely valuable to both citizens and health care professionals by analysing large and diverse data masses.”
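Sensitivity is the share of actual cases that a model flags: true positives divided by all actual positives. A minimal sketch of the arithmetic behind a figure like "up to 80 percent sensitivity", using hypothetical counts rather than the project's data and a hypothetical helper name:

```python
# Hypothetical helper; the counts below are illustrative, not VTT/Odum results.
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Fraction of people who actually had an illness-related absence that the model flagged."""
    actual_positives = true_positives + false_negatives
    if actual_positives == 0:
        raise ValueError("sensitivity is undefined when there are no actual positives")
    return true_positives / actual_positives


# Hypothetical counts: the model flags 80 of the 100 people who later had an absence.
print(sensitivity(true_positives=80, false_negatives=20))  # 0.8
```

Sensitivity says nothing about false alarms among people who stay healthy; that is measured separately by specificity, which the quoted figure does not report.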

The application also guides individuals at risk to complete an electronic health exam and take the initiative in promoting their own health.

During the project, Odum and VTT examined health data collected from 18- to 64-year-olds over several years. The project received health data from a total of 120,000 working individuals.

“Health care costs are growing at an alarming pace and health problems are not being addressed early enough,” says Jukka Suovanen, CEO of Odum. “Our aim is to decrease illness-related absences by 30 percent among application users and add 10 healthy years to their lives.”

Photo: Odum

The most cost-efficient way to improve quality of life and decrease health care costs for both individuals and society is to promote the health of individuals and encourage them to take initiative in reducing their health risks.

VTT is one of the leading research and technology companies in Europe. It helps its clients develop new technologies and service concepts in the areas of digital health, wearable technologies and diagnostics, supporting their growth with top-level research and science-based results.


April 28th, 2017

According to new research, nomadic horse culture, famously associated with Genghis Khan and his Mongol hordes, can trace its roots back more than 3,000 years in the eastern Eurasian Steppes, in the territory of modern Mongolia.

The study, published online March 31 in the Journal of Archaeological Science, produces scientific estimates of the age of horse bones found at archaeological sites belonging to a culture known as the Deer Stone-Khirigsuur Complex. This culture, named for the beautifully carved standing stones (“deer stones”) and burial mounds (khirigsuurs) it built across the Mongolian Steppe, is linked with some of the oldest evidence for nomadic herding and domestic livestock use in eastern Eurasia. Around the edge of both deer stones and khirigsuurs are stone mounds containing ritual burials of domestic horses, sometimes numbering in the hundreds or thousands.

Domestic horses form the center of nomadic life in contemporary Mongolia.

Photo: P. Enkhtuvshin

A team of researchers from several academic institutions – including the Max Planck Institute for the Science of Human History, Yale University, University of Chicago, the American Center for Mongolian Studies, and the National Museum of Mongolia – used a scientific dating technique known as radiocarbon dating to estimate the spread of domestic horse ritual at deer stones and khirigsuurs.

When an organism dies, it stops taking in new carbon, and the radiocarbon (carbon-14, an unstable radioactive isotope of carbon present in living tissues) it contains continues to decay at a known rate without being replenished.

By measuring the remaining concentration of radiocarbon in organic materials, such as horse bone, archaeologists can estimate how many years ago an animal took its final step. Many previous archaeological projects in Mongolia produced radiocarbon date estimates from horse remains found at these Bronze Age archaeological sites. However, because each of these measurements must be calibrated to account for natural variation in atmospheric radiocarbon levels over time, individual dates carry large uncertainties, making them difficult to aggregate or interpret in groups.
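The decay described above follows an exponential law, so the fraction of radiocarbon remaining translates directly into an age. The sketch below computes an uncalibrated "conventional" radiocarbon age, which by convention uses the Libby half-life of 5,568 years; turning that figure into a calendar date requires a calibration curve, which is where the uncertainty mentioned above enters. The function name and the 0.5 input are illustrative.

```python
import math

LIBBY_HALF_LIFE_YEARS = 5568.0  # conventional half-life used when reporting radiocarbon ages
LIBBY_MEAN_LIFE_YEARS = LIBBY_HALF_LIFE_YEARS / math.log(2)  # roughly 8,033 years


# Illustrative helper; the result is uncalibrated and is not a calendar date.
def conventional_radiocarbon_age(fraction_remaining: float) -> float:
    """Uncalibrated age in radiocarbon years before present (BP).

    fraction_remaining is the sample's radiocarbon level relative to the modern standard.
    """
    if fraction_remaining <= 0:
        raise ValueError("fraction_remaining must be positive")
    # N = N0 * exp(-t / mean_life)  =>  t = -mean_life * ln(N / N0)
    return -LIBBY_MEAN_LIFE_YEARS * math.log(fraction_remaining)


print(round(conventional_radiocarbon_age(0.5)))  # 5568: half remains after one half-life
```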

“Deer stone” stela in Bayankhongor province, central Mongolia, surrounded by small stone mounds containing domestic horse remains.

Photo: William Taylor

By using a statistical technique known as Bayesian analysis – which combines probability with archaeological information to improve precision for groups of radiocarbon dates – the study authors were able to produce a high-precision chronology model for early domestic horse use in Mongolia. Lead author William Taylor, a postdoctoral research fellow at the Max Planck Institute for the Science of Human History, says that this model “enables us for the first time to link horse use with other important cultural developments in ancient Mongolia and eastern Eurasia, and evaluate the role of climate and environmental change in the local origins of horse riding.”
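Why grouping dates improves precision can be seen in the simplest Bayesian case: several independent measurements of the same event, each with normal (Gaussian) error, combined with a flat prior. The posterior is then normal, centred on the inverse-variance weighted mean, and narrower than any single measurement. This is a deliberately simplified illustration, not the study's chronology model: calibrated radiocarbon dates are not normally distributed, and modelling the spread of a practice across many sites is considerably more involved. The dates and the helper name below are hypothetical.

```python
import math


# Deliberately simplified: one event, normal errors, flat prior. Not the study's model.
def pooled_posterior(dates: list[float], sigmas: list[float]) -> tuple[float, float]:
    """Posterior mean and standard deviation of one unknown date.

    Assumes independent normal measurement errors and a flat prior, in which case
    the posterior is normal with an inverse-variance weighted mean.
    """
    if not dates or len(dates) != len(sigmas):
        raise ValueError("need one sigma per date and at least one date")
    weights = [1.0 / s ** 2 for s in sigmas]  # more precise dates count for more
    mean = sum(w * d for w, d in zip(weights, dates)) / sum(weights)
    sd = math.sqrt(1.0 / sum(weights))        # shrinks as measurements accumulate
    return mean, sd


# Three hypothetical dates for one event, in years BCE, each +/- 60 years (1 sigma).
mean, sd = pooled_posterior([1210.0, 1180.0, 1230.0], [60.0, 60.0, 60.0])
print(round(mean), round(sd, 1))  # 1207 34.6
```

Each hypothetical date alone carries an uncertainty of 60 years; pooled, the uncertainty drops to about 35 years, which is the kind of gain the study's far richer model exploits.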

According to the study, domestic horse ritual spread rapidly across the Mongolian Steppe around 1200 BCE, several hundred years before mounted horsemen are clearly documented in historical records. When considered alongside other evidence for horse transport in the Deer Stone-Khirigsuur Complex, these results suggest that Mongolia was an epicenter for early horse culture, and probably for early horseback riding.


Photo: William Taylor

The study has important consequences for our understanding of human responses to climate change. For example, one particularly influential hypothesis argues that horse riding and nomadic herding societies developed during the late second millennium BCE, as a response to drought and a worsening climate. Taylor and colleagues’ results indicate instead that early horsemanship took place during a wetter, more productive climate period – which may have given herders more room to experiment with horse breeding and transport.

In recent years, scholars have become increasingly aware of the role played by Inner Asian nomads in early waves of globalization. A key article by Dr. Michael Frachetti and colleagues, published this month in Nature, argues that nomadic movement patterns shaped the early trans-Eurasian trade networks that would eventually move goods, people, and information across the continent. The development of horsemanship by Mongolian cultures might have been one of the most influential changes in Eurasian prehistory, laying the groundwork for the economic and ecological exchange networks that defined the Old World for centuries to come.


April 12th, 2017

If you need a reason to become a dog lover, how about their ability to help protect kids from allergies and obesity?

A new University of Alberta study showed that babies from families with pets (70 per cent of which were dogs) had higher levels of two types of microbes associated with lower risks of allergic disease and obesity.

But don’t rush out to adopt a furry friend just yet.

“There’s definitely a critical window of time when gut immunity and microbes co-develop, and when disruptions to the process result in changes to gut immunity,” said Anita Kozyrskyj, a U of A pediatric epidemiologist and one of the world’s leading researchers on gut microbes, the microorganisms or bacteria that live in the digestive tracts of humans and animals.

Credit: Wikimedia Commons

The latest findings from Kozyrskyj and her team’s work on fecal samples collected from infants registered in the Canadian Healthy Infant Longitudinal Development study build on two decades of research that show children who grow up with dogs have lower rates of asthma.

The theory is that exposure to dirt and bacteria early in life (for example, in a dog’s fur and on its paws) can create early immunity, though researchers aren’t sure whether the effect comes from bacteria on the animals themselves or from human transfer by touching the pets, said Kozyrskyj.

Her team of 12, including study co-author and U of A postdoctoral fellow Hein Min Tun, takes the science one step closer to understanding the connection by showing that exposure to pets in the womb or up to three months after birth increases the abundance of two bacteria, Ruminococcus and Oscillospira, which have been linked with reduced childhood allergies and obesity, respectively.

“The abundance of these two bacteria were increased twofold when there was a pet in the house,” said Kozyrskyj, adding that pet exposure was shown to affect the gut microbiome indirectly (from dog to mother to unborn baby) during pregnancy, as well as during the first three months of the baby’s life. In other words, even if the dog had been given away for adoption just before the woman gave birth, the healthy microbiome exchange could still take place.

The study also showed that the immunity-boosting exchange occurred even in three birth scenarios known for reducing immunity, as shown in Kozyrskyj’s previous work: C-section versus vaginal delivery, antibiotics during birth and lack of breastfeeding.

What’s more, Kozyrskyj’s study suggested that having pets in the house reduced the likelihood of vaginal GBS (group B Strep) being transmitted during birth. GBS causes pneumonia in newborns, and its transmission is prevented by giving mothers antibiotics during delivery.

It’s far too early to predict how this finding will play out in the future, but Kozyrskyj doesn’t rule out the concept of a “dog in a pill” as a preventive tool for allergies and obesity.

“It’s not far-fetched that the pharmaceutical industry will try to create a supplement of these microbiomes, much like was done with probiotics,” she said.


March 21st, 2017

Jack the Ripper is the best-known name given to an unidentified serial killer generally believed to have been active in the largely impoverished areas in and around the Whitechapel district of London in 1888. The name “Jack the Ripper” originated in a letter, disseminated in the media, written by someone claiming to be the murderer. The letter is widely believed to have been a hoax and may have been written by journalists in an attempt to heighten interest in the story and increase their newspapers’ circulation. In the police case files and in contemporary newspaper accounts, the killer was also called “the Whitechapel Murderer” and “Leather Apron”.

Members of the University of Leicester team who undertook genealogical and demographic research in relation to the discovery of the mortal remains of King Richard III are now involved in a new project to identify the last known victim of Jack the Ripper – Mary Jane Kelly.

The researchers were commissioned by author Patricia Cornwell, renowned for her meticulous research, to examine the feasibility of finding Mary Jane Kelly’s exact burial location and to assess the likely condition and survival of her remains. This work was a precursor to possible DNA analysis to establish Kelly’s true identity, following contact with Wynne Weston-Davies, who believes that Mary Jane Kelly was actually his great aunt, Elizabeth Weston Davies.

Mary Jane Kelly

Credit: City of London police; Unknown photographer

Now, in a new report, ‘The Mary Jane Kelly Project’, the research team has assessed the likelihood of locating and identifying the last known victim of Britain’s most infamous serial killer, known as ‘Jack the Ripper’, who is thought to have killed at least five young women in the Whitechapel area of London between August and November 1888.

The research team consisted of Dr Turi King, Reader in Genetics and Archaeology at the University of Leicester and lead geneticist of the Richard III project; Mathew Morris, Field Officer for University of Leicester Archaeological Services (ULAS), who discovered the remains of Richard III; Professor Kevin Schürer, Professor in English Local History, who carried out the genealogical study of Richard III; and Carl Vivian, video producer for the Richard III project.

Credit: Carl Vivian, University of Leicester

Because any DNA analysis could be considered only once the remains had been unambiguously identified as those of Mary Jane Kelly, the University of Leicester team conducted a desk-based assessment of her burial location.

The team visited St Patrick’s Catholic Cemetery, Leytonstone, on 3 May 2016 in order to examine the burial area. Research was carried out in the cemetery’s burial records and a survey of marked graves in the area around Kelly’s modern grave marker was undertaken.

Patricia Cornwell is a crime writer known for her best-selling series of novels featuring the medical examiner Dr Kay Scarpetta; she has also written two books on Jack the Ripper.

Wynne Weston-Davies is a surgeon and author of The Real Mary Kelly, an investigation into the life and death of the Ripper’s final victim. In his book published in 2015, Weston-Davies claimed that the woman known to everyone as Mary Jane Kelly was living under a pseudonym and was in fact his great aunt Elizabeth Weston Davies.

Patricia Cornwell contacted Dr Turi King at the University of Leicester to assess the possibility of testing DNA from the remains of Mary Jane Kelly and comparing it with that of Weston-Davies.

Dr King said: “During initial discussions, two issues arose – it was widely reported in the press in 2015 that the Ministry of Justice had indicated that it would issue an exhumation licence to Wynne Weston-Davies – however in fact, they had only acknowledged that they would consider such an application if submitted.

“Secondly, to complete any exhumation application to the Ministry of Justice, a compelling case for the exhumation as well as detailed information on the location and state of the grave would be required, not only for the exhumation of Kelly’s remains, but also to determine if any other remains might be disturbed in the process.

Burial records of Mary Jeannette Kelly, 1888, St Patrick’s Catholic Cemetery.

Credit: Carl Vivian, University of Leicester

“However, the precise location of her grave is unknown and, not only that, it rapidly became clear that as such, the remains of a number of other individuals would have to be disturbed and that her remains are highly likely to have been dug through when the communal gravesite she was buried in was reused in the 1940s making accurate identification of any of her remains highly problematic if not impossible.”

Mathew Morris said: “There have been several modern markers in the cemetery which have commemorated Kelly since the 1980s and its location is likely to have little or no relevance to the real location of the grave. Problems surrounding the location of the grave stem from the fact that this area of the cemetery was reclaimed in 1947, with earlier grave positions being swept away to make way for new burials.”

“Based on numerous calculations, we concluded that in order to locate Mary Jane Kelly’s remains, one would most likely have to excavate an area encompassing potentially hundreds of graves containing a varying, and therefore unknown, number of individuals.”

Furthermore, current law relating to the exhumation of human remains in England and Wales states that consent from the next of kin for each set of remains would be required – and in cases where there are a large number of remains within a grave, it is unlikely licences will be granted.

Professor Kevin Schürer, said: “In order to make an application to the Ministry of Justice for a licence to exhume Mary Jane Kelly’s remains, the case for Kelly being Elizabeth Weston Davies needs to be compelling, not least because to test the theory by exhuming the remains will almost certainly involve disturbing the remains of other individuals buried in the vicinity.

Credit: The Illustrated Police News newspaper, October 1888.

“Relatives of these individuals would need to give consent, and would therefore have to be traced and their permission sought. Given the number of individuals whose remains would likely be disturbed, it would take months, possibly years, of genealogical research to trace them all.”

The team concluded that without a full review of the evidence cited by Weston-Davies, much of the case for Mary Jane Kelly and Elizabeth Weston Davies being the same individual appears to be circumstantial or conjectural.

However, the report also found that DNA testing of the remains of Mary Jane Kelly – should she be discovered – would allow for a comparison to be made between those remains and Weston-Davies in order to determine if the genetic data is consistent with them being related, and therefore likely to be Elizabeth Weston Davies.

Dr King said: “As information presently stands, a successful search for Kelly’s remains would require a herculean effort that would likely take years of research, would be prohibitively costly and would cause unwarranted disturbance to an unknown number of individuals buried in a cemetery that is still in daily use, with no guarantee of success.

Wanted poster issued by the police during the ‘autumn of terror’ of 1888.

Credit: Wikimedia Commons

“As such it is extremely unlikely that any application for an exhumation licence would be granted. The simple fact is, successfully naming someone in the historical record only happens in the most exceptional of cases.

“Most human remains found during excavations remain stubbornly, and forever, anonymous and this must also be the fate of Mary Jane Kelly.”


March 21st, 2017

Computer scientists in Italy are working on a new concept for remote and distributed storage of documents that could have all the benefits of cloud computing but without the security issues of putting one’s sensitive documents on a single remote server. They describe details in the International Journal of Electronic Security and Digital Forensics.

According to Rosario Culmone and Maria Concetta De Vivo of the University of Camerino, technological and regulatory aspects of cloud computing offer both opportunity and risk. Having one’s files hosted on remote servers displaces the hardware requirements and makes files accessible to remote users more efficiently. However, there are gaps in security and accessibility of files “in the cloud”.

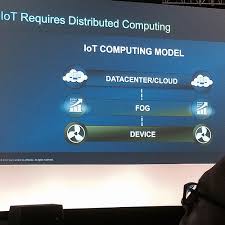

The team has now turned to another meteorological metaphor – fog – and has proposed an alternative to cloud storage that makes any given file entirely immaterial rather than locating it on a single server. They envisage a fog of files rather than a cloud.

Credit: Flickr

The files are distributed across a public or private network and so have no single location. In this way, there is no single server for hackers to target, and so, the team argues, only legitimate users can access them. The researchers point out that, “The trend towards outsourcing of services and data on cloud architectures has triggered a number of legal questions on how to manage jurisdiction and who has jurisdiction over data and services in the event of illegal actions.”

Fog computing would essentially circumvent these security and legal problems, putting files off-limits to hackers and beyond the reach of law enforcement, in particular rogue authorities.

“Our proposal is based on this idea of a service which renders information completely immaterial in the sense that for a given period of time there is no place on earth that contains information complete in its entirety,” the team says.

They explain that the solution is based on a distributed service, which they call “fog”, that uses standard networking protocols in an unconventional way, exploiting “virtual buffers” in internet routers to relocate data packets endlessly without a file ever residing in its entirety on a single computer server. It’s as if you were to send a letter with a tracking device but an incomplete address, so that it simply gets passed from post office to post office and is never delivered.
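The idea that a file exists only as pieces in transit can be illustrated with a toy model. This is not the Camerino team's protocol, which relies on router buffers and standard networking protocols; here, hypothetical "nodes" stand in for those buffers, the file is cut into numbered chunks, and every chunk hops to the next node on each tick. Because the chunks start out spread across the nodes and move in lockstep, no single node ever holds the complete file, yet anyone able to collect from every node at once can reassemble it. The toy offers no security by itself, and all names are hypothetical.

```python
from collections import defaultdict


# Toy model only: hypothetical nodes stand in for router buffers.
def split(data: bytes, chunk_size: int) -> list[tuple[int, bytes]]:
    """Cut data into numbered (sequence, payload) chunks."""
    return [(seq, data[off:off + chunk_size])
            for seq, off in enumerate(range(0, len(data), chunk_size))]


def circulate(chunks: list[tuple[int, bytes]], num_nodes: int, ticks: int):
    """Chunk i starts at node i % num_nodes and moves one node on every tick.

    Yields, after each tick, a mapping of node -> {sequence: payload} it currently holds.
    """
    payloads = dict(chunks)
    positions = {seq: seq % num_nodes for seq in payloads}
    for _ in range(ticks):
        positions = {seq: (node + 1) % num_nodes for seq, node in positions.items()}
        holdings = defaultdict(dict)
        for seq, node in positions.items():
            holdings[node][seq] = payloads[seq]
        yield holdings


def reassemble(holdings) -> bytes:
    """Gather the pieces currently held by every node and join them in sequence order."""
    merged = {}
    for pieces in holdings.values():
        merged.update(pieces)
    return b"".join(merged[seq] for seq in sorted(merged))


message = b"sensitive document contents"
chunks = split(message, chunk_size=4)  # 7 chunks
snapshots = list(circulate(chunks, num_nodes=3, ticks=10))

# At no tick does any single node hold every chunk of the file.
assert all(len(pieces) < len(chunks) for h in snapshots for pieces in h.values())
print(reassemble(snapshots[-1]) == message)  # True
```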


March 21st, 2017

The United States is the 14th happiest country in the world and Canada is the seventh happiest country in the world, according to the 2017 World Happiness Report edited by CIFAR Co-Director John F. Helliwell.

Although the top ten countries remain the same as last year, there has been some shuffling of places in the report released today. Norway moved up from fourth place to the top of the ranking, overtaking Denmark, and was followed by Denmark, Iceland, Switzerland and Finland. Canada dropped from sixth to seventh place, behind the Netherlands.

This is the fifth annual World Happiness Report. It was edited by Helliwell of CIFAR (Canadian Institute for Advanced Research) and the University of British Columbia; Richard Layard, Director of the Well-Being Programme at the London School of Economics; and Jeffrey Sachs, Director of the Earth Institute and the Sustainable Development Solutions Network.

Ranking of Happiness 2014-2016

Credit: World Happiness Report

Canada was also highlighted for its success with multiculturalism and integration. It is sometimes suggested that the degree of ethnic diversity is the single most powerful explanation of high or low social trust; however, Canada bucks this trend. While U.S. communities with higher ethnic diversity had lower measures of social trust, this finding did not hold true in Canada. Canadian programs that promote multiculturalism and inter-ethnic understanding helped build social trust and decrease economic and social segregation, the authors note.

“Canada has demonstrated a considerable success with multiculturalism; the United States has not tried very hard,” writes Sachs. The next report for 2018 will focus on the issue of migration.

The World Happiness Report looks at trends in the data recording how highly people evaluate their lives on a scale running from 0 to 10. The rankings, which are based on surveys in 155 countries covering the three years 2014-2016, reveal an average score of 5.3 (out of 10). Six key variables explain three-quarters of the variation in annual national average scores over time and among countries: real GDP per capita, healthy life expectancy, having someone to count on, perceived freedom to make life choices, freedom from corruption, and generosity.

The top ten countries rank highly on all six of these factors:

1. Norway (7.537)

2. Denmark (7.522)

3. Iceland (7.504)

4. Switzerland (7.494)

5. Finland (7.469)

6. Netherlands (7.377)

7. Canada (7.316)

8. New Zealand (7.314)

9. Australia (7.284)

10. Sweden (7.284)

Full report: http://worldhappiness.report/

According to the report, the central paradox of the modern American economy, as identified by Richard Easterlin (1964, 2016), is this: income per person has roughly tripled since 1960, but measured happiness has not risen. The situation has gotten worse in recent years: per capita GDP is still rising, but happiness is now actually falling.

The predominant political discourse in the United States is aimed at raising economic growth, with the goal of restoring the American Dream and the happiness that is supposed to accompany it. But the data show conclusively that this is the wrong approach. The United States can and should raise happiness by addressing America’s multi-faceted social crisis (rising inequality, corruption, isolation, and distrust) rather than focusing exclusively or even mainly on economic growth, especially since the concrete proposals along these lines would exacerbate rather than ameliorate the deepening social crisis.

Figure 7.1 shows the U.S. score on the Cantril ladder over the last ten years. If we compare the two-year average for 2015/6 with the two-year average for 2006/7, we can see that the Cantril score declined by 0.51. While the US ranked third among the 23 OECD countries surveyed in 2007, it had fallen to 19th of the 34 OECD countries surveyed in 2016.

This American social crisis is widely noted, but it has not translated into public policy. Almost all of the policy discourse in Washington DC centers on naïve attempts to raise the economic growth rate, as if a higher growth rate would somehow heal the deepening divisions and angst in American society. This kind of growth-only agenda is doubly wrong-headed. First, most of the pseudo-elixirs for growth, especially the Republican Party’s beloved nostrum of endless tax cuts and voodoo economics, will only exacerbate America’s social inequalities and feed the distrust that is already tearing society apart. Second, a forthright attack on the real sources of social crisis would have a much larger and more rapid beneficial effect on U.S. happiness.

According to the report, “To escape this social quagmire, America’s happiness agenda should center on rebuilding social capital. This will require a keen focus on the five main factors that have contributed to falling social trust and confidence in government. The first priority should be campaign finance reform, especially to undo the terrible damage caused by the Citizens United decision. The second should be a set of policies aiming at reducing income and wealth inequality. This would include an expanded social safety net, wealth taxes, and greater public financing of health and education. The third should be to improve the social relations between the native-born and immigrant populations. Canada has demonstrated a considerable success with multiculturalism; the United States has not tried very hard. The fourth is to acknowledge and move past the fear created by 9/11 and its memory. The US remains traumatized to this day; Trump’s ban on travel to the United States from certain Muslim-majority countries is a continuing manifestation of the exaggerated and irrational fears that grip the nation. The fifth priority, I believe, should be on improved educational quality, access, and attainment. America has lost the edge in educating its citizens for the 21st century; that fact alone ensures a social crisis that will continue to threaten well-being until the commitment to quality education for all is once again a central tenet of American society.”


March 16th, 2017

Fraunhofer solutions integrate existing machines into modern production systems such as MES and SCADA. PLUGandWORK™ automatically generates a communication server for data exchange with other machines and IT systems. This means that medium-sized companies, too, can take the leap into the age of Industrie 4.0. The technology is market-ready and is currently being used by several pilot customers. The researchers will present a demo at the Hanover Trade Fair (Hall 2, Booth C22, April 24-28).

PLUGandWORK™ helps you connect your legacy plant-floor equipment to all the major Industrie 4.0 and Industrial Internet of Things players, such as IBM Watson, GE Predix, Rockwell Automation, PTC ThingWorx and others. Today, the consistent implementation of Industrie 4.0 still often fails because older devices that lack the necessary interfaces are still in use. In the worst case, these machines then work in isolation in the production hall.

The PLUGandWORK Cube by Fraunhofer integrates existing machines into modern production systems. This means that medium-sized companies are also taking the leap into the age of Industrie 4.0.

Credit: © Photo Fraunhofer IOSB

The Fraunhofer Institute for Optronics, System Technologies and Image Exploitation (IOSB) in Karlsruhe has developed a solution to this problem. PLUGandWORK™ ensures that existing machines and systems can be integrated into the production system. If you don’t already have gateway systems on your plant floor, our inconspicuous cube houses a standard industrial PC running Windows. Your machine sends all information about itself and its capabilities to the cube via a network cable. The machine is then integrated into the production system, where it can communicate with other systems and is accessible via the network.

“In principle, this is very similar to the installation of a USB device, such as a printer, on your office PC,” explains Project Manager Dr. Olaf Sauer. “You simply plug your device in, the device describes itself to the computer, the computer goes on line if required to find the right driver, and then the computer can fully interact with and pass information back and forth to control the device to do things (like print, copy files…)”.

In the first step, our solution creates the machine’s self-description in AutomationML (Automation Markup Language), an XML-based data format. An assistance tool with an intuitive graphical user interface helps structure the self-description. In the second step, the cube or gateway PC uses this model to automatically generate the communication server that exchanges information with other machines and the superordinate production control. The tools also register changes to the machine, such as an updated configuration: a change manager records the new configuration and forwards it to the communication server.
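PLUGandWORK's internals are not described here, so the following is only a sketch of the general idea: deriving the variables a communication server would expose from a machine self-description, and detecting configuration changes the way a change manager might. The XML is a simplified fragment in the style of CAEX, the format on which AutomationML is built; it is not a complete or validated AutomationML file, and the machine, its attributes and the function names are hypothetical. A real system would publish the resulting variables through an OPC UA server rather than a Python dictionary.

```python
import xml.etree.ElementTree as ET

# Simplified, CAEX-style self-description of a hypothetical machine (not validated AutomationML).
SELF_DESCRIPTION = """
<CAEXFile FileName="press07.aml">
  <InstanceHierarchy Name="Plant">
    <InternalElement Name="Press07">
      <Attribute Name="SpindleSpeed" AttributeDataType="xs:double"><Value>1200.0</Value></Attribute>
      <Attribute Name="Running" AttributeDataType="xs:boolean"><Value>true</Value></Attribute>
      <Attribute Name="PartCount" AttributeDataType="xs:int"><Value>0</Value></Attribute>
    </InternalElement>
  </InstanceHierarchy>
</CAEXFile>
"""

# Convert XML Schema type names to Python values; unknown types stay strings.
CASTS = {
    "xs:double": float,
    "xs:int": int,
    "xs:boolean": lambda text: text.strip().lower() == "true",
}


def build_address_space(aml_text: str) -> dict[str, dict[str, object]]:
    """Map each InternalElement to the typed variables a communication server could expose."""
    root = ET.fromstring(aml_text.strip())
    space = {}
    for element in root.iter("InternalElement"):
        variables = {}
        for attr in element.findall("Attribute"):
            cast = CASTS.get(attr.get("AttributeDataType"), str)
            variables[attr.get("Name")] = cast(attr.findtext("Value", default=""))
        space[element.get("Name")] = variables
    return space


def changed_variables(old: dict, new: dict) -> list[tuple[str, str]]:
    """Toy change manager: list (element, variable) pairs added, removed or changed."""
    changes = []
    for element in old.keys() | new.keys():
        before, after = old.get(element, {}), new.get(element, {})
        for name in before.keys() | after.keys():
            if before.get(name) != after.get(name):
                changes.append((element, name))
    return sorted(changes)


space = build_address_space(SELF_DESCRIPTION)
print(space)  # {'Press07': {'SpindleSpeed': 1200.0, 'Running': True, 'PartCount': 0}}

updated = build_address_space(SELF_DESCRIPTION.replace("1200.0", "1500.0"))
print(changed_variables(space, updated))  # [('Press07', 'SpindleSpeed')]
```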

The Fraunhofer PLUGandWORK™ solution eliminates the complicated configuration and setup needed when a system is integrated into production manually. Manual integration may take several days or even weeks; with PLUGandWORK™ it is often finished within a few hours.

Maximum transparency, compatibility and data security

Using a cube or gateway PC not only frees individual machines from their isolation. It offers a further, decisive advantage: “Data from the connected machines can also be stored on the PLUGandWORK™ Cube”, explains Sauer. “The employees in plant management always see what is happening on the machine and immediately recognize any problems occurring. In this way, transparency prevails in the production hall.”

The server in the cube uses the OPC UA (Open Platform Communications Unified Architecture) communication protocol, an internationally accepted standard that ensures the greatest possible compatibility in machine-to-machine communication. Data security is also ensured: all data is transmitted in encrypted form, and only authorized devices can connect to the system. Industrial partners such as Wibu Systems AG from Karlsruhe contribute their expertise in security technology.

Depending on the complexity of the machine data and parameters, up to twenty machines can be connected to a single cube. The retrofit technology is by no means designed only for large manufacturers, such as those in the automotive sector. “Even medium-sized companies with only twenty machines can integrate them into the production control,” says Fraunhofer expert Sauer. System integrators that build complete systems and deliver them to customers ready for use also benefit from the cube.
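The capacity figure above works as a simple sizing rule: the number of cubes a plant needs is a ceiling division of its machine count by the per-cube capacity. The sketch below assumes a hypothetical helper named `cubes_needed`. It treats twenty machines per cube as a best-case upper bound, because the article says actual capacity depends on the complexity of the machine data.

```python
MAX_MACHINES_PER_CUBE = 20  # best-case figure from the article; real capacity depends on data complexity


def cubes_needed(machine_count: int, machines_per_cube: int = MAX_MACHINES_PER_CUBE) -> int:
    """Hypothetical helper: minimum number of cubes needed for a given number of machines."""
    if machine_count < 0:
        raise ValueError("machine_count must be non-negative")
    if machines_per_cube <= 0:
        raise ValueError("machines_per_cube must be positive")
    # Integer ceiling division avoids floating-point rounding issues.
    return -(-machine_count // machines_per_cube)


if __name__ == "__main__":
    print(cubes_needed(20))                       # 1 cube at best-case capacity
    print(cubes_needed(20, machines_per_cube=8))  # 3 cubes if complex data lowers capacity to 8
```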

Cooperation with industry standards

For many years, the IOSB has been working on digital technologies that make companies fit for Industrie 4.0. The experts develop the necessary standardized interfaces, software modules and data transmission protocols. In addition, the Fraunhofer experts, together with national and international partners, actively participate in the further development of AutomationML and are involved in various standardization committees.

It will surely take a few years until the vision of Industrie 4.0 is fully implemented and manufacturers have brought their entire machine park up to date. Until then, the PLUGandWORK™ Cube ensures that even older machines are fit for the digital era.

Comments Off on PlugandWork Connects Existing Machines And Systems To Industrial Internet-Of-Things

February 16th, 2017

Last winter’s El Niño may have felt weak to residents of Southern California, but it was one of the most powerful weather events of the last 145 years, scientists say.

If severe El Niño events become more common in the future, as some studies suggest, the California coast — home to more than 25 million people — may become increasingly vulnerable to coastal hazards, independently of projected sea level rise.

A new study conducted by scientists at the University of California Santa Barbara (UCSB) and colleagues at several other institutions found that during the 2015-2016 El Niño, winter beach erosion on the Pacific coast was 76 percent above normal. Most beaches in California were eroded beyond historical extremes, the study found.

Big waves affect beach access and ocean bluffs at the Isla Vista, California study site.

Credit: David Hubbard

The results appear this week in the journal Nature Communications.

“Infrequent and extreme events can be extremely damaging to coastal marine habitats and communities,” said David Garrison, a program director in the National Science Foundation’s Division of Ocean Sciences, which funded the research. “While this paper stresses the effect of waves and sediment transport on beach structure, organisms living on and in the sediment will also be profoundly affected.”

Added David Hubbard, a UCSB marine ecologist and paper co-author: “This study illustrates the value of broad regional collaboration using long-term data for understanding coastal ecosystem responses to environmental change. We really need this scale of data on coastal processes to understand what’s going on with the ecology of the coast.”

While winter beach erosion — the removal and loss of sand from the beach — is a normal seasonal process, during El Niño events the extent of erosion can be more severe.

Exposed bedrock platform at beach access staircase, where large waves swept away the sand.

Credit: David Hubbard

The research team assessed seasonal beach behavior for 29 beaches along more than 1,200 miles of the Pacific coast. They made 3-D surface maps and cross-shore profiles using aerial LIDAR (Light Detection and Ranging), GPS topographic surveys and direct measurements of sand quantities. They then combined that information with wave and water-level data from each beach between 1997 and 2016.

“Wave conditions and coastal response were unprecedented for many locations during the winter of 2015-16,” said Patrick Barnard, lead author of the paper and a geologist with the U.S. Geological Survey. “The winter wave energy equaled or exceeded measured historical maximums along the West Coast, corresponding to extreme beach erosion across the region.”

The 2015-2016 El Niño was one of the strongest ever recorded.

However, the most recent El Niño was largely considered a dud from a water resources perspective due to unusually low rainfall, particularly in Southern California, which received 70 percent less rain than during the last two big El Niño events.

“The waves that attacked our coast, generated from storms across the North Pacific, were exceptional and among the largest ever recorded,” Hubbard said. “But the lack of rainfall means that coastal rivers produced very little sand to fill in what was lost from the beaches, so recovery has been slow.”

El Niño waves breach the foredune in central California in January 2016.

Credit: David Hubbard

Rivers are the primary suppliers of sand to California beaches, despite long-term reductions in that supply during the 20th century due to extensive dam construction. California’s extreme drought lowered river flows, which in turn meant that less sand was carried to the coast to help sustain beaches.

While most beaches in the survey eroded beyond historical extremes, some fared better than others. The condition of a beach before the winter of 2015-2016 strongly influenced the severity of its erosion and its ability to recover afterward through natural replenishment.

Mild wave activity in the Pacific Northwest as well as artificial augmentation of beaches (adding sand) in Southern California prior to the winter of 2015-2016 prevented some areas from eroding beyond historical extremes.

Eroding foredune scarp during a large El Niño wave event in January 2016 in central California.

Credit: David Hubbard

“We need to understand these challenges, which include rising sea level and the fact that most of the problems occur during these peak El Niño events,” Hubbard said. “Then we need to restore and manage our coasts in ways that will enable us to deal with these events and conserve beach ecosystems.”

The U.S. Army Corps of Engineers, the California Department of Parks and Recreation, the Division of Boating and Waterways, the U.S. Geological Survey and the Northwest Association of Networked Ocean Observing Systems also funded the research.

Comments Off on El Niño One Of The Most Powerful Weather Events Of the Last 145 Years

January 28th, 2017

The more teenagers delay smoking marijuana until they’re older, the better it is for their brains, but there may be little ill effect if they start after age 17, says a new Université de Montréal study.

By age 20, adolescents who smoke pot as early as 14 do worse on some cognitive tests and drop out of school at a higher rate than non-smokers, confirms the study, published Dec. 29 in Development and Psychopathology, a Cambridge University Press journal.

“Overall, these results suggest that, in addition to academic failure, fundamental life skills necessary for problem-solving and daily adaptation […] may be affected by early cannabis exposure,” the study says.

However, the cognitive declines associated with cannabis do not seem to be global or widespread, cautioned the study’s lead author, Natalie Castellanos-Ryan, an assistant professor at UdeM’s School of Psychoeducation.

Credit: Université de Montréal

Her study found links between cannabis use and brain impairment only in the areas of verbal IQ and specific cognitive abilities related to frontal parts of the brain, particularly those that require learning by trial-and-error.

In addition, if teenagers hold off until age 17 before smoking their first joint, those impairments are no longer discernible. “We found that adolescents who started using cannabis at 17 or older performed equally well as adolescents who did not use cannabis,” said Castellanos-Ryan.

In the study, she and her team of researchers at UdeM and CHU Saint-Justine, the university’s affiliated children’s hospital, looked at 294 teenagers who were part of the Montreal Longitudinal and Experimental Study, a well-known cohort of 1,037 white French-speaking males from some of the city’s poorer neighbourhoods. The teenagers completed a variety of cognitive tests at ages 13, 14 and 20 and filled out a questionnaire once a year from ages 13 to 17 and again at 20, between 1991 and 1998.

Just under half – 43 per cent – reported smoking pot at some point during that time, most of them only a few times a year. At 20 years of age, 51 per cent said they still used the drug. In general, those who started early already had poor short-term memory and poor working memory (that is, the ability to store information such as a phone number long enough to use it, or to follow an instruction shortly after it was given). Conversely, the early users also had good verbal skills and vocabulary; Castellanos-Ryan suggested one possible explanation: “It takes quite a lot of skills for a young adolescent to get hold of drugs; they’re not easy to access.”

She and her team found smoking cannabis during adolescence was only linked to later difficulties with verbal abilities and cognitive abilities of learning by trial-and-error, and those abilities declined faster in teens who started smoking early than teens who started smoking later. The early adopters also tended to drop out of school sooner, which helped explain the decrease in their verbal abilities. “The results of this study suggest that the effects of cannabis use on verbal intelligence are explained not by neurotoxic effects on the brain, but rather by a possible social mechanism: Adolescents who use cannabis are less likely to attend school and graduate, which may then have an impact on the opportunities to further develop verbal intelligence,” said Castellanos-Ryan.

Besides filling out questionnaires about their use of drugs and alcohol over the previous year, the boys participated in a number of tests to measure their cognitive development. For example, they were given words and numbers to remember and repeat in various configurations, were asked to learn new associations between various images, played a card game to gauge their response to winning or losing money, and, in a test of their vocabulary, had to name objects and describe similarities between words. In general, those who performed poorly in language tests and tests that required learning by trial-and-error, either to make associations between images or to detect a shift in the ratio of gains to losses during the card game, reported smoking pot in their young teens.

What should be the takeaway from the study?

“I think that we should focus on delaying onset (of marijuana use),” said Castellanos-Ryan, who next intends to study whether these results can be replicated in other samples of adolescents and to see if cannabis use is associated with other problems, such as drug abuse, later in life. Prevention is especially important now, she added, since marijuana is much more potent than it was in the 1990s, and because teenagers today have a more favourable attitude to its use, viewing marijuana as much less harmful than other recreational drugs.

“But it is important to stick to the evidence we have and not exaggerate the negatives of cannabis,” she cautioned. “We can’t tell children, ‘If you smoke cannabis you’re going to damage your brain massively and ruin your life.’ We have to be realistic and say, ‘We are finding evidence that there are some negative effects related to cannabis use, especially if you start early, and so, if you can hold off as long as you can – at least until you’re 17 – then it’s less likely there’ll be an impact on your brain.'”

Comments Off on Delaying Pot Smoking To Age 17 Is Better For Teen’s Brains, New Study Suggest

January 24th, 2017

U.S. chain restaurants participating in a National Restaurant Association initiative to improve the nutritional quality of their children’s menus have made no significant changes compared with restaurants not participating in the program, according to a new study led by Harvard T.H. Chan School of Public Health. Among both groups, the researchers found no meaningful improvements in the amount of calories, saturated fat, or sodium in kids’ menu offerings during the first three years following the launch of the Kids LiveWell initiative in 2011.

They also found that sugary drinks still made up 80% of children’s beverage options, despite individual restaurant pledges to reduce their prevalence.

Credit: iStock

The study was published online January 11, 2017, in the American Journal of Preventive Medicine.

“Although some healthier options were available in select restaurants, there is no evidence that these voluntary pledges have had an industry-wide impact,” said lead author Alyssa Moran, a doctoral student in the Department of Nutrition at Harvard Chan School. “As public health practitioners, we need to do a better job of engaging restaurants in offering and promoting healthy meals to kids.”

In 2011 and 2012, more than one in three children and adolescents consumed fast food every day, according to the study. For kids, eating more restaurant food is associated with higher daily calorie intake from added sugar and saturated fats.

This is the first study to look at trends in the nutrient content of kids’ meals among national restaurant chains at a time when many were making voluntary pledges to improve quality. By 2015, more than 150 chains with 42,000 locations in the U.S. were participating in Kids LiveWell—which requires that at least one meal and one other item on kids’ menus meet nutritional guidelines.

Using data obtained from the nutrition census MenuStat, the researchers examined trends in the nutrient content of 4,016 beverages, entrees, side dishes, and desserts offered on children’s menus in 45 of the nation’s top 100 fast food, fast casual, and full-service restaurant chains between 2012 and 2015. Out of the sample, 15 restaurants were Kids LiveWell participants.

The researchers found that while some restaurants were offering healthier kids’ menu options, the average kids’ entrée still far exceeded recommendations for sodium and saturated fat. Kids’ desserts contained nearly as many calories and almost twice the amount of saturated fat as an entrée. And even when soda had been removed from children’s menus, it was replaced with other sugary beverages such as flavored milks and sweetened teas.

The authors would like to see the restaurant industry adhere to voluntary pledges and consider working with government agencies, researchers, and public health practitioners to apply evidence-based nutrition guidelines across a broader range of kids’ menu items. They also suggest tracking restaurant commitments to determine whether restaurants currently participating in Kids LiveWell improve the nutritional quality of their offerings over time.

Senior author of the paper was Christina Roberto of the University of Pennsylvania’s Perelman School of Medicine.

“Trends in Nutrient Content of Children’s Menu Items in U.S. Chain Restaurants,” Alyssa J. Moran, Jason P. Block, Simo G. Goshev, Sara N. Bleich, Christina A. Roberto, American Journal of Preventive Medicine, online January 11, 2017, doi: 10.1016/j.amepre.2016.11.007

Comments Off on Children’s Menus Present Issues Despite Industry Pledges

January 24th, 2017

Scientists at BAE Systems believe that, within the next fifty years, battlefield commanders could deploy a new type of directed energy laser and lens system, called a Laser Developed Atmospheric Lens, which would let commanders observe adversaries’ activities over much greater distances than existing sensors allow.

At the same time, the lens could be used as a form of ‘deflector shield’ to protect friendly aircraft, ships, land vehicles and troops from incoming attacks by high power laser weapons that could also become a reality in the same time period.

Credit: BAE

The Laser Developed Atmospheric Lens (LDAL) concept, developed by technologists at the Company’s military aircraft facility in Warton, Lancashire, has been evaluated by the Science and Technology Facilities Council (STFC) Rutherford Appleton Laboratory and specialist optical sensors company LumOptica, and is based on known science. It works by simulating naturally occurring phenomena, temporarily and reversibly changing the Earth’s atmosphere into lens-like structures that magnify or change the path of electromagnetic waves such as light and radio signals.

LDAL is a complex and innovative concept that copies two existing effects in nature: the reflective properties of the ionosphere and desert mirages. The ionosphere is a naturally occurring layer of the Earth’s atmosphere at very high altitude that can reflect radio waves; this is why listeners can tune in to radio stations many thousands of miles away. The radio signals bounce off the ionosphere, allowing them to travel very long distances through the air and over the Earth’s surface. The desert mirage creates the illusion of a distant lake in the hot desert. This is because light from the blue sky is ‘bent’, or refracted, by the hot air near the surface into the line of sight of the person looking into the distance.

Future concepts from BAE Systems

Credit: BAE

LDAL simulates both of these effects by using a high-power pulsed laser system and exploiting a physical phenomenon called the ‘Kerr effect’ to temporarily ionise or heat a small region of atmosphere in a structured way. Mirrors, glass lenses, and structures like Fresnel zone plates could all be replicated using the atmosphere, allowing the physics of refraction, reflection, and diffraction to be exploited.
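The article does not describe the physics in equation form. As an illustration only, the standard textbook relation for the optical Kerr effect (not a description of BAE’s actual LDAL design) says that the refractive index of a medium such as air rises with light intensity:

```latex
n(I) = n_0 + n_2 \, I
```

Here $n_0$ is the ordinary (linear) refractive index, $n_2$ is the nonlinear Kerr coefficient (positive for air), and $I$ is the local light intensity. A laser beam is usually most intense on its axis, so the index is highest there and the air acts like a weak converging lens (self-focusing). At high enough power this can concentrate the light until the air ionises, which is one plausible reading of the ionisation step mentioned above.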

“Working with some of the best scientific minds in the UK, we’re able to incorporate emerging and disruptive technologies and evolve the landscape of potential military technologies in ways that, five or ten years ago, many would never have dreamed possible,” said Professor Nick Colosimo, BAE Systems’ Futurist and Technologist.

Professor Bryan Edwards, Leader of STFC’s Defence, Security and Resilience Futures Programme said of the work: “For this evaluation project, STFC’s Central Laser Facility team worked closely with colleagues at BAE Systems and by harnessing our collective expertise and capabilities we have been able to identify new ways in which cutting edge technology, and our understanding of fundamental physical processes and phenomena, has the potential to contribute to enhancing the safety and security of the UK.”

Craig Stacey, CEO at LumOptica added: “This is a tremendously exciting time in laser physics. Emerging technologies will allow us to enter new scientific territories and explore ever new applications. We are delighted to be working with BAE Systems on the application of such game-changing technologies, evaluating concepts which are approaching the limits of what is physically possible and what might be achieved in the future.”

BAE Systems has developed some of the world’s most innovative technologies and invests in research and development to generate future products and capabilities. The Company has a portfolio of patents and patent applications covering approximately 2,000 inventions internationally. Earlier this year, the Company unveiled two other futuristic technology concepts: that small Unmanned Air Vehicles (UAVs), bespoke to specific military operations, could be ‘grown’ in large-scale labs through chemistry, and that the armed forces of the future could use rapid-response aircraft with engines capable of propelling them to hypersonic speeds to meet rapidly emerging threats.

Comments Off on Future Battlefields With Deflector Shields And Laser Weapons

January 20th, 2017

Fraunhofer researchers have developed a process for producing a camera just two millimeters thick. Like the eyes of insects, its lens is partitioned into 135 tiny facets. Following nature’s model, the researchers have named their mini-camera concept facetVISION. They presented the new lens at the CES trade fair in Las Vegas.

The mini-camera from the Fraunhofer IOF has a thickness of only two millimeters at a resolution of one megapixel.

The cameras are therefore suitable for use in the automotive and printing industries and in medical engineering.

Thanks to their low thickness, their basic principle may change the design of future smartphones.

The facetVISION camera can be industrially manufactured in mass production. Fraunhofer researchers have shown this in trial runs.

Credit: © Photo Fraunhofer IOF

Like insect eyes, the Fraunhofer technology is composed of many small, uniform lenses positioned close together, like the pieces of a mosaic. Each facet captures only a small section of its surroundings, and the insect’s brain combines the many individual facet images into a whole picture. In the new facetVISION camera, micro-lenses and aperture arrays take over these functions. Because each lens is offset relative to its associated aperture, each optical channel has its own viewing direction and images a different area of the field of view.
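Simple geometry shows how the lens-aperture offset sets each channel’s viewing direction. Take a minimal model of a single row of channels; this is an assumption, since the article gives no design parameters. A ray passes through the centre of a lens and reaches an aperture displaced by d from the lens axis, at focal distance f behind the lens. That ray comes from an angle of arctan(d/f) on the opposite side. If each aperture is shifted slightly further than its neighbour, adjacent channels look in slightly different directions. The function name and the numbers below are hypothetical.

```python
import math


def channel_viewing_angles(n_channels: int, offset_step_um: float, focal_length_um: float) -> list[float]:
    """Viewing direction (degrees) of each channel in one row of a compound-eye optic.

    Hypothetical model: channel i's aperture is displaced from its lens axis by
    (i - centre) * offset_step_um. A ray through the lens centre that reaches
    the aperture arrives from the opposite side, so (centre - i) is used.
    """
    if n_channels < 1 or focal_length_um <= 0:
        raise ValueError("need at least one channel and a positive focal length")
    centre = (n_channels - 1) / 2
    angles = []
    for i in range(n_channels):
        reversed_offset = (centre - i) * offset_step_um
        angles.append(math.degrees(math.atan2(reversed_offset, focal_length_um)))
    return angles


if __name__ == "__main__":
    # Made-up values: 5 channels, 2 um extra offset per channel, 100 um focal length.
    for i, angle in enumerate(channel_viewing_angles(5, 2.0, 100.0)):
        print(f"channel {i}: {angle:+.2f} deg")
```

The real camera arranges its 135 channels in two dimensions, and its optical design may differ. This one-dimensional sketch only shows why a graded offset gives every channel its own slice of the scene.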

“With a camera thickness of only two millimeters, this technology, taken from nature’s model, will enable us to achieve a resolution of up to four megapixels”, says Andreas Brückner, project manager at the Fraunhofer Institute for Applied Optics and Precision Engineering IOF in Jena.

“This is clearly a higher resolution compared to cameras in industrial applications – for example in robot technology or automobile production.” This technology was developed together with scientists from the Fraunhofer Institute for Integrated Circuits IIS in Erlangen and was funded by the Fraunhofer-Zukunftsstiftung.

Economical production on wafers

The Fraunhofer researchers’ micro-lenses can be manufactured economically in large quantities, using processes similar to those of the semiconductor industry. Computer chips are mass-produced on wafers (large, thin semiconductor slices) and then separated by sawing. In the same way, thousands of facetVISION camera lenses can be manufactured in parallel at the Fraunhofer IOF. “The cameras are suitable for medical engineering, for instance for optical sensors that will be able to examine blood quickly and easily”, says Brückner.

The first prototype of the technology transfers the images from the camera to the smartphone by Bluetooth via a transmission box.

Credit: © Photo Fraunhofer IOF

“In the printing industry, however, such cameras are needed to check the print image at high resolution while the machine is running.” Further applications include cameras that help cars park and cameras in industrial robots that prevent collisions between humans and machines.

An eye on smartphones

Compound eye technology is also suitable for integration into smartphones: today, their mini camera lens is normally five millimeters thick in order to show a satisfactorily sharp image of the surroundings. The manufacturers of ultra-thin smartphones face the following challenge: since the camera is thicker than the smartphone housing, it sticks out of the smartphone’s back cover. The manufacturers call this the “camera-bump” – the unaesthetic “camera bulge”.

The camera lenses for smartphones, however, are not made on wafers but from injection-molded plastic. In this process, hot liquid plastic is injected into a mold, much as batter is poured into a waffle iron. Robots then assemble the finished lenses into the smartphone camera. “We would like to transfer the insect eye principle to this production technology”, says Brückner. “For example, it will be possible to place several smaller lenses next to each other in the smartphone camera. The combination of the facet effect and proven injection-molded lenses will enable resolutions of more than 10 megapixels in a camera only around three and a half millimeters thick.”

Comments Off on Mini-Camera Features Lens Similar To Insect Eyes

December 20th, 2016

When you find yourself in an eerie place or the beat drops just right during a favorite song, the chills start multiplying. You know the feeling. It is a shiver that seems to come from within and makes your hairs stand on end.

William Griffith, PhD, professor and head of the Department of Neuroscience and Experimental Therapeutics at the Texas A&M College of Medicine, said goose bumps are a normal biological response to powerful emotions from dramatic or stressful situations linked to fight-or-flight. “Fight-or-flight is in response to something, typically being scared, shocked or encountering a predator, that prepares us to fight or flee,” Griffith said. “It’s part of an adrenaline reflex.” Strong emotional reactions to music or climactic scenes can also trigger this adrenaline release.

Fight-or-flight is an autonomic nervous system response, meaning it is automatic and not consciously controlled. The autonomic nervous system, which is considered part of the peripheral nervous system because it lies outside the brain and spinal cord, also regulates other involuntary functions like heart rate, breathing and digestion, explained Griffith.

Credit: YouTube

When a risk is perceived, the autonomic nervous system begins its reaction in the amygdala, a part of the brain involved in processing emotions and some decision-making. This triggers the nearby hypothalamus, which links the nervous system to the hormone-producing endocrine system via the pituitary gland in the brain. The pituitary secretes adrenocorticotropic hormone (ACTH), while the adrenal glands, situated above the kidneys, release the hormone epinephrine, also known as adrenaline. ACTH in turn prompts the adrenal glands to produce the steroid hormone cortisol, which increases blood pressure and blood sugar and suppresses the digestive and immune systems in response to stress.

This rush occurs throughout the body to activate a boost of energy that prepares the muscles for quick reaction to the potential threat. In turn, this hormonal release accelerates heart rate and lung function, expands muscular blood vessels and inhibits systems that are not essential to advancing or retreating like libido and digestion, among other things.

“These hormonal releases also cause the arrector pili muscles that surround the individual hair follicles to contract, making the hairs stand on end and causing goose bumps,” Griffith said.

When these tiny muscles contract, each hair is raised and depressions form in the skin surrounding each follicle. The skin’s bumpy surface resembles the flesh of a goose or other poultry whose feathers have been plucked, hence the term “goose bumps.”

Goose bumps are an inherited phenomenon that animals and humans both experience when adrenaline is released. When an animal’s body temperature drops, its hairs are raised to thicken the fur’s insulation and preserve more of the body’s heat. An animal can also use this hair-raising mechanism to increase its perceived size and seem more intimidating when threatened.

“Humans don’t necessarily have the ability or need to manipulate their body hair in such a way like our ancestors,” Griffith said, “but the trait still remains in our DNA, whether that adrenaline release is prompted by an uncanny environment or a sensational song.”

So, the next time you have “all the feels,” remember it is just the brain releasing the same chemicals that tell the skin to form goose bumps.

Comments Off on Why People Get The Chills When They Are Not Cold

December 20th, 2016

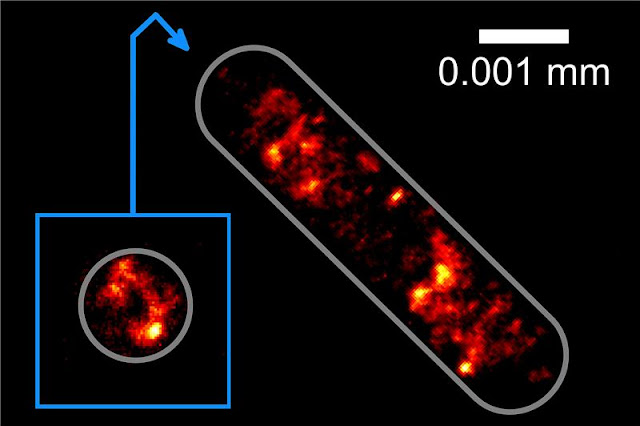

Until now, scientists who wanted to study blood cells, algae, or bacteria under the microscope had to mount the cells on a substrate such as a glass slide. Physicists at Bielefeld and Frankfurt Universities have developed a method that traps biological cells with a laser beam, enabling them to study the cells at very high resolution.

In science fiction books and films, this principle is known as the ‘tractor beam’. Using this procedure, the physicists have obtained superresolution images of the DNA in single bacteria. The physicist Robin Diekmann and his colleagues published this development on 13 December 2016 in the research journal ‘Nature Communications’.

One of the problems facing researchers who want to examine biological cells microscopically is that any preparatory treatment will change the cells. Many bacteria prefer to be able to swim freely in solution. Blood cells are similar: They are continuously in rapid flow, and do not remain on surfaces. Indeed, if they adhere to a surface, this changes their structure and they die.

Picture of the distribution of the genetic information in an Escherichia coli bacterial cell: Physicists at Bielefeld University are the first to photograph this distribution at the highest optical resolution without anchoring the cells on a glass substrate.

Photo: Bielefeld University

‘Our new method enables us to take cells that cannot be anchored on surfaces and then use an optical trap to study them at a very high resolution. The cells are held in place by a kind of optical tractor beam. The principle underlying this laser beam is similar to the concept to be found in the television series “Star Trek”,’ says Professor Dr. Thomas Huser. He is the head of the Biomolecular Photonics Research Group in the Faculty of Physics. ‘What’s special is that the samples are not only immobilized without a substrate but can also be turned and rotated. The laser beam functions as an extended hand for making microscopically small adjustments.’

The Bielefeld physicists have further developed the procedure for use in superresolution fluorescence microscopy. This is considered a key technology in biology and biomedicine because it delivers the first way to study biological processes in living cells at very high resolution, something that was previously only possible with electron microscopy. To obtain images with such microscopes, researchers add fluorescent probes to the cells they wish to study, and these light up when a laser beam is directed at them. A sensor records this fluorescence, so researchers can even obtain three-dimensional images of the cells.

Prof. Dr. Thomas Huser and his team have further developed a procedure for the superresolution microscopy of cells. This enables them to hold the cells without using substrates and obtain optical images with a resolution similar to that obtained with electron microscopes.

Photo: Bielefeld University

In their new method, the Bielefeld researchers use a second laser beam as an optical trap so that the cells float under the microscope and can be moved at will. ‘The laser beam is very intense but invisible to the naked eye because it uses infrared light,’ says Robin Diekmann, a member of the Biomolecular Photonics Research Group.

‘When this laser beam is directed towards a cell, forces develop within the cell that hold it within the focus of the beam,’ says Diekmann. Using their new method, the Bielefeld physicists have succeeded in holding and rotating bacterial cells in such a way that they can obtain images of the cells from several sides. Thanks to the rotation, the researchers can study the three-dimensional structure of the DNA at a resolution of circa 0.0001 millimetres.
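The figure of circa 0.0001 millimetres is easier to place once it is converted to nanometres and set against the classical diffraction limit of light microscopy, d = λ / (2·NA), which superresolution methods are designed to beat. The short sketch below does that arithmetic; the 520 nm wavelength and the numerical aperture of 1.4 are illustrative assumptions, not values reported by the Bielefeld group.

```python
# Rough comparison of the classical (Abbe) diffraction limit with the
# ~0.0001 mm resolution quoted in the article.
# ASSUMPTION: wavelength and numerical aperture are illustrative values,
# not parameters reported for the Bielefeld microscope.

def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Smallest resolvable distance d = wavelength / (2 * NA), in nanometres."""
    return wavelength_nm / (2.0 * numerical_aperture)

def mm_to_nm(mm: float) -> float:
    """Convert millimetres to nanometres (1 mm = 1,000,000 nm)."""
    return mm * 1e6

reported_nm = mm_to_nm(0.0001)             # resolution quoted in the text
classical_nm = abbe_limit_nm(520.0, 1.4)   # assumed green emission, oil-immersion objective

print(f"reported resolution: {reported_nm:.0f} nm")
print(f"Abbe limit (assumed 520 nm, NA 1.4): {classical_nm:.0f} nm")
print(f"improvement factor: {classical_nm / reported_nm:.1f}x")
```

Under these assumptions the quoted resolution is roughly twice as fine as what conventional light microscopy could resolve.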

Professor Huser and his team want to further modify the method so that it will enable them to observe the interplay between living cells. They would then be able to study, for example, how germs penetrate cells.

To develop the new methods, the Bielefeld scientists are working together with Prof. Dr. Mike Heilemann and Christoph Spahn from the Johann Wolfgang Goethe University of Frankfurt am Main.


December 14th, 2016

It’s only a matter of time before drugs are administered via patches with painless microneedles instead of unpleasant injections. But designers need to balance the need for flexible, comfortable-to-wear material with effective microneedle penetration of the skin. Swedish researchers say they may have cracked the problem.

In a recent issue of PLOS ONE, a research team from KTH Royal Institute of Technology in Stockholm reports a successful test of its microneedle patch, which combines stainless steel needles embedded in a soft polymer base, believed to be the first such combination to be studied scientifically. The soft material makes the patch comfortable to wear, while the stiff needles ensure reliable skin penetration.

Credit: KTH Royal Institute of Technology

Unlike epidermal patches, microneedles penetrate the upper layer of the skin, but not deeply enough to reach the nerves. This enables drug delivery, extraction of physiological signals for fitness monitoring devices, extraction of body fluids for real-time monitoring of glucose, pH level and other diagnostic markers, as well as cosmetic skin treatments and bioelectric treatments.

Frank Niklaus, professor of micro and nanofabrication at KTH, says that practically all microneedle arrays being tested today are “monoliths”, that is, the needles and their supporting base are made of the same – often hard and stiff – material. While that allows the microneedles to penetrate the skin, they are uncomfortable to wear. On the other hand, if the whole array is made from softer materials, they may fit more comfortably, but soft needles are less reliable for penetrating the skin.

“To the best of our knowledge, flexible and stretchable patches with arrays of sharp and stiff microneedles have not been demonstrated to date,” he says.

The researchers tested two variations of their concept, one of which was stretchable and slightly more flexible than the other. The more flexible patch, which has a base of molded thiol-ene-epoxy-based thermoset film, conformed well to deformations of the skin surface, and each of its 50 needles penetrated the skin during a 30-minute test.

A successful microneedle product could have major implications for health care delivery. “The chronically ill would not have to take daily injections,” says co-author Niclas Roxhed, who is research leader at the Department of Micro and Nanotechnology at KTH.

In addition to addressing people’s reluctance to take painful shots, microneedles also offer a hygiene benefit. The World Health Organization estimates that about 1.3 million people die worldwide each year due to improper handling of needles.

“Since the patch does not enter the bloodstream, there is less risk of spreading infections,” Roxhed says.


December 14th, 2016

James Webber took up barefoot running 12 years ago. He needed to find a new passion after deciding his planned career in computer-aided drafting wasn’t a good fit. Eventually, his shoeless feet led him to the University of Arizona, where he enrolled as a doctoral student in the School of Anthropology.

Webber was interested in studying the mechanics of running, but as the saying goes, one must learn to walk before they can run, and that — so to speak — is what Webber has been doing in his research.

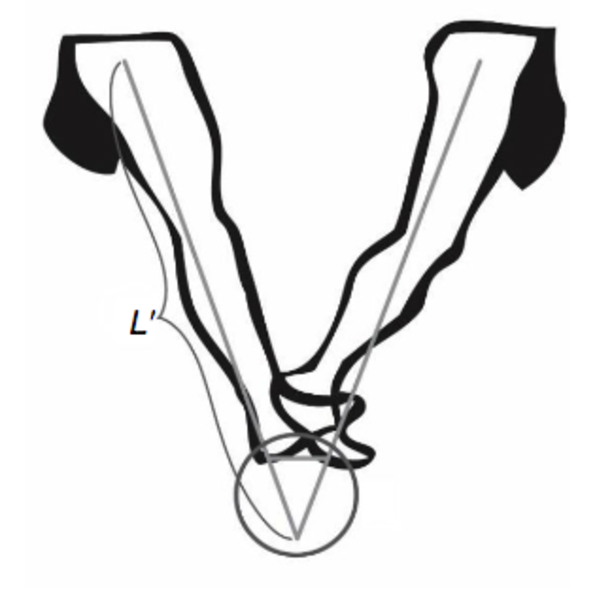

His most recent study on walking, published in the Journal of Experimental Biology, specifically explores why humans walk with a heel-to-toe stride, while many other animals — such as dogs and cats — get around on the balls of their feet.

Credit: University of Arizona

It was an especially interesting question from Webber’s perspective, because those who do barefoot running, or “natural running,” land on the middle or balls of their feet instead of the heels when they run — a stride that would feel unnatural when walking.

Indeed, we humans are pretty set in our ways when it comes to how we walk, but our heel-first style could be considered somewhat curious.

“Humans are very efficient walkers, and a key component of being an efficient walker in all kinds of mammals is having long legs,” Webber said. “Cats and dogs are up on the balls of their feet, with their heel elevated up in the air, so they’ve adapted to have a longer leg, but humans have done something different. We’ve dropped our heels down on the ground, which physically makes our legs shorter than they could be if we were up on our toes, and this was a conundrum to us (scientists).”

Webber’s study, however, offers an explanation for why our heel-strike stride works so well, and it still comes down to limb length: Heel-first walking creates longer “virtual legs,” he says.

We Move Like a Human Pendulum

When humans walk, Webber says, they move like an inverted swinging pendulum, with the body essentially pivoting above the point where the foot meets the ground below. As we take a step, the center of pressure slides across the length of the foot, from heel to toe, with the true pivot point for the inverted pendulum occurring midfoot and effectively several centimeters below the ground. This, in essence, extends the length of our “virtual legs” below the ground, making them longer than our true physical legs.

As Webber explains: “Humans land on their heel and push off on their toes. You land at one point, and then you push off from another point eight to 10 inches away from where you started. If you connect those points to make a pivot point, it happens underneath the ground, basically, and you end up with a new kind of limb length that you can understand. Mechanically, it’s like we have a much longer leg than you would expect.”
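One way to make the "pivot below the ground" idea concrete: if the hip rides over the foot like an inverted pendulum, its path during stance traces an arc, and the center of that arc is the effective pivot while the arc's radius is the "virtual leg" length. The sketch below fits a circle to a hip path by algebraic (Kasa) least squares. It is an illustration under stated assumptions, not the analysis method used in Webber and Raichlen's study, and the hip path is synthetic: a hypothetical 1.00 m virtual leg pivoting 0.10 m below the floor, so the hip sits about 0.90 m above the floor at mid-stance.

```python
import math

def solve3(a, b):
    """Solve the 3x3 system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x

def fit_circle(points):
    """Algebraic (Kasa) least-squares circle fit.

    Fits x^2 + y^2 + D*x + E*y + F = 0 via the normal equations and
    returns (center_x, center_y, radius).
    """
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for x, y in points:
        row = (x, y, 1.0)
        rhs = -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = solve3(ata, atb)
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)

# HYPOTHETICAL synthetic hip path: noise-free arc of radius 1.00 m whose
# center lies 0.10 m below the floor (floor at y = 0), swept from
# 20 degrees behind vertical to 20 degrees ahead of it.
PIVOT_X, PIVOT_Y, VIRTUAL_LEG = 0.0, -0.10, 1.00
hip_path = []
for step in range(41):
    angle = math.radians(-20 + step)
    hip_path.append((PIVOT_X + VIRTUAL_LEG * math.sin(angle),
                     PIVOT_Y + VIRTUAL_LEG * math.cos(angle)))

cx, cy, leg = fit_circle(hip_path)
print(f"pivot height: {cy:.3f} m, virtual leg length: {leg:.3f} m")
```

With real motion-capture data the points would be noisy, and a geometric (orthogonal-distance) fit is often preferred over this simpler algebraic one.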

Webber and his adviser and co-author, UA anthropologist David Raichlen, reached this conclusion after monitoring study participants on a treadmill in the University’s Evolutionary Biomechanics Lab. They compared participants asked to walk normally with those asked to walk toe-first. Toe-first walkers moved more slowly and had to work 10 percent harder than those walking with a conventional stride, and the conventional walkers’ limbs were, in effect, 15 centimeters longer than those of the toe-first walkers.

Researchers examined differences between heel-first and toe-first walking in the UA’s Evolutionary Biomechanics Lab.

Image courtesy of James Webber

“The extra ‘virtual limb’ length is longer than if we had just had them stand on their toes, so it seems humans have found a novel way of increasing our limb length and becoming more efficient walkers than just standing on our toes,” Webber said. “It still all comes down to limb length, but there’s more to it than how far our hip is from the ground. Our feet play an important role, and that’s often something that’s been overlooked.”

When the researchers sped up the treadmill to look at the transition from walking to running, they also found that toe-first walkers switched to running at lower speeds than regular walkers, further showing that toe-first walking is less efficient for humans.

Ancient Human Ancestors Had Extra-Long Feet

It’s no wonder we humans are so set in our ways when it comes to walking heel-first: we’ve been doing it for a long time. Scientists know from footprints preserved in volcanic ash at Laetoli, Tanzania, that ancient hominins practiced heel-to-toe walking as early as 3.6 million years ago.

When you think of walking humans as moving like an inverted pendulum, the pivot point for the pendulum essentially occurs beneath the ground.

Image courtesy of James Webber

Our feet have changed over the years, however. Early bipeds (animals that walk on two feet) appear to have had rigid feet that were proportionally much longer than ours today: about 70 percent of the length of their femur, compared with 54 percent in modern humans. This likely made them very fast and efficient walkers. While modern humans kept the heel-first style of walking, Webber suggests that our toes and feet may have become proportionally shorter as we evolved into better runners capable of pursuing prey.

“When you’re running, if you have a really long foot and you need to push off really hard way out at the end of your foot, that adds a lot of torque and bending,” Webber said. “So the idea is that as we shifted into running activities, our feet started to shrink because maybe it wasn’t as important to be super-fast walkers. Maybe it became important to be really good runners.”
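Webber's point about torque follows from torque = force × lever arm. As a back-of-envelope illustration, the sketch below compares push-off torque for the two foot-to-femur proportions quoted above (70 percent and 54 percent). It rests on loudly simplifying assumptions: the push-off lever arm is taken to scale with foot length, and the femur length and push-off force are hypothetical values held equal in both cases.

```python
# Back-of-envelope push-off torque for the two foot proportions in the text.
# ASSUMPTIONS (hypothetical, for illustration only):
#   * the lever arm at push-off scales with foot length,
#   * femur length and push-off force are identical in both cases.

FEMUR_LENGTH_M = 0.45       # hypothetical femur length, m
PUSH_OFF_FORCE_N = 1000.0   # hypothetical push-off force, N

def push_off_torque(foot_to_femur_ratio: float) -> float:
    """Torque (N*m) = force * lever arm, with the lever arm ~ foot length."""
    foot_length_m = foot_to_femur_ratio * FEMUR_LENGTH_M
    return PUSH_OFF_FORCE_N * foot_length_m

early_biped = push_off_torque(0.70)   # foot ~70% of femur length
modern_human = push_off_torque(0.54)  # foot ~54% of femur length

print(f"early biped:  {early_biped:.0f} N*m")
print(f"modern human: {modern_human:.0f} N*m")
print(f"ratio: {early_biped / modern_human:.2f}")
```

Because the assumed force and femur length cancel, the ratio depends only on the two proportions: for the same push, the longer foot would load the ankle with roughly 30 percent more torque.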


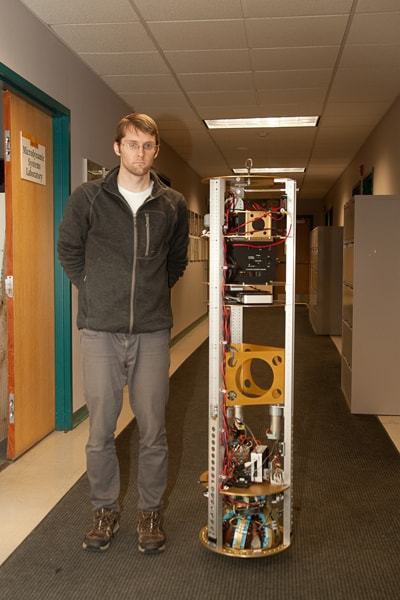

October 18th, 2016

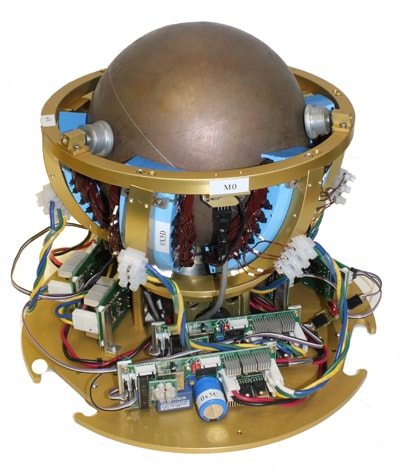

More than a decade ago, Ralph Hollis invented the ballbot, an elegantly simple robot whose tall, thin body glides atop a sphere slightly smaller than a bowling ball. The latest version, called SIMbot, has an equally elegant motor with just one moving part: the ball.

The only other active moving part of the robot is the body itself.

The spherical induction motor eliminates the robot’s mechanical drive system.

The spherical induction motor (SIM) invented by Hollis, a research professor in Carnegie Mellon University’s Robotics Institute, and Masaaki Kumagai, a professor of engineering at Tohoku Gakuin University in Tagajo, Japan, eliminates the mechanical drive systems that each of the previous ballbots used. Because of this extreme mechanical simplicity, SIMbot requires less routine maintenance and is less likely to suffer mechanical failures.

The new motor can move the ball in any direction using only electronic controls. These movements keep SIMbot’s body balanced atop the ball.
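Keeping a tall body balanced on a ball is essentially the problem of balancing an inverted pendulum: when the body starts to lean, the ball must accelerate underneath it in the same direction. The toy simulation below shows that principle with a linearized pendulum and a simple proportional-derivative (PD) rule. Everything here is a hypothetical illustration: the body height and gains are invented, the ball's own inertia is ignored, and SIMbot's actual controller is not described in the article.

```python
G = 9.81             # gravitational acceleration, m/s^2
BODY_HEIGHT = 1.0    # HYPOTHETICAL distance from ball center to body's center of mass, m
KP, KD = 30.0, 10.0  # HYPOTHETICAL proportional and derivative gains

def simulate(theta0, kp=KP, kd=KD, seconds=5.0, dt=0.001):
    """Return the lean angle (rad) after `seconds`, starting at rest at theta0."""
    theta, omega = theta0, 0.0
    for _ in range(round(seconds / dt)):
        # PD rule: accelerate the ball toward the side the body is leaning.
        accel = kp * theta + kd * omega
        # Linearized inverted pendulum on an accelerating base:
        # theta'' = (g * theta - a) / L
        alpha = (G * theta - accel) / BODY_HEIGHT
        # Semi-implicit Euler: update the lean rate first, then the angle.
        omega += alpha * dt
        theta += omega * dt
    return theta

print(f"with control:    {simulate(0.05):.2e} rad after 5 s")
print(f"without control: {simulate(0.05, kp=0.0, kd=0.0, seconds=1.0):.2f} rad after 1 s")
```

The closed loop is stable whenever the proportional gain exceeds g (here 30 > 9.81) and the derivative gain is positive; with both gains at zero, the small initial lean grows rapidly, which is why the motor must keep correcting.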

Early comparisons between SIMbot and a mechanically driven ballbot suggest the new robot is capable of similar speed — about 1.9 meters per second, or the equivalent of a very fast walk — but is not yet as efficient, said Greg Seyfarth, a former member of Hollis’ lab who recently completed his master’s degree in robotics.

Induction motors are nothing new; they use magnetic fields to induce electric current in the motor’s rotor, rather than through an electrical connection. What is new here is that the rotor is spherical and, thanks to some fancy math and advanced software, can move in any combination of three axes, giving it omnidirectional capability. In contrast to other attempts to build a SIM, the design by Hollis and Kumagai enables the ball to turn all the way around, not just move back and forth a few degrees.
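Why three axes give omnidirectional motion can be seen from basic rolling kinematics. For a ball rolling without slipping on a flat floor, the velocity of its center is v = ω × (r·ẑ), where ω is the ball's angular velocity and r its radius. Spin about the horizontal x and y axes moves the ball across the floor, while spin about the vertical z axis only turns it in place. The sketch below inverts that relation to find the spin for a desired floor velocity. It is textbook rigid-body kinematics under an idealized no-slip assumption, not SIMbot's control software, and the ball radius is a hypothetical value.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

def cross(a: Vec3, b: Vec3) -> Vec3:
    """Vector cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def spin_for_velocity(vx: float, vy: float, radius: float, yaw_rate: float = 0.0) -> Vec3:
    """Angular velocity (rad/s) about x, y, z that rolls the ball at (vx, vy) m/s.

    The yaw component does not move the ball, so it is left free.
    """
    return (-vy / radius, vx / radius, yaw_rate)

def center_velocity(omega: Vec3, radius: float) -> Vec3:
    """Forward check for rolling without slip: v = omega x (r * z_hat)."""
    return cross(omega, (0.0, 0.0, radius))

BALL_RADIUS = 0.1  # HYPOTHETICAL ball radius, m
spin = spin_for_velocity(1.2, 1.2, BALL_RADIUS)  # diagonal motion at ~1.7 m/s
v = center_velocity(spin, BALL_RADIUS)
print(f"spin about x, y, z: {spin[0]:.1f}, {spin[1]:.1f}, {spin[2]:.1f} rad/s")
print(f"resulting velocity: {v[0]:.2f}, {v[1]:.2f} m/s")
```

Any floor direction is reachable simply by mixing the x and y spin components, which is what a motor able to torque the ball about arbitrary axes provides.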

Though Hollis said it is too soon to compare the cost of the experimental motor with conventional motors, he said long-range trends favor the technologies at its heart.

“This motor relies on a lot of electronics and software,” he explained. “Electronics and software are getting cheaper. Mechanical systems are not getting cheaper, or at least not as fast as electronics and software are.”

SIMbot’s mechanical simplicity is a significant advance for ballbots, a type of robot that Hollis maintains is ideally suited for working with people in human environments. Because the robot’s body dynamically balances atop the motor’s ball, a ballbot can be as tall as a person, but remain thin enough to move through doorways and in between furniture. This type of robot is inherently compliant, so people can simply push it out of the way when necessary. Ballbots also can perform tasks such as helping a person out of a chair, helping to carry parcels and physically guiding a person.

Greg Seyfarth and SIMbot

Until now, moving the ball to maintain the robot’s balance has relied on mechanical means. Hollis’ ballbots, for instance, have used an “inverse mouse ball” method, in which four motors actuate rollers that press against the ball so that it can move in any direction across a floor, while a fifth motor controls the yaw motion of the robot itself.

“But the belts that drive the rollers wear out and need to be replaced,” said Michael Shomin, a Ph.D. student in robotics. “And when the belts are replaced, the system needs to be recalibrated.” He said the new motor’s solid-state system would eliminate that time-consuming process.

The rotor of the spherical induction motor is a precisely machined hollow iron ball with a copper shell. Current is induced in the ball with six laminated steel stators, each with three-phase wire windings. The stators are positioned just next to the ball and are oriented slightly off vertical.

The six stators generate travelling magnetic waves in the ball, causing the ball to move in the direction of the wave. The direction of the magnetic waves can be steered by altering the currents in the stators.
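The travelling-wave idea can be illustrated with the textbook case of three windings spaced 120 electrical degrees apart and fed with three-phase currents: their combined field is a wave of constant amplitude (1.5 times a single winding's peak) that travels at the supply frequency, and swapping two of the phases reverses its direction. The sketch below computes that summed field along one axis. The single-axis geometry and the 50 Hz supply are assumptions for illustration; the SIM's six tilted stators and its steering scheme are not modelled here.

```python
import math

# Electrical offsets of the three windings (and of their phase currents).
PHASES = (0.0, 2 * math.pi / 3, 4 * math.pi / 3)

def field(position: float, t: float, freq_hz: float = 50.0, reverse: bool = False) -> float:
    """Summed field at electrical angle `position` (rad) and time `t` (s).

    Each winding contributes current(t) * spatial pattern(position).
    ASSUMPTION: idealized sinusoidal windings along a single axis.
    """
    w = 2 * math.pi * freq_hz
    total = 0.0
    for shift in PHASES:
        # Reversing the phase sequence (swapping two phases) flips the time shift.
        current_shift = -shift if reverse else shift
        total += math.cos(w * t - current_shift) * math.cos(position - shift)
    return total

def peak_position(t: float, reverse: bool = False, samples: int = 3600) -> float:
    """Electrical angle in [0, 2*pi) where the summed field is largest at time t."""
    angles = [2 * math.pi * i / samples for i in range(samples)]
    return max(angles, key=lambda a: field(a, t, reverse=reverse))

# A quarter period (5 ms at 50 Hz) after t = 0, the peak has moved a quarter
# turn forward, or a quarter turn backward with the phases swapped.
print(f"forward peak at 5 ms: {math.degrees(peak_position(0.005)):.0f} deg")
print(f"reversed peak at 5 ms: {math.degrees(peak_position(0.005, reverse=True)):.0f} deg")
```

In an induction motor the conducting rotor (here the ball's copper shell) is dragged along by such a wave, so changing how the stator currents are phased changes which way the ball is pushed.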

Hollis and Kumagai jointly designed the motor. Ankit Bhatia, a Ph.D. student in robotics, and Olaf Sassnick, a visiting scientist from Salzburg University of Applied Sciences, adapted it for use in ballbots.

Getting rid of the mechanical drive eliminates a lot of the friction of previous ballbot models, but virtually all friction could be eliminated by eventually installing an air bearing, Hollis said. The robot body would then be separated from the motor ball with a cushion of air, rather than passive rollers.