September 28th, 2015

The pace and scale of technological change are so frenetic that some predict we are entering a fourth industrial revolution, powered by artificial intelligence (AI), the internet of things and big data. Indeed, the changes are coming so thick and fast that it can be difficult to take a step back and attempt to understand the phenomenon. Searching for metaphors to describe what is happening, Klaus Schwab chose a tsunami: “You see small signs at the shore, and suddenly the wave sweeps in.” The transformations are so overwhelming that, rather than riding the waves, we let them overpower us.

But it’s time to come up for air. We spoke with 800 leading experts and executives from the ICT community to get their take on what our digital future looks like. What big trends will define it? What timescale are we talking? And what impact will it have on society? Here are their 13 signs that a fourth industrial revolution might be around the corner.

1. Implantable and wearable technologies

Remember how bulky mobile phones used to be in the 80s and even the 90s? By 2025, you might look back at your smartphone and say the same thing. The vast majority (82%) of respondents said that in the next 10 years, the first implantable mobile phone will be available commercially. Even more of them (91%) said that 10% of us would be wearing clothes that are connected to the internet.

2. Our digital presence

Just 10 years ago, a “digital presence” meant having an email address. Now, even your grandparents have a Facebook page, a Twitter account and a personal website. By 2025, 80% of people across the world will have a digital presence, according to 84% of the experts we surveyed.

3. Vision as the new interface

If, like me, you were unfortunate enough to need glasses from a young age, you’ll remember the relentless taunting from classmates. But by 2025, we bespectacled folks could be the coolest kids on the block: 86% of those surveyed think that 10% of reading glasses will be connected to the internet, allowing the wearer to access a wealth of apps and data on the go.

4. Ubiquitous computing

For those of us privileged enough to live in places where internet access is the norm, it might seem like everything has moved online: but still, 57% of the world is not connected. Almost 80% of respondents said that in the next decade, 90% of the global population will have regular access to the internet, democratizing the many benefits of the digital revolution.

5. A supercomputer in your pocket

Last year, global mobile penetration rates stood at 50%. According to 81% of those we surveyed, within the next decade, 90% of the world will be using smartphones. Not only will mobile penetration rates increase, but the devices themselves will be even more sophisticated, making yesterday’s supercomputers pale in comparison.

6. Storage for all

Digital hoarders, rejoice: you can continue producing vast quantities of content without having to worry about where to store it. By 2025, 91% of the experts we interviewed said most of us (90%) will have unlimited and free digital storage.

7. The internet of and for things

Glasses, clothing, accessories – with increasing computer power and falling hardware prices, we can connect almost anything to the internet. Within the next decade, there will be 1 trillion sensors connected to the internet of things, according to 89% of respondents. The opportunities this development will provide are almost limitless: connected sensors will improve safety (of everything from planes to food), boost productivity and help us manage our resources more efficiently and sustainably.

8. Smart cities and smarter homes

Many of us are now lucky enough to have a couple of connected devices in our homes: maybe a Sonos wireless sound system and a smart TV. But by 2025, 70% of survey respondents think 50% of internet traffic delivered to homes will be for appliances and devices such as smart fridges, thermostats and security systems. Our cities are a little bit further behind: only 64% of respondents said we would have a city with a population of 50,000 with no traffic lights. But of those cars stuck at the red light, 10% of them will be driverless, according to 79% of those surveyed.

9. Big data for big insights

The first government census took place almost 6,000 years ago and since then, very little has changed: in many countries, residents still receive a paper-based form in the mail. But with all the data we’re now producing, this might soon be a thing of the past. Most survey respondents (83%) think that by 2025, at least one government will have replaced its census with big data sources.

10. Robots, decision-making and the world of work

We’ve all heard about the benefits of a diverse board of directors: but does that extend to robots? Maybe not, because less than half (45%) of respondents thought that by 2025, we would see the first AI machine on a corporate board. That doesn’t mean we won’t be seeing robots in the workplace in the next 10 years: 75% of those surveyed said 30% of corporate audits would be carried out by robots, and 86% said we would see the first robotic pharmacist in the US.

11. The rise of digital currencies

Today, only a small fraction of the world’s GDP – around 0.025% – is held on the blockchain, a digital ledger where transactions made in currencies like bitcoin are recorded. Only 58% of respondents thought that amount would increase to 10% by 2025. But by that date, 73% of them said they thought we’d see a government collect taxes via a blockchain.

12. The sharing economy

When April Rinne, a sharing economy expert, came to Davos two years ago, very few people knew of the concept: “Whenever I asked anyone I met if they had heard of the phrase, I would receive blank stares.” Today, Uber and Airbnb, two of the most well-known sharing economy firms, are the most and third-most valuable start-ups in the world. Their growth looks set to continue: according to 67% of respondents, by 2025 more trips will be through car shares than in private cars.

13. 3D printing

A recent article on our blog referred to 3D printing as “one of the pillars of the future of manufacturing”. The experts we surveyed agreed: 84% of them said that within the next 10 years, the first 3D-printed car will be in production, and 81% said 5% of consumer products would be printed in 3D. But the technology will transform more than just manufacturing: 76% of respondents said that by 2025, medical professionals will have carried out the first transplant of a 3D-printed liver.

Comments Off on 13 signs the fourth industrial revolution is almost here

September 28th, 2015

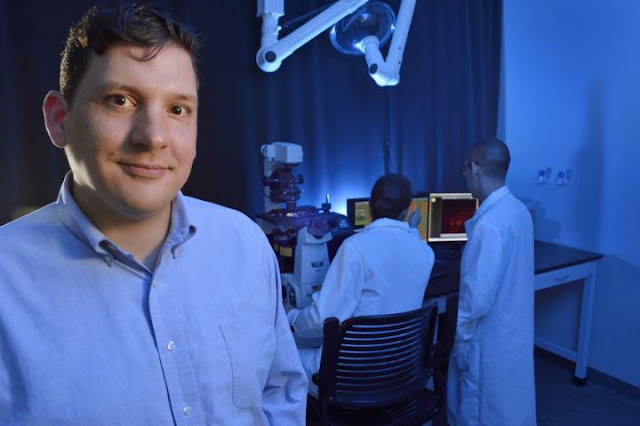

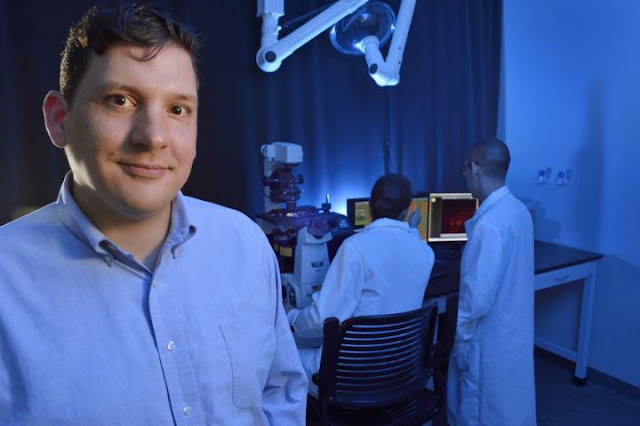

Women react differently to negative images compared to men, which may be explained by subtle differences in brain function. This neurobiological explanation for women’s apparent greater sensitivity has been demonstrated by researchers at the CIUSSS de l’Est-de-l’Île-de-Montréal (Institut universitaire en santé mentale de Montréal) and the University of Montreal, whose findings were published today in Psychoneuroendocrinology.

“Not everyone’s equal when it comes to mental illness,” said Adrianna Mendrek, a researcher at the Institut universitaire en santé mentale de Montréal and lead author of the study. “Greater emotional reactivity in women may explain many things, such as their being twice as likely to suffer from depression and anxiety disorders compared to men,” added Mendrek, who is also an associate professor at the University of Montreal’s Department of Psychiatry.

Credit: Greg Kerr, CC BY 2.0, https://flic.kr/p/b33XC2

In their research, Mendrek and her colleagues observed that certain areas of the brains of women and men, especially those of the limbic system, react differently when exposed to negative images. They therefore investigated whether women’s brains work differently than men’s and whether this difference is modulated by psychological (male or female traits) or endocrinological (hormonal variations) factors.

For the study, 46 healthy participants – including 25 women – viewed images and said whether these evoked positive, negative, or neutral emotions. At the same time, their brain activity was measured by brain imaging. Blood samples were taken beforehand to determine hormonal levels (e.g., estrogen, testosterone) in each participant.

The researchers found that subjective ratings of negative images were higher in women compared to men. Higher testosterone levels were linked to lower sensitivity, while higher feminine traits (regardless of sex of tested participants) were linked to higher sensitivity. Furthermore, while the dorsomedial prefrontal cortex (dmPFC) and amygdala of the right hemisphere were activated in both men and women at the time of viewing, the connection between the amygdala and dmPFC was stronger in men than in women, and the more these two areas interacted, the less sensitivity to the images was reported. “This last point is the most significant observation and the most original of our study,” said Stéphane Potvin, a researcher at the Institut universitaire en santé mentale and co-author of the study.

The amygdala is a region of the brain known to act as a threat detector; it activates when an individual is exposed to images of fear or sadness, while the dmPFC is involved in cognitive processes (e.g., perception, emotions, reasoning) associated with social interactions. “A stronger connection between these areas in men suggests they have a more analytical than emotional approach when dealing with negative emotions,” added Potvin, who is also an associate professor at the University of Montreal’s Department of Psychiatry. “It is possible that women tend to focus more on the feelings generated by these stimuli, while men remain somewhat ‘passive’ toward negative emotions, trying to analyse the stimuli and their impact.”

This connection between the limbic system and the prefrontal cortex appeared to be modulated by testosterone – the male hormone – which tends to reinforce this connection, as well as by an individual’s gender (as measured by the level of femininity and masculinity). “So there are both biological and cultural factors that modulate our sensitivity to negative situations in terms of emotions,” Mendrek explained. “We will now look at how the brains of men and women react depending on the type of negative emotion (e.g., fear, sadness, anger) and the role of the menstrual cycle in this reaction.”

Comments Off on Study shows women negative emotions different than men

August 27th, 2015

By Alton Parrish.

Scott X. Mao’s new process solves an age-old conundrum

Materials scientists have long sought to form glass from pure, monoatomic metals. Scott X. Mao and colleagues did it.

Their paper, “Formation of Monoatomic Metallic Glasses Through Ultrafast Liquid Quenching,” was recently published online in Nature, a leading science journal.

Metallic Glasses

Credit: Wikipedia

Mao, William Kepler Whiteford Professor of Mechanical Engineering and Materials Science at the University of Pittsburgh, says, “This is a fundamental issue explored by people in this field for a long time, but nobody could solve the problem. People believed that it could be done, and now we’re able to show that it is possible.”

Metallic glasses are unique in that their structure is not crystalline (as it is in most metals), but rather is disordered, with the atoms randomly arranged. They are sought for various commercial applications because they are very strong and are easily processed.

Mao’s novel method of creating metallic glass involved developing and implementing a new technique (a cooling nano-device operated under an in-situ transmission electron microscope) that enabled him and his colleagues to achieve an unprecedentedly high cooling rate, which allowed the liquefied elemental metals tantalum and vanadium to be transformed into glass.

Comments Off on Pitt engineer turns metal into glass

August 27th, 2015

The Daily Journalist

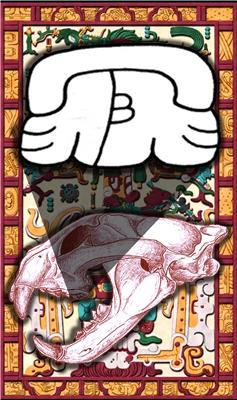

Sixty-three years after the discovery of the tomb of the Mayan king Pakal inside the Temple of Inscriptions in Palenque, Chiapas (a southern state of Mexico), researcher Guillermo Bernal Romero, of the Maya Studies Center at the Institute of Philological Investigations of the National University of Mexico (UNAM), has deciphered the T514 glyph, which reads YEJ: “sharp edge”.

Credit: National University of Mexico

The scholar explained that “the name is related to the nine warriors depicted on the walls of the tomb; it also refers to war, the capture of prisoners and the conquest of cities. The tomb itself is a glorification of war and has a symbolic relationship with various elements, for example, the nine levels of the Mayan underworld.” The YEJ glyph is associated with Te’, which means “spear”, so this finding makes it possible to read, for the first time, the name of the chamber that holds the sacred tomb of the ruler: “The House of the Nine Sharp Spears”.

The enigma was solved by studying different elements, including the jaguar, a sacred animal of the Mayan universe. By analyzing various skulls and observing their molars, the researcher related that information to the glyphs and determined that the glyph in question is a schematic representation of the upper molar of a jaguar. It appears in more than 50 Mayan inscriptions with a war focus.

Credit: National University of Mexico

Bernal Romero explained that, after 1,700 years of being hidden, the full name of House C of the Palenque Palace can also be deciphered by using the YEJ glyph: “The House of the Sharp Spear”, the residence of Mayan King Janaahb K’inich ‘Pakal. “This finding provides a leap in accuracy and understanding of Mayan life. Today we know that there were few wars, but they had a warrior philosophy; that is why deciphering this glyph helps determine the rate of wars in the Late Classic, between 700 and 800 AD, the full cycle of the Maya,” the doctor in Mesoamerican Studies also highlighted.

It is important to note that there are about 20,500 Mayan glyphs, of which 80 percent have already been decoded; of those at Palenque, 90 percent have already been interpreted.

The discovery was announced as part of the 45th anniversary of the founding of the Maya Studies Center, of the Institute of Philological Investigations of the National University of Mexico. (Agencia ID)

Comments Off on Deciphering of Mayan glyph, provides a leap for understanding Mayan culture

August 27th, 2015

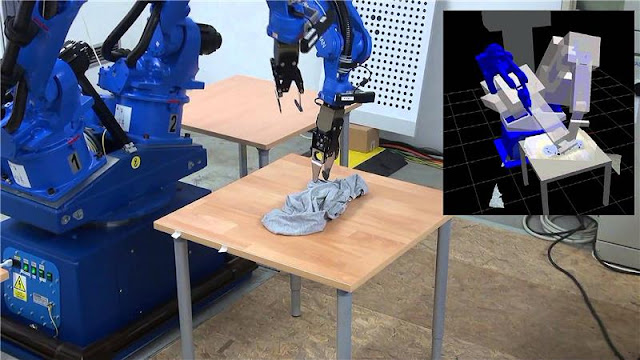

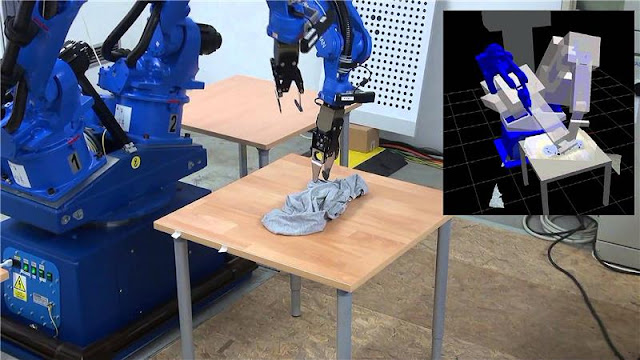

A Mexican in Europe has helped design the prototype of a robot that is able to separate, stretch, smooth wrinkles and fold clothes. The technology takes part in almost the entire washing process, except for loading the clothes into the washing machine.

Gerardo Aragon Camarasa, an engineer in industrial robotics, graduated from the School of Mechanical and Electrical Engineering (ESIME) of the Polytechnic Institute of Mexico (IPN). He is now a postdoctoral researcher at the University of Glasgow in Scotland, and told us that the technology is at an early stage and requires more work before it becomes a final product. He acknowledges, however, that international companies have contacted the team with an interest in taking over the prototype.

Credit: Investigación y Desarrollo

The Mexican researcher explains that the robot is unique in that it separates each garment, although there are some similar robots in Prague, Czech Republic, and one in Greece.

The robot has two standard robotic arms with six degrees of freedom; such arms are used on a daily basis in automated production lines in the car industry for welding and painting.

Aragon Camarasa explains that in the robot they designed, both arms were mounted on a rotating base, which provides an extra degree of freedom for handling clothes, similar to the movement of the human waist. As for the sensors, the robot has a pair of grippers (tweezers) at each end that simulate a pair of fingers able to rub against each other, giving it the ability to perceive the texture of the material through touch.

At the University of Glasgow, Aragon Camarasa is working with Li (Kevin) Sun and Dr. Paul Siebert, who opened the doors of the institution to him.

“I participated in the development of the project as a doctoral student under the tutelage of Paul. After two years of shaping the project proposal, it was accepted and months before completing the doctorate, Dr. Siebert offered me the job.”

The project was sponsored by the European Union under the 7th Framework Programme for Research and Technological Development with a duration of three years.

Research to develop the prototype was completed in January. The robot has three Kinects that provide real-time 3D images and maps. They are located on the wrists of the arms and on the waist, and are used for manipulation tasks.

Additionally, “the robot has an active head, which is the main contribution of the Glasgow project. It captures high-resolution images and reconstructs 3D maps while the eyes move and focus on objects or points of interest in the clothes. The information processed by the head is used for accurate handling and for recognizing the type of material, so that structures such as wrinkles and edges can be separated and defined”.

The consortium members involved in the project are CERTH in Greece, CVUT in Prague, the universities of Genoa in Italy and Glasgow in Scotland, and Neovision, a company from the Czech Republic.

This company would be responsible for marketing the robot, though the intellectual property belongs to each university.

A little bit of history

Gerardo Aragon recalls that when he finished his studies at the ESIME, his thesis advisor, Dr. Emmanuel Merchan Cruz, suggested a doctoral study in the UK and his parents urged him to undertake postgraduate studies in another country.

Although he contacted several professors from various universities, only two wrote back telling him about their research and how he could fit in the working group.

Dr. Siebert offered various PhD projects, one of which was robotic vision with an active head. After being accepted by the university, Aragon sought support from the National Council of Science and Technology (CONACYT) and from the Alban program of the European Union, which offers support to Latin American students.

“The University of Glasgow, to date, has given me support as any other student and now as a member of the academic staff. Mexicans are highly regarded here, especially if you want to work and deliver a quality product.” (Agencia ID)

Comments Off on Service robots classifies, smooths and folds clothes

August 17th, 2015

By NASA.

This colorful bubble is a planetary nebula called NGC 6818, also known as the Little Gem Nebula. It is located in the constellation of Sagittarius (The Archer), roughly 6,000 light-years away from us. The rich glow of the cloud is just over half a light-year across — humongous compared to its tiny central star — but still a little gem on a cosmic scale.

Image credit: ESA/Hubble & NASA, Acknowledgement: Judy Schmidt

When stars like the sun enter “retirement,” they shed their outer layers into space to create glowing clouds of gas called planetary nebulae. This ejection of mass is uneven, and planetary nebulae can have very complex shapes. NGC 6818 shows knotty filament-like structures and distinct layers of material, with a bright and enclosed central bubble surrounded by a larger, more diffuse cloud.

Scientists believe that the stellar wind from the central star propels the outflowing material, sculpting the elongated shape of NGC 6818. As this fast wind smashes through the slower-moving cloud it creates particularly bright blowouts at the bubble’s outer layers.

Hubble previously imaged this nebula back in 1997 with its Wide Field Planetary Camera 2, using a mix of filters that highlighted emission from ionized oxygen and hydrogen. This image, while from the same camera, uses different filters to reveal a different view of the nebula.

Some of the most breathtaking views in the Universe are created by nebulae — hot, glowing clouds of gas. This new NASA/ESA Hubble Space Telescope image shows the center of the Lagoon Nebula, an object with a deceptively tranquil name, in the constellation of Sagittarius. The region is filled with intense winds from hot stars, churning funnels of gas, and energetic star formation, all embedded within an intricate haze of gas and pitch-dark dust.

Stormy Seas in Sagittarius

Image Credit: NASA, ESA, J. Trauger (Jet Propulsion Laboratory)

Comments Off on A gem and storm in Sagittarius

August 17th, 2015

By Alton Parrish.

Stanford experts say that China devalued its currency to help spur exports, growth and employment. It wants its currency to become a pre-eminent one in the global economy.

Stanford economists Michael Spence and Nicholas Hope are carefully watching China’s currency devaluation and its effect on the American and international economies.

Credit: Shutterstock

China’s surprise devaluation of its currency this week will likely boost the country’s exports and generate more jobs at home, Stanford scholars say.

Since Tuesday, China’s currency has fallen 4.4 percent, sparking concern among the numerous countries intertwined with the world’s second largest economy. There is more to the story, according to China experts at Stanford.

A. Michael Spence, a Stanford economist who has studied China, said that China’s currency devaluation indicates it is struggling to meet its 7 percent economic growth target for this year and that domestic growth engines are not working fast enough.

“In addition, the devaluation relative to the U.S. dollar probably reflects the strength of the dollar. The picture would look different relative to the euro and yen. The (Chinese) stock market volatility did not help this year,” said Spence, professor and dean emeritus of the Graduate School of Business at Stanford. In 2001, he was awarded the Nobel Memorial Prize in Economic Sciences for his contributions to the analysis of markets with asymmetric information.

As for why China is devaluing its currency, Spence said that Chinese officials are trying to increase exports by making Chinese goods cheaper. Chinese exports fell 8 percent last month compared with a year ago.

Spence advises that China should use its considerable assets to accelerate reforms and long-term growth-oriented investments as it grapples with a slowing economy.

It is a delicate economic time for China, as Spence and other experts say the country could be caught in a “middle-income trap” – a common danger for developing economies when they begin to lose their competitive edge in exporting manufactured goods because their wages are on a rising trend.

“To simplify, the middle income transition requires a major shift in the growth dynamics and supportive policies. Many countries do not make this shift. It can be thought of as sticking with a successful formula beyond its useful life,” said Spence, also a senior fellow at Stanford’s Hoover Institution.

Critics have said China manipulates its currency to gain a trade advantage. Some U.S. policymakers have long argued that the renminbi is undervalued and that this has influenced many American companies to move production to China.

Stanford economist Nicholas Hope said the devaluation – though it received widespread news coverage – is such a small one that its impact is more symbolic than substantive.

“The chief effect of the Chinese move is to serve notice that China really is managing its exchange rate on a basket of currencies rather than a loose dollar peg,” said Hope, director of the China Program for the Stanford Center for International Development, which is part of the Stanford Institute for Economic Policy Research.

Hope said several factors could have contributed to the sudden adjustment of the fixing point of China’s currency to the U.S. dollar.

“First, for some months the rate has languished at the weak end of the range around the old 6.1162 fixing (of the currency), and the adjustment recognizes what the market has been telling the Chinese authorities,” he noted.

Second, after a lengthy period of stability at around 6.21 renminbi yuan to the dollar, China might be reminding market participants (along with the International Monetary Fund) that the Chinese currency floats, even if it is not freely flexible, according to Hope.

Third, after announcing a decline in international reserves for a third consecutive month, China might be discouraging speculative capital outflow by a small pre-emptive weakening of the currency, he said.

“Finally, given that export performance has disappointed, and even though the current account remains in surplus, the move is in the direction of maintaining China’s international competitiveness,” Hope said.

Hope suggested that China would be better off if it accelerated structural reforms that contribute to greater efficiency of investment and higher productivity. “Appropriate policies to boost domestic demand could help as well,” he added.

Overall, he believes the impact of the devaluation will be minimal.

“To the extent that others are affected, those countries that compete with China for sales to the European Union and the U.S. markets are likely to experience the biggest impact,” Hope said.

Comments Off on China’s currency responding more closely to market forces

August 9th, 2015

By Miami and Duke University.

When political candidates give a speech or debate an opponent, it’s not just what they say that matters — it’s also how they say it.

A new study by researchers at the University of Miami and Duke University shows that voters naturally seem to prefer candidates with deeper voices, which they associate with strength and competence more than age.

“Our analyses of both real-life elections and data from experiments show that candidates with lower-pitched voices are generally more successful at the polls,” explains Casey Klofstad, associate professor of political science at the University of Miami College of Arts and Sciences, who is corresponding author on both studies.

Human voice pitch

Credit: Wikipedia

The researchers say our love for leaders with lower-pitched voices may harken back to “caveman instincts” that associate leadership ability with physical prowess more than wisdom and experience.

“Modern-day political leadership is more about competing ideologies than brute force,” said study co-author Casey Klofstad, associate professor of political science at Miami. “But at some earlier time in human history it probably paid off to have a literally strong leader.”

Part of a larger field of research aimed at understanding how unconscious biases nudge voters towards one candidate or another, the findings are scheduled to appear online Friday, Aug. 7, in the open access journal PLOS ONE.

The results are consistent with a previous study by Klofstad and colleagues which also found that candidates with deeper voices get more votes. The researchers found that a deep voice conveys greater physical strength, competence and integrity. The findings held up for female candidates, too.

The question that remained was: why?

Conflating baritones with brawn has some merit, Klofstad said. Men and women with lower-pitched voices generally have higher testosterone, and are physically stronger and more aggressive.

What was difficult to explain, however, was what physical strength has to do with leadership in the modern age, or why people with deeper voices should be considered intrinsically more competent or as having greater integrity.

That got them thinking: maybe our love for lower-pitched voices makes sense because it favors candidates who are older and thus wiser and more experienced.

To test the idea, Klofstad and biologists Rindy Anderson and Steve Nowicki of Duke conducted two experiments.

First, they conducted a survey which suggested there might be something to the idea.

Eight hundred volunteers completed an online questionnaire with information about the age and sex of two hypothetical candidates and indicated who they would vote for.

The candidates ranged in age from 30 to 70, but those in their 40s and 50s were most likely to win.

“That’s when leaders are not so young that they’re too inexperienced, but not so old that their health is starting to decline or they’re no longer capable of active leadership,” Klofstad said.

“Lo and behold, it also happens to be the time in life when people’s voices reach their lowest pitch,” Klofstad said.

For the second part of the study, the researchers asked 400 men and 403 women to listen to pairs of recorded voices saying, “I urge you to vote for me this November.”

Each paired recording was based on one person, whose voice pitch was then altered up and down with computer software.

After listening to each pair, the voters were asked which voice seemed stronger, more competent and older, and who they would vote for if they were running against each other in an election.

The deeper-voiced candidates won 60 to 76 percent of the votes. But when the researchers analyzed the voters’ perceptions of the candidates, they were surprised to find that strength and competence mattered more than age.

Whether candidates with lower voices are actually more capable leaders in the modern world is still unclear, they say.

As a next step, Klofstad and colleagues calculated the mean voice pitch of the candidates from the 2012 U.S. House of Representatives elections and found that candidates with lower-pitched voices were more likely to win. Now, they plan to see if their voice pitch data correlates with objective measures of leadership ability, such as years in office or number of bills passed.

Most people would like to think they make conscious, rational decisions about who to vote for based on careful consideration of the candidates and the issues, Klofstad said.

“We think of ourselves as rational beings, but our research shows that we also make thin impressionistic judgments based on very subtle signals that we may or may not be aware of.”

Biases aren’t always bad, Klofstad said. It may be there are good reasons to go with our gut.

“But if it turns out that people with lower voices are actually poorer leaders, then it’s bad that voters are cuing into this signal if it’s not actually a reliable indicator of leadership ability.”

“Becoming more aware of the biases influencing our behavior at the polls may help us control them or counteract them if they’re indeed leading us to make poor choices,” Klofstad said.

This research was supported by the Office of the Provost and the Fuqua School of Business at Duke.

Comments Off on What does a politician’s voice pitch convey to voters?

August 9th, 2015

By Bar-Ilan University.

The Ackerman Family Bar-Ilan University Expedition to Gath, headed by Prof. Aren Maeir, has discovered the fortifications and entrance gate of the biblical city of Gath of the Philistines, home of Goliath and the largest city in the land during the 10th-9th century BCE, about the time of the “United Kingdom” of Israel and King Ahab of Israel. The excavations are being conducted in the Tel Zafit National Park, located in the Judean Foothills, about halfway between Jerusalem and Ashkelon in central Israel.

This is a view of the remains of the Iron Age city wall of Philistine Gath.

Credit: Prof. Aren Maeir, Director, Ackerman Family Bar-Ilan University Expedition to Gath

Prof. Maeir, of the Martin (Szusz) Department of Land of Israel Studies and Archaeology, said that the city gate is among the largest ever found in Israel and is evidence of the status and influence of the city of Gath during this period. In addition to the monumental gate, an impressive fortification wall was discovered, as well as various buildings in its vicinity, such as a temple and an iron production facility. These features, and the city itself, were destroyed by Hazael, King of Aram Damascus, who besieged and destroyed the site around 830 BCE.

The city gate of Philistine Gath is referred to in the Bible (in I Samuel 21) in the story of David’s escape from King Saul to Achish, King of Gath.

Now in its 20th year, the Ackerman Family Bar-Ilan University Expedition to Gath is a long-term investigation aimed at studying the archaeology and history of one of the most important sites in Israel. Tell es-Safi/Gath is one of the largest tells (ancient ruin mounds) in Israel and was settled almost continuously from the 5th millennium BCE until modern times.

These are views of the Iron Age fortifications of the lower city of Philistine Gath.

Credit: Prof. Aren Maeir, Director, Ackerman Family Bar-Ilan University Expedition to Gath

The archaeological dig is led by Prof. Maeir, along with groups from the University of Melbourne, University of Manitoba, Brigham Young University, Yeshiva University, University of Kansas, Grand Valley State University of Michigan, several Korean universities and additional institutions throughout the world.

Among the most significant findings to date at the site: Philistine temples dating to the 11th through 9th century BCE; evidence of an earthquake in the 8th century BCE, possibly connected to the earthquake mentioned in the Book of Amos 1:1; the earliest decipherable Philistine inscription ever to be discovered, which contains two names similar to the name Goliath; a large assortment of objects of various types linked to Philistine culture; remains relating to the earliest siege system in the world, constructed by Hazael, King of Aram Damascus, around 830 BCE, along with extensive evidence of the subsequent capture and destruction of the city by Hazael, as mentioned in Second Kings 12:18; evidence of the first Philistine settlement in Canaan (around 1200 BCE); different levels of the earlier Canaanite city of Gath; and remains of the Crusader castle “Blanche Garde”, which Richard the Lion-Hearted is known to have visited.

Comments Off on Gath: Biblical Philistine City of Goliath Found by Archaeologists

August 9th, 2015

By Osaka University.

Great progress in creating new fundamental technologies such as medical applications and non-destructive inspection of social infrastructures

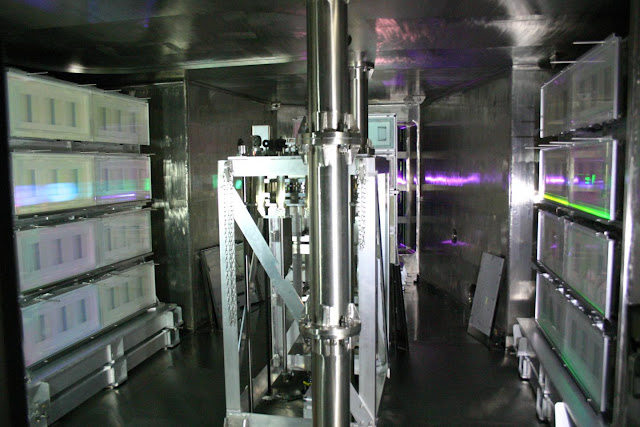

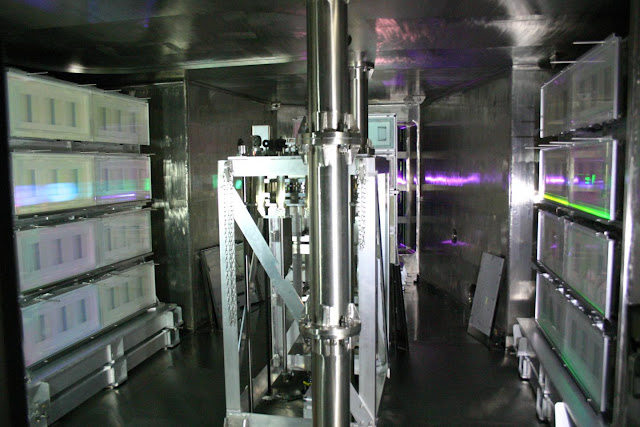

The Institute of Laser Engineering (ILE), Osaka University, has succeeded in upgrading the Petawatt laser “LFEX” to deliver up to 2,000 trillion watts for a duration of one trillionth of a second (roughly 1,000 times the world’s total electric power consumption). With this high-power laser, it is now possible to generate all of the high-energy quantum beams (electrons, ions, gamma rays, neutrons, positrons).

This is a pulse compressor of LFEX, which uses 16 dielectric multilayer film diffraction gratings, each measuring 91 cm x 42 cm.

Credit: Osaka University

With such high-current quantum beams, we can take a big step forward not only in creating new fundamental technologies, such as medical applications and non-destructive inspection of social infrastructure, that contribute to a future of longevity, safety, and security, but also in realizing laser fusion energy triggered by fast ignition.

Accomplishments include:

Enhancement of the Petawatt laser “LFEX” up to 2,000 trillion watts for one trillionth of one second.

High power output implemented by a 4-beam amplifier technology and the world’s highest performance dielectric multilayer diffraction grating of large diameter.

Big step forward for creating such new fundamental technologies as cancer therapy for medical applications and non-destructive inspection of bridges and buildings, to contribute to our future life of longevity, safety, and security, and for the realization of fast ignition as an energy resource.

Background and output of research

Petawatt lasers are used to study basic science and to generate high-energy quantum beams such as neutrons and ions, but only a few facilities in the world have a Petawatt laser. So far, Petawatt lasers elsewhere have had relatively small output energies (up to a few tens of joules). ILE has achieved the world’s largest laser output (1,000 joules or more), dozens of times that of other world-class laser facilities.

The high-power laser LFEX brings together the best of Japanese optical technology and has greatly contributed to improving the technical capabilities of domestic companies in areas such as high-precision polishing to a flatness of 0.1 micrometer on one-meter, large-diameter quartz glass; high-strength multilayer films; large flash lamps; high-charge-density, high-voltage capacitors; and a Faraday rotator using the world’s first superconducting magnet for nuclear magnetic resonance imaging (MRI). In addition, a one-meter-class dielectric multilayer diffraction grating was developed in the LFEX laser project and has been well received worldwide.

This is a panoramic view of the amplifying section of LFEX, which is composed of four laser beams; each beam has a square cross-section of 37 cm x 37 cm. To realize the large-diameter laser beam, we have developed a large optical element.
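As a rough consistency check on the figures quoted above, pulse energy is approximately peak power multiplied by pulse duration. The sketch below treats the pulse as rectangular, which is a simplifying assumption and not something stated in the release; the world electricity figure of about 2 trillion watts on average is likewise an assumed round number used only for illustration.

$$
E \approx P \times \Delta t = \left(2 \times 10^{15}\ \mathrm{W}\right) \times \left(1 \times 10^{-12}\ \mathrm{s}\right) = 2 \times 10^{3}\ \mathrm{J}
$$

$$
\frac{P_{\mathrm{LFEX}}}{P_{\mathrm{world}}} \approx \frac{2 \times 10^{15}\ \mathrm{W}}{2 \times 10^{12}\ \mathrm{W}} = 10^{3}
$$

Under these assumptions the result, on the order of 2,000 joules, agrees with the "1,000 joules or more" output cited for LFEX, and the power ratio matches the "1,000 times" comparison.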

Credit: Osaka University

This high output has been implemented by a 4-beam amplifier technology and the world’s highest performance dielectric multilayer diffraction grating of a large diameter. In addition, to bring out the performance of a Petawatt laser most effectively, the leading edge of the laser pulse must be steep enough. The pulse should rise sharply in time, much as the Tokyo Sky Tree rises straight up from flat ground, rather than like the Tokyo Tower, whose framework spreads out widely at its base. The LFEX laser has succeeded for the first time in suppressing undesirable extra light (noise) ahead of the main pulse to ten orders of magnitude below the pulse peak (10-digit pulse contrast). Relative to the peak, the noise is as small as an influenza virus compared with the full height of the Sky Tree.

A diffraction grating is an optical element that separates light into its spectrum by diffraction from a grating-like pattern. Its effect is the same as that of a prism, which most people have seen split sunlight into various colors. To obtain good diffraction efficiency inexpensively, the surface of a diffraction grating is generally coated with a thin film of gold or silver, but such coatings do not hold up well against intense laser light. A dielectric multilayer film is a coating with high durability against high-intensity light, designed to reflect intense light such as a laser efficiently. A diffraction grating with high laser durability can be made by applying a dielectric multilayer film to the back surface of the grating. In the LFEX laser, the grating is the final optical element, where the laser energy is at its maximum, and it is used to compress the pulse to one trillionth of a second (1 picosecond).

Drawing on all of these advanced high-power laser technologies, we have completed the Petawatt laser “LFEX”, which is now available for studying basic science and practical applications in greater depth than before.
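The virus-to-tower comparison can be checked with a short calculation. The numbers here are assumptions not given in the release: the Tokyo Sky Tree is taken as 634 m tall and an influenza virus as roughly 100 nm across (typical sizes are often quoted in the range of about 80 to 120 nm).

$$
\frac{d_{\mathrm{virus}}}{h_{\mathrm{Sky\ Tree}}} \approx \frac{1 \times 10^{-7}\ \mathrm{m}}{6.34 \times 10^{2}\ \mathrm{m}} \approx 1.6 \times 10^{-10}
$$

With these assumed sizes the ratio comes out at about $10^{-10}$, in line with the ten-orders-of-magnitude pulse contrast described in the text.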

Impact of this research (the significance of this research)

ILE has been promoting a variety of studies using high-power lasers as a joint use and joint research center. The LFEX laser enables us to generate high-energy pulses of quantum beams with large current, and one can expect medical applications such as particle beam cancer therapy, as well as non-destructive inspection of bridges and other structures (defect inspection by gamma-ray beams and neutrons).

Furthermore, it is expected that the fast ignition scheme proposed by ILE, Osaka, will produce fusion energy with a relatively small laser system. The heating laser, which is indispensable for fast ignition, can now be realized with the help of all the advanced technologies used for LFEX. A breakthrough in laser fusion research is thus widely expected.

Comments Off on World-Largest Petawatt Laser Delivers 2,000 Trillion Watts Output

July 21st, 2015

By Alton Parrish.

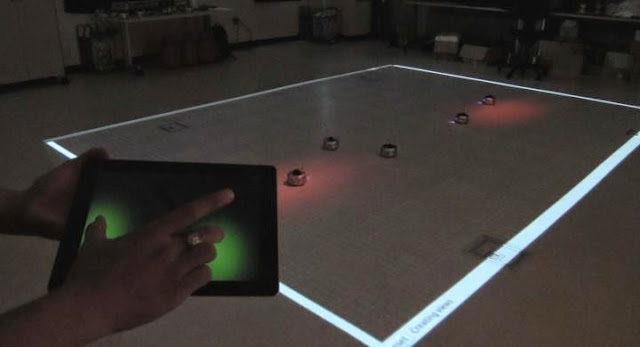

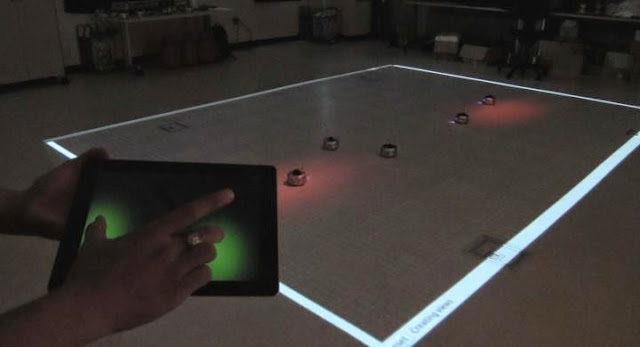

Forget the Vulcan mind-meld of the Star Trek generation: as far as mind control techniques go, bacteria are the next frontier.

Understanding the biochemical sensing between organisms could have far-reaching implications in ecology, biology, and robotics.

Warren Ruder used a mathematical model to demonstrate that bacteria can control the behavior of an inanimate device like a robot.

Credit: Virginia Tech

In a paper published July 16 in Scientific Reports, which is part of the Nature Publishing Group, a Virginia Tech scientist used a mathematical model to demonstrate that bacteria can control the behavior of an inanimate device like a robot.

“Basically we were trying to find out from the mathematical model if we could build a living microbiome on a nonliving host and control the host through the microbiome,” said Ruder, an assistant professor of biological systems engineering in both the College of Agriculture and Life Sciences and the College of Engineering.

“We found that robots may indeed be able to have a working brain,” he said.

Ruder spoke about his development in a recent video.

Scientist shows bacteria could control robots

Credit: Virginia Tech

For future experiments, Ruder is building real-world robots that will have the ability to read bacterial gene expression levels in E. coli using miniature fluorescent microscopes. The robots will respond to bacteria he will engineer in his lab.

On a broad scale, understanding the biochemical sensing between organisms could have far-reaching implications in ecology, biology, and robotics.

In agriculture, bacteria-robot model systems could enable robust studies that explore the interactions between soil bacteria and livestock. In healthcare, further understanding of bacteria’s role in controlling gut physiology could lead to bacteria-based prescriptions to treat mental and physical illnesses. Ruder also envisions droids that could execute tasks such as deploying bacteria to remediate oil spills.

The findings also add to the ever-growing body of research about bacteria in the human body that are thought to regulate health and mood, and especially the theory that bacteria also affect behavior.

The study was inspired by real-world experiments where the mating behavior of fruit flies was manipulated using bacteria, as well as mice that exhibited signs of lower stress when implanted with probiotics.

Ruder’s approach revealed unique decision-making behavior by a bacteria-robot system by coupling and computationally simulating widely accepted equations that describe three distinct elements: engineered gene circuits in E. coli, microfluidic bioreactors, and robot movement.

The bacteria in the mathematical experiment expressed their genetic circuitry by turning either green or red, according to what they ate. In the mathematical model, the theoretical robot was equipped with sensors and a miniature microscope to measure the color of the bacteria, which told it where to go and how fast, depending on the pigment and intensity of the color.

The model also revealed higher order functions in a surprising way. In one instance, as the bacteria were directing the robot toward more food, the robot paused before quickly making its final approach — a classic predatory behavior of higher order animals that stalk prey.

Ruder’s modeling study also demonstrates that these sorts of biosynthetic experiments could be done in the future with a minimal amount of funds, opening up the field to a much larger pool of researchers.

The Air Force Office of Scientific Research funded the mathematical modeling of gene circuitry in E. coli, and the Virginia Tech Student Engineers’ Council has provided funding to move these models and resulting mobile robots into the classroom as teaching tools.

Ruder conducted his research in collaboration with biomedical engineering doctoral student Keith Heyde, who studies phyto-engineering for biofuel synthesis.

“We hope to help democratize the field of synthetic biology for students and researchers all over the world with this model,” said Ruder. “In the future, rudimentary robots and E. coli that are already commonly used separately in classrooms could be linked with this model to teach students from elementary school through Ph.D.-level about bacterial relationships with other organisms.”

Comments Off on Robot with bacteria brain possible

July 4th, 2015

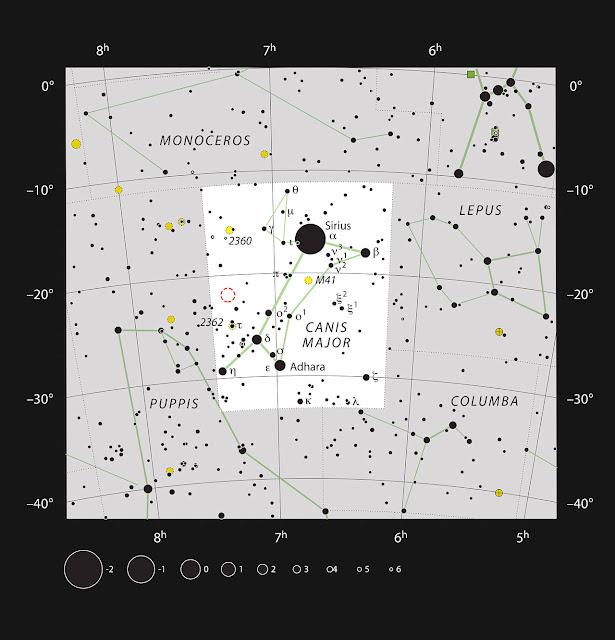

By ESO.

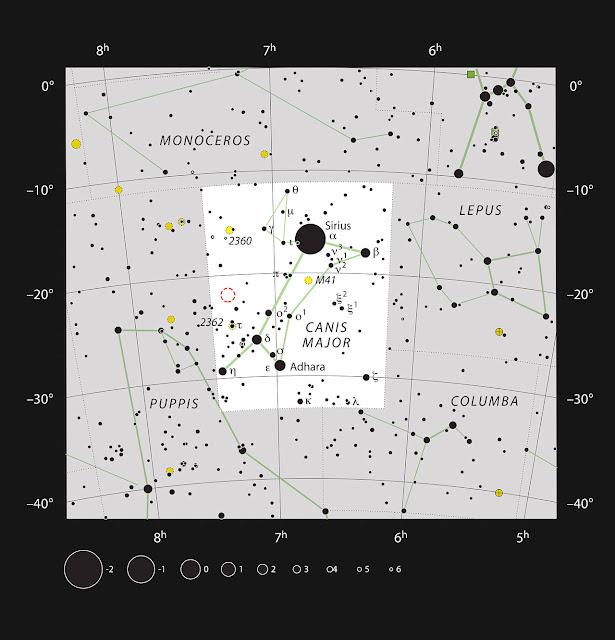

Discovered from England by the tireless observer Sir William Herschel on 20 November 1784, the bright star cluster NGC 2367 lies about 7000 light-years from Earth in the constellation Canis Major. Having only existed for about five million years, most of its stars are young and hot and shine with an intense blue light. This contrasts wonderfully in this new image with the silky-red glow from the surrounding hydrogen gas.

This rich view of an array of colorful stars and gas was captured by the Wide Field Imager (WFI) camera, on the MPG/ESO 2.2-meter telescope at ESO’s La Silla Observatory in Chile. It shows a young open cluster of stars known as NGC 2367, an infant stellar grouping that lies at the center of an immense and ancient structure on the margins of the Milky Way.

Credit: ESO/G. Beccari

Open clusters like NGC 2367 are a common sight in spiral galaxies like the Milky Way, and tend to form in their host’s outer regions. On their travels about the galactic centre, they are affected by the gravity of other clusters, as well as by large clouds of gas that they pass close to. Because open clusters are only loosely bound by gravity to begin with, and because they constantly lose mass as some of their gas is pushed away by the radiation of the young hot stars, these disturbances occur often enough to cause the stars to wander off from their siblings, just as the Sun is believed to have done many years ago. An open cluster is generally expected to survive for a few hundred million years before it is completely dispersed.

This video starts with a view of the southern Milky Way and takes us on a journey towards the open star cluster NGC 2367, not far from the bright star Sirius in the constellation of Canis Major (The Greater Dog). The MPG/ESO 2.2-metre telescope at ESO’s La Silla Observatory in Chile captured this richly colourful view. The brighter cluster stars are very young by stellar standards and still shine with a hot bluish colour.

In the meantime, clusters serve as excellent case studies for stellar evolution. All the constituent stars are born at roughly the same time from the same cloud of material, meaning they can be compared alongside one another with greater ease, allowing their ages to be readily determined and their evolution mapped.

Like many open clusters, NGC 2367 is embedded within an emission nebula, from which its stars were born. The remains show up as wisps and clouds of hydrogen gas, ionised by the ultraviolet radiation being emitted by the hottest stars. What is more unusual is that, as you begin to pan out from the cluster and its nebula, a far more expansive structure is revealed: NGC 2367 and the nebula containing it are thought to be the nucleus of a larger nebula, known as Brand 16, which in turn is only a small part of a huge supershell, known as GS234-02.

This chart shows the constellation of Canis Major (The Greater Dog). Most of the stars visible to the naked eye on a clear night are shown. The location of the bright open cluster, NGC 2367, which can be seen well in a small telescope, is marked with a red circle.

Credit: ESO/IAU and Sky & Telescope

The GS234-02 supershell lies towards the outskirts of our galaxy, the Milky Way. It is a vast structure, spanning hundreds of light-years. It began its life when a group of particularly massive stars, producing strong stellar winds, created individual expanding bubbles of hot gas.

This pan video gives a close-up view of an array of colourful stars and gas that was captured by the Wide Field Imager (WFI) camera, on the MPG/ESO 2.2-metre telescope at ESO’s La Silla Observatory in Chile. It shows a young open cluster of stars known as NGC 2367, an infant stellar grouping that lies at the centre of an immense and ancient structure on the margins of the Milky Way.

These neighbouring bubbles eventually merged to form a superbubble, and the short life spans of the stars at its heart meant that they exploded as supernovae at similar times, expanding the superbubble even further, to the point that it merged with other superbubbles, which is when the supershell was formed. The resulting formation ranks as one of the largest possible structures within a galaxy.

This concentrically expanding system, as ancient as it is enormous, provides a wonderful example of the intricate, interrelated structures that are sculpted in galaxies by the lives and deaths of stars.

Comments Off on Discovery buried in the heart of a giant

June 24th, 2015

By UC Berkeley.

Researchers at University of California, Berkeley have taken inspiration from the cockroach to create a robot that can use its body shape to manoeuvre through a densely cluttered environment.

Fitted with the characteristic rounded shell of the discoid cockroach, the running robot can perform a roll manoeuvre to slip through gaps between grass-like vertical beam obstacles without the need for additional sensors or motors.

(Top) A discoid cockroach rolls its body to the side and quickly maneuvers through narrow gaps between densely cluttered, grass-like beam obstacles. (Bottom) Adding a rounded, ellipsoidal, exoskeletal shell enables the VelociRoACH legged robot to traverse beam obstacles using roll maneuvers, without adding sensors or changing the open-loop control.

Credit: Chen Li. Courtesy of PolyPEDAL Lab, Biomimetic Millisystems Lab, and CiBER, UC Berkeley

It is hoped the robot can inspire the design of future terrestrial robots to use in a wide variety of scenarios, from monitoring the environment to search and rescue operations.

The first results of the robot’s performance have been presented today, 23 June 2015, in IOP Publishing’s journal Bioinspiration & Biomimetics.

Whilst many terrestrial robots have been developed with a view to perform a wide range of tasks by avoiding obstacles, few have been specifically designed to traverse obstacles.

Lead author of the study Chen Li, from the University of California, Berkeley, said: “The majority of robotics studies have been solving the problem of obstacles by avoiding them, which largely depends on using sensors to map out the environment and algorithms that plan a path to go around obstacles.

A discoid cockroach rolls its body to the side and quickly maneuvers through narrow gaps between densely cluttered, grass-like beam obstacles. With its unmodified cuboidal body, the VelociRoACH robot becomes stuck while trying to traverse beam obstacles, because it turns to the left or right as soon as its body contacts a beam. By adding a rounded, ellipsoidal, exoskeletal shell inspired from the cockroach, the robot rolls its body to the side and maneuvers through beam obstacles, without adding sensors or changing the open-loop control.

“However, when the terrain becomes densely cluttered, especially as gaps between obstacles become comparable or even smaller than robot size, this approach starts to run into problems as a clear path cannot be mapped.”

In their study, the researchers used high-speed cameras to study the movement of discoid cockroaches through an artificial obstacle course containing grass-like vertical beams with small spacing. Living on the floor of tropical rainforests, this specific type of cockroach frequently encounters a wide variety of cluttered obstacles, such as blades of grass, shrubs, leaf litter, tree trunks, and fungi.

The cockroaches were fitted with three different artificial shells to see how their movement was affected by body shape when moving through the vertical beams. The shapes of the three shells were: an oval cone with a similar shape to the cockroaches’ body; a flat oval; and a flat rectangle.

When the cockroaches were unmodified, the researchers found that, although they sometimes pushed through the beams or climbed over them, they most frequently used a fast and effective roll manoeuvre to slip through the obstacles. In these instances, the cockroaches rolled their body so that their thin sides could fit through the gaps and their legs could push off the beams to help them manoeuvre through the obstacles.

As their body became less rounded by wearing the three artificial shells, it became harder for the cockroaches to move through the obstacles, because they were less able to perform the fast and effective roll manoeuvre.

After examining the cockroaches, the researchers then tested a small, rectangular, six-legged robot and observed whether it was able to traverse a similar obstacle course.

The researchers found that with a rectangular body the robot could rarely traverse the grass-like beams, and frequently collided with the obstacles and became stuck between them.

When the robot was fitted with the cockroach-inspired rounded shell, it was much more likely to successfully move through the obstacle course using a similar roll manoeuvre to the cockroaches. This adaptive behaviour came about with no change to the robot programming, showing that the intelligent behaviour came from the shell.

“We showed that our robot can traverse grass-like beam obstacles at high probability, without adding any sensory feedback or changes in motor control, thanks to the thin, rounded shell that allows the robot body to roll to reduce terrain resistance,” Li continued. “This is a terrestrial analogy of the streamlined shapes that reduce drag on birds, fish, airplanes and submarines as they move in fluids. We call this ‘terradynamic’ streamlining.”

“There may be other shapes besides the thin, rounded one that are good for other purposes, such as climbing up and over obstacles of other types. Our next steps will be to study a diversity of terrain and animal shapes to discover more terradynamic shapes, and even morphing shapes. These new concepts will enable terrestrial robots to go through various cluttered environments with minimal sensors and simple controls.”

Comments Off on Cockroach-Inspired robot uses body streamlining

June 24th, 2015

By Peter Tarr.

For decades, health-conscious people around the globe have taken antioxidant supplements and eaten foods rich in antioxidants, figuring this was one of the paths to good health and a long life.

Yet clinical trials of antioxidant supplements have repeatedly dashed the hopes of consumers who take them hoping to reduce their cancer risk. Virtually all such trials have failed to show any protective effect against cancer. In fact, in several trials, antioxidant supplementation has been linked with increased rates of certain cancers. In one trial, smokers taking extra beta carotene had higher, not lower, rates of lung cancer.

Drs. Tuveson and Chandel explain why eating foods rich in antioxidants, as well as taking antioxidant supplements, can actually promote cancer, rather than fight or prevent it, as conventional wisdom suggests.

Credit: Cold Spring Harbor Laboratory (CSHL)

In a brief paper appearing today in The New England Journal of Medicine, David Tuveson, M.D., Ph.D., Cold Spring Harbor Laboratory Professor and Director of Research for the Lustgarten Foundation, and Navdeep S. Chandel, Ph.D., of the Feinberg School of Medicine at Northwestern University, propose why antioxidant supplements might not be working to reduce cancer development, and why they may actually do more harm than good.

Their insights are based on recent advances in the understanding of the system in our cells that establishes a natural balance between oxidizing and anti-oxidizing compounds. These compounds are involved in so-called redox (reduction and oxidation) reactions essential to cellular chemistry.

Oxidants like hydrogen peroxide are essential in small quantities and are manufactured within cells. There is no dispute that oxidants are toxic in large amounts, and cells naturally generate their own antioxidants to neutralize them. It has seemed logical to many, therefore, to boost intake of antioxidants to counter the effects of hydrogen peroxide and other similarly toxic “reactive oxygen species,” or ROS, as they are called by scientists, all the more so because cancer cells are known to generate higher levels of ROS to help feed their abnormal growth.

Drs. Tuveson and Chandel propose that taking antioxidant pills or eating vast quantities of foods rich in antioxidants may be failing to show a beneficial effect against cancer because they do not act at the critical site in cells where tumor-promoting ROS are produced – at cellular energy factories called mitochondria. Rather, supplements and dietary antioxidants tend to accumulate at scattered distant sites in the cell, “leaving tumor-promoting ROS relatively unperturbed,” the researchers say.

Quantities of both ROS and natural antioxidants are higher in cancer cells – the paradoxically higher levels of antioxidants being a natural defense by cancer cells to keep their higher levels of oxidants in check, so growth can continue. In fact, say Tuveson and Chandel, therapies that raise the levels of oxidants in cells may be beneficial, whereas those that act as antioxidants may further stimulate the cancer cells. Interestingly, radiation therapy kills cancer cells by dramatically raising levels of oxidants. The same is true of chemotherapeutic drugs – they kill tumor cells via oxidation.

Paradoxically, then, the authors suggest that “genetic or pharmacologic inhibition of antioxidant proteins” – a concept tested successfully in rodent models of lung and pancreatic cancers – may be a useful therapeutic approach in humans. The key challenge, they say, is to identify antioxidant proteins and pathways in cells that are used only by cancer cells and not by healthy cells. Impeding antioxidant production in healthy cells will upset the delicate redox balance upon which normal cellular function depends.

The authors propose new research to profile antioxidant pathways in tumor and adjacent normal cells, to identify possible therapeutic targets.

Comments Off on How Antioxidants Can Accelerate Cancers And Why They Don’t Protect Against Them

June 24th, 2015

When the National Science Foundation (NSF) was founded in 1950, the laser didn’t exist. Some 65 years later, the technology is ubiquitous.

As a tool, the laser has stretched the imaginations of countless scientists and engineers, making possible everything from stunning images of celestial bodies to high-speed communications. Once described as a “solution looking for a problem,” the laser powered and pulsed its way into nearly every aspect of modern life.

Laser light can cool atoms, in this case ytterbium atoms (central sphere), to temperatures so low that the atoms appear nearly motionless. A laser trap made from magnetic fields and laser light can capture the atoms so physicists can study their quantum behaviors.

Credit: E. Edwards, Joint Quantum Institute

For its part, NSF funding enabled research that has translated into meaningful applications in manufacturing, communications, life sciences, defense and medicine. NSF also has played a critical role in training the scientists and engineers who perform this research.

“We enable them [young researchers] at the beginning of their academic careers to get funding and take the next big step,” said Lawrence Goldberg, senior engineering adviser in NSF’s Directorate for Engineering.

Getting started

During the late 1950s and throughout the 1960s, major industrial laboratories run by companies such as Hughes Aircraft Company, AT&T and General Electric supported laser research, as did the Department of Defense. These efforts developed all kinds of lasers–gas, solid-state (based on solid materials), semiconductor (based on electronics), dye and excimer (based on reactive gases).

Single molecule fluorescence imaging enabled researchers to study a cell membrane covered with amyloid-beta, a peptide believed to cause Alzheimer’s disease.

Credit: Robin Johnson, M.D., Ph.D.

Like the first computers, early lasers were often room-size, requiring massive tables that held multiple mirrors, tubes and electronic components. Inefficient and power-hungry, these monoliths challenged even the most dedicated researchers. Undaunted, they refined components and techniques required for smooth operation.

As the 1960s ended, funding for industrial labs began to shrink as companies scaled back or eliminated their fundamental research and laser development programs. To fill the void, the federal government and emerging laser industry looked to universities and NSF.

Despite budget cuts in the 1970s, NSF funded a range of projects that helped improve all aspects of laser performance, from beam shaping and pulse rate to energy consumption. Support also contributed to developing new materials essential for continued progress toward new kinds of lasers. As efficiency improved, researchers began considering how to apply the technology.

Charge of the lightwave

One area in particular, data transmission, gained momentum as the 1980s progressed. NSF’s Lightwave Technology Program in its engineering directorate was critical not only because the research it funded fueled the Internet, mobile devices and other high-bandwidth communications applications, but also because many of the laser advances in this field drove progress in other disciplines.

An important example of this crossover is optical coherence tomography (OCT). Used in the late 1980s in telecommunications to find faults in miniature optical waveguides and optical fibers, this imaging technique was adapted by biomedical researchers in the early 1990s to noninvasively image microscopic structures in the eye. The imaging modality is now commonly used in ophthalmology to image the retina. NSF continues to fund OCT research.

As laser technology matured through the 1990s, applications became more abundant. Lasers made their way to the factory floor (to cut, weld and drill) and the ocean floor (to boost signals in transatlantic communications). The continued miniaturization of lasers and the advent of optical fibers radically altered medical diagnostics as well as surgery.

Focus on multidisciplinary research

In 1996, NSF released its first solicitation solely targeting multidisciplinary optical science and engineering. The initiative awarded $13.5 million via 18 three-year awards. Grantees were selected from 76 proposals and 627 pre-proposals. Over a dozen NSF program areas participated.

The press release announcing the awards described optical science and engineering as “an ‘enabling’ technology” and went on to explain that “for such a sweeping field, the broad approach…emphasizing collaboration between disciplines, is particularly effective. By coordinating program efforts, the NSF has encouraged cross-disciplinary linkages that could lead to major findings, sometimes in seemingly unrelated areas that could have solid scientific as well as economic benefits.”

“There is an advantage in supporting groups that can bring together the right people,” said Denise Caldwell, director of NSF’s Division of Physics. In one such case, she says NSF’s support of the Center for Ultrafast Optical Science (CUOS) at the University of Michigan led to advances in multiple areas including manufacturing, telecommunications and precision surgery.

An artist’s depiction of a coherent (laser-like) x-ray pulse. These rainbows can support extremely short, attosecond light pulses. For comparison, one attosecond is the time it takes for light to travel the length of three hydrogen atoms.

Credit: Tenio Popmintchev and Brad Baxley, JILA, University of Colorado Boulder

During the 1990s, CUOS scientists were developing ultrafast lasers. As they explored femtosecond lasers–ones with pulses one quadrillionth of a second–they discovered that femtosecond lasers drilled cleaner holes than picosecond lasers–ones with pulses one trillionth of a second.

The researchers transferred the technology to the Ford Motor Company. Meanwhile, a young physician at the university heard about the capability and contacted the center. The collaboration between the clinician and CUOS researchers led to IntraLASIK technology used by ophthalmologists for cornea surgery as well as a spin-off company, Intralase (funded with an NSF Small Business Innovation Research grant).

More recently, NSF support of the Engineering Research Center for Extreme Ultraviolet Science and Technology at Colorado State University has given rise to the development of compact ultrafast laser sources in the extreme UV and X-ray spectral regions.

This work is significant because these lasers will now be more widely available to researchers, diminishing the need for access to a large source like a synchrotron. Compact ultrafast sources are opening up entirely new fields of study such as attosecond dynamics, which enables scientists to follow the motion of electrons within molecules and materials.

Identifying new research directions

NSF’s ability to foster collaborations within the scientific community has also enabled it to identify new avenues for research. As laser technology matured in the late 1980s, some researchers began to consider the interaction of laser light with biological material. Sensing this movement, NSF began funding research in this area.

“Optics has been a primary force in fostering this interface,” Caldwell said.

One researcher who saw NSF taking the lead in pushing the frontiers of light-matter interactions was University of Michigan researcher Duncan Steel.

At the time, Steel continued pursuing quantum electronics research while using lasers to enable imaging and spectroscopy of single molecules in their natural environment. Steel and his colleagues were among the first to optically study how molecular self-assembly of proteins affects the neurotoxicity of Alzheimer’s disease.

“New classes of light sources and dramatic changes in detectors and smart imaging opened up new options in biomedical research,” Steel said. “NSF took the initiative to establish a highly interdisciplinary direction that then enabled many people to pursue emergent ideas that were rapidly evolving. That’s one of NSF’s biggest legacies–to create new opportunities that enable scientists and engineers to create and follow new directions for a field.”

Roadmaps for the future

In the mid-1990s, the optical science and engineering research community expressed considerable interest in developing a report to describe the impact the field was having on national needs as well as recommend new areas that might benefit from focused effort from the community.

As a result, in 1998, the National Research Council (NRC) published Harnessing Light: Optical Science and Engineering for the 21st Century. Funded by the Defense Advanced Research Projects Agency, NSF and the National Institute of Standards and Technology (NIST), the 331-page report was the first comprehensive look at the laser juggernaut.

That report was significant because, for the first time, the community focused a lens on its R&D in language that was accessible to the public and to policymakers. It also laid the groundwork for subsequent reports. Fifteen years after Harnessing Light, NSF was the lead funding agency for another NRC report, Optics and Photonics: Essential Technologies for Our Nation.

Widely disseminated through the community’s professional societies, the Optics and Photonics report led to a 2014 report by the National Science and Technology Council’s Fast Track Action Committee on Optics and Photonics, Building a Brighter Future with Optics and Photonics.

The committee, composed of 14 federal agencies and co-chaired by NSF and NIST, would identify cross-cutting areas of optics and photonics research that, through interagency collaboration, could benefit the nation. It was also set to prioritize these research areas for possible federal investment and set long-term goals for federal optics and photonics research.

Developing a long-term, integrated approach

One of the recommendations from the NRC Optics and Photonics report was the creation of a national photonics initiative to identify critical technical priorities for long-term federal R&D funding. To develop these priorities, the initiative would draw on researchers from industry, universities and the government as well as policymakers. Their charge: Provide a more integrated approach to industrial and federal optics and photonics R&D spending.

In just a year, the National Photonics Initiative was formed through the cooperative efforts of the Optical Society of America, SPIE–the international society for optics and photonics, the IEEE Photonics Society, the Laser Institute of America and the American Physical Society Division of Laser Science. One of the first fruits of this forward-looking initiative is a technology roadmap for President Obama’s BRAIN Initiative.

To assess NSF’s own programs and consider future directions, the agency formed an optics and photonics working group in 2013 to develop a roadmap to “lay the groundwork for major advances in scientific understanding and creation of high-impact technologies for the next decade and beyond.”

The working group, led by Goldberg and Charles Ying, from NSF’s Division of Materials Research, inventoried NSF’s annual investment in optics and photonics. Their assessment showed that NSF invests about $300 million each year in the field.

They also identified opportunities for future growth and investment in photonic/electronic integration, biophotonics, the quantum domain, extreme UV and X-ray, manufacturing and crosscutting education and international activities.

As a next step, NSF also formed an optics and photonics advisory subcommittee for its Mathematical and Physical Sciences Directorate Advisory Committee. In its final report released in July 2014, the subcommittee identified seven research areas that could benefit from additional funding, including nanophotonics, new imaging modalities and optics and photonics for astronomy and astrophysics.

That same month, NSF released a “Dear Colleague Letter” to demonstrate the foundation’s growing interest in optics and photonics and to stimulate a pool of proposals, cross-disciplinary in nature, that could help define new research directions.

And so the laser, once itself the main focus of research, takes its place as a device that extends our ability to see.

“To see is a fundamental human drive,” Caldwell said. “If I want to understand a thing, I want to see it. The laser is a very special source of light with incredible capabilities.”

May 26th, 2015

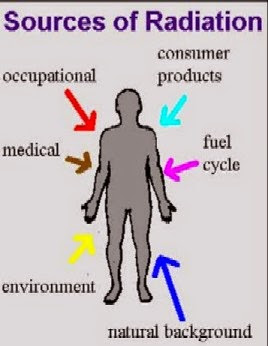

By University of Maryland.

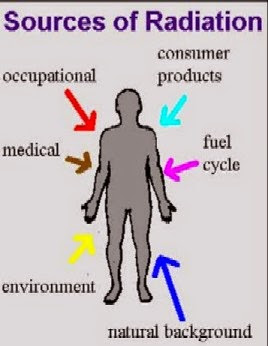

As a result of research performed by scientists at the University of Maryland School of Medicine (UM SOM), the U.S. Food and Drug Administration has approved the use of a drug to treat the deleterious effects of radiation exposure following a nuclear incident. The drug, Neupogen®, is the first ever approved for the treatment of acute radiation injury.

Credit: Oregon State University

The research was done by Thomas J. MacVittie, PhD, professor, and Ann M. Farese, MA, MS, assistant professor, both in the UM SOM Department of Radiation Oncology’s Division of Translational Radiation Sciences. The investigators did their research in a non-human clinical model of high-dose radiation.

“Our research shows that this drug works to increase survival by protecting blood cells,” said Dr. MacVittie, who is considered one of the nation’s leading experts on radiation research. “That is a significant advancement, because the drug can now be used as a safe and effective treatment for the blood cell effects of severe radiation poisoning.”

Radiation damages the bone marrow, and as a result decreases production of infection-fighting white blood cells. Neupogen® counteracts these effects. The drug, which is made by Amgen, Inc., was first approved in 1991 to treat cancer patients receiving chemotherapy. Although doctors may use it “off label” for other indications, the research and the resulting approval would speed up access to and use of the drug in the event of a nuclear incident.

This planning is already under way. In 2013, the Biomedical Advanced Research and Development Authority (BARDA), an arm of the Department of Health and Human Services, bought $157 million worth of Neupogen® for stockpiles around the country in case of nuclear accident or attack.

Neupogen® is one of several “dual-use” drugs that are being examined for their potential use as countermeasures in nuclear incidents. These drugs have everyday medical uses, but also may be helpful in treating radiation-related illness in nuclear events. Dr. MacVittie and Ms. Farese are continuing their research on other dual-use countermeasures to radiation. They are now focusing on remedies for other aspects of radiation injury, including problems with the gastrointestinal tract and the lungs.