Posts by AltonParrish:

- Guinea: 6 currently deployed

- Liberia: 12 currently deployed

- Nigeria: 4 currently deployed

- Sierra Leone: 9 currently deployed

Flu viruses disguised as waste

October 25th, 2014

By Alton Parrish.

Viruses cannot multiply without cellular machinery. Although extensive research into how pathogens invade cells has been conducted for a number of viruses, we do not fully understand how the shell of a virus is cracked open during the onset of infection, thus releasing the viral genome. An ETH Zurich-led research team discovered how this mechanism works for the influenza virus – with surprising results.

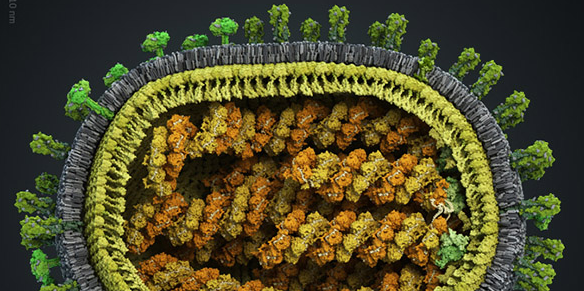

The currently most accurate model of the flu virus shows how the capsid (yellow-green layer) protects the virus RNA.

Viral infections always follow a similar course. The pathogen infiltrates the host cells and uses their replication and protein production machinery to multiply. The virus has to overcome the initial barrier by docking on the surface of the cell membrane. The cell engulfs the virus in a bubble and transports it towards the cell nucleus. During this journey, the solution inside the bubble becomes increasingly acidic. The acidic pH value is ultimately what causes the virus’s outer shell to melt into the membrane of the bubble.

Capsid cracked open like a nut

However, this is only the first part of the process. Like other RNA viruses, the flu virus has to overcome a further obstacle before releasing its genetic code: the few pieces of RNA that make up the genome of the flu virus are packed into a capsid, which keeps the virus stable when moving from cell to cell. The capsid also protects the viral genes against degradation.

Until now, very little has been known about how the capsid of the flu virus is cracked open. A team of researchers from ETH Zurich, the Friedrich Miescher Institute for Biomedical Research in Basel and the Biological Research Center in Szeged (Hungary) has now discovered exactly how this key aspect of flu infection works: the capsid of the influenza A virus imitates a bundle of protein waste – called an aggresome – that the cell must disentangle and dispose of. Deceived in such a way, the cellular waste pickup and disposal complex cracks open the capsid. This discovery has recently been published in the journal Science.

The virus capsid carries cellular waste ‘labels’ on its surface. These waste labels, called unanchored ubiquitin, call into action an enzyme known as histone deacetylase 6 (HDAC6), which binds to ubiquitin. At the same time, HDAC6 also binds to scaffolding motor proteins, pulling the perceived “garbage bundle” apart so that it can be degraded. This mechanical stress causes the capsid to tear, releasing the genetic material of the virus. The viral RNA molecules pass through the pores of the cell nucleus, again with the help of cellular transport factors. Once the viral RNA is within the nucleus, the cell starts to reproduce the viral genome and build new virus proteins.

Tricking the waste pickup and disposal system

This finding came as a great surprise to the researchers. The waste disposal system of a cell is essential for eliminating protein garbage. If the cell fails to dispose of these waste proteins (caused by stress or heat) quickly enough, the waste starts to aggregate. To get rid of these aggregates, the cell activates its machinery, which dismantles the clumps and breaks them down into smaller pieces, so that they can be degraded. It is precisely this mechanism that the influenza virus exploits.

The flu virus gets close to the cell nucleus inside a bubble, opens its capsid (violet ball) using the cell’s waste pickup and disposal system, and then smuggles its genes into the cell nucleus (bottom left).

Image: from Banerjee et al., 2014

The researchers were also surprised by how long the opening of the capsid takes, with the process lasting around 20-30 minutes. The total infection period – from docking onto the cell’s surface to the RNA entering the cell nucleus – is two hours. “The process is gradual and more complex than we thought,” says Yohei Yamauchi, former postdoc with ETH professor Ari Helenius, who detected HDAC6 by screening human proteins for their involvement in viral infection. In a follow-up study, lead author Indranil Banerjee confirmed how the flu virus is programmed to trick HDAC6 into opening its capsid.

A mouse model provided encouraging proof. If the protein HDAC6 was absent, the flu infection was significantly weaker than in wild-type mice: the flu viruses did not have a central docking point for binding to the waste disposal system.

Stopping waste labels from binding

The researchers headed by Ari Helenius, Professor of Biochemistry, have broken new ground with their study. Little research has previously been conducted on how an animal virus opens its capsid. This is one of the most important stages during infection, says the virologist. “We did, however, underestimate the complexity associated with unpacking the capsid,” admits Helenius. Although he wrote a paper on the subject 20 years ago, he did not further pursue his research at that time. He attributes the current breakthrough to new systemic approaches to researching complex systems.

Whether there are therapeutic applications for the findings remains to be seen, as an absence of HDAC6 merely moderates the infection rather than preventing it. The known HDAC6 inhibitors target its two active areas. Blocking the enzymatic activity does not help prevent HDAC6 from binding to ubiquitin, but rather supports the virus by stabilizing the cell’s framework.

“We would need a substance that prevents HDAC6 from binding to ubiquitin, without touching the enzyme,” says Yamauchi. Nevertheless, the structure of HDAC6 indicates that this is possible and follow-up experiments are already planned. The researchers have already filed a patent for this purpose.

Quick mutations

These new findings also underpin one of the main challenges of fighting viruses. Viruses make intelligent use of many processes that are essential for our cells. These processes cannot simply be “switched off”, as the side effects would be severe. Furthermore, viruses mutate very quickly. In the case of the flu medicine Tamiflu, the influenza virus evaded it by a mutation that changed the target protein of the active substance on its surface, thereby rendering the drug useless.

It is possible that other viruses might use the waste processing system to decapsidate or uncoat their DNA or RNA and to infect cells efficiently. Helenius does not plan to conduct further research in this field, however, as ETH Zurich will dissolve the research group upon his retirement.


First 3D map of the hidden universe holds backbone of cosmic web

October 19th, 2014

By Alton Parrish.

Now a team of astronomers led by Khee-Gan Lee, a post-doc at the Max Planck Institute for Astronomy, has created a map of hydrogen absorption revealing a three-dimensional section of the universe 11 billion light-years away – the first time the cosmic web has been mapped at such a vast distance. Since observing to such immense distances is also looking back in time, the map reveals the early stages of cosmic structure formation when the Universe was only a quarter of its current age, during an era when the galaxies were undergoing a major ‘growth spurt’.

The map was created by using faint background galaxies as light sources, against which gas could be seen by the characteristic absorption features of hydrogen. The wavelengths of each hydrogen feature showed the presence of gas at a specific distance from us.

Using the light from faint background galaxies for this purpose had been thought impossible with current telescopes – until Lee carried out calculations that suggested otherwise. To ensure success, Lee and his colleagues obtained observing time at Keck Observatory, home of the two largest and most scientifically productive telescopes in the world.

Although bad weather limited the astronomers to observing for only 4 hours, the data they collected with the LRIS instrument was completely unprecedented. “We were pretty disappointed as the weather was terrible and we only managed to collect a few hours of good data. But judging by the data quality as it came off the telescope, it was clear to me that the experiment was going to work,” said Max Planck’s Joseph Hennawi, who was part of the observing team.

“The data were obtained using the LRIS spectrograph on the Keck I telescope,” Lee said. “With its gargantuan 10m-diameter mirror, this telescope effectively collected enough light from our targeted galaxies that are more than 15 billion times fainter than the faintest stars visible to the naked eye. Since we were measuring the dimming of blue light from these distant galaxies caused by the foreground gas, the thin atmosphere at the summit of Mauna Kea allowed more of this blue light to reach the telescope and be measured by the highly sensitive detectors of the LRIS spectrograph. The data we collected would have taken at least several times longer to obtain on any other telescope.”

Their absorption measurements using 24 faint background galaxies provided sufficient coverage of a small patch of the sky to be combined into a 3D map of the foreground cosmic web. A crucial element was the computer algorithm used to create the 3D map: due to the large amount of data, a naïve implementation of the map-making procedure would require an inordinate amount of computing time.

The resulting map of hydrogen absorption reveals a three-dimensional section of the universe 11 billion light-years away – this is the first time the cosmic web has been mapped at such a vast distance. Since observing to such immense distances is also looking back in time, the map reveals the early stages of cosmic structure formation when the Universe was only a quarter of its current age, during an era when the galaxies were undergoing a major ‘growth spurt’.

The W. M. Keck Observatory operates the largest, most scientifically productive telescopes on Earth. The two 10-meter optical/infrared telescopes near the summit of Mauna Kea on the Island of Hawaii feature a suite of advanced instruments including imagers, multi-object spectrographs, high-resolution spectrographs, integral-field spectroscopy and world-leading laser guide star adaptive optics systems.

The Low Resolution Imaging Spectrometer (LRIS) is a very versatile visible-wavelength imaging and spectroscopy instrument commissioned in 1993 and operating at the Cassegrain focus of Keck I. Since commissioning, it has seen two major upgrades to further enhance its capabilities: the addition of a second, blue arm optimized for shorter wavelengths of light; and the installation of detectors that are much more sensitive at the longest (red) wavelengths.

Keck Observatory is a private 501(c)(3) non-profit organization and a scientific partnership of the California Institute of Technology, the University of California and NASA.


Coffee: If you’re not shaking, you need another cup

October 13th, 2014

By Alton Parrish.

A team of researchers, including one from The University of Western Australia, has found there may be some truth in the slogan: “Coffee: If you’re not shaking, you need another cup.” They’ve identified the genes that determine just how much satisfaction you can get from caffeine.

The results could also help to explain why coffee, which is a major dietary source of caffeine and among the most widely consumed beverages in the world, is implicated in a range of health conditions.

Six new regions of DNA (loci) associated with coffee drinking behaviour are reported in the study published in Molecular Psychiatry.

The findings support the role of caffeine in promoting regular coffee consumption and may point to the molecular mechanisms that underlie why caffeine has different effects on different people.

The researchers included Dr Jennie Hui, the Director of the Busselton Health Study Laboratory and Adjunct Senior Research Fellow in UWA’s School of Population Health.

The study was led by Marilyn Cornelis, a Postdoctoral Fellow at Harvard University, and colleagues who conducted a genome-wide association study of coffee consumption for 120,000 people of European and African-American ancestry.

“The findings highlight the properties of caffeine that give some of us the genetic propensity to consume coffee,” Dr Hui said.

“Some of the gene regions determine the amount of coffee that makes individuals feel they are satisfied psychologically and others physiologically. What this tells us is that there may be molecular mechanisms at work behind the different health and pharmacological effects of coffee and its constituents.”

The authors of the study identified two loci, near genes BDNF and SLC6A4, that potentially reduce the level of satisfaction that we get from caffeine, which may in turn lead to increased consumption.

Other regions near the genes POR and ABCG2 are involved in promoting the metabolism of caffeine.

The authors also identified loci in GCKR and near MLXIPL, genes involved in metabolism but not previously linked to either caffeine metabolism or a behavioural trait, such as coffee drinking.

The authors suggest that variations in GCKR may impact the glucose-sensing process of the brain, which may in turn influence responses to caffeine or some other component of coffee.

However, further studies are required to determine the effects of these two loci on coffee drinking behaviour.

The Busselton Health Study (BHS) is one of the world’s longest running epidemiological research programs. Since 1966, it has contributed to an understanding of many common diseases and health conditions. The unique BHS database is compiled and managed by UWA’s School of Population Health.


40,000 year old Indonesian cave painting stuns historians

October 9th, 2014

By Griffith University.

Cave paintings from the Indonesian island of Sulawesi are at least 40 thousand years old, according to a study published this week in the scientific journal Nature.

This is comparable in age to the oldest known rock art from Europe, long seen as the birthplace of ‘Ice Age’ cave painting and home to the most sophisticated artworks in early human cultural history.

These new findings challenge long-cherished views about the origins of cave art, one of the most fundamental developments in our evolutionary past, according to Maxime Aubert from Griffith University, the dating expert who co-led the study.

“It is often assumed that Europe was the centre of the earliest explosion in human creativity, especially cave art, about 40 thousand years ago,” said Aubert, “but our rock art dates from Sulawesi show that at around the same time on the other side of the world people were making pictures of animals as remarkable as those in the Ice Age caves of France and Spain.”

The prehistoric images are from limestone caves near Maros in southern Sulawesi, a large island east of Borneo. They consist of stencilled outlines of human hands – made by blowing or spraying paint around hands pressed against rock surfaces – and paintings of primitive fruit-eating pigs called babirusas (‘pig-deer’).

These ancient images were first reported more than half a century ago, but there had been no prior attempts to date them.

Scientists determined the age of the paintings by measuring the ratio of uranium and thorium isotopes in small stalactite-like growths, called ‘cave popcorn’, which had formed over the art.

Using this high-precision method, known as U-series dating, samples from 14 paintings at seven caves were shown to range in age from 39.9 to 17.4 thousand years ago. As the cave popcorn grew on top of the paintings the U-series dates only provide minimum ages for the art, which could be far older.
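As a rough illustration of how a measured isotope ratio turns into an age, the sketch below uses a simplified textbook form of the uranium-thorium relation. It assumes a closed system, no thorium-230 present when the mineral formed, and uranium-234 in equilibrium with uranium-238. Under those assumptions the thorium-230 to uranium-238 activity ratio grows as 1 − exp(−λt). The half-life used is an approximate round value, the function names are hypothetical, and real laboratories apply a fuller equation with further corrections, so this is not the study's actual procedure.

```python
import math

# Approximate half-life of thorium-230 in years (assumed round value).
TH230_HALF_LIFE_YR = 75_600.0
LAMBDA_230 = math.log(2) / TH230_HALF_LIFE_YR  # decay constant per year


def activity_ratio_from_age(age_yr: float) -> float:
    """Simplified (230Th/238U) activity ratio after age_yr years.

    Assumes no initial 230Th and 234U/238U in secular equilibrium.
    """
    return 1.0 - math.exp(-LAMBDA_230 * age_yr)


def age_from_activity_ratio(ratio: float) -> float:
    """Invert the simplified relation to get an age in years."""
    if not 0.0 <= ratio < 1.0:
        raise ValueError("ratio must be in [0, 1) for this simplified model")
    return -math.log(1.0 - ratio) / LAMBDA_230


if __name__ == "__main__":
    # Ratio a 39,900-year-old deposit would show under these assumptions.
    ratio = activity_ratio_from_age(39_900)
    print(f"activity ratio: {ratio:.3f}")
    print(f"recovered age: {age_from_activity_ratio(ratio):.0f} years")
```

Because the mineral crust formed after the paint was applied, an age obtained this way is a minimum age for the painting beneath it.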

The most ancient Sulawesi motif dated by the team, a hand stencil made at least ~40 thousand years ago, is now the oldest evidence ever discovered of this widespread form of rock art. A large painting of a female babirusa also yielded a minimum age of 35.4 thousand years, making it one of the earliest known figurative depictions in the world, if not the earliest.

This suggests that the creative brilliance required to produce the beautiful and stunningly life-like portrayals of animals seen in Palaeolithic Europe, such as those from the famous sites of Chauvet and Lascaux, could have particularly deep roots within the human lineage.

There are more than 90 cave art sites in the Maros area, according to Muhammad Ramli and Budianto Hakim, Indonesian co-leaders of the study, and hundreds of individual paintings and stencils, many of which are likely to be tens of thousands of years old.

The local cultural heritage management authority is now implementing a new policy to protect these rock art localities, some of which are already inscribed on the tentative list of World Heritage sites.


Supersonic jet, from Tokyo to New York in 4 hours

October 5th, 2014

By University of Miami.

Imagine flying from New York to Tokyo in only four hours aboard a fuel-efficient supersonic jet that looks like something right out of Star Wars. Such a plane could become reality in the next two to three decades, thanks to the efforts of a University of Miami aerospace engineering professor.

Ge-Cheng Zha’s supersonic, bi-directional flying wing will be able to whisk passengers from New York to Tokyo in four hours.

Credit: University of Miami

Ge-Cheng Zha, along with collaborators from Florida State University, is designing a supersonic, bi-directional flying wing (SBiDir-FW) capable of whisking passengers to destinations in significantly less time than it would take conventional aircraft—and without the sonic boom generated by aircraft breaking the sound barrier.

The project has just been awarded a $100,000 grant from NASA’s prestigious Innovative Advanced Concepts program, allowing Zha and colleagues to continue development of their high-tech, futuristic plane.

“I am glad that this novel concept is recognized by the technological authorities for its scientific merit,” said Zha, a professor in UM’s College of Engineering and principal investigator of the project. “This prize means that NASA rewards audacious thinking.”

NASA selected Zha’s design and the other projects based on their potential to transform future aerospace missions, enable new capabilities, or significantly alter and improve current approaches to launching, building, and operating aerospace systems.

“These selections represent the best and most creative new ideas for future technologies that have the potential to radically improve how NASA missions explore new frontiers,” said Michael Gazarik, director of NASA’s Space Technology Program. “Through the NASA Innovative Advanced Concepts program, NASA is taking the long-term view of technological investment and the advancement that is essential for accomplishing our missions. We are inventing the ways in which next-generation aircraft and spacecraft will change the world and inspiring Americans to take bold steps.”

With his supersonic bi-directional flying wing, Zha hopes to achieve zero sonic boom, low supersonic wave drag, and low fuel consumption for passenger travel.

“I am hoping to develop an environmentally friendly and economically viable airplane for supersonic civil transport in the next 20 to 30 years,” said Zha. “Imagine flying from New York to Tokyo in four hours instead of 15 hours.”
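To get a feel for what a four-hour trip implies, here is a back-of-the-envelope sketch of the average speed required. The airport coordinates, the use of a great-circle route, and the speed of sound at cruise altitude are all rough assumptions added for illustration; none of these figures come from Zha or NASA.

```python
import math

EARTH_RADIUS_KM = 6371.0
# Approximate speed of sound at cruise altitude (about 295 m/s); assumed value.
SPEED_OF_SOUND_KMH = 1062.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Rough coordinates for a New York airport and a Tokyo airport (assumed).
NEW_YORK = (40.64, -73.78)
TOKYO = (35.77, 140.39)

distance_km = great_circle_km(*NEW_YORK, *TOKYO)
speed_4h = distance_km / 4.0    # average speed for a four-hour flight
speed_15h = distance_km / 15.0  # average speed for a fifteen-hour flight

if __name__ == "__main__":
    print(f"distance: {distance_km:.0f} km")
    print(f"4 h flight: {speed_4h:.0f} km/h (about Mach {speed_4h / SPEED_OF_SOUND_KMH:.1f})")
    print(f"15 h flight: {speed_15h:.0f} km/h")
```

Under these assumptions, the four-hour trip calls for an average speed well above twice the speed of sound, while the fifteen-hour figure matches ordinary subsonic cruising.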

Conventional airplanes are composed of a tube-shaped fuselage with two wings inserted and are symmetric along the longitudinal axis. Such a fuselage is designed to hold passengers and cargo, with lift being generated by the wings. “Hence, a conventional airplane is not very efficient and generates a strong sonic boom,” says Zha.

The SBiDir-FW platform is symmetric along both the longitudinal and span axes. It is a flying wing that generates lift everywhere and is able to rotate 90 degrees during flight between supersonic and subsonic speeds, explains Zha.

“No matter how fast a supersonic plane can fly, it needs to take off and land at very low speed, which severely hurts the high-speed supersonic performance for a conventional airplane,” said Zha. “The SBiDir-FW removes this performance conflict by rotating the airplane to fly in two different directions at subsonic and supersonic. Such rotation enables the SBiDir-FW to achieve superior performance at both supersonic and subsonic speeds.”

Innovative Advanced Concepts awards enable scientists to explore basic feasibility and properties of a potential breakthrough concept. The projects were chosen through a peer-review process that evaluated their innovation and how technically viable they are. All are very early in development—20 years or longer from being used on a mission.

Louis Cattafesta, professor of aerospace engineering at Florida State University, and Farrukh Alvi, professor and director of the Florida Center for Advanced Aero-Propulsion at FSU, are also working on the SBiDir-FW.


City of origin of HIV virus found

October 5th, 2014

By Alton Parrish.

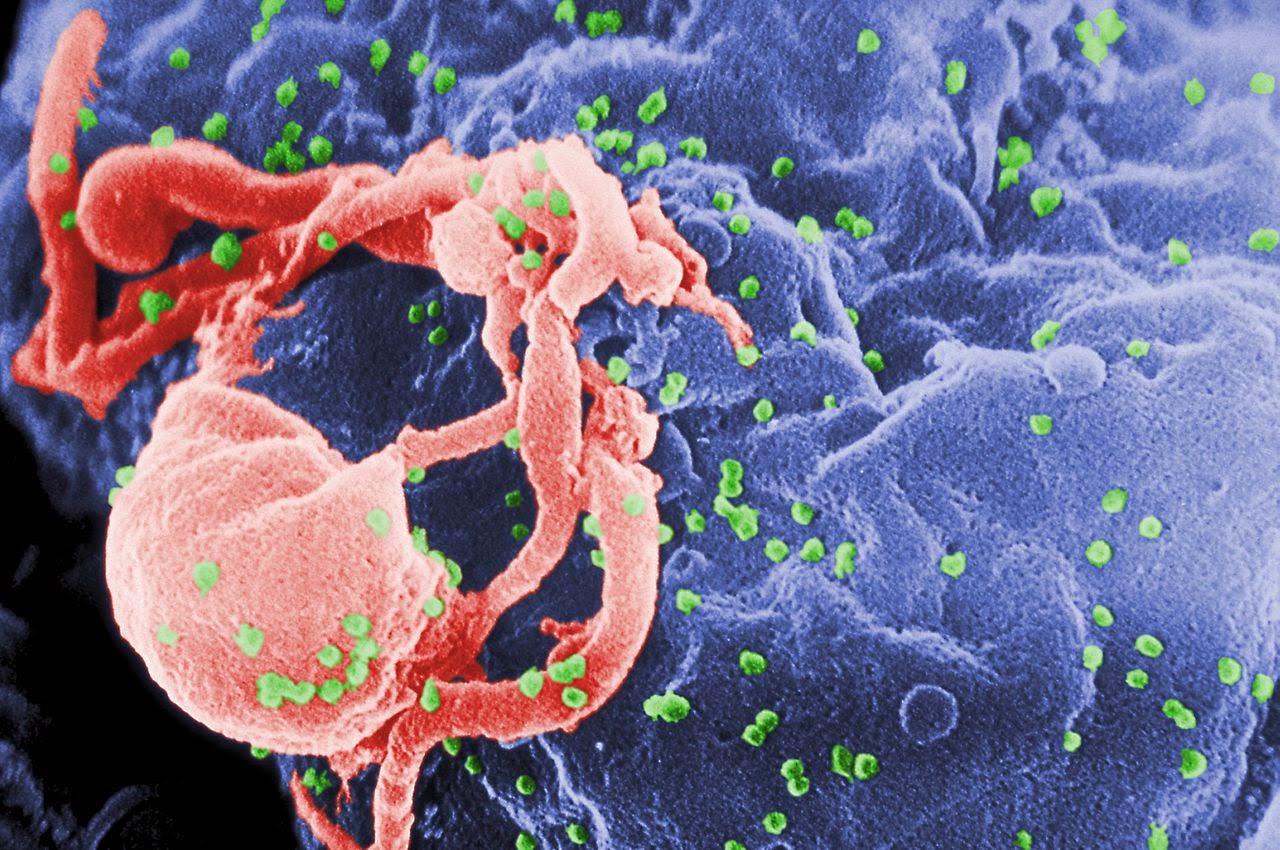

The HIV pandemic with us today is almost certain to have begun its global spread from Kinshasa, the capital of the Democratic Republic of the Congo (DRC), according to a new study.

An international team, led by Oxford University and University of Leuven scientists, has reconstructed the genetic history of the HIV-1 group M pandemic, the event that saw HIV spread across the African continent and around the world, and concluded that it originated in Kinshasa. The team’s analysis suggests that the common ancestor of group M is highly likely to have emerged in Kinshasa around 1920 (with 95% of estimated dates between 1909 and 1930).

HIV is known to have been transmitted from primates and apes to humans at least 13 times but only one of these transmission events has led to a human pandemic. It was only with the event that led to HIV-1 group M that a pandemic occurred, resulting in almost 75 million infections to date. The team’s analysis suggests that, between the 1920s and 1950s, a ‘perfect storm’ of factors, including urban growth, strong railway links during Belgian colonial rule, and changes to the sex trade, combined to see HIV emerge from Kinshasa and spread across the globe.

A report of the research is published in this week’s Science.

‘Until now most studies have taken a piecemeal approach to HIV’s genetic history, looking at particular HIV genomes in particular locations,’ said Professor Oliver Pybus of Oxford University’s Department of Zoology, a senior author of the paper.

‘Our study required the development of a statistical framework for reconstructing the spread of viruses through space and time from their genome sequences,’ said Professor Philippe Lemey of the University of Leuven’s Rega Institute, another senior author of the paper. ‘Once the pandemic’s spatiotemporal origins were clear they could be compared with historical data and it became evident that the early spread of HIV-1 from Kinshasa to other population centres followed predictable patterns.’

One of the factors the team’s analysis suggests was key to the HIV pandemic’s origins was the DRC’s transport links, in particular its railways, that made Kinshasa one of the best connected of all central African cities.

‘Data from colonial archives tells us that by the end of the 1940s over one million people were travelling through Kinshasa on the railways each year,’ said Dr Nuno Faria of Oxford University’s Department of Zoology, first author of the paper. ‘Our genetic data tells us that HIV very quickly spread across the Democratic Republic of the Congo (a country the size of Western Europe), travelling with people along railways and waterways to reach Mbuji-Mayi and Lubumbashi in the extreme South and Kisangani in the far North by the end of the 1930s and early 1950s. This helped establish early secondary foci of HIV-1 transmission in regions that were well connected to southern and eastern African countries. We think it is likely that the social changes around the independence in 1960 saw the virus ‘break out’ from small groups of infected people to infect the wider population and eventually the world.’

It had been suggested that demographic growth or genetic differences between HIV-1 group M and other strains might be major factors in the establishment of the HIV pandemic. However, the team’s evidence suggests that, alongside transport, social changes such as the changing behaviour of sex workers, and public health initiatives against other diseases that led to the unsafe use of needles, may have contributed to turning HIV into a full-blown epidemic – supporting ideas originally put forward by study co-author Jacques Pepin from the Université de Sherbrooke, Canada.

Professor Oliver Pybus said: ‘Our research suggests that following the original animal to human transmission of the virus (probably through the hunting or handling of bush meat) there was only a small ‘window’ during the Belgian colonial era for this particular strain of HIV to emerge and spread into a pandemic. By the 1960s transport systems, such as the railways, that enabled the virus to spread vast distances were less active, but by that time the seeds of the pandemic were already sown across Africa and beyond.’

The team says that more research is needed to understand the role different social factors may have played in the origins of the HIV pandemic; in particular research on archival specimens to study the origins and evolution of HIV, and research into the relationship between the spread of Hepatitis C and the use of unsafe needles as part of public health initiatives may give further insights into the conditions that helped HIV to spread so widely.


Artificial cells act like the real thing

September 25th, 2014

By Alton Parrish.

Imitation, they say, is the sincerest form of flattery, but mimicking the intricate networks and dynamic interactions that are inherent to living cells is difficult to achieve outside the cell.

(L-R) Eyal Karzbrun, Alexandra Tayar and Prof. Roy Bar-Ziv

Credit: Weizmann Institute of Science

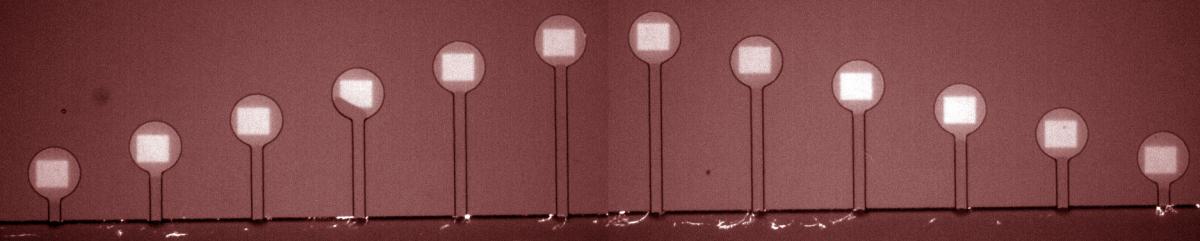

The system, designed by PhD students Eyal Karzbrun and Alexandra Tayar in the lab of Prof. Roy Bar-Ziv of the Weizmann Institute’s Materials and Interfaces Department, in collaboration with Prof. Vincent Noireaux of the University of Minnesota, comprises multiple compartments “etched” onto a biochip.

By coding two regulatory genes into the sequence, the scientists created a protein synthesis rate that was periodic, spontaneously switching from periods of being “on” to “off.”

Fluorescent image of DNA (white squares) patterned in circular compartments connected by capillary tubes to the cell-free extract flowing in the channel at bottom. Compartments are 100 micrometers in diameter

Credit: Weizmann Institute of Science

The scientists then asked whether the artificial cells actually communicate and interact with one another like real cells. Indeed, they found that the synthesized proteins that diffused through the array of interconnected compartments were able to regulate genes and produce new proteins in compartments farther along the network. In fact, this system resembles the initial stages of morphogenesis – the biological process that governs the emergence of the body plan in embryonic development.

With the artificial cell system, according to Bar-Ziv, one can, in principle, encode anything: “Genes are like Lego in which you can mix and match various components to produce different outcomes; you can take a regulatory element from E. coli that naturally controls gene X, and produce a known protein; or you can take the same regulatory element but connect it to gene Y instead to get different functions that do not naturally occur in nature.”


Consumers eat more mercury than previously thought

September 25th, 2014

By Alton Parrish.

New measurements from fish purchased at retail seafood counters in 10 different states show the extent to which mislabeling can expose consumers to unexpectedly high levels of mercury, a harmful pollutant.

Fishery stock “substitutions”—which falsely present a fish of the same species, but from a different geographic origin—are the most dangerous mislabeling offense, according to new research by University of Hawai‘i at Mānoa scientists.

Chilean sea bass fillet

Photo courtesy Flickr user Artizone

“Accurate labeling of seafood is essential to allow consumers to choose sustainable fisheries,” said UH Mānoa biologist Peter B. Marko, lead author of the new study published in the scientific journal PLOS One. “But consumers also rely on labels to protect themselves from unhealthy mercury exposure. Seafood mislabeling distorts the true abundance of fish in the sea, defrauds consumers, and can cause unwanted exposure to harmful pollutants such as mercury.”

The study included two kinds of fish: those labeled as Marine Stewardship Council- (MSC-) certified Chilean sea bass, and those labeled simply as Chilean sea bass (uncertified). The MSC-certified version is supposed to be sourced from the Southern Ocean waters of South Georgia, near Antarctica, far away from man-made sources of pollution. MSC-certified fish is often favored by consumers seeking sustainably harvested seafood but is also potentially attractive given its consistently low levels of mercury.

In a previous study, the scientists had determined that fully 20 percent of fish purchased as Chilean sea bass were not genetically identifiable as such. Further, of those Chilean sea bass positively identified using DNA techniques, 15 percent had genetic markers that indicated that they were not sourced from the South Georgia fishery.

In the new study, the scientists used the same fish samples to collect detailed mercury measurements. When they compared the mercury in verified, MSC-certified sea bass with the mercury levels of verified, non-certified sea bass, they found no significant difference in the levels. That’s not the story you would have expected based on what is known about geographic patterns of mercury accumulation in Chilean sea bass.

Fish market in Oahu’s Chinatown

Photo courtesy Flickr user Michelle Lee.

“What’s happening is that the species are being substituted,” Marko explained. “The ones that are substituted for MSC-certified Chilean sea bass tend to have very low mercury, whereas those substituted for uncertified fish tend to have very high mercury. These substitutions skew the pool of fish used for MSC comparison purposes, making certified and uncertified fish appear to be much more different than they actually are.”

But there’s another confounding factor. Even within the verified, MSC-certified Chilean sea bass samples, certain fish had very high mercury levels—up to 2 or 3 times higher than expected, and sometimes even greater than import limits to some countries.

Marko and his team again turned to genetics to learn more about these fishes’ true nature. “It turns out that the fish with unexpectedly high mercury originated from some fishery other than the certified fishery in South Georgia,” said Marko. “Most of these fish had mitochondrial DNA that indicated they were from Chile. Thus, fishery stock substitutions are also contributing to the pattern by making MSC-certified fish appear to have more mercury than they really should have.”

The bottom line: Most consumers already know that mercury levels vary between species, and many public outreach campaigns have helped educate the public about which fish species to minimize or avoid. Less appreciated is the fact that mercury varies considerably within a species.

“Because mercury accumulation varies within a species’ geographic range, according to a variety of environmental factors, the location where the fish is harvested matters a great deal,” Marko said.

“Although on average MSC-certified fish is a healthier option than uncertified fish, with respect to mercury contamination, our study shows that fishery-stock substitutions can result in a larger proportional increase in mercury,” Marko said. “We recommend that consumer advocates take a closer look at the variation in mercury contamination depending on the geographic source of the fishery stock when they consider future seafood consumption guidelines.”


13 World Mysteries Without Explanation

September 24th, 2014

Chinese mosaic lines

These strange lines are found at coordinates 40°27’28.56″N, 93°23’34.42″E. There isn’t much information available on these strange yet beautiful mosaic lines carved in the desert of Gansu province in China.

Some records indicate they were created in 2004, but nothing seems official. Of note, these lines are somewhat near the Mogao Caves, which are a World Heritage Site. The lines span a huge distance and yet still retain their linear proportions despite the curvature of the rough terrain.

Unexplained stone doll

The July 1889 find in Nampa, Idaho, of a small human figure during a well-drilling operation caused intense scientific interest last century. Unmistakably made by human hands, it was found at a depth of 320 feet, which would place its age far before the arrival of man in this part of the world.

The find has never been challenged except to say that it was impossible. creationism.org

The first stone calendar

In the Sahara Desert in Egypt lie the oldest known astronomically aligned stones in the world: Nabta. Over one thousand years before the creation of Stonehenge, people built a stone circle and other structures on the shoreline of a lake that has long since dried up. Over 6,000 years ago, stone slabs three meters high were dragged over a kilometer to create the site. Shown above is one of the stones that remains. Although at present the western Egyptian desert is totally dry, this was not the case in the past. There is good evidence that there were several humid periods in the past (when up to 500 mm of rain would fall per year), the most recent one during the last interglacial and early last glaciation periods, which stretched between 130,000 and 70,000 years ago.

During this time, the area was a savanna and supported numerous animals such as extinct buffalo and large giraffes, varieties of antelope and gazelle. Beginning around the 10th millennium BC, this region of the Nubian Desert began to receive more rainfall, filling a lake. Early people may have been attracted to the region due to the source of water. Archaeological findings may indicate human occupation in the region dating to at least somewhere around the 10th and 8th millennia BC.

300 million year old iron screw

In the summer of 1998, Russian scientists investigating the remains of a meteorite in an area 300 km southwest of Moscow discovered a piece of rock that enclosed an iron screw. Geologists estimate that the age of the rock is 300-320 million years.

At that time there were no intelligent life forms on Earth, not even dinosaurs. The screw, whose head and nut are clearly visible, has a length of about cm and a diameter of about three millimeters.

Ancient rocket ship

This ancient cave painting from Japan is dated to before 5000 BC.

Sliding stones

Even NASA cannot explain it. It’s best to gaze in wonder at the sliding rocks on this dry lake bed in Death Valley National Park. Racetrack Playa is almost completely flat, 2.5 miles from north to south and 1.25 miles from east to west, and covered with cracked mud.

The rocks, some weighing hundreds of pounds, slide across the sediment, leaving furrows in their wakes, but no one has actually witnessed it.

Pyramid power

Teotihuacan, Mexico. Embedded in the walls of this ancient Mexican city are large sheets of mica. The closest place to quarry mica is located in Brazil, thousands of miles away. Mica is now used in technology and energy production so the question raised is why did the builders go to such extremes to incorporate this mineral into the building of their city.

Were these ancient architects harnessing some long forgotten source of energy in order to power their city?

Dog Deaths

Dog suicides at The Overtoun Bridge, near Milton, Dumbarton, Scotland. Built in 1859, the Overtoun Bridge has become famous for the number of unexplained instances in which dogs have apparently committed suicide by leaping off of it.

The incidents were first recorded around the 1950s or 1960s, when it was noticed that dogs – usually long-nosed breeds like Collies – would suddenly and unexpectedly leap off the bridge and fall fifty feet to their deaths.

Fossilized giant

The fossilized Irish giant from 1895 is over 12 feet tall. The giant was discovered during a mining operation in Antrim, Ireland. This picture is courtesy of the British Strand magazine of December 1895. Height, 12 foot 2 inches; girth of chest, 6 foot 6 inches; length of arms, 4 foot 6 inches. There are six toes on the right foot.

The six fingers and toes remind some people of the Bible passage 2 Samuel 21:20: “And there was yet a battle in Gath, where was a man of great stature, that had on every hand six fingers, and on every foot six toes, four and twenty in number; and he also was born to the giant.”

Pyramid of Atlantis?

Scientists continue to explore the ruins of megaliths in the so-called Yucatan channel near Cuba. They have been found for many miles along the coast. The American archaeologists who discovered this place immediately declared that they had found Atlantis (not for the first time in the history of underwater archaeology).

Now it is occasionally visited by scuba divers; everyone else who is interested can only enjoy surveys and computer reconstructions of the submerged, millennia-old city.

Giants in Nevada

A Nevada Indian legend tells of 12-foot red-haired giants that lived in the area when the Native Americans arrived. The story has them killing off the giants at a cave. Excavations of guano in 1911 turned up this human jaw. Here it is compared to a casting of a normal man’s jawbone.

In 1931 two skeletons were found in a lake bed. One was 8′ tall, the other just under 10′ tall.

Inexplicable Wedge

Lolladoff plate


First indirect evidence of so-far undetected strange Baryons

September 18th, 2014

By Alton Parrish.

RHIC is one of just two places in the world where scientists can create and study a primordial soup of unbound quarks and gluons—akin to what existed in the early universe some 14 billion years ago. The research is helping to unravel how these building blocks of matter became bound into hadrons, particles composed of two or three quarks held together by gluons, the carriers of nature’s strongest force.

Added Berndt Mueller, Associate Laboratory Director for Nuclear and Particle Physics at Brookhaven, “This finding is particularly remarkable because strange quarks were one of the early signatures of the formation of the primordial quark-gluon plasma. Now we’re using this QGP signature as a tool to discover previously unknown baryons that emerge from the QGP and could not be produced otherwise.”

The evidence comes from an effect on the thermodynamic properties of the matter nuclear physicists can detect coming out of collisions at RHIC. Specifically, the scientists observe certain more-common strange baryons (omega baryons, cascade baryons, lambda baryons) “freezing out” of RHIC’s quark-gluon plasma at a lower temperature than would be expected if the predicted extra-heavy strange baryons didn’t exist.

“It’s similar to the way table salt lowers the freezing point of liquid water,” said Mukherjee. “These ‘invisible’ hadrons are like salt molecules floating around in the hot gas of hadrons, making other particles freeze out at a lower temperature than they would if the ‘salt’ wasn’t there.”

To see the evidence, the scientists performed calculations using lattice QCD, a technique that uses points on an imaginary four-dimensional lattice (three spatial dimensions plus time) to represent the positions of quarks and gluons, and complex mathematical equations to calculate interactions among them, as described by the theory of quantum chromodynamics (QCD).

“The calculations tell you where you have bound or unbound quarks, depending on the temperature,” Mukherjee said.

The scientists were specifically looking for fluctuations of conserved baryon number and strangeness and exploring how the calculations fit with the observed RHIC measurements at a wide range of energies.

The calculations show that inclusion of the predicted but “experimentally uncharted” strange baryons fits better with the data, providing the first evidence that these so-far unobserved particles exist and exert their effect on the freeze-out temperature of the observable particles.

These findings are helping physicists quantitatively plot the points on the phase diagram that maps out the different phases of nuclear matter, including hadrons and quark-gluon plasma, and the transitions between them under various conditions of temperature and density.

“To accurately plot points on the phase diagram, you have to know what the contents are on the bound-state, hadron side of the transition line—even if you haven’t seen them,” Mukherjee said. “We’ve found that the higher mass states of strange baryons affect the production of ground states that we can observe. And the line where we see the ordinary matter moves to a lower temperature because of the multitude of higher states that we can’t see.”

The research was carried out by the Brookhaven Lab’s Lattice Gauge Theory group, led by Frithjof Karsch, in collaboration with scientists from Bielefeld University, Germany, and Central China Normal University. The supercomputing calculations were performed using GPU-clusters at DOE’s Thomas Jefferson National Accelerator Facility (Jefferson Lab), Bielefeld University, Paderborn University, and Indiana University with funding from the Scientific Discovery through Advanced Computing (SciDAC) program of the DOE Office of Science (Nuclear Physics and Advanced Scientific Computing Research), the Federal Ministry of Education and Research of Germany, the German Research Foundation, the European Commission Directorate-General for Research & Innovation and the GSI BILAER grant. The experimental program at RHIC is funded primarily by the DOE Office of Science.

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.


Synapse Program Develops Advanced Brain-Inspired Chip

September 16th, 2014

By DARPA.

New chip design mimics brain’s power-saving efficiency; uses 100x less power for complex processing than state-of-the-art chips

DARPA-funded researchers have developed one of the world’s largest and most complex computer chips ever produced—one whose architecture is inspired by the neuronal structure of the brain and requires only a fraction of the electrical power of conventional chips.

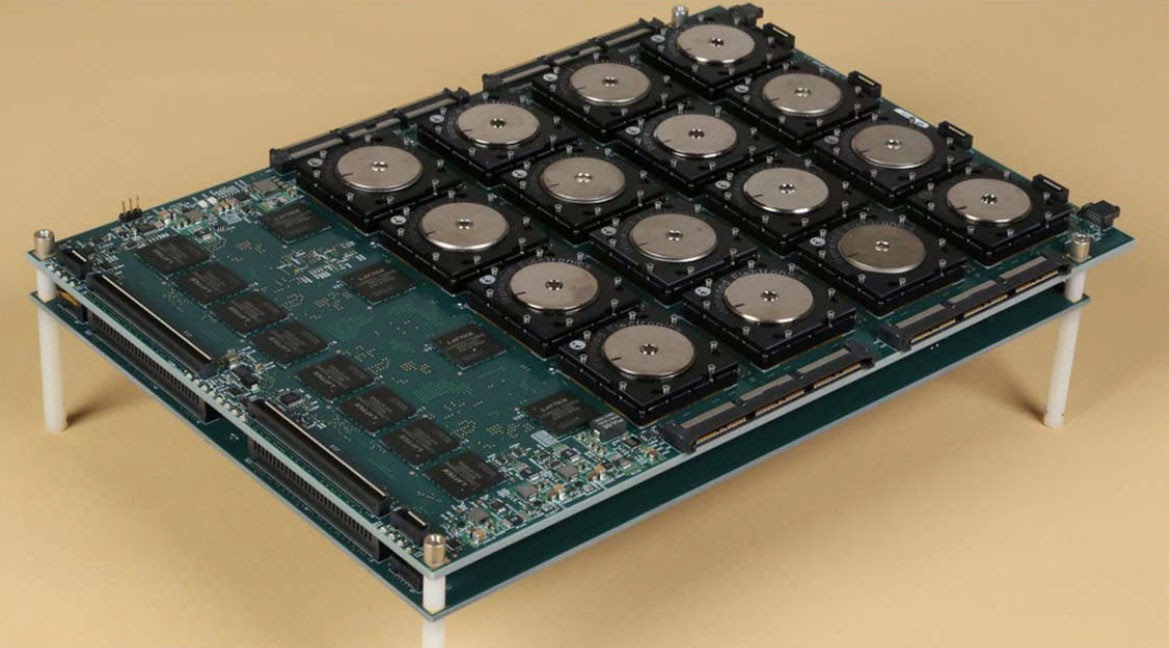

A circuit board shows 16 of the new brain-inspired chips in a 4 x 4 array along with interface hardware. The board is being used to rapidly analyze high-resolution images.

Courtesy: IBM

Designed by researchers at IBM in San Jose, California, under DARPA’s Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) program, the chip is loaded with more than 5 billion transistors and boasts more than 250 million “synapses,” or programmable logic points, analogous to the connections between neurons in the brain. That’s still orders of magnitude fewer than the number of actual synapses in the brain, but a giant step toward making ultra-high performance, low-power neuro-inspired systems a reality.

Many tasks that people and animals perform effortlessly, such as perception and pattern recognition, audio processing and motor control, are difficult for traditional computing architectures to do without consuming a lot of power. Biological systems consume much less energy than current computers attempting the same tasks. The SyNAPSE program was created to speed the development of a brain-inspired chip that could perform difficult perception and control tasks while at the same time achieving significant energy savings.

The SyNAPSE-developed chip, which can be tiled to create large arrays, has one million electronic “neurons” and 256 million electronic synapses between neurons. Built on Samsung Foundry’s 28nm process technology, the 5.4 billion transistor chip has one of the highest transistor counts of any chip ever produced.

Each chip consumes less than 100 milliwatts of electrical power during operation. When applied to benchmark tasks of pattern recognition, the new chip achieved two orders of magnitude in energy savings compared to state-of-the-art traditional computing systems.
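The figures quoted for the chip lend themselves to some quick arithmetic. The sketch below uses only numbers stated in the article (one million neurons, 256 million synapses, under 100 milliwatts per chip, a 16-chip board); the variable names are illustrative, and the 100-milliwatt figure is treated as an upper bound rather than a measured value.

```python
# Back-of-envelope arithmetic from figures quoted in the article.
# Variable names are illustrative, not part of any DARPA or IBM specification.
NEURONS_PER_CHIP = 1_000_000
SYNAPSES_PER_CHIP = 256_000_000
MAX_POWER_PER_CHIP_MW = 100   # "less than 100 milliwatts", used as an upper bound
CHIPS_ON_BOARD = 16           # the 4 x 4 array on the circuit board

# Average number of synapses attached to each electronic neuron.
synapses_per_neuron = SYNAPSES_PER_CHIP // NEURONS_PER_CHIP

# Totals for the 16-chip board, assuming the chips simply tile.
board_neurons = NEURONS_PER_CHIP * CHIPS_ON_BOARD
board_synapses = SYNAPSES_PER_CHIP * CHIPS_ON_BOARD
board_power_upper_bound_mw = MAX_POWER_PER_CHIP_MW * CHIPS_ON_BOARD

# "Two orders of magnitude" in energy savings is a factor of 10^2.
energy_saving_factor = 10 ** 2

print(f"synapses per neuron: {synapses_per_neuron}")
print(f"board neurons: {board_neurons:,}")
print(f"board synapses: {board_synapses:,}")
print(f"board power, upper bound: {board_power_upper_bound_mw} mW")
print(f"energy saving factor: {energy_saving_factor}x")
```

On these numbers, a full 16-chip board would hold 16 million neurons and about 4.1 billion synapses while drawing less than 1.6 watts for the chips themselves; the interface hardware is not included.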

The high energy efficiency is achieved, in part, by distributing data and computation across the chip, alleviating the need to move data over large distances. In addition, the chip runs in an asynchronous manner, processing and transmitting data only as required, similar to how the brain works. The new chip’s high energy efficiency makes it a candidate for defense applications such as mobile robots and remote sensors where electrical power is limited.

“Computer chip design is driven by a desire to achieve the highest performance at the lowest cost. Historically, the most important cost was that of the computer chip. But Moore’s law—the exponentially decreasing cost of constructing high-transistor-count chips—now allows computer architects to borrow an idea from nature, where energy is a more important cost than complexity, and focus on designs that gain power efficiency by sparsely employing a very large number of components to minimize the movement of data.

“IBM’s chip, which is by far the largest one yet made that exploits these ideas, could give unmanned aircraft or robotic ground systems with limited power budgets a more refined perception of the environment, distinguishing threats more accurately and reducing the burden on system operators,” said Gill Pratt, DARPA program manager.

“Our troops often are in austere environments and must carry heavy batteries to power mobile devices, sensors, radios and other electronic equipment. Air vehicles also have very limited power budgets because of the impact of weight. For both of these environments, the extreme energy efficiency achieved by the SyNAPSE program’s accomplishments could enable a much wider range of portable computing applications for defense.”

Another potential application for the SyNAPSE-developed chip is neuroscience modelling. The large number of electronic neurons and synapses in each chip and the ability to tile multiple chips could lead to the development of complex, networked neuromorphic simulators for testing network models in neurobiology and deepening current understanding of brain function.


What Lit Up The Universe

September 8th, 2014

By Alton Parrish.

Credit: Andrew Pontzen/Fabio Governato

New research from UCL shows we will soon uncover the origin of the ultraviolet light that bathes the cosmos, helping scientists understand how galaxies were built.

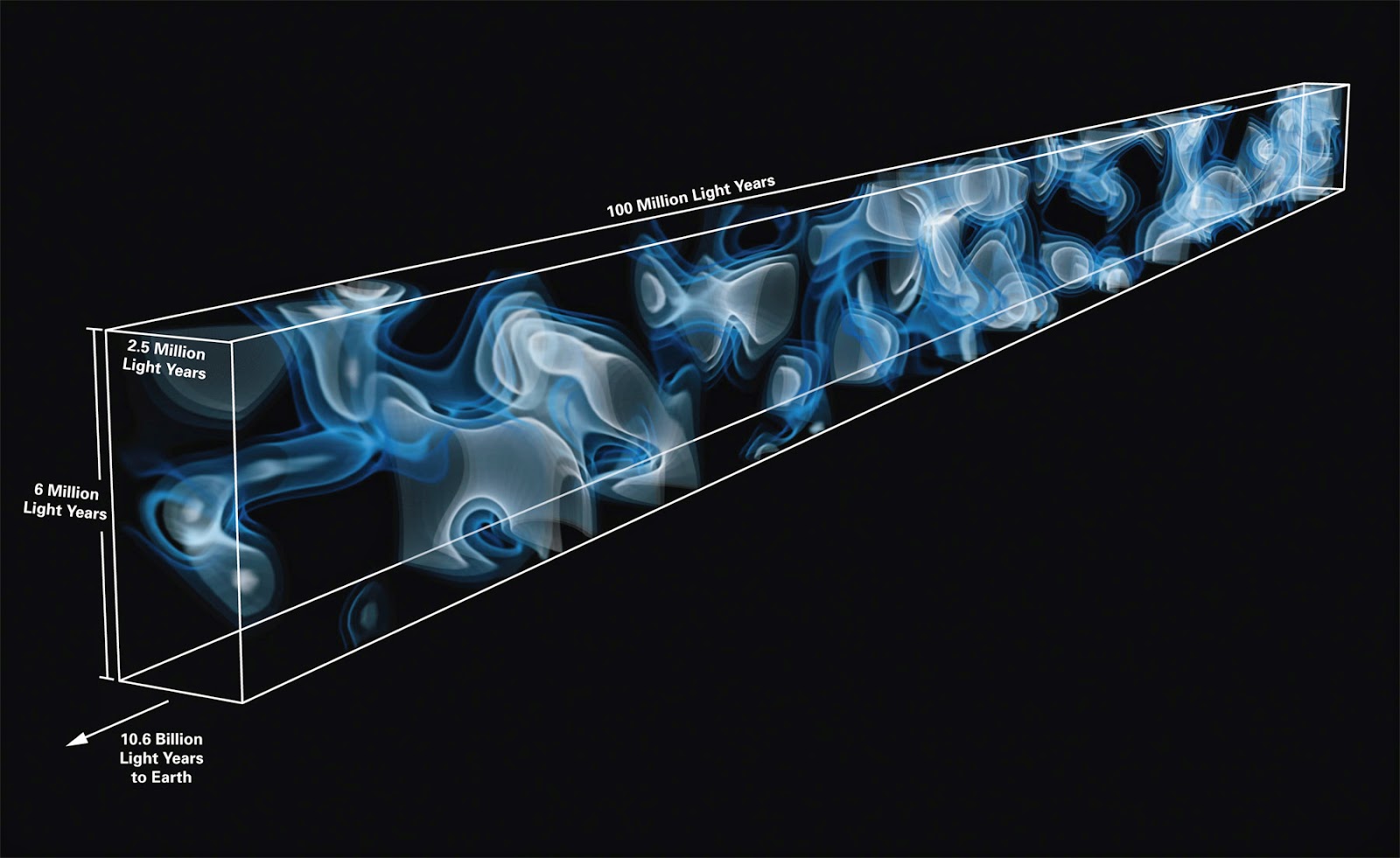

A computer model shows one scenario for how light is spread through the early universe on vast scales (more than 50 million light years across). Astronomers will soon know whether or not these kinds of computer models give an accurate portrayal of light in the real cosmos.

The study published today in The Astrophysical Journal Letters by UCL cosmologists Dr Andrew Pontzen and Dr Hiranya Peiris (both UCL Physics & Astronomy), together with collaborators at Princeton and Barcelona Universities, shows how forthcoming astronomical surveys will reveal what lit up the cosmos.

“Which produces more light? A country’s biggest cities or its many tiny towns?” asked Dr Pontzen, lead author of the study. “Cities are brighter, but towns are far more numerous. Understanding the balance would tell you something about the organisation of the country. We’re posing a similar question about the universe: does ultraviolet light come from numerous but faint galaxies, or from a smaller number of quasars?”

Quasars are the brightest objects in the Universe; their intense light is generated by gas as it falls towards a black hole. Galaxies can contain millions or billions of stars, but are still dim by comparison. Understanding whether the numerous small galaxies outshine the rare, bright quasars will provide insight into the way the universe built up today’s populations of stars and planets. It will also help scientists properly calibrate their measurements of dark energy, the agent thought to be accelerating the universe’s expansion and determining its far future.

The new method proposed by the team builds on a technique already used by astronomers in which quasars act as beacons to understand space. The intense light from quasars makes them easy to spot even at extreme distances, up to 95% of the way across the observable universe. The team think that studying how this light interacts with hydrogen gas on its journey to Earth will reveal the main sources of illumination in the universe, even if those sources are not themselves quasars.

Two types of hydrogen gas are found in the universe – a plain, neutral form and a second charged form which results from bombardment by UV light. These two forms can be distinguished by studying a particular wavelength of light called ‘Lyman-alpha’ which is only absorbed by the neutral type of hydrogen. Scientists can see where in the universe this ‘Lyman-alpha’ light has been absorbed to map the neutral hydrogen.

Since the quasars being studied are billions of light years away, they act as a time capsule: looking at the light shows us what the universe looked like in the distant past. The resulting map will reveal where neutral hydrogen was located billions of years ago as the universe was vigorously building its galaxies.

An even distribution of neutral hydrogen gas would suggest numerous galaxies as the source of most light, whereas a much less uniform pattern, showing a patchwork of charged and neutral hydrogen gas, would indicate that rare quasars were the primary origin of light.
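The idea of telling the two scenarios apart by patchiness can be sketched with a toy calculation. This is not the team's method: the maps below are invented one-dimensional strips of neutral-hydrogen fraction, variance stands in for whatever statistic the real analysis uses, and the threshold is an arbitrary assumption chosen for illustration.

```python
from statistics import pvariance


def patchiness(neutral_fraction_map):
    """Population variance of the neutral-hydrogen fraction across map cells."""
    return pvariance(neutral_fraction_map)


def likely_source(neutral_fraction_map, threshold=0.05):
    """Toy classifier: a patchy map points to quasars, an even one to galaxies.

    The threshold is an arbitrary illustrative value, not a physical quantity.
    """
    if patchiness(neutral_fraction_map) > threshold:
        return "rare quasars"
    return "numerous galaxies"


# Hypothetical maps with the same average neutral fraction (0.5).
even_map = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
patchy_map = [0.9, 0.1, 0.9, 0.9, 0.1, 0.1, 0.9, 0.1]

for name, cells in (("even", even_map), ("patchy", patchy_map)):
    print(f"{name}: variance={patchiness(cells):.2f} -> {likely_source(cells)}")
```

Both toy maps contain the same total amount of neutral hydrogen; only its spatial distribution differs, which is the property the surveys are expected to measure.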

Current samples of quasars aren’t quite big enough for a robust analysis of the differences between the two scenarios; however, a number of surveys currently being planned should help scientists find the answer.

Chief among these is the DESI (Dark Energy Spectroscopic Instrument) survey which will include detailed measurements of about a million distant quasars. Although these measurements are designed to reveal how the expansion of the universe is accelerating due to dark energy, the new research shows that results from DESI will also determine whether the intervening gas is uniformly illuminated. In turn, the measurement of patchiness will reveal whether light in our universe is generated by ‘a few cities’ (quasars) or by ‘many small towns’ (galaxies).

Co-author Dr Hiranya Peiris, said: “It’s amazing how little is known about the objects that bathed the universe in ultraviolet radiation while galaxies assembled into their present form. This technique gives us a novel handle on the intergalactic environment during this critical time in the universe’s history.”

Dr Pontzen, said: “It’s good news all round. DESI is going to give us invaluable information about what was going on in early galaxies, objects that are so faint and distant we would never see them individually. And once that’s understood in the data, the team can take account of it and still get accurate measurements of how the universe is expanding, telling us about dark energy. It illustrates how these big, ambitious projects are going to deliver astonishingly rich maps to explore. We’re now working to understand what other unexpected bonuses might be pulled out from the data.”


A City 6,000 Feet Under The Sea

September 8th, 2014

By Alton Parrish.

The Swedish research ship “Albatross” had just returned from a peaceful reconnaissance in the South Atlantic.

“This may be about the most astonishing thing you ever heard,” reports Jonathan Gray in his newsletter: a city 6,000 feet under the sea.

Had you peeped through a lattice window in a little house outside Stockholm, you might have seen two men, one of them bearded, huddled across a table, engaged in lively talk.

One of them, in fact, looked almost wild-eyed. But, knowing him as a well-balanced, sober man, you would have to admit that whatever it was that now had him so excited must be something extraordinary.

“I swear to you, it’s incredible! Do you know, we were sounding the seabed 700 miles east of Brazil. And we brought up core samples of fresh-water plants! Can you believe that! And do you know how deep they were? Three thousand metres!”

The speaker was Professor Hans Pettersson, who had led the expedition.

And he added: “These samples actually contained micro-organisms, twigs, plants and even tree bark.”

Another discovery

Within a similar time frame, discussion was hot in London. Coral had been recovered from depths of over 3,000 feet (1,000 metres) in mid-Atlantic Ocean sites. Now we all know that coral grows only close to sea level.

So in London, England, someone else was making a chilling diagnosis: “Either the seabed dropped thousands of feet or the sea rose mightily.”

And still another

Meanwhile, at Columbia University in the U.S.A., Professor Maurice Ewing, a prominent marine geologist, was reporting on an expedition that had descended to submerged plateaus at a depth of 5,000 feet.

“It’s quite amazing,” he said. “At 5,000 feet down, they discovered prehistoric beach sand. It was brought up in one case from a depth of nearly three and one half miles, far from any place where beaches exist today.

“One deposit was 1,200 miles from the nearest land.”

As we all know, sandy beaches form from waves breaking on the edge of the coastal rim of the seas. Beach sand does not form deep down on the ocean bottom.

Then Ewing dropped his bombshell: “Either the land must have sunk 2 or 3 miles or the sea must have been 2 or 3 miles lower than now. Either conclusion is startling.”

These facts mean one of two things: either there was a mighty ocean bed subsidence (unexplainable by orthodox science), or a huge (likewise unexplained) addition of water to the ocean.

Let’s briefly consider these.

1. Sudden subsidence

Much of the landscape that is now drowned by the ocean still has sharp, fine profiles. But these sharp, fine profiles would have been eroded, and the lava covering the ocean floor would have decayed, if all this rocky terrain had been immersed in sea water for more than 15,000 years.

You see, chemical and mechanical forces are very destructive. Sharp edges and points can be ground down and blunted by abrasion, erosion, and the action of waves.

But the entire seabed below the present surf zone has retained its sharpness of profile. Had the subsidence taken place gradually, chemical and other forces would have ground down this sharp profile within a few hundred years.

If the land had sunk slowly, even the surf would have worn away these profiles. No, it was a rapid subsidence, if a subsidence it was.

The collapse of an area covering many millions of square miles points not to a gradual sinking but to a cataclysmic event.

Such subsidence is perfectly in keeping with the centuries-long adjustments that occurred after the Great Flood trauma.

2. Pre-Flood sea level lower

However, some of the data strongly suggest that the sea level actually was several thousand feet lower than at present – that there was less water in the ocean at one time. These discoveries suggest that we are uncovering evidence of a former sea level!

But this would call for a relatively sudden increase of 30 percent in the volume of the ocean. The compelling question is, where did this water come from? Few geologists can bring themselves to answer this.

Obviously, melting ice sheets could never have contained enough water to raise the ocean level thousands of feet. So we can forget melting ice sheets being the cause.

City on the sea bed?

Now for another surprise. This time the excitement was in the Pacific. The year was 1965. A research vessel named “Anton Bruun” was investigating the Nazca Trench, off Peru.

The sonar operator called for the captain.

“I don’t know what to make of this,” he murmured. “Around here, the ocean floor is all mud bottom. But just take in these sonar recordings… unusual shapes on the ocean floor! I’m puzzled.”

“Better lower a camera,” came the order.

At a depth of 6,000 feet a photograph revealed huge upright pillars and walls, some of which seemed to have writing on them. In other nearby locations, apparently artificially shaped stones lay on their sides, as though they had toppled over.

The crew rubbed their eyes and kept staring. Could this really be? …the remains of a city under a mass of water more than a mile deep!

Was it overwhelmed suddenly by some gigantic disaster? And now it was buried under 6,000 feet of ocean?


Ebola virus mutating quickly says scientific genomic

September 1st, 2014

By Alton Parrish.

More than 300 genetic mutations have been discovered in the Ebola virus according to genetic researchers.

In response to an ongoing, unprecedented outbreak of Ebola virus disease (EVD) in West Africa, a team of researchers from the Broad Institute and Harvard University, in collaboration with the Sierra Leone Ministry of Health and Sanitation and researchers across institutions and continents, has rapidly sequenced and analyzed more than 99 Ebola virus genomes. Their findings could have important implications for rapid field diagnostic tests. The team reports its results online in the journal Science.

Ebola Virus Particles

Credit: Thomas W. Geisbert, Boston University School of Medicine – PLoS Pathogens

For the current study, researchers sequenced 99 Ebola virus genomes collected from 78 patients diagnosed with Ebola in Sierra Leone during the first 24 days of the outbreak (a portion of the patients contributed samples more than once, allowing researchers a clearer view into how the virus can change in a single individual over the course of infection).

The team found more than 300 genetic changes that make the 2014 Ebola virus genomes distinct from the viral genomes tied to previous Ebola outbreaks. They also found sequence variations indicating that, from the samples sequenced, the EVD outbreak started from a single introduction into humans, subsequently spreading from person to person over many months.

The variations they identified were frequently in regions of the genome encoding proteins. Some of the genetic variation detected in these studies may affect the primers (starting points for DNA synthesis) used in PCR-based diagnostic tests, emphasizing the importance of genomic surveillance and the need for vigilance.

To accelerate response efforts, the research team released the full-length sequences on National Center for Biotechnology Information’s (NCBI’s) DNA sequence database in advance of publication, making these data available to the global scientific community.

“By making the data immediately available to the community, we hope to accelerate response efforts,” said co-senior author Pardis Sabeti, a senior associate member at the Broad Institute and an associate professor at Harvard University. “Upon releasing our first batch of Ebola sequences in June, some of the world’s leading epidemic specialists contacted us, and many of them are now also actively working on the data. We were honored and encouraged. A spirit of international and multidisciplinary collaboration is needed to quickly shed light on the ongoing outbreak.”

The 2014 Zaire ebolavirus (EBOV) outbreak is unprecedented both in its size and in its emergence in multiple populated areas. Previous outbreaks had been localized mostly to sparsely populated regions of Middle Africa, with the largest outbreak in 1976 reporting 318 cases. The 2014 outbreak has manifested in the more densely-populated West Africa, and since it was first reported in Guinea in March 2014, 2,240 cases have been reported with 1,229 deaths (as of August 19).

Augustine Goba, Director of the Lassa Laboratory at the Kenema Government Hospital and a co-first author of the paper, identified the first Ebola virus disease case in Sierra Leone using PCR-based diagnostics. “We established surveillance for Ebola well ahead of the disease’s spread into Sierra Leone and began retrospective screening for the disease on samples as far back as January of this year,” said Goba. “This was possible because of our long-standing work to diagnose and study another deadly disease, Lassa fever. We could thus identify cases and trace the Ebola virus spread as soon as it entered our country.”

The research team increased the amount of genomic data available on the Ebola virus fourfold and used the technique of “deep sequencing” on all available samples. Deep sequencing is sequencing done enough times to generate high confidence in the results. In this study, researchers sequenced at a depth of 2,000 times on average for each Ebola genome to get an extremely close-up view of the virus genomes from 78 patients. This high-resolution view allowed the team to detect multiple mutations that alter protein sequences — potential targets for future diagnostics, vaccines, and therapies.
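A rough sense of what a depth of 2,000 means can be had from the usual back-of-envelope formula: average depth is the total number of sequenced bases divided by the genome length. The sketch below is an illustration only. It assumes an Ebola genome of roughly 19,000 bases and a hypothetical read length of 100 bases; neither the read length nor the function names come from the study, and real pipelines compute depth position by position from aligned reads.

```python
# Assumptions for illustration only (not taken from the study):
EBOLA_GENOME_LENGTH = 19_000   # approximate genome size in bases
READ_LENGTH = 100              # hypothetical length of one sequencing read


def mean_depth(read_count, read_length, genome_length):
    """Average number of times each base is covered, assuming even coverage."""
    return read_count * read_length / genome_length


def reads_needed(target_depth, read_length, genome_length):
    """Smallest number of reads that reaches the target average depth."""
    total_bases = target_depth * genome_length
    return -(-total_bases // read_length)   # integer ceiling division


reads = reads_needed(2_000, READ_LENGTH, EBOLA_GENOME_LENGTH)
depth = mean_depth(reads, READ_LENGTH, EBOLA_GENOME_LENGTH)
print(f"{reads:,} reads of {READ_LENGTH} bases give about {depth:,.0f}x depth")
```

Under these assumptions, a few hundred thousand short reads per genome are enough to cover every base about 2,000 times, which is why rare variants within a single patient become detectable.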

The Ebola strains responsible for the current outbreak likely have a common ancestor, dating back to the very first recorded outbreak in 1976. The researchers also traced the transmission path and evolutionary relationships of the samples, revealing that the lineage responsible for the current outbreak diverged from the Middle African version of the virus within the last ten years and spread from Guinea to Sierra Leone by 12 people who had attended the same funeral.

The team’s catalog of 395 mutations (over 340 that distinguish the current outbreak from previous ones, and over 50 within the West African outbreak) may serve as a starting point for other research groups. “We’ve uncovered more than 300 genetic clues about what sets this outbreak apart from previous outbreaks,” said Stephen Gire, a research scientist in the Sabeti lab at the Broad Institute and Harvard. “Although we don’t know whether these differences are related to the severity of the current outbreak, by sharing these data with the research community, we hope to speed up our understanding of this epidemic and support global efforts to contain it.”

“There is an extraordinary battle still ahead, and we have lost many friends and colleagues already like our good friend and colleague Dr. Humarr Khan, a co-senior author here,” said Sabeti. “By providing this data to the research community immediately and demonstrating that transparency and partnership is one way we hope to honor Humarr’s legacy. We are all in this fight together.”

This work was supported by the Common Fund and the National Institute of Allergy and Infectious Diseases in the National Institutes of Health, Department of Health and Human Services, as well as by the National Science Foundation, the European Union Seventh Framework Programme, the World Bank, and the Natural Environment Research Council.

Other researchers who contributed to this work include Augustine Goba, Kristian G. Andersen, Rachel S. G. Sealfon, Daniel J. Park, Lansana Kanneh, Simbirie Jalloh, Mambu Momoh, Mohamed Fullah, Gytis Dudas, Shirlee Wohl, Lina M. Moses, Nathan L. Yozwiak, Sarah Winnicki, Christian B. Matranga, Christine M. Malboeuf, James Qu, Adrianne D. Gladden, Stephen F. Schaffner, Xiao Yang, Pan-Pan Jiang, Mahan Nekoui, Andres Colubri, Moinya Ruth Coomber, Mbalu Fonnie, Alex Moigboi, Michael Gbakie, Fatima K. Kamara, Veronica Tucker, Edwin Konuwa, Sidiki Saffa, Josephine Sellu, Abdul Azziz Jalloh, Alice Kovoma, James Koninga, Ibrahim Mustapha, Kandeh Kargbo, Momoh Foday, Mohamed Yillah, Franklyn Kanneh, Willie Robert, James L. B. Massally, Sinéad B. Chapman, James Bochicchio, Cheryl Murphy, Chad Nusbaum, Sarah Young, Bruce W. Birren, Donald S. Grant, John S. Schieffelin, Eric S. Lander, Christian Happi, Sahr M. Gevao, Andreas Gnirke, Andrew Rambaut, Robert F. Garry, and S. Humarr Khan.

About the Broad Institute of MIT and Harvard

The Eli and Edythe L. Broad Institute of MIT and Harvard was launched in 2004 to empower this generation of creative scientists to transform medicine. The Broad Institute seeks to describe all the molecular components of life and their connections; discover the molecular basis of major human diseases; develop effective new approaches to diagnostics and therapeutics; and disseminate discoveries, tools, methods, and data openly to the entire scientific community.

Founded by MIT, Harvard, and its affiliated hospitals, and the visionary Los Angeles philanthropists Eli and Edythe L. Broad, the Broad Institute includes faculty, professional staff, and students from throughout the MIT and Harvard biomedical research communities and beyond, with collaborations spanning over a hundred private and public institutions in more than 40 countries worldwide.

Northrop Grumman developing XS-1 Experimental space plane design for DARPA

September 1st, 2014

By Alton Parrish.

Northrop Grumman Corporation with Scaled Composites and Virgin Galactic is developing a preliminary design and flight demonstration plan for the Defense Advanced Research Projects Agency’s (DARPA) Experimental Spaceplane XS-1 program.

Credit: Northrop Grumman

XS-1 has a reusable booster that when coupled with an expendable upper stage provides affordable, available and responsive space lift for 3,000-pound class spacecraft into low Earth orbit. Reusable boosters with aircraft-like operations provide a breakthrough in space lift costs for this payload class, enabling new generations of lower cost, innovative and more resilient spacecraft.

The company is defining its concept for XS-1 under a 13-month, phase one contract valued at $3.9 million. In addition to low-cost launch, the XS-1 would serve as a test-bed for a new generation of hypersonic aircraft.

A key program goal is to fly 10 times in 10 days using a minimal ground crew and infrastructure. Reusable aircraft-like operations would help reduce military and commercial light spacecraft launch costs by a factor of 10 from current launch costs in this payload class.

To complement its aircraft, spacecraft and autonomous systems capabilities, Northrop Grumman has teamed with Scaled Composites of Mojave, which will lead fabrication and assembly, and Virgin Galactic, the privately-funded spaceline, which will head commercial spaceplane operations and transition.

“Our team is uniquely qualified to meet DARPA’s XS-1 operational system goals, having built and transitioned many developmental systems to operational use, including our current work on the world’s only commercial spaceline, Virgin Galactic’s SpaceShipTwo,” said Doug Young, vice president, missile defense and advanced missions, Northrop Grumman Aerospace Systems.

“We plan to bundle proven technologies into our concept that we developed during related projects for DARPA, NASA and the U.S. Air Force Research Laboratory, giving the government maximum return on those investments,” Young added.

The design would be built around operability and affordability, emphasizing aircraft-like operations including:

– Clean pad launch using a transporter erector launcher, minimal infrastructure and ground crew;

– Highly autonomous flight operations that leverage Northrop Grumman’s unmanned aircraft systems experience; and

– Aircraft-like horizontal landing and recovery on standard runways.

Ground X-Vehicle Technology with more armor

August 25th, 2014

By DARPA.

GXV-T seeks to develop revolutionary technologies to make future armored fighting vehicles more mobile, effective and affordable

For the past 100 years of mechanized warfare, protection for ground-based armored fighting vehicles and their occupants has boiled down almost exclusively to a simple equation: More armor equals more protection. Weapons’ ability to penetrate armor, however, has advanced faster than armor’s ability to withstand penetration. As a result, achieving even incremental improvements in crew survivability has required significant increases in vehicle mass and cost.

Ground-based armored fighting vehicles and their occupants have traditionally relied on armor and maneuverability for protection. The amount of armor needed for today’s threat environments, however, is becoming increasingly burdensome and ineffective against ever-improving weaponry.

DARPA’s Ground X-Vehicle Technology (GXV-T) program seeks to develop revolutionary technologies to enable a layered approach to protection that would use less armor more strategically and improve vehicles’ ability to avoid detection, engagement and hits by adversaries. Such capabilities would enable smaller, faster vehicles in the future to more efficiently and cost-effectively tackle varied and unpredictable combat situations.

GXV-T’s technical goals include the following improvements relative to today’s armored fighting vehicles:

– Reduce vehicle size and weight by 50 percent

– Reduce onboard crew needed to operate vehicle by 50 percent

– Increase vehicle speed by 100 percent

– Access 95 percent of terrain

– Reduce signatures that enable adversaries to detect and engage vehicles

The GXV-T program provides the following four technical areas as examples where advanced technologies could be developed that would meet the program’s objectives:

Radically Enhanced Mobility – Ability to traverse diverse off-road terrain, including slopes and various elevations; advanced suspensions and novel track/wheel configurations; extreme speed; rapid omnidirectional movement changes in three dimensions

Survivability through Agility – Autonomously avoid incoming threats without harming occupants through technologies such as agile motion (dodging) and active repositioning of armor

Crew Augmentation – Improved physical and electronically assisted situational awareness for crew and passengers; semi-autonomous driver assistance and automation of key crew functions similar to capabilities found in modern commercial airplane cockpits

Signature Management – Reduction of detectable signatures, including visible, infrared (IR), acoustic and electromagnetic (EM)

Technology development beyond these four examples is desired so long as it supports the program’s goals. DARPA is particularly interested in engaging nontraditional contributors to help develop leap-ahead technologies in the focus areas above, as well as other technologies that could potentially improve both the survivability and mobility of future armored fighting vehicles.

DARPA aims to develop GXV-T technologies over 24 months after initial contract awards, which are currently planned on or before April 2015. The GXV-T program plans to pursue research, development, design and testing and evaluation of major subsystem capabilities in multiple technology areas with the goal of integrating these capabilities into future ground X-vehicle demonstrators.

Love makes sex better for most women, says study

August 25th, 2014

By Alton Parrish.

In a series of interviews, heterosexual women between the ages of 20 and 68 and from a range of backgrounds said that they believed love was necessary for maximum satisfaction in both sexual relationships and marriage. The benefits of being in love with a sexual partner are more than just emotional. Most of the women in the study said that love made sex physically more pleasurable.

Women who loved their sexual partners also said they felt less inhibited and more willing to explore their sexuality.

“When women feel love, they may feel greater sexual agency because they not only trust their partners but because they feel that it is OK to have sex when love is present,” Montemurro said.

While 50 women of the 95 that were interviewed said that love was not necessary for sex, only 18 of the women unequivocally believed that love was unnecessary in a sexual relationship.

Older women who were interviewed indicated that this connection between love, sex and marriage remained important throughout their lifetimes, not just in certain eras of their lives.

The connection between love and sex may show how women are socialized to see sex as an expression of love, Montemurro said. Despite decades of the women’s rights movement and an increased awareness of women’s sexual desire, the media continue to send a strong cultural message for women to connect sex and love and to look down on girls and women who have sex outside of committed relationships.

“On one hand, the media may seem to show that casual sex is OK, but at the same time, movies and television, especially, tend to portray women who are having sex outside of relationships negatively,” said Montemurro.

In a similar way, the media often portray marriage as largely sexless, even though the participants in the study said that sex was an important part of their marriage, according to Montemurro, who presented her findings today (Aug. 19) at the annual meeting of the American Sociological Association.

“For the women I interviewed, they seemed to say you need love in sex and you need sex in marriage,” said Montemurro.

From September 2008 to July 2011, Montemurro conducted in-depth interviews with 95 women who lived in Pennsylvania, New Jersey and New York. The interviews generally lasted 90 minutes.

Although some of the women who were interviewed said they had sexual relationships with women, most of the women were heterosexual and all were involved in heterosexual relationships.

Funds from the Career Development Professorship and the Rubin Fund supported this work.

9/11 dust cloud may have caused widespread pregnancy issues

August 17th, 2014

By Alton Parrish.

These mothers were more likely to give birth prematurely and deliver babies with low birth weights. Their babies – especially baby boys – were also more likely to be admitted to neonatal intensive care units after birth. The study, led by the Wilson School’s Janet Currie and Hannes Schwandt, was released by the National Bureau of Economic Research in August.

“Previous research into the health impacts of in utero exposure to the 9/11 dust cloud on birth outcomes has shown little evidence of consistent effects. This is a puzzle given that 9/11 was one of the worst environmental catastrophes to have ever befallen New York City,” said Currie, Henry Putnam Professor of Economics and Public Affairs, director of the economics department and director of the Wilson School’s Center for Health and Wellbeing.

The collapse of the two towers created a zone of negative air pressure that pushed dust and smoke into the avenues surrounding the World Trade Center site. Other past studies have shown that environmental exposure to the World Trade Center dust cloud was associated with significant adverse effects on the health of adult community residents and emergency workers.

Using data on all births that were in utero on Sept. 11, 2001 in New York City and comparing those babies to their siblings, the researchers found that, for mothers in their first trimester during 9/11, exposure to this catastrophe more than doubled their chances of delivering a baby prematurely. Of the babies born, boys were more likely to have birth complications and very low birth weights. They were also more likely to be admitted to the NICU.

The neighborhoods most affected by the 9/11 dust cloud included Lower Manhattan, Battery Park City, SoHo, TriBeCa, Civic Center, Little Italy, Chinatown and the Lower East Side. Previous studies analyzing the aftermath of 9/11 on health failed to account for many women living in Lower Manhattan, who were generally less likely to have poor birth outcomes than women living in other neighborhoods.

The paper, “The 9/11 Dust Cloud and Pregnancy Outcomes: A Reconsideration,” can be found on NBER.

3D printed interactive speakers

August 10th, 2014

By Alton Parrish.

Forget everything you know about what a loudspeaker should look like. Scientists at Disney Research, Pittsburgh have developed methods using a 3D printer to produce electrostatic loudspeakers that can take the shape of anything, from a rubber ducky to an abstract spiral.

Credit: Disney Research

The method developed by Ishiguro and Ivan Poupyrev, a former Disney Research, Pittsburgh principal research scientist, was presented April 29 at the Conference on Human Factors in Computing Systems (CHI) in Toronto.