Posts by Alton Parrish:

- Hallway meetings – Coworkers who share a building often discuss things informally in the hall or break room, in conversations the remote worker cannot take part in. This leaves the telecommuter at a big disadvantage, shut out of day-to-day office conversation. Even with the best communications systems, remote workers will miss many small details that can affect their ability to do their jobs effectively.

- IT problems – Modern technology is fantastic but not infallible. Electronic glitches or internet outages can leave the remote worker helpless and unable to submit work on time. This is particularly damaging for time-critical material. If the boss is depending on a report from a remote employee but doesn’t get it in time for the meeting because of technical difficulties, everyone looks bad.

- Misunderstanding instructions – Email is a quick and efficient way to communicate with coworkers, but it is also prone to misunderstandings. Written instructions that seem straightforward to the author can be completely misinterpreted by the reader. Remote workers often lack the facial expressions and vocal inflections that help convey the meaning of words. A lot of time and productivity can be wasted on silly mistakes in electronic communications.

- Promotions – Remote workers are also at a disadvantage when it comes to promotion within their company. Employees who work from home may find themselves stuck in the same job description, unable to advance their careers or their pay. Supervisory positions are most likely to be offered to employees who actually work on site, and telecommuters’ lack of visibility can leave them overlooked for other promotions as well.

- Socializing with coworkers – There’s no question that the social network of coworkers is an important part of anyone’s job. Having personal relationships away from the workplace is a great advantage for advancing one’s career and building a good rapport with coworkers. Remote workers are usually left out of these social gatherings. They’re not going to be invited to stop after work at the local watering hole to unwind or be able to participate in impromptu office parties.

New fast charging nano-supercapacitors for electric cars

August 4th, 2014

By Alton Parrish.

Innovative supercapacitors based on nanomaterials could bring electric cars a good step closer to mass-market appeal in Germany, where public interest remains lukewarm. Advances in the state of the art of these devices are driving this development.

Electric cars are warmly welcomed in Norway and are a common sight on the roads of the Scandinavian country, so much so that electric cars topped the list of new vehicle registrations for the second time. This stands in stark contrast to the situation in Germany, where electric vehicles claim only a small share of the market.


Credit: © Fraunhofer IPA

Of the 43 million cars on the roads in Germany, a mere 8,000 are electrically powered. The main factors discouraging German motorists from switching to electric vehicles are their high initial cost, their short driving range and the lack of charging stations. Another major obstacle to the mass acceptance of electric cars is the time it takes to charge them.

Refueling a conventional car takes only minutes, so much less time than charging that the two are hardly comparable. However, charging times could be dramatically shortened by incorporating supercapacitors. These alternative energy storage devices charge quickly and can therefore help electric cars use energy more economically.

In a conventional gasoline-powered vehicle, for instance, braking converts kinetic energy into heat, which is dissipated unused. By contrast, generators on electric vehicles can tap into that kinetic energy by converting it into electricity for later use. This electricity often comes in jolts and requires storage devices that can absorb large amounts of energy within a short period of time.

Supercapacitors, which can capture and store this converted energy almost instantly, fit this role perfectly. Unlike batteries, which offer limited charging and discharging rates, supercapacitors need only seconds to charge and can feed the electric power back to the air-conditioning system, defogger, radio, etc. as required.
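To make the braking scenario concrete, the sketch below estimates how much kinetic energy a stop releases and what capacitance would be needed to absorb it. The physics is just E = ½mv² for the car and E = ½C(V_max² − V_min²) for the usable energy of a capacitor charged between two voltages. The vehicle mass, speeds, voltage window and the lumped `recovery_efficiency` factor are illustrative assumptions, not figures from the ElectroGraph project.

```python
def kinetic_energy_j(mass_kg: float, speed_m_s: float) -> float:
    """Kinetic energy of the vehicle, E = 1/2 m v^2 (joules)."""
    return 0.5 * mass_kg * speed_m_s ** 2


def braking_energy_j(mass_kg: float, v_start: float, v_end: float,
                     recovery_efficiency: float) -> float:
    """Energy the generator could return to storage while slowing from
    v_start to v_end (m/s). recovery_efficiency is an assumed lumped factor
    for drivetrain, generator and converter losses."""
    delta = kinetic_energy_j(mass_kg, v_start) - kinetic_energy_j(mass_kg, v_end)
    return recovery_efficiency * delta


def required_capacitance_f(energy_j: float, v_max: float, v_min: float) -> float:
    """Capacitance needed so that charging from v_min to v_max absorbs energy_j:
    E = 1/2 C (v_max^2 - v_min^2)  =>  C = 2E / (v_max^2 - v_min^2)."""
    if v_max <= v_min:
        raise ValueError("v_max must exceed v_min")
    return 2.0 * energy_j / (v_max ** 2 - v_min ** 2)


if __name__ == "__main__":
    # Hypothetical example: a 1500 kg car braking from 50 km/h to rest.
    v0 = 50 / 3.6  # km/h -> m/s
    e = braking_energy_j(1500, v0, 0.0, recovery_efficiency=0.6)
    c = required_capacitance_f(e, v_max=48.0, v_min=24.0)
    print(f"recoverable energy: {e / 1000:.1f} kJ")
    print(f"capacitance for a 24-48 V window: {c:.1f} F")
```

The 2:1 voltage window is only a convenient illustrative choice: discharging to half the maximum voltage still releases three quarters of the stored energy, since (V² − (V/2)²)/V² = 0.75.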

Rapid energy storage devices are characterized by their energy density and power density: how much electrical energy the device can store relative to its mass, and how quickly, relative to its mass, it can deliver that energy.

Supercapacitors are known for their high power density, meaning that large amounts of electrical energy can be delivered or captured within short periods, albeit at the cost of low energy density. Supercapacitors can generally store only about 10% as much energy as electrochemical batteries of the same weight.
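The energy/power trade-off can be put into numbers. The sketch below computes gravimetric energy density (Wh/kg) from E = ½CV² and a simple peak-power estimate using the textbook matched-load formula P_max = V²/(4R) for a source with internal (equivalent series) resistance R. The cell parameters are hypothetical, chosen only to illustrate orders of magnitude; they are not measurements from the article.

```python
J_PER_WH = 3600.0  # joules in one watt-hour


def capacitor_energy_j(capacitance_f: float, voltage_v: float) -> float:
    """Energy stored in an ideal capacitor, E = 1/2 C V^2 (joules)."""
    return 0.5 * capacitance_f * voltage_v ** 2


def energy_density_wh_per_kg(energy_j: float, mass_kg: float) -> float:
    """Gravimetric energy density: stored energy per unit mass."""
    return energy_j / J_PER_WH / mass_kg


def matched_load_power_w(voltage_v: float, esr_ohm: float) -> float:
    """Peak power into a matched load for a source with internal
    resistance esr_ohm: P_max = V^2 / (4 R)."""
    return voltage_v ** 2 / (4.0 * esr_ohm)


if __name__ == "__main__":
    # Hypothetical supercapacitor cell (illustrative values only).
    c_f, v, m_kg, esr = 3000.0, 2.7, 0.5, 0.0003
    e = capacitor_energy_j(c_f, v)
    print(f"energy density: {energy_density_wh_per_kg(e, m_kg):.1f} Wh/kg")
    print(f"peak power density: {matched_load_power_w(v, esr) / m_kg:.0f} W/kg")
```

Because power density depends mainly on internal resistance while energy density depends on stored charge and voltage, a device can score high on one and low on the other; raising the energy side is the goal the ElectroGraph project set itself.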

This is precisely where the challenge lies and what the “ElectroGraph” project is attempting to address. ElectroGraph is a project supported by the EU and its consortium consists of ten partners from both research institutes and industries. One of the main tasks of this project is to develop new types of supercapacitors with significantly improved energy storage capacities.

As the project approaches its closing phase in June, project coordinator Carsten Glanz of the Fraunhofer Institute for Manufacturing Engineering and Automation IPA in Stuttgart explained the concept and approach taken en route to its successful conclusion: “During the storage process, the electrical energy is stored as charged particles attached to the electrode material. So to store more energy efficiently, we designed lightweight electrodes with larger, usable surfaces.”

Graphene electrodes significantly improve energy efficiency

In numerous tests, the researcher and his team investigated the nanomaterial graphene, whose extremely high specific surface area of up to 2,600 m²/g and high electrical conductivity practically cry out for its use as an electrode material. Graphene consists of an ultrathin single-layer lattice of carbon atoms. When used as an electrode material, it greatly increases the surface area for the same amount of material. In this respect, graphene shows potential to replace activated carbon, the material used in commercial supercapacitors to date, which has a specific surface area between 1,000 and 1,800 m²/g.

“The space between the electrodes is filled with a liquid electrolyte,” revealed Glanz. “We use ionic liquids for this purpose. Graphene-based electrodes together with ionic liquid electrolytes present an ideal material combination where we can operate at higher voltages.”
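Both levers in this passage, a larger usable surface and a higher operating voltage, enter the energy estimate directly. In a simple double-layer picture, an electrode's specific capacitance is roughly its accessible surface area times an areal capacitance, and stored energy grows with the square of voltage. The sketch below assumes one hypothetical areal capacitance for every material and a fixed accessible fraction of the surface; real electrodes deviate from both, and the 1.0 V and 3.5 V cell voltages are illustrative stand-ins for an aqueous versus an ionic-liquid electrolyte, not ElectroGraph data.

```python
def electrode_capacitance_f_per_g(ssa_m2_per_g: float,
                                  areal_cap_f_per_m2: float,
                                  accessible_fraction: float) -> float:
    """Double-layer estimate: C_s = (accessible area per gram) x (areal capacitance)."""
    return ssa_m2_per_g * accessible_fraction * areal_cap_f_per_m2


def cell_energy_wh_per_kg(c_electrode_f_per_g: float, voltage_v: float) -> float:
    """Energy of a symmetric two-electrode cell per kg of total active material.

    Two identical electrodes act as capacitors in series (C_cell = C_s / 2) and
    the active mass doubles, so capacitance per total mass is C_s / 4.
    Then E = 1/2 * (C_s / 4) * V^2.
    """
    c_cell_f_per_kg = c_electrode_f_per_g * 1000.0 / 4.0
    energy_j_per_kg = 0.5 * c_cell_f_per_kg * voltage_v ** 2
    return energy_j_per_kg / 3600.0


if __name__ == "__main__":
    AREAL_CAP = 0.10   # F/m^2 (10 uF/cm^2), assumed for every material
    ACCESSIBLE = 0.5   # assumed fraction of surface reached by the electrolyte ions
    for name, ssa in [("activated carbon", 1500.0), ("graphene", 2600.0)]:
        cs = electrode_capacitance_f_per_g(ssa, AREAL_CAP, ACCESSIBLE)
        for v in (1.0, 3.5):  # assumed aqueous vs ionic-liquid cell voltage
            print(f"{name:<17} {v:.1f} V: {cell_energy_wh_per_kg(cs, v):5.1f} Wh/kg")
```

Under these assumptions, raising the voltage from 1.0 V to 3.5 V multiplies the energy by 12.25 (3.5²), while moving from 1,800 to 2,600 m²/g raises it by about 44 percent. Graphene layers restacking into graphite would show up here as a smaller accessible fraction.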

By arranging the graphene layers so that there is a gap between the individual layers, the researchers established a manufacturing method that makes efficient use of the nanomaterial’s intrinsic surface area. The gaps prevent the individual graphene layers from restacking into graphite, which would reduce the storage surface and consequently the energy storage capacity. “Our electrodes have already surpassed commercially available ones by 75 percent in terms of storage capacity,” emphasizes the engineer.

“I imagine that the cars of the future will have a battery connected to many capacitors spread throughout the vehicle, which will take over the energy supply during high-power phases, for example during acceleration or when the air-conditioning system ramps up. These capacitors will ease the burden on the battery and cover voltage peaks when the car is started. As a result, the massive batteries can be made smaller.”

In order to present the new technology, the ElectroGraph consortium developed a demonstrator consisting of supercapacitors installed in an automobile side-view mirror and charged by a solar cell in an energetically self-sufficient system. The demonstrator will be unveiled at the end of May during the dissemination workshop at Fraunhofer IPA.

Comments Off on New fast charging nano-supercapacitors for electric cars

Most distant galaxy ever seen

August 4th, 2014

By NASA.

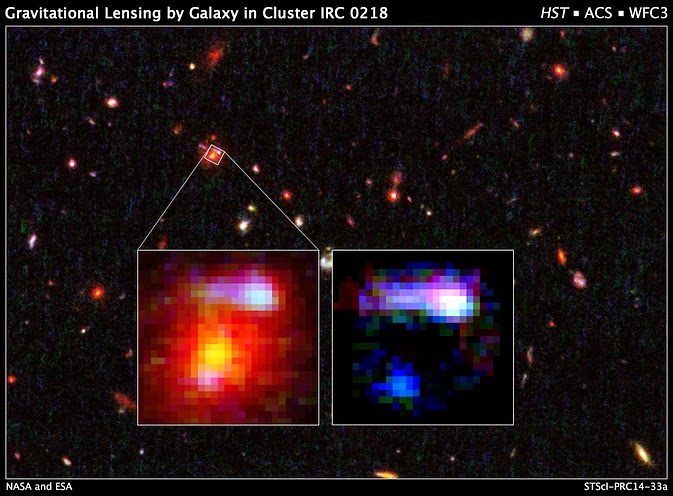

Astronomers using NASA’s Hubble Space Telescope have unexpectedly discovered the most distant galaxy that acts as a cosmic magnifying glass. Seen here as it looked 9.6 billion years ago, this monster elliptical galaxy beats the previous record-holder by 200 million years.

The farthest cosmic lens yet found, a massive elliptical galaxy, is shown in the inset image at left. The galaxy existed 9.6 billion years ago and belongs to the galaxy cluster IRC 0218.

Image Credit: NASA and ESA

These “lensing” galaxies are so massive that their gravity bends, magnifies, and distorts light from objects behind them, a phenomenon called gravitational lensing. Finding one in such a small area of the sky is so rare that you would normally have to survey a region hundreds of times larger to find just one.

The object behind the cosmic lens is a tiny spiral galaxy undergoing a rapid burst of star formation. Its light has taken 10.7 billion years to arrive here and seeing this chance alignment at such a great distance from Earth is a rare find. Locating more of these distant lensing galaxies will offer insight into how young galaxies in the early universe build themselves up into the massive dark-matter-dominated galaxies of today. Dark matter cannot be seen, but it accounts for the bulk of the universe’s matter.

“When you look more than 9 billion years ago in the early universe, you don’t expect to find this type of galaxy lensing at all,” explained lead researcher Kim-Vy Tran of Texas A&M University in College Station. “It’s very difficult to see an alignment between two galaxies in the early universe. Imagine holding a magnifying glass close to you and then moving it much farther away. When you look through a magnifying glass held at arm’s length, the chances that you will see an enlarged object are high. But if you move the magnifying glass across the room, your chances of seeing the magnifying glass nearly perfectly aligned with another object beyond it diminish.”

Team members Kenneth Wong and Sherry Suyu of Academia Sinica Institute of Astronomy & Astrophysics (ASIAA) in Taipei, Taiwan, used the gravitational lensing from the chance alignment to measure the giant galaxy’s total mass, including the amount of dark matter, by gauging the intensity of its lensing effects on the background galaxy’s light. The giant foreground galaxy weighs 180 billion times more than our sun and is a massive galaxy for its time. It is also one of the brightest members of a distant cluster of galaxies, called IRC 0218.
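For readers curious how lensing yields a mass, the simplest textbook route relates the Einstein radius θ_E (the angular radius of the lensed ring or arcs) to the mass enclosed within it: M = θ_E² c² D_L D_S / (4 G D_LS), where D_L, D_S and D_LS are angular-diameter distances to the lens, to the source, and from lens to source. The sketch below computes those distances for an assumed flat ΛCDM cosmology (H0 = 70 km/s/Mpc, Ωm = 0.3). The redshifts and Einstein radius in the example are hypothetical placeholders, and this point-mass estimate is a simplification, not the modeling Wong and Suyu actually performed.

```python
import math

C_KM_S = 299_792.458          # speed of light, km/s
C_M_S = 299_792_458.0         # speed of light, m/s
G_SI = 6.674_30e-11           # gravitational constant, m^3 kg^-1 s^-2
MPC_M = 3.085_677_581e22      # one megaparsec in metres
M_SUN_KG = 1.988_47e30        # solar mass, kg
ARCSEC_RAD = math.pi / (180 * 3600)


def comoving_distance_mpc(z, h0=70.0, omega_m=0.3, steps=2000):
    """Comoving distance in a flat LambdaCDM universe,
    D_C = (c / H0) * integral_0^z dz' / E(z'), via Simpson's rule."""
    omega_l = 1.0 - omega_m

    def inv_e(zp):
        return 1.0 / math.sqrt(omega_m * (1 + zp) ** 3 + omega_l)

    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    h = z / steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inv_e(i * h)
    return (C_KM_S / h0) * total * h / 3.0


def lens_distances_mpc(z_lens, z_source, **cosmo):
    """Angular-diameter distances D_L, D_S, D_LS (valid for a flat universe only)."""
    dc_l = comoving_distance_mpc(z_lens, **cosmo)
    dc_s = comoving_distance_mpc(z_source, **cosmo)
    return dc_l / (1 + z_lens), dc_s / (1 + z_source), (dc_s - dc_l) / (1 + z_source)


def einstein_mass_msun(theta_e_arcsec, z_lens, z_source, **cosmo):
    """Mass inside the Einstein radius, M = theta^2 c^2 D_L D_S / (4 G D_LS)."""
    if z_source <= z_lens:
        raise ValueError("source must lie behind the lens")
    d_l, d_s, d_ls = (d * MPC_M for d in lens_distances_mpc(z_lens, z_source, **cosmo))
    theta = theta_e_arcsec * ARCSEC_RAD
    mass_kg = theta ** 2 * C_M_S ** 2 * d_l * d_s / (4 * G_SI * d_ls)
    return mass_kg / M_SUN_KG


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    m = einstein_mass_msun(theta_e_arcsec=0.4, z_lens=1.6, z_source=2.3)
    print(f"mass inside Einstein radius: {m:.2e} solar masses")
```

Note that the mass scales with the square of the Einstein radius, so a lens whose arcs sit twice as far from its center encloses four times the mass.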

“There are hundreds of lens galaxies that we know about, but almost all of them are relatively nearby, in cosmic terms,” said Wong, first author on the team’s science paper. “To find a lens as far away as this one is a very special discovery because we can learn about the dark-matter content of galaxies in the distant past. By comparing our analysis of this lens galaxy to the more nearby lenses, we can start to understand how that dark-matter content has evolved over time.”

The team suspects the lensing galaxy continued to grow over the past 9 billion years, gaining stars and dark matter by cannibalizing neighboring galaxies. Tran explained that recent studies suggest these massive galaxies gain more dark matter than stars as they continue to grow. Astronomers had assumed dark matter and normal matter build up equally in a galaxy over time, but now know the ratio of dark matter to normal matter changes with time. The newly discovered distant lensing galaxy will eventually become much more massive than the Milky Way and will have more dark matter, too.

Tran and her team were studying star formation in two distant galaxy clusters, including IRC 0218, when they stumbled upon the gravitational lens. While analyzing spectrographic data from the W.M. Keck Observatory in Hawaii, Tran spotted a strong detection of hot hydrogen gas that appeared to arise from a giant elliptical galaxy.

The detection was surprising because hot hydrogen gas is a clear signature of star birth. Previous observations showed that the giant elliptical, residing in the galaxy cluster IRC 0218, was an old, sedate galaxy that had stopped making stars long ago. Another puzzling finding was that the young stars were much farther away than the elliptical galaxy. Tran was surprised and worried, thinking her team had made a major mistake in their observations.

The astronomer soon realized she hadn’t made a mistake when she looked at the Hubble images taken in blue wavelengths, which revealed the glow of fledgling stars. The images, taken by Hubble’s Advanced Camera for Surveys and the Wide Field Camera 3, revealed a blue, eyebrow-shaped object next to a smeared blue dot around the massive elliptical. Tran recognized the unusual features as the distorted, magnified images of a more distant galaxy behind the elliptical galaxy, the signature of a gravitational lens.

To confirm her gravitational-lens hypothesis, Tran’s team analyzed Hubble archival data from two observing programs, the 3D-HST survey, a near-infrared spectroscopic survey taken with the Wide Field Camera 3, and the Cosmic Assembly Near-infrared Deep Extragalactic Legacy Survey, a large Hubble deep-sky program. The data turned up another fingerprint of hot gas connected to the more distant galaxy.

The distant galaxy is too small and far away for Hubble to resolve its structure, so team members analyzed the distribution of light in the object to infer its spiral shape. Spiral galaxies were also more plentiful in those early times, which supports that inference. The Hubble images also revealed at least one bright compact region near the center. The team suspects the bright region is due to a flurry of star formation and is most likely composed of hot hydrogen gas heated by massive young stars. As Tran continues her star-formation study in galaxy clusters, she will be hunting for more signatures of gravitational lensing.

The team’s results appeared in the July 10 issue of The Astrophysical Journal Letters.

The Hubble Space Telescope is a project of international cooperation between NASA and the European Space Agency. NASA’s Goddard Space Flight Center in Greenbelt, Maryland, manages the telescope. The Space Telescope Science Institute (STScI) in Baltimore conducts Hubble science operations. STScI is operated for NASA by the Association of Universities for Research in Astronomy, Inc., in Washington.

Comments Off on Most distant galaxy ever seen

2000 year old mystery of the binding media in China’s polychrome terracotta army solved

August 4th, 2014

By Hongtao Yan.

Even as he conquered rival kingdoms to create the first united Chinese empire in 221 B.C., China’s First Emperor Qin Shihuang ordered the building of a glorious underground palace complex, mirroring his imperial capital near present-day Xi’an, that would last for an eternity.

More than a quarter-century ago, the United Nations Educational, Scientific and Cultural Organization inscribed the Mausoleum of the First Qin Emperor on its World Heritage List – a chronicle of the most fantastic and important cultural and historical sites around the world.

Describing the site, UNESCO experts stated: “Qin (d. 210 B.C.), the first unifier of China, is buried, surrounded by the famous terracotta warriors, at the center of a complex designed to mirror the urban plan of the capital, Xianyang. The small figures are all different; with their horses, chariots and weapons, they are masterpieces of realism and also of great historical interest.”

Calling the site “one of the most fabulous archaeological reserves in the world,” UNESCO experts pointed out the immense value of investigating the technology involved in creating and coloring these lifelike warriors: “The documentary value of a group of hyper realistic sculptures where no detail has been neglected – from the uniforms of the warriors, their arms, to even the horses’ halters – is enormous. Furthermore, the information to be gleaned from the statues concerning the craft and techniques of potters and bronze-workers is immeasurable.”

Archaeological excavations and research conducted since the discovery of the First Emperor’s polychrome army have revealed “the surfaces of the terracotta warriors were initially covered with one or two layers of an East Asian lacquer … obtained from lacquer trees,” according to Hongtao Yan and Jingjing An, scientists at the College of Chemistry and Materials Science, Northwest University, in the Chinese city of Xi’an.

In an article coauthored with Tie Zhou, Yin Xia and Bo Rong, scholars at the Key Scientific Research Base of Ancient Polychrome Pottery Conservation, the State Administration for Cultural Heritage, connected with the Museum of Emperor Qin Shihuang’s Terracotta Army, these researchers stated: “This lacquer was used as a base-coat for the polychrome layers, with one layer of polychrome being placed on top of the lacquer in the majority of cases.”

Credit: Wikipedia

These five scholars likewise revealed in the study, which was published in the Chinese Science Bulletin, that polychrome layers applied to these sculpted imperial guards were composed of natural inorganic pigments and binding media. These pigments have been identified as including cinnabar [HgS], apatite [Ca5(PO4)3OH], azurite [Cu3(CO3)2(OH)2] and malachite [Cu2CO3(OH)2], etc., but the precise composition of binding media used in the painting process had long eluded scientists.

Research aimed at solving this puzzle faced an array of obstacles: only extremely low levels of the proteinaceous binding media in the polychrome layers of Qin Shihuang’s terracotta army have survived after being submerged in almost six meters of water-saturated loess for more than two millennia.

“Following almost 22 centuries of storage under these conditions, the remaining pieces of original polychromy that have survived on the sculptures contain extremely small amounts of the binding media,” the researchers wrote in an article titled “Identification of proteinaceous binding media for the polychrome terracotta army of Emperor Qin Shihuang by MALDI-TOF-MS.”

“A large amount of the polychromy has already been lost through pillaging as well as damage resulting from fires and the long-lasting effect of water,” they explained.

To solve the more than 2000-year-old enigma, these researchers used matrix-assisted laser desorption/ionization time-of-flight mass spectrometry (MALDI-TOF-MS) to identify the binding material. MALDI-TOF-MS offers high levels of sensitivity, requires only a minimal sample pretreatment process and can be used to reliably identify different types of proteinaceous material.

The researchers prepared “artificially aged” model samples by mixing different pigments with either animal glue or an adhesive concocted from free-range chicken eggs. To replicate the processes involved in the degradation of the pigments and binding media of the actual terracotta warriors, the model samples were buried in loess soil at a depth of one meter for one year.

Historical samples of the polychrome terracotta army were obtained from the Museum of Emperor Qin Shihuang’s Terracotta Army in Xi’an to facilitate a comparative analysis.

Proteins were extracted from the model samples and from the historical samples, and the extracts were treated in an ultrasonic bath. The mixtures were then centrifuged and the supernatants collected. For the polychrome layers taken from the historical samples, EDTA complexation combined with dialysis was used to eliminate interference. The extracted proteins were hydrolyzed with sequencing-grade trypsin to generate peptide fragments.
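The trypsin step is what gives each protein a reproducible fingerprint: trypsin cuts on the C-terminal side of lysine (K) and arginine (R), usually not when the next residue is proline (P), so a given protein always yields the same set of peptides. The sketch below performs that digestion in silico and computes monoisotopic [M+H]⁺ masses of the kind a MALDI-TOF spectrum reports. It assumes unmodified residues, no missed cleavages, and a made-up collagen-like sequence; real collagen in animal glue carries hydroxyproline and other modifications that a full analysis would account for.

```python
# Monoisotopic residue masses (Da) for the 20 standard amino acids.
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER = 18.010565   # added once per peptide for the free termini
PROTON = 1.007276   # for the singly protonated [M+H]+ ion


def tryptic_digest(sequence: str) -> list[str]:
    """Cleave after K or R unless the next residue is P (no missed cleavages)."""
    peptides, start = [], 0
    for i, residue in enumerate(sequence):
        next_res = sequence[i + 1] if i + 1 < len(sequence) else ""
        if residue in "KR" and next_res != "P":
            peptides.append(sequence[start:i + 1])
            start = i + 1
    if start < len(sequence):
        peptides.append(sequence[start:])
    return peptides


def mh_mass(peptide: str) -> float:
    """Monoisotopic [M+H]+ mass of an unmodified peptide."""
    return sum(RESIDUE_MASS[r] for r in peptide) + WATER + PROTON


if __name__ == "__main__":
    # Hypothetical collagen-like fragment, for illustration only.
    seq = "GPAGPRGEKGPPGAKGLPGSR"
    for pep in tryptic_digest(seq):
        print(f"{pep:<12} {mh_mass(pep):10.4f}")
```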

The binding media of the historical and model samples were analyzed by MALDI-TOF-MS, and the resulting peptide mass fingerprints of each sample were compared.

The peptide mass fingerprints of the historical samples were very similar to those of the animal glue model samples, having most peak masses in common.
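Comparing two fingerprints comes down to asking how many peaks in one spectrum have a partner in the other within a mass tolerance. The sketch below shows one simple way to score that overlap; the 0.2 Da tolerance and the peak lists are invented for illustration, and the article does not say which scoring procedure the authors used.

```python
import bisect


def count_matches(query: list[float], reference: list[float],
                  tol_da: float = 0.2) -> int:
    """Number of query peaks with at least one reference peak within tol_da."""
    ref = sorted(reference)
    matched = 0
    for mz in query:
        # First reference peak >= mz - tol; it matches if it is also <= mz + tol.
        i = bisect.bisect_left(ref, mz - tol_da)
        if i < len(ref) and ref[i] <= mz + tol_da:
            matched += 1
    return matched


def shared_fraction(query: list[float], reference: list[float],
                    tol_da: float = 0.2) -> float:
    """Fraction of query peaks matched in the reference (0.0 to 1.0)."""
    if not query:
        return 0.0
    return count_matches(query, reference, tol_da) / len(query)


if __name__ == "__main__":
    # Invented peak lists (m/z), for illustration only.
    historical = [836.4, 1105.6, 1459.7, 1562.8, 2056.0]
    models = {
        "animal glue": [836.5, 1105.5, 1459.7, 1690.9, 2056.1],
        "egg": [1001.2, 1345.6, 1773.9, 1858.0, 2284.1],
    }
    for name, peaks in models.items():
        print(f"{name:<12} shared fraction: {shared_fraction(historical, peaks):.2f}")
```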

The peptide mass fingerprint data revealed that animal glue was present in the polychrome layers of Qin Shihuang’s terracotta army, even though this proteinaceous binding material had undergone significant changes in protein content during the two millennia the terracotta army spent underground. The binding medium could be determined because the peptide mass fingerprint of the animal glue proteins could be identified with certainty.

“To the best of our knowledge,” wrote the five researchers, “this work represents the first account of the proteinaceous binding media from a 2200-year-old historical sample in China being identified by MALDI-TOF-MS.”

Comments Off on 2000 year old mystery of the binding media in China’s polychrome terracotta army solved

Nature’s strongest super glue comes unstuck

July 22nd, 2014

By Newcastle University.

More than 150 years after it was first described by Darwin, scientists are finally uncovering the secrets behind the super strength of barnacle glue.

Still far better than anything we have been able to develop synthetically, barnacle glue – or cement – sticks to any surface, under any conditions.

But exactly how this superglue of superglues works has remained a mystery – until now.

An international team of scientists led by Newcastle University, UK, and funded by the US Office of Naval Research, have shown for the first time that barnacle larvae release an oily droplet to clear the water from surfaces before sticking down using a phosphoprotein adhesive.

The findings, published this week in the prestigious academic journal Nature Communications, could pave the way for the development of novel synthetic bioadhesives for use in medical implants and micro-electronics, says author Dr Nick Aldred. The research will also be important in the production of new anti-fouling coatings for ships.

“It’s over 150 years since Darwin first described the cement glands of barnacle larvae and little work has been done since then,” says Dr Aldred, a research associate in the School of Marine Science and Technology at Newcastle University, one of the world’s leading institutions in this field of research.

“We’ve known for a while there are two components to the bioadhesive but until now, it was thought they behaved a bit like some of the synthetic glues – mixing before hardening. But that still left the question, how does the glue contact the surface in the first place if it is already covered with water? This is one of the key hurdles to developing glues for underwater applications.

“Advances in imaging techniques, such as 2-photon microscopy, have allowed us to observe the adhesion process and characterise the two components. We now know that these two substances play very different roles – one clearing water from the surface and the other cementing the barnacle down.

“The ocean is a complex mixture of dissolved ions, the pH varies significantly across geographical areas and, obviously, it’s wet. Yet despite these hostile conditions, barnacle glue is able to withstand the test of time.

“It’s an incredibly clever natural solution to the problem of how to deal with a water barrier on a surface. It will change the way we think about developing bio-inspired adhesives that are safe and already optimised to work in conditions similar to those in the human body, as well as marine paints that stop barnacles from sticking.”

Barnacles have two larval stages: the nauplius and the cyprid. The nauplius is common to most crustacea; it swims freely once it hatches from the egg, feeding in the plankton.

The final larval stage, however, is the cyprid, which is unique to barnacles. It investigates surfaces, selecting one that provides suitable conditions for growth. Once it has decided to attach permanently, the cyprid releases its glue and cements itself to the surface where it will live out the rest of its days.

“The key here is the technology. With these new tools we are able to study processes in living tissues as they happen. We can get compositional and molecular information by other methods, but they don’t explain the mechanism. There’s no substitute for seeing things with your own eyes,” explains Dr Aldred.

“In the past, the strong lasers used for optically sectioning biological samples have typically killed the samples, but now technology allows us to study life processes exactly as they would happen in nature.”

Credit: Physical Chemistry Chemical Physics

Comments Off on Nature’s strongest super glue comes unstuck

People in leadership positions may sacrifice privacy for security

July 22nd, 2014

By Penn State University.

People with higher job status may be more willing to compromise privacy for security reasons and also be more determined to carry out those decisions, according to researchers.

This preoccupation with security may shape policy and decision-making in areas ranging from terrorism to investing, and perhaps cloud other options, said Jens Grossklags, assistant professor of information sciences and technology, Penn State.

People in leadership roles tend to be more decisive about guarding security, often at the expense of privacy.

Image: © iStock Photo Devrimb


“What may get lost in the decision-making process is that one can enhance security without the negative impact on privacy,” said Grossklags. “It’s more of a balance, not a tradeoff, to establish good practices and sensible rules on security without negatively impacting privacy.”

In two separate experiments, the researchers examined how people with high-status job assignments evaluated security and privacy and how impulsive or patient they were in making decisions. The researchers found that participants who were randomly placed in charge of a project tended to become more concerned with security issues. In a follow-up experiment, people appointed as supervisors also showed a more patient, long-term approach to decision-making, added Grossklags, who worked with Nigel J. Barradale, assistant professor of finance, Copenhagen Business School.

The findings may explain why people who are in leadership roles tend to be more decisive about guarding security, often at the expense of privacy, according to the researchers. In the real world, high-status decision-makers would include politicians and leaders of companies and groups.

“Social status shapes how privacy and security issues are settled in the real world,” said Grossklags. “Hopefully, by calling attention to these tendencies, decision makers can rebalance their priorities on security and privacy.”

The researchers, who presented their findings today (July 16) at the Privacy Enhancing Technologies Symposium in Amsterdam, Netherlands, used two groups of volunteers in the studies. In the first experiment, they randomly assigned each of 146 participants the role of either supervisor or worker to determine how those assignments changed the way participants approached security or privacy during a task.

People who were appointed supervisors showed a significant increase in their concern for security. Participants assigned worker-level status expressed higher concern for privacy, although the difference was not significant.

A second experiment, with 120 participants, examined whether patience was correlated with high-status assignments. The researchers asked the participants how long they would delay accepting a prize from a bank if the size of that prize increased over time.

For example, the participants were asked how much money they would need to receive immediately to make them indifferent to receiving $80 in two months. As in the previous experiment, the researchers divided the group into high-status supervisors and low-status workers.
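An indifference answer like this can be converted into an implied discount rate. If someone is indifferent between X dollars now and $80 in two months, their two-month discount factor is X/80, and if discounting is assumed to be constant per month (exponential), the monthly factor is (X/80)^(1/2). The sketch below does that conversion; the indifference amounts are hypothetical, and exponential discounting is an assumption of this example, not a model stated in the study.

```python
def monthly_discount_factor(now_amount: float, later_amount: float,
                            months: float) -> float:
    """Per-month factor d such that now_amount = later_amount * d ** months."""
    if not (0 < now_amount <= later_amount) or months <= 0:
        raise ValueError("expect 0 < now_amount <= later_amount and months > 0")
    return (now_amount / later_amount) ** (1.0 / months)


def annualized_rate(monthly_factor: float) -> float:
    """Equivalent annual discount rate r, defined by 1 / (1 + r) = monthly_factor ** 12."""
    return monthly_factor ** -12 - 1.0


if __name__ == "__main__":
    # Hypothetical answers from a patient and an impatient participant.
    for label, x in [("patient", 76.0), ("impatient", 64.0)]:
        d = monthly_discount_factor(x, 80.0, months=2)
        print(f"{label:>9}: monthly factor {d:.3f}, annual rate {annualized_rate(d):.0%}")
```

A lower implied factor, meaning a bigger sacrifice to get the money now, corresponds to the more impulsive pattern the researchers describe among low-status workers.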

The low-status workers were more impulsive: they were willing to sacrifice 35 percent more to receive the prize money now rather than later. The supervisors, on the other hand, were more willing to wait, a sign that they would be more patient in making decisions with long-term consequences such as privacy and security.

The Experimental Social Science Laboratory at the University of California at Berkeley supported this work.

Comments Off on People in leadership positions may sacrifice privacy for security

Sharpest map of Mars surface properties

July 18th, 2014

By Arizona State University.

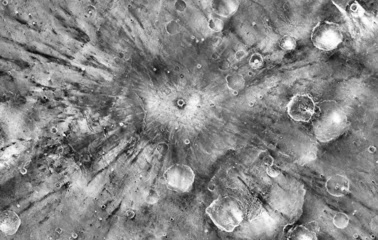

A heat-sensing camera designed at Arizona State University has provided data to create the most detailed global map yet made of Martian surface properties.

The map uses data from the Thermal Emission Imaging System (THEMIS), a nine-band visual and infrared camera on NASA’s Mars Odyssey orbiter. A version of the map optimized for scientific researchers is available at the U.S. Geological Survey (USGS).

The new Mars map was developed by the Geological Survey’s Robin Fergason at the USGS Astrogeology Science Center in Flagstaff, Arizona, in collaboration with researchers at ASU’s Mars Space Flight Facility. The work reflects the close ties between space exploration efforts at Arizona universities and the U.S. Geological Survey.

A small impact crater on Mars named Gratteri, 4.3 miles (6.9 km) wide, lies at the center of large dark streaks. Unlike an ordinary daytime photo, this nighttime image shows how warm various surface areas are. Brighter tones mean warmer temperatures, which indicate areas with rockier surface materials. Darker areas indicate cooler and dustier terrain. For example, the bright narrow rings scattered across the image show where rocks are exposed on the uplifted rims of impact craters. Broad, bright areas show expanses of bare rock and durable crust. Fine-grain materials, such as dust and sand, show up as dark areas, most notably in the streaky rays made of fine material flung away in the aftermath of the meteorite’s impact.

Surface properties tell geologists about the physical nature of a planet or moon’s surface. Is a particular area coated with dust, and if so, how thick is it likely to be? Where are the outcrops of bedrock? How loose are the sediments that fill this crater or that valley? A map of surface properties lets scientists begin to answer questions such as these.

Darker means cooler and dustier

The new map uses nighttime temperature images to derive the “thermal inertia” for areas of Mars, each the size of a football field. Thermal inertia is a calculated value that represents how fast a surface heats up and cools off. As day and night alternate on Mars, loose, fine-grain materials such as sand and dust change temperature quickly and thus have low values of thermal inertia. Bedrock represents the other end of the thermal inertia range: because it cools off slowly at night and warms up slowly by day, it has a high thermal inertia.
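Thermal inertia has a compact definition, I = √(kρc), where k is thermal conductivity, ρ is bulk density and c is specific heat capacity. For a uniform surface driven by a sinusoidal daily heat flux of amplitude F, the classic half-space solution gives a surface temperature swing of amplitude F/(I√ω), where ω is the angular frequency of the day/night cycle, so low-inertia dust swings far more than bedrock. The material properties below are rough values assumed for illustration, not THEMIS results, and the 100 W/m² flux amplitude is a made-up round number.

```python
import math

SOL_S = 88_775.0  # length of a Mars solar day (sol) in seconds


def thermal_inertia(k_w_mk: float, rho_kg_m3: float, c_j_kgk: float) -> float:
    """I = sqrt(k * rho * c), in J m^-2 K^-1 s^-1/2."""
    return math.sqrt(k_w_mk * rho_kg_m3 * c_j_kgk)


def surface_temp_amplitude_k(flux_amp_w_m2: float, inertia: float,
                             period_s: float = SOL_S) -> float:
    """Surface temperature amplitude for a sinusoidal surface heat flux on a
    uniform half-space: dT = F / (I * sqrt(omega))."""
    omega = 2.0 * math.pi / period_s
    return flux_amp_w_m2 / (inertia * math.sqrt(omega))


if __name__ == "__main__":
    # Illustrative (assumed) properties, not measured Martian values.
    materials = {
        "fine dust": (0.005, 1300.0, 700.0),
        "coarse sand": (0.05, 1600.0, 700.0),
        "bedrock": (2.0, 2800.0, 800.0),
    }
    for name, (k, rho, c) in materials.items():
        ti = thermal_inertia(k, rho, c)
        dt = surface_temp_amplitude_k(100.0, ti)
        print(f"{name:<12} I = {ti:7.0f}   swing amplitude ~ {dt:6.1f} K")
```

This linear model ignores radiative cooling and the atmosphere, so it exaggerates the swing for very low inertia; only the relative ordering (dust swings most, bedrock least) should be read from it.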

“Darker areas in the map are cooler at night, have a lower thermal inertia and likely contain fine particles, such as dust, silt or fine sand,” Fergason says. The brighter regions are warmer, she explains, and have surfaces with higher thermal inertia. These consist perhaps of coarser sand, surface crusts, rock fragments, bedrock or combinations of these materials.

The designer and principal investigator for the THEMIS camera is Philip Christensen, Regents’ Professor of Geological Sciences in the School of Earth and Space Exploration, part of the College of Liberal Arts and Sciences on the Tempe campus. (Four years ago, Christensen and ASU researchers used daytime THEMIS images to create a global Mars map depicting the planet’s landforms, such as craters, volcanoes, outflow channels, landslides, lava flows and other features.)

“A tremendous amount of effort has gone into this great global product, which will serve engineers, scientists and the public for many years to come,” Christensen says. “This map provides data not previously available, and it will enable regional and global studies of surface properties. I’m eager to use it to discover new insights into the recent surface history of Mars.”

As Fergason notes, the map has an important practical side. “NASA used THEMIS images to find safe landing sites for the Mars Exploration Rovers in 2004, and for Curiosity, the Mars Science Laboratory rover, in 2012,” she says. “THEMIS images are now helping NASA select a landing site for its next Mars rover in 2020.”


‘Nanocamera’ takes pictures at distances smaller than light’s own wavelength

July 18th, 2014

By Alton Parrish.

How is it possible to record optically encoded information for distances smaller than the wavelength of light?

Researchers at the University of Illinois at Urbana-Champaign have demonstrated that an array of novel gold, pillar-bowtie nanoantennas (pBNAs) can be used like traditional photographic film to record light for distances that are much smaller than the wavelength of light (for example, distances less than ~600 nm for red light). A standard optical microscope acts as a “nanocamera” whereas the pBNAs are the analogous film.

“Unlike conventional photographic film, the effect (writing and curing) is seen in real time,” explained Kimani Toussaint, an associate professor of mechanical science and engineering, who led the research. “We have demonstrated that this multifunctional plasmonic film can be used to create optofluidic channels without walls. Because simple diode lasers and low-input power densities are sufficient to record near-field optical information in the pBNAs, this increases the potential for optical data storage applications using off-the-shelf, low-cost, read-write laser systems.”

“Particle manipulation is the proof-of-principle application,” stated Brian Roxworthy, first author of the group’s paper, “Multifunctional Plasmonic Film for Recording Near-Field Optical Intensity,” published in the journal Nano Letters. “Specifically, the trajectory of trapped particles in solution is controlled by the pattern written into the pBNAs. This is equivalent to creating channels on the surface for particle guiding except that these channels do not have physical walls (in contrast to those optofluidics systems where physical channels are fabricated in materials such as PDMS).”

To demonstrate the technique, the team recorded various patterns, including the University’s “Block I” logo and a brief animation of a stick figure walking, that were either holographically transferred to the pBNAs or laser-written using steering mirrors.

Image of the Illinois “I” logo recorded by the plasmonic film; each bar in the letter is approximately 6 micrometers.

“We wanted to show the analogy between what we have made and traditional photographic film,” Toussaint added. “There’s a certain cool factor with this. However, we know that we’re just scratching the surface since the use of plasmonic film for data storage at very small scales is just one application. Our pBNAs allow us to do so much more, which we’re currently exploring.”

The researchers noted that the fundamental bit size is currently set by the 425 nm spacing of the antennas. However, the bit size can be straightforwardly reduced, and the film’s storage density increased, by fabricating arrays with smaller spacing and smaller antennas, as well as by using a more tightly focusing lens for recording.

“For a standard Blu-ray/DVD disc size, that amounts to a total of 28.6 gigabytes per disc,” Roxworthy added. “With modifications to array spacing and antenna features, it’s feasible that this value can be scaled to greater than 75 gigabytes per disc. Not to mention, it can be used for other exciting photonic applications, such as lab-on-chip nanotweezers or sensing.”
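The quoted figures can be related with a back-of-the-envelope scaling. Assuming one bit per antenna on a square grid, the number of bits that fit on a disc of fixed area goes as one over the square of the spacing. This ignores the other levers the researchers mention (smaller antennas, tighter focusing) and is a sketch, not their calculation; the 300 nm spacing is a hypothetical example.

```python
import math

def scaled_capacity_gb(capacity_gb: float, spacing_nm: float, new_spacing_nm: float) -> float:
    """Assumes one bit per antenna on a square grid, so bits on a fixed
    disc area scale as 1 / spacing**2."""
    return capacity_gb * (spacing_nm / new_spacing_nm) ** 2

def spacing_for_capacity(capacity_gb: float, spacing_nm: float, target_gb: float) -> float:
    """Inverts the same (assumed) scaling: spacing needed to reach target_gb."""
    return spacing_nm * math.sqrt(capacity_gb / target_gb)

# Figures quoted in the article: 425 nm spacing gives 28.6 GB per disc.
needed = spacing_for_capacity(28.6, 425.0, 75.0)
print(f"Spacing for 75 GB: about {needed:.0f} nm")
# Hypothetical 300 nm spacing, for comparison.
print(f"Capacity at 300 nm: about {scaled_capacity_gb(28.6, 425.0, 300.0):.1f} GB")
```

Under this assumption, reaching the 75-gigabyte figure would require a spacing of roughly 262 nm, and a hypothetical 300 nm spacing would give about 57 gigabytes.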

“In our new technique, we use controlled heating via laser illumination of the nanoantennas to change the plasmonic response instantaneously, which shows an innovative but easy way to fabricate spatially changing plasmonic structures and thus opens a new avenue in the field of nanotech-based biomedical technologies and nano optics,” said Abdul Bhuiya, a co-author and member of the research team.

In addition to Bhuiya and Roxworthy, who earned his PhD in electrical and computer engineering at Illinois and is now a National Research Council postdoctoral researcher at the National Institute of Standards and Technology (NIST), the research team includes postdoctoral researchers V. V. Gopala K. Inavalli and Hao Chen. All are members of Toussaint’s Laboratory for Photonics Research of Bio/nano Environments (PROBE) at Illinois. Toussaint is currently on sabbatical as a Martin Luther King, Jr. Visiting Associate Professor at the Massachusetts Institute of Technology.


What is Bitcoin? Will digital currency replace paper money?

July 18th, 2014

By Penn State University.

Credit: PSU

The questions Benjamin Franklin raised about the nature of money and its regulation in society are still relevant. It’s easy to imagine that the famously prescient and witty statesman would have an opinion or two about the controversial emerging currencies of today’s digital economy.

Could digital currency be poised to replace paper money?

The pursuit of an independent digital currency began in the early 1990s, explains John Jordan, clinical professor of supply chain and information systems at Penn State. Today’s big contender, Bitcoin, was introduced in 2008 by a person (or group of people) known only by the pseudonym Satoshi Nakamoto.

Bitcoin is a digital payment network that allows users to engage in direct transactions without the oversight of a banking organization or government. It’s based on open source software, meaning the programming is published publicly, and any developer around the world can download, review or modify the source code.

Bitcoin is not technically a currency, though it functions like one, says Jordan. That’s not just his opinion; the IRS has reached the same conclusion and treats Bitcoin as property rather than currency for federal tax purposes.

Jordan compares Bitcoin to poker chips: “Poker chips can be used as a stand-in for money in certain situations, such as in a casino, and they can also be exchanged for money, but they aren’t money; their value is dependent on the casino system itself.”

“Emerging currency” of the 1700s: A three-pence note from Pennsylvania, signed by Thomas Wharton, the colony’s president, and printed by Ben Franklin and David Hall.

Image: public domain

Bitcoin users are represented anonymously on the peer-to-peer network, and each user has a digital “wallet” that holds their bitcoins. (The accepted practice is to capitalize the word when referring to the concept and community, and to use lower-case when referring to the system’s tradable units.) When Bitcoin transactions between digital wallets occur, those transactions must be verified by other Bitcoin users and added to the shared, public ledger.

“Transactions are verified through the process of cryptography,” explains Jordan, “essentially, very difficult mathematical algorithms solvable only with powerful computing systems. That’s what keeps the system secure and the ledger complete.”

This process of verifying transactions and adding them to the network ledger is called “mining,” and the activity of mining is also what creates more bitcoin. “Essentially, I can create more bitcoin by doing the work of the Bitcoin community: processing transactions,” Jordan says. The combined computing power of the miners on this peer-to-peer network is not just fast, he adds; it is a great deal faster than the world’s fastest supercomputer.
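The “very difficult mathematical” work Jordan describes is, in Bitcoin’s case, essentially a brute-force search: miners repeatedly hash candidate block data with different nonces until the result meets a target. The toy sketch below uses a single SHA-256 over a string and a “leading hex zeros” difficulty, which is a simplification (Bitcoin double-hashes an 80-byte block header and compares it with a numeric target); the block data string and function names are hypothetical.

```python
import hashlib

def mine(block_data: str, difficulty: int, max_nonce: int = 10_000_000):
    """Toy proof of work: find a nonce whose SHA-256 of (data + nonce)
    starts with `difficulty` hex zeros. Returns (nonce, digest) or None."""
    prefix = "0" * difficulty
    for nonce in range(max_nonce):
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
    return None

def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution costs a single hash."""
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)

# Hypothetical transaction data, for illustration only.
result = mine("alice pays bob 1", difficulty=4)
print(result)
```

Finding a valid nonce at difficulty 4 takes about 65,536 hashes on average, while `verify` needs only one, which is why other users can check a miner’s work cheaply.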

If you find any of this mind-boggling to follow, you’re not alone; its inherent complexity and technical jargon have given rise to countless “Bitcoin for dummies” articles, television drama plots, and even stand-up comedy skits. Some university campuses, including Penn State, now even offer active Bitcoin clubs.

But let’s get down to dollars and cents: what about Bitcoin’s exchange rate? Says Jordan, “As computers gain more power and the math algorithms are solved more quickly, the system adjusts by making problems more difficult to solve, to control how much bitcoin is created. Consequently, Bitcoin’s exchange rate with the dollar fluctuates and can be tracked in real time online.”
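The adjustment Jordan describes can be sketched with Bitcoin’s retargeting rule in simplified form: every 2,016 blocks, difficulty is rescaled so that blocks arrive about every ten minutes again, with the change limited to a factor of four. The real software adjusts a 256-bit target rather than a floating-point “difficulty” number, so treat this as an approximation. This mechanism governs how fast new bitcoin is issued; the exchange rate itself is set by trading on exchanges.

```python
TARGET_BLOCK_TIME_S = 600   # Bitcoin aims for one block every ten minutes
RETARGET_INTERVAL = 2016    # number of blocks between difficulty adjustments

def next_difficulty(current_difficulty: float, actual_timespan_s: float) -> float:
    """Simplified retarget: rescale difficulty so the next 2016 blocks take
    about two weeks. The measured timespan is clamped to a factor of four
    in either direction, mirroring Bitcoin's limit on each adjustment."""
    expected = TARGET_BLOCK_TIME_S * RETARGET_INTERVAL
    clamped = min(max(actual_timespan_s, expected / 4), expected * 4)
    return current_difficulty * expected / clamped
```

If the last 2,016 blocks took one week instead of two, `next_difficulty(d, 7 * 24 * 3600)` returns `2 * d`: faster computers are met with harder problems.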

All bitcoins in existence were originally created through mining, but today, users without the computational power to mine can purchase bitcoins on online exchanges and even at some special ATMs.

One of the primary advantages of Bitcoin, Jordan adds, is its lack of oversight by a government or financial organization. Unlike traditional payment methods such as credit cards or money orders, transactions on the Bitcoin network often carry no fees, or at least much lower ones.

“In concept, this is great news for small business owners,” says Jordan. “In a low-margin business, those three percent charges on credit card transactions can really add up.”

And Bitcoin can provide a huge advantage for foreign workers sending money home to families still in their home countries. “In this way, it’s really an artifact of globalization,” says Jordan. “Billions of dollars in remittances are transferred every year, and when doing it by Bitcoin, no money is lost to fees and service charges.”

Beyond the potential savings in transfer fees, many people see an ideological advantage to the anonymity of the Bitcoin system and its existence outside of governmental oversight.

“A lot of people think the government really doesn’t need to know what we’re doing,” says Jordan. Unfortunately, the anonymity of crypto-currencies perfectly serves the needs of the so-called ‘dark net,’ the online trade in drugs and other illegal goods. In 2013, FBI agents busted Silk Road, the web’s biggest black market in drugs, and seized more than $28 million worth of bitcoins.

“Since it’s a user’s private key that performs a transaction, and the person behind the key is invisible, Bitcoin is an attractive choice for illicit transactions,” notes Jordan. Regardless of its advantages for less nefarious business activities, Jordan is skeptical that Bitcoin will grow enough in popularity to gain widespread acceptance.

“Bitcoin is a really clever system, and it’s useful in many ways,” he says, “but it’s still too volatile and it’s impossible to imagine it existing outside of a computational environment.”

That’s one of its potential downfalls. “It’s good at what it does, but it’s early and the system was not designed to scale,” Jordan says. “Interestingly, economists speculate that, although Bitcoin may ultimately fail as money, it could succeed as something else — like a method for documenting chain of custody. For the time being, Bitcoin remains viable only in selected scenarios. While it may ultimately decline in popularity or disappear completely, other cryptography-based systems will evolve to capitalize on the opportunity.”

As Benjamin Franklin saw it, “Without continual growth and progress, such words as improvement, achievement, and success have no meaning.”

John Jordan is clinical professor of supply chain and information systems in Penn State’s Smeal College of Business.


Bizarre Blast Nearby Mimics Universe’s Most Ancient Stars

July 12th, 2014

By ESA.

ESA’s XMM-Newton observatory has helped to uncover how the Universe’s first stars ended their lives in giant explosions.

Astronomers studied the gamma-ray burst GRB130925A – a flash of very energetic radiation streaming from a star in a distant galaxy – using space- and ground-based observatories.

They found the culprit producing the burst to be a massive star, known as a blue supergiant. These huge stars are quite rare in the relatively nearby Universe where GRB130925A is located, but are thought to have been very common in the early Universe, with almost all of the very first stars having evolved into them over the course of their short lives.

Artist’s impression of an exploding blue supergiant star.

Credit: NASA/Swift/A. Simonnet, Sonoma State Univ.

But unlike other blue supergiants we see nearby, GRB130925A’s progenitor star contained very little in the way of elements heavier than hydrogen and helium. The same was true for the first stars to form in the Universe, making GRB130925A a remarkable analogue for similar explosions that occurred just a few hundred million years after the Big Bang.

“There have been several theoretical studies predicting what a gamma-ray burst produced by a primordial star would look like,” says Luigi Piro of the Istituto di Astrofisica e Planetologia Spaziali in Rome, Italy, and lead author of a new paper appearing in The Astrophysical Journal Letters. “With our discovery, we’ve shown that these predictions are likely to be correct.”

Astronomers believe that primordial stars were very large, perhaps several hundred times the mass of the Sun. This large bulk would then have fuelled ultralong gamma-ray bursts lasting several thousand seconds, up to a hundred times the length of a ‘normal’ gamma-ray burst.

Indeed, GRB130925A had a very long duration of around 20 000 seconds, but it also exhibited additional peculiar features not previously spotted in a gamma-ray burst: a hot cocoon of gas emitting X-ray radiation and a strangely thin wind.

Both of these phenomena allowed astronomers to implicate a blue supergiant as the stellar progenitor. Crucially, they give information on the proportion of the star composed of elements other than hydrogen and helium, elements that astronomers group together under the term ‘metals’.

Artist’s impression of a gamma-ray burst.

Credit: ESA, illustration by ESA/ECF

After the Big Bang, the Universe was dominated by hydrogen and helium and therefore the first stars that formed were very metal-poor. However, these first stars made heavier elements via nuclear fusion and scattered them throughout space as they evolved and exploded.

This process continued as each new generation of stars formed, and thus stars in the nearby Universe are comparatively metal-rich.

Finding GRB130925A’s progenitor to be a metal-poor blue supergiant is significant, offering the chance to explore an analogue of one of those very first stars at close quarters. Dr Piro and his colleagues speculate that it might have formed out of a pocket of primordial gas that somehow survived unaltered for billions of years.

“The quest to understand the first stars that formed in the Universe some 13 billion years ago is one of the great challenges of modern astrophysics,” notes Dr Piro.

“Detecting one of these stars directly is beyond the reach of any present or future observatory due to the immense distances involved.

“But it should ultimately be possible to find them as they explode at the end of their lives, producing powerful flashes of radiation.”

As a nearby counterpart, however, GRB130925A has offered astronomers the opportunity to gain some insight into these first stars today.

“XMM-Newton’s space-based location and sensitive X-ray instruments were key to observing the later stages of this blast, several months after it first appeared,” says ESA’s XMM-Newton project scientist Norbert Schartel.

“At these times, the fingerprints of the progenitor star were clearer, but the source itself was so dim that only XMM-Newton’s instruments were sensitive enough to take the detailed measurements needed to characterise the explosion.”

A number of space- and ground-based missions were involved in the discovery and characterisation of GRB130925A. Alongside the XMM-Newton observations, the astronomers involved in this study also used X-ray data gathered at different times with NASA’s Swift Burst Alert Telescope, and radio data from the CSIRO’s Australia Telescope Compact Array.

“Combining these observations was crucial to get a full picture of this event,” added Eleonora Troja of NASA’s Goddard Space Flight Center in Maryland, USA, a co-author of the paper.

“This new understanding of GRB130925A means that we now have strong indications how a primordial explosion might look – and therefore what to search for in the distant Universe,” says Dr Schartel.

The search will require powerful facilities. The NASA/ESA/CSA James Webb Space Telescope, an infrared successor to the Hubble Space Telescope due for launch in 2018, and ESA’s planned Athena mission, a large X-ray observatory following on from XMM-Newton in 2028, will both have key roles to play.

BACKGROUND INFORMATION

“A Hot Cocoon in the Ultralong GRB 130925A: Hints of a PopIII-like Progenitor in a Low Density Wind Environment” by L. Piro et al. is published in The Astrophysical Journal Letters.

GRB130925A triggered the Swift Burst Alert Telescope on 25 September 2013 at 04:11:24 GMT. Early gamma-ray emission was detected by Integral, and the burst was subsequently observed by the Fermi Gamma-Ray Burst Monitor, Konus-Wind, Swift’s X-ray telescope, Chandra, the Gamma-Ray Burst Optical/Near-Infrared Detector, the Hubble Space Telescope and the CSIRO’s Australia Telescope Compact Array. GRB130925A was located in a galaxy so far away that its light has been travelling for 3.9 billion years.

ESA’s XMM-Newton was launched in December 1999. The largest scientific satellite to have been built in Europe, it is also one of the most sensitive X-ray observatories ever flown. More than 170 wafer-thin, cylindrical mirrors direct incoming radiation into three high-throughput X-ray telescopes. XMM-Newton’s orbit takes it almost a third of the way to the Moon, allowing for long, uninterrupted views of celestial objects.


The existence of two Goldilocks planets is proven false

July 4th, 2014

By Penn State University.

Mysteries about controversial signals coming from a dwarf star considered to be a prime target in the search for extraterrestrial life now have been solved in research led by scientists at Penn State University.

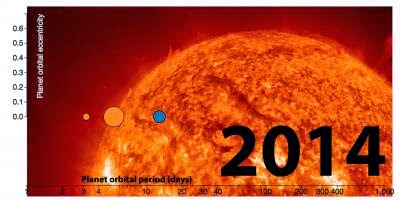

“This result is exciting because it explains, for the first time, all the previous and somewhat conflicting observations of the intriguing dwarf star Gliese 581, a faint star with less mass than our Sun that is just 20 light years from Earth,” said lead author Paul Robertson, a postdoctoral fellow at Penn State who is affiliated with Penn State’s Center for Exoplanets and Habitable Worlds. As a result of this research, exactly three planets are now confirmed to orbit this dwarf star.

“We also have proven that some of the other controversial signals are not coming from two additional proposed Goldilocks planets in the star’s habitable zone, but instead are coming from activity within the star itself,” said Suvrath Mahadevan, an assistant professor of astronomy and astrophysics at Penn State and a coauthor of the research paper. None of the three remaining planets, whose existence the research confirms, is solidly inside this star system’s habitable zone, where liquid water could exist on a rocky planet like Earth.

The scientists have proven, for the first time, that some of the signals, which were suspected to be coming from two planets orbiting the star at a distance where liquid water could potentially exist, actually are coming from events inside the star itself, not from so-called “Goldilocks planets” where conditions are just right for supporting life.

The research team made its discovery by analyzing Doppler shifts in existing spectroscopic observations of the star Gliese 581 obtained with the ESO HARPS and Keck HIRES spectrographs. The Doppler shifts that the scientists focused on were the ones most sensitive to magnetic activity. Using careful analyses and techniques, they boosted the signals of the three innermost planets around the star, but “the signals attributed to the existence of the two controversial planets disappeared, becoming indistinguishable from measurement noise,” Mahadevan said. “The disappearance of these two signals after correcting for the star’s activity indicates that these signals in the original data must have been produced by the activity and rotation of the star itself, not by the presence of these two suspected planets.”
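The Doppler shifts in question are tiny changes in the wavelengths of the star’s spectral lines as it is tugged back and forth. The basic (non-relativistic) conversion between a line shift and a radial velocity is sketched below; the 600 nm line is a hypothetical example, real pipelines for spectrographs such as HARPS and HIRES combine thousands of lines, and the study’s activity correction is not reproduced here.

```python
C_M_PER_S = 299_792_458.0  # speed of light, m/s

def radial_velocity(observed_nm: float, rest_nm: float) -> float:
    """Non-relativistic Doppler formula: v = c * (lambda_obs - lambda_rest) / lambda_rest.
    Positive values mean the star is receding (lines shifted to the red)."""
    return C_M_PER_S * (observed_nm - rest_nm) / rest_nm

def wavelength_shift_nm(velocity_m_s: float, rest_nm: float) -> float:
    """Inverse relation: the line shift produced by a given radial velocity."""
    return rest_nm * velocity_m_s / C_M_PER_S

# Hypothetical example: a 1 m/s wobble seen in a line at 600 nm.
shift = wavelength_shift_nm(1.0, 600.0)
print(f"1 m/s shifts a 600 nm line by {shift:.2e} nm")
```

A wobble of 1 m/s moves a 600 nm line by only about 2 × 10⁻⁶ nm, which is why processes on the star itself, such as magnetic activity, can mimic or mask a planet’s signal.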

“Our improved detection of the real planets in this system gives us confidence that we are now beginning to sufficiently eliminate Doppler signals from stellar activity to discover new, habitable exoplanets, even when they are hidden beneath stellar noise,” said Robertson. “While it is unfortunate to find that two such promising planets do not exist, we feel that the results of this study will ultimately lead to more Earth-like planets.”

The study is published by the journal Science in its early online Science Express edition on July 3, 2014, and also in a later print edition of the journal.

Credit: NASA/Penn State University

Older stars such as Gliese 581, an “M dwarf” star in the constellation Libra about one-third the mass of our Sun, have until now been considered highly attractive targets in the search for extraterrestrial life because they are generally less active and so are better targets for Doppler observations. “The new result from our research highlights a source of astrophysical noise even with old M dwarfs because the harmonics of the star’s rotation can be in the same range as that of its habitable zone, raising the risk of false detections of nonexistent planets,” Mahadevan said.
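Mahadevan’s point about harmonics can be made concrete: a signal tied to stellar rotation can appear not only at the rotation period P_rot but also at integer fractions of it, P_rot/n. A simple screen compares a candidate planet’s period with those harmonics. The rotation period, candidate periods and 5% tolerance below are hypothetical, and this check is an illustration only, not the method of the study, which corrected the Doppler data for stellar activity.

```python
def rotation_harmonics(rotation_period_days: float, count: int) -> list[float]:
    """Periods P_rot / n for n = 1..count."""
    return [rotation_period_days / n for n in range(1, count + 1)]

def near_harmonic(candidate_days: float, rotation_period_days: float,
                  count: int = 4, tolerance: float = 0.05):
    """Return the harmonic number n whose period lies within `tolerance`
    (as a fraction) of the candidate period, or None if none does.
    The default count and tolerance are illustrative assumptions."""
    for n, period in enumerate(rotation_harmonics(rotation_period_days, count), start=1):
        if abs(candidate_days - period) / period <= tolerance:
            return n
    return None

# Hypothetical star rotating every 120 days; two hypothetical candidate signals.
for candidate in (61.0, 50.0):
    print(candidate, near_harmonic(candidate, 120.0))
```

With the hypothetical 120-day rotation period, a 61-day candidate lines up with the second harmonic (60 days) and would deserve scrutiny, while a 50-day candidate matches none of the first four harmonics.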

Blue indicates detections of candidate planets in the just-right region inside or near the habitable zone, where liquid water could exist. Orange indicates detections in the too-hot region that is too close to the star. Green indicates detections in the too-cold region farther away from the star and outside the habitable zone.

The size of each planet in this figure corresponds to its minimum mass. Some simplifications have been made for illustrative purposes. The refereed literature provides a complete history of the scientific publications relevant to this star and its planets.

The background image is a composite photo of our sun taken by Alan Friedman. The left side of the sun is seen through a filter that allows the camera to see wavelengths of light only in the deep-blue range, while the right side is seen through a filter that blocks all wavelengths except those in the red range. While the blue region is traditionally used to detect a star’s activity, this study used the red region of the light spectrum.


Baby boom in the Southwestern United States from 500 to 1300 A.D.

July 4th, 2014

By Alton Parrish.

Scientists have sketched out one of the greatest baby booms in North American history, a centuries-long “growth blip” among southwestern Native Americans between 500 and 1300 A.D.

It was a time when the early features of civilization–including farming and food storage–had matured to a level where birth rates likely “exceeded the highest in the world today,” the researchers report in this week’s issue of the journal Proceedings of the National Academy of Sciences.

Reconstruction of life on a Hohokam platform mound in the Sonoran Desert in the 13th century A.D.

Credit: Pueblo Grande Museum, City of Phoenix

Then a crash followed, says Tim Kohler, an anthropologist at Washington State University (WSU), offering a warning sign to the modern world about the dangers of overpopulation.

“We can learn lessons from these people,” says Kohler, who co-authored the paper with WSU researcher Kelsey Reese.

The study looks at a century’s worth of data on thousands of human remains found at hundreds of sites across the Four Corners region of the Southwest.

“This research reconstructed the complexity of human population birth rate change and demographic variability linked with the introduction of agriculture in the Southwest U.S.,” says Alan Tessier, acting deputy division director in the National Science Foundation’s (NSF) Directorate for Biological Sciences, which supported the research through NSF’s Dynamics of Coupled Natural and Human Systems (CNH) Program.

“It illustrates the coupling and feedbacks between human societies and their environment.”

CNH is also co-funded by NSF’s Directorates for Geosciences and Social, Behavioral & Economic Sciences.

While many of the remains studied have been repatriated, the data let Kohler assemble a detailed chronology of the region’s Neolithic Demographic Transition, in which stone tools reflect an agricultural transition from cutting meat to pounding grain.

“It’s the first step toward all the trappings of civilization that we currently see,” says Kohler.

Maize, which we know as corn, was grown in the region as early as 2000 B.C.

At first, populations were slow to respond, probably because of low productivity, says Kohler. But by 400 B.C., he says, the crop provided 80 percent of the region’s calories.

Sites like Pueblo Bonito in northern New Mexico reached their maximum size in the early 1100s A.D.

Credit: Nate Crabtree Photography


Crude birth rates–the number of newborns per 1,000 people per year–were by then on the rise, mounting steadily until about 500 A.D.
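Because the crude birth rate is a simple ratio, it can be written out directly. The study estimated past rates from human remains rather than from counted births, using methods the article does not describe; the population and birth counts below are hypothetical.

```python
def crude_birth_rate(births_per_year: float, population: float) -> float:
    """Crude birth rate: live births per 1,000 people per year."""
    if population <= 0:
        raise ValueError("population must be positive")
    return births_per_year * 1000 / population

# Hypothetical village of 2,500 people with 125 births in one year.
print(crude_birth_rate(125, 2500))  # 50.0
```

The measure is called “crude” because it divides by the whole population rather than by the number of women of childbearing age.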

The growth varied across the region.

People in the Sonoran Desert and Tonto Basin, in what is today Arizona, were more culturally advanced, with irrigation, ball courts, and eventually elevated platform mounds and compounds housing elite families.

Yet birth rates were higher among people to the North and East, in the San Juan Basin and northern San Juan regions of Northwest New Mexico and Southwest Colorado.

Kohler said that the Sonoran and Tonto people eventually would have had difficulty finding new farming opportunities for many children, since corn farming required irrigation. Water from canals also may have carried harmful protozoa, bacteria and viruses.

But groups to the Northeast would have been able to expand maize production into new areas as their populations grew.

Around 900 A.D., populations remained high but birth rates began to fluctuate.

The mid-1100s saw one of the largest known droughts in the Southwest. The region likely hit its carrying capacity.

Pottery became common across the Southwest around A.D. 600; many vessels stored corn.

Credit: Bureau of Land Management/Anasazi Heritage Center Collections/Mark Montgomery


From the mid-1000s to 1280, by which time all the farmers had left, conflicts raged across the northern Southwest but birth rates remained high.

“They didn’t slow down,” says Kohler. “Birth rates were expanding right up to the depopulation. Why not limit growth? Maybe groups needed to be big to protect their villages and fields.

“It was a trap, however.”

The northern Southwest had as many as 40,000 people in the mid-1200s, but within 30 years it was empty, leaving a mystery.

Ears of corn from a “Basketmaker II period” cache in Colorado, dating to the third century B.C.

Credit: Karen Adams, Crow Canyon Archaeological Center

Perhaps the population had grown too large to feed itself as the climate deteriorated. Then as people began to leave, that may have made it harder to maintain the social unity needed for defense and new infrastructure, says Kohler.

Whatever the reason, he says, the ancient Puebloans show that population growth has clear consequences.


Bizarre Parasite Discovered

June 28th, 2014

By University of Bonn.

Researchers from the University of Bonn and from China have discovered a fossil fly larva with a spectacular sucking apparatus.

Around 165 million years ago, a spectacular parasite lived in the freshwater lakes of present-day Inner Mongolia (China): a fly larva whose thorax was formed entirely into a sucking plate. With it, the animal could adhere to salamanders and suck their blood with its sting-like mouthparts. No other insect known to date has a similarly specialised design. The international scientific team is now presenting its findings in the journal “eLife”.

This reconstruction shows how scientists think the fly larvae adhered to the skin of the amphibian.

Credit: Graphic: Yang Dinghua, Nanjing

The parasite, an elongate fly larva around two centimeters long, had undergone extreme changes over the course of evolution: The head is tiny in comparison to the body, tube-shaped with piercer-like mouthparts at the front. The mid-body (thorax) has been completely transformed underneath into a gigantic sucking plate; the hind-body (abdomen) has caterpillar-like legs.


The international research team believes that this unusual animal was a parasite that lived around 165 million years ago in a landscape of volcanoes and lakes in what is now northeastern China. In this freshwater habitat, the parasite crawled onto passing salamanders, attached itself with its sucking plate, and pierced the amphibians’ thin skin to suck their blood.

The Fossil: thanks to the fine-grained mudstone, the details of the two centimeter long parasite Qiyia jurassica are exceptionally preserved.

Credit: Photo: Bo Wang, Nanjing

“The parasite lived the life of Reilly”, says Prof. Jes Rust from the Steinmann Institute for Geology, Mineralogy and Palaeontology of the University of Bonn. This is because there were many salamanders in the lakes, as fossil finds at the same location near Ningcheng in Inner Mongolia (China) have shown.

“Scientists there had also found around 300,000 diverse and exceptionally preserved fossil insects”, reports Chinese scientist Dr. Bo Wang, who is conducting palaeontology research at the University of Bonn as a postdoc sponsored by the Alexander von Humboldt Foundation. The spectacular fly larva, which has received the scientific name “Qiyia jurassica”, was, however, quite an unexpected find. “Qiyia” means “bizarre” in Chinese; “jurassica” refers to the Jurassic period to which the fossils belong.

A fine-grained mudstone ensured the good state of preservation of the fossil

For the international team of scientists from the University of Bonn, Linyi University (China), the Nanjing Institute of Geology and Palaeontology (China), the University of Kansas (USA) and the Natural History Museum in London (England), the insect larva is a spectacular find: “No insect exists today with a comparable body shape”, says Dr. Bo Wang. That the bizarre larva from the Jurassic has remained so well preserved to the present day is partly due to the fine-grained mudstone in which the animals were embedded.

“The finer the sediment, the better the details are reproduced in the fossils”, explains Dr Torsten Wappler of the Steinmann-Institut of the University of Bonn. The conditions in the groundwater also prevented decomposition by bacteria.

Unusual parasite: The head of Qiyia jurassica is tiny in comparison to the body, with tube-shaped, piercer-like mouthparts at the front. The underside of the thorax, to which the abdomen with its caterpillar-like legs is attached, has been transformed into a sucking plate.

Credit: Graphic: Yang Dinghua, Nanjing

Astonishingly, no fossil fish are found in the freshwater lakes of this Jurassic epoch in China. “On the other hand, there are almost unlimited finds of fossilised salamanders, which were found by the thousand”, says Dr Bo Wang. This unusual ecology could explain why the bizarre parasites survived in the lakes: fish are predators of fly larvae and usually hold them in check. “The extreme adaptations in the design of Qiyia jurassica show the extent to which organisms can specialise in the course of evolution”, says Prof. Rust.

As unpleasant as the parasites were for the salamanders, they were not what killed them. “A parasite only sometimes kills its host when it has achieved its goal, for example, reproduction or feeding”, Dr. Wappler explains. Had Qiyia jurassica passed through the larval stage, it would have grown into an adult insect after completing metamorphosis. The scientists don’t yet have enough information to speculate about what the adult would have looked like or how it might have lived.

Comments Off on Bizarre Parasite Discovered

Killer Television: Too Much TV May Increase Risk Of Early Death In Adults

June 28th, 2014

By University of Navarra.

Adults who watch TV for three hours or more each day may double their risk of premature death compared to those who watch less, according to new research published in the Journal of the American Heart Association.

“Television viewing is a major sedentary behavior and there is an increasing trend toward all types of sedentary behaviors,” said Miguel Martinez-Gonzalez, M.D., Ph.D., M.P.H., the study’s lead author and professor and chair of the Department of Public Health at the University of Navarra in Pamplona, Spain. “Our findings are consistent with a range of previous studies where time spent watching television was linked to mortality.”

Credit: www.healthcentral.com

Researchers assessed 13,284 young and healthy Spanish university graduates (average age 37, 60 percent women) to determine the association between risk of death from all causes and three types of sedentary behavior: television viewing time, computer time and driving time. The participants were followed for a median of 8.2 years. Researchers reported 97 deaths: 19 from cardiovascular causes, 46 from cancer and 32 from other causes.

The risk of death was twofold higher for participants who reported watching three or more hours of TV a day than for those watching one hour or less. This twofold higher risk remained after accounting for a wide array of other variables related to a higher risk of death.

Researchers found no significant association between the time spent using a computer or driving and a higher risk of premature death from all causes. They said further studies are needed to confirm what effects computer use and driving may have on death rates, and to determine the biological mechanisms behind these associations.

“As the population ages, sedentary behaviors will become more prevalent, especially watching television, and this poses an additional burden on the increased health problems related to aging,” Martinez-Gonzalez said. “Our findings suggest adults may consider increasing their physical activity, avoid long sedentary periods, and reduce television watching to no longer than one to two hours each day.”

The study cited previous research that suggests that half of U.S. adults are leading sedentary lives.

The American Heart Association recommends at least 150 minutes of moderate-intensity aerobic activity, or at least 75 minutes of vigorous aerobic activity each week. You should also do moderate- to high-intensity muscle strengthening at least two days a week.

Co-authors are Francisco Basterra Gortari, M.D., Ph.D.; Maira Bes Rastrollo, Pharm.D., Ph.D.; Alfredo Gea, Pharm.D.; Jorge Nunez Cordoba, M.D., Ph.D.; and Estefania Toledo, M.D., Ph.D. All belong to CIBER-OBN, a research network funded by the Instituto de Salud Carlos III (the official Spanish agency for funding biomedical research).

Did the Khazars Convert To Judaism? New Research Says ‘No’

June 28th, 2014

By Alton Parrish.

Did the Khazars convert to Judaism? The view that some or all Khazars, a central Asian people, became Jews during the ninth or tenth century is widely accepted. But following an exhaustive analysis of the evidence, Hebrew University of Jerusalem researcher Prof. Shaul Stampfer has concluded that such a conversion, “while a splendid story,” never took place.

Prof. Shaul Stampfer is the Rabbi Edward Sandrow Professor of Soviet and East European Jewry in the department of the History of the Jewish People at the Hebrew University’s Mandel Institute of Jewish Studies. The research has just been published in the journal Jewish Social Studies, Vol. 19, No. 3 (online at http://bit.ly/khazars).

After an exhaustive analysis, the Hebrew University’s Prof. Shaul Stampfer concluded that the Khazar conversion to Judaism is a legend with no factual basis.

Photo courtesy Prof. Stampfer

From roughly the seventh to the tenth centuries, the Khazars ruled an empire spanning the steppes between the Caspian and Black Seas. Not much is known about Khazar culture and society: they did not leave a literary heritage, and archaeological finds have been meager. The Khazar Empire was overrun by Svyatoslav of Kiev around the year 969, and little was heard from the Khazars afterward. Yet a widely held belief persists that the Khazars or their leaders at some point converted to Judaism.

Reports about the Jewishness of the Khazars first appeared in Muslim works in the late ninth century and in two Hebrew accounts in the tenth century. The story reached a wider audience when the Jewish thinker and poet Yehudah Halevi used it as a frame for his book The Kuzari. Little attention was given to the issue in subsequent centuries, but a key collection of Hebrew sources on the Khazars appeared in 1932, followed by a little-known six-volume history of the Khazars written by the Ukrainian scholar Ahatanhel Krymskyi.

Henri Gregoire published skeptical critiques of the sources, but in 1954 Douglas Morton Dunlop brought the topic into the mainstream of accepted historical scholarship with The History of the Jewish Khazars. Arthur Koestler’s best-selling The Thirteenth Tribe (1976) brought the tale to the attention of wider Western audiences, arguing that East European Ashkenazi Jewry was largely of Khazar origin.

Many studies have followed, and the story has also garnered considerable non-academic attention; for example, Shlomo Sand’s 2009 bestseller, The Invention of the Jewish People, advanced the thesis that the Khazars became Jews and much of East European Jewry was descended from the Khazars. But despite all the interest, there was no systematic critique of the evidence for the conversion claim other than a stimulating but very brief and limited paper by Moshe Gil of Tel Aviv University.

Stampfer notes that scholars who have contributed to the subject based their arguments on a limited corpus of textual and numismatic evidence. Physical evidence is lacking: archaeologists excavating in Khazar lands have found almost no artifacts or grave stones displaying distinctly Jewish symbols. He also reviews various key pieces of evidence that have been cited in relation to the conversion story, including historical and geographical accounts, as well as documentary evidence.

Among the key sources are an apparent exchange of letters between the Spanish Jewish leader Hasdai ibn Shaprut and Joseph, king of the Khazars; an apparent historical account of the Khazars, often called the Cambridge Document or the Schechter Document; various descriptions by historians writing in Arabic; and many others.

Taken together, Stampfer says, these sources offer a cacophony of distortions, contradictions, vested interests, and anomalies in some areas, and nothing but silence in others. A careful examination of the sources shows that some are falsely attributed to their alleged authors, and others are of questionable reliability and not convincing.

Many of the most reliable contemporary texts say nothing about a conversion. These include the detailed report of Sallam the Interpreter, whom Caliph al-Wathiq sent in 842 to search for the mythical wall of Alexander, and a letter mentioning the Khazars that Nicholas, patriarch of Constantinople, wrote around 914.

Citing the lack of any reliable source for the conversion story, and the lack of credible explanations for sources that suggest otherwise or are inexplicably silent, Stampfer concludes that the simplest and most convincing answer is that the Khazar conversion is a legend with no factual basis. There never was a conversion of a Khazar king or of the Khazar elite, he says.

Years of research went into this paper, and Stampfer ruefully noted that “Most of my research until now has been to discover and clarify what happened in the past. I had no idea how difficult and challenging it would be to prove that something did not happen.”

In terms of its historical implications, Stampfer says the lack of a credible basis for the conversion story means that many pages of Jewish, Russian and Khazar history have to be rewritten. If there never was a conversion, issues such as Jewish influence on early Russia and ethnic contact must be reconsidered.

Stampfer describes the persistence of the Khazar conversion legend as a fascinating application of Thomas Kuhn’s thesis on scientific revolution to historical research. Kuhn points out the reluctance of researchers to abandon familiar paradigms even in the face of anomalies, instead coming up with explanations that, though contrived, do not require abandoning familiar thought structures. It is only when “too many” anomalies accumulate that it is possible to develop a totally different paradigm—such as a claim that the Khazar conversion never took place.

Stampfer concludes, “We must admit that sober studies by historians do not always make for great reading, and that the story of a Khazar king who became a pious and believing Jew was a splendid story.” However, in his opinion, “There are many reasons why it is useful and necessary to distinguish between fact and fiction – and this is one more such case.”

5G revolution to connect 8 billion people and 7 trillion objects!

June 21st, 2014

By Alton Parrish.

We are witnessing an exponential increase in wireless traffic demand due to the worldwide proliferation of mobile Internet applications. It is forecast that by 2020 the network infrastructure will be capable of connecting trillions of devices, meeting a wide variety of application-specific requirements in a flexible and truly mobile way.

One key to addressing this technological challenge is effective communication between the different parties involved in the networking field. As part of its leading role in the concerted European effort on 5G research, IMDEA Networks aims to facilitate this networking process through various collaborative actions. Among its recent success stories is the creation of a Joint Research Unit (JRU) in 5G technologies with Telefónica. In addition, Research Professor Joerg Widmer, recipient of a prestigious ERC Consolidator Grant, has just launched the SEARCHLIGHT project to investigate millimeter-wave (mmWave) communication, one of the key 5G technologies.

The latest scientific networking initiative by the Madrid-based institute has been its Sixth Annual International Workshop, which this year explored the 5G network revolution. On June 11th, members of the Institute’s Scientific Council, industry representatives and prominent researchers met to address the explosion of mobile data in our hyperconnected society.

54% of teens involved in child porn?

June 21st, 2014

By Drexel University.

A new study from Drexel University found that the majority of young people are not aware of the legal ramifications of underage sexting.

Sexting among youth is more prevalent than previously thought, according to a new study from Drexel University that was based on a survey of undergraduate students at a large northeastern university. More than 50 percent of those surveyed reported that they had exchanged sexually explicit text messages, with or without photographic images, as minors.

The study also found that the majority of young people are not aware of the legal ramifications of underage sexting. In fact, most respondents were unaware that many jurisdictions consider sexting among minors, particularly when it involves harassment or other aggravating factors, to be child pornography, a prosecutable offense. Convictions for these offenses carry steep punishments, including jail time and sex offender registration.