Posts by AltonParrish:

Is Antimatter Anti-Gravity?

May 1st, 2013 By Alton Parrish.

Antimatter is strange stuff. It has the opposite electrical charge to normal matter and, when it meets its matter counterpart, the two annihilate in a flash of light.

Credit: Image by Chukman So, UC Berkeley.

“Almost everyone, including the physicists, thinks that antimatter will likely fall at the same rate as normal matter, but no one has ever dropped antimatter to see if this is true,” said Joel Fajans, UC Berkeley professor of physics. And while there are many indirect indications that matter and antimatter weigh the same, they all rely on assumptions that might not be correct. A few theorists have argued that some cosmological conundrums, such as why there is more matter than antimatter in the universe, could be explained if antimatter did fall upward.

In a new paper published online on April 30 in Nature Communications, the UC Berkeley physicists and their colleagues with the ALPHA experiment at CERN, the European Organization for Nuclear Research in Geneva, Switzerland, report the first direct measurement of gravity’s effect on antimatter, specifically antihydrogen in free fall. Though far from definitive – the uncertainty is about 100 times the expected measurement – the UC Berkeley experiment points the way toward a definitive answer to the fundamental question of whether antimatter falls up or down.

“This is the first word, not the last,” Fajans said. “We’ve taken the first steps toward a direct experimental test of questions physicists and nonphysicists have been wondering about for more than 50 years. We certainly expect antimatter to fall down, but just maybe we will be surprised.”

Fajans and fellow physics professor Jonathan Wurtele employed data from the Antihydrogen Laser Physics Apparatus (ALPHA) at CERN. The experiment captures antiprotons and combines them with antielectrons (positrons) to make antihydrogen atoms, which are stored and studied for a few seconds in a magnetic trap. Afterward, the trap is turned off and the atoms fall out. The two researchers realized that by analyzing how antihydrogen fell out of the trap, they could determine whether gravity pulled on antihydrogen differently than on hydrogen.

Antihydrogen did not behave anomalously, so they calculated that it cannot be more than 110 times heavier than hydrogen. If antimatter is anti-gravity – and they cannot rule it out – it accelerates upward at no more than 65 g.
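The two bounds can be written compactly. In the sketch below, F is our own shorthand for the ratio of antihydrogen's gravitational mass M_g to its inertial mass M, with the vertical axis z pointing up. F = 1 means antihydrogen falls exactly like hydrogen, and F = -1 means it falls upward at 1 g; both values lie inside the allowed window, which is why the measurement cannot yet settle the question.

```latex
\begin{aligned}
F &\equiv \frac{M_g}{M}, \qquad a_z = -F\,g \quad (z \text{ pointing up}),\\
-65 &< F < 110 .
\end{aligned}
```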

“We need to do better, and we hope to do so in the next few years,” Wurtele said. ALPHA is being upgraded and should provide more precise data once the experiment reopens in 2014.

The paper was coauthored by other members of the ALPHA team, including UC Berkeley postdoctoral fellow Andre Zhmoginov and lecturer Andrew Charman.

Combating H7N9: Using Lessons Learned from APEIR’s Studies on H5N1

April 23rd, 2013 By Alton Parrish.

The recent human cases of H7N9 avian influenza demonstrate the importance of adopting the lessons learned from H5N1 avian influenza. Studies on this disease recently completed by researchers from the Asia Partnership on Emerging Infectious Diseases Research (APEIR) developed a series of messages for policy makers that are highly relevant to the current outbreak.

Professor Liu Wenjun of the Chinese Academy of Sciences Institute of Microbiology said: “With H7N9 we are already seeing marked falls in demand for poultry and this can have a major effect on the livelihoods of the rural poor who depend on the sale of chickens for a significant part of their disposable income. While it was necessary to close infected markets to protect public health, the flow-on effects for producers and others associated with the poultry industry are massive and there will be a need to look for alternative means of support for these producers.”

The economic studies recommended that support from the government is needed to build slaughtering facilities and freezers to help adjust to market price fluctuation. “At present many farmers cannot sell their poultry and ways need to be found to support these farmers when market shocks occur.”

“In areas affected by H7N9 influenza, which already covers Jiangsu, Zhejiang and Anhui provinces and beyond, support for taking up alternative jobs should be considered for households rearing poultry so that households can make up for their losses from raising poultry and maintain their living standards. The studies on H5N1 found that despite shifts in government policies towards support for large scale industrial poultry production, small scale production still needs support as it is a major source of income for women and the rural poor.”

The team conducting studies on the effectiveness of control measures against H5N1 found that there were significant deficiencies in biosecurity practices in most of the farms studied, especially, but not only, small scale farms. The measures in place on these farms would not be sufficient to prevent an H7N9 influenza virus from gaining entry to farms and infecting poultry. This means that, for areas where this virus is not yet present, farm biosecurity measures need to be strengthened, as recommended also by FAO, but the measures proposed and adopted have to be affordable and in line with existing production systems.

The various studies also found that, with H5N1 control, wide-area culling, in which all poultry in a large zone around known infected flocks are culled, had very severe effects on livelihoods because of the level of disruption and hardship it caused producers and the rural poor. There was also no evidence to suggest that it was more effective than limited culling coupled with surveillance to detect other infected flocks. Compensation provides partial coverage of the losses but does not cover the loss of business or the loans farmers have taken out if they are not allowed to recommence business for an extended period of time.

Studies on wild birds conducted as part of APEIR demonstrated the importance of undertaking surveillance in wild birds to characterise the influenza viruses carried by these birds. The genetic information obtained so far on the H7N9 virus suggests that the H and N components of this virus were probably derived from wild birds, and also possibly from poultry. It is also evident from the genetic studies that the surveillance systems in place have not detected close relatives of the original host of these viruses and need to be strengthened. The studies conducted by APEIR did find some additional influenza virus subtypes other than H5N1 viruses, and this information helps in understanding the transmission of other influenza viruses by wild birds. Although no H7N9 viruses were detected, the viruses found were fully characterised and gene sequences uploaded to gene databases, adding to the pool of data available for comparison by scientists trying to unravel the origin of novel viruses.

APEIR recommended that all gene sequences of influenza viruses should be shared as soon as they are available and this has been done by Chinese scientists for H7N9 viruses. APEIR researchers, including Professor Lei Fumin of the Institute of Zoology of the Chinese Academy of Sciences, are currently investigating the possible role of wild birds in transmission of H7N9 avian influenza. Professor Lei Fumin said, “We have already seen suggestions that this virus could be transmitted widely among migratory birds and poultry, and it is important to assess the likelihood of this through scientific studies on wild birds as they fly north through China to their summer breeding grounds.”

Policy makers in China may again be faced with a decision on whether or not to use vaccination to contain this disease so as to reduce the likelihood of exposure of humans to the H7N9 virus. APEIR studies on policy development showed the importance of having sound evidence on the merits and pitfalls of vaccination so that these can be weighed up scientifically without outside interference. Although there is no evidence so far that this virus will result in a human pandemic, this outbreak provides a reminder of the importance for all countries to ensure they have an appropriate stockpile of antiviral medication.

This study on avian influenza policies also found that agriculture sectoral policy should be coherent with public health sectoral policy and should aim to reduce the risk of emergence of human pandemic agents.

Dr. Pongpisut Jongudomsuk, Director of the Health Systems Research Institute, Thailand and Chair of the APEIR Steering Committee, said: “APEIR is a unique Asian trust-based EIDs research network composed of over 30 partner institutions from six countries (Cambodia, China, Indonesia, Lao PDR, Thailand and Vietnam). We have established partnerships and networks on the global, regional and country levels.”

“Much has been learned from studies conducted by APEIR researchers and we have an opportunity now to adopt the lessons so as to minimise effects on livelihoods and to prevent the disease caused by H7N9 avian influenza. APEIR is poised to play an important role in investigating and combating H7N9.”

For more information about APEIR and the five avian influenza projects, please contact the APEIR Coordinating Office or visit the APEIR website.

Seamy Underbelly Of Credit Card Theft Reveals Surprisingly Sophisticated Network Of Crooks

April 23rd, 2013 By Andy Henion.

Credit: University of Arizona

The thieves, Thomas Holt found, run an online marketplace for stolen credit data similar to eBay or Amazon where reputations drive sales. Thieves sell data and money laundering services, advertised via web forums, and send and receive payments electronically or through an intermediary. They even provide feedback on transactions to help weed out sellers who cannot be trusted to deliver the illegal goods.

Thomas Holt, associate professor in the School of Criminal Justice, uncovered a surprisingly sophisticated network of online crooks who steal credit card information.

“These aren’t just 15-year-olds stealing credit card info online and using it to buy pornography,” said Holt, associate professor of criminal justice. “These are thieves who come to trust one another. There’s a layer of sophistication here that can’t be understated, that’s very different than what we think about with other forms of crime.”

First, credit card information is stolen from an individual or group. Tactics include hacking into the database of a bank, retailer or other service provider; sending emails to consumers that masquerade as a bank in order to acquire sensitive details such as usernames and passwords (called phishing); and skimming. Examples of skimming include a hard-to-spot device attached to an ATM and a crooked waiter who wears an electronic belt that can capture a card’s details.

The thief then advertises his haul in an online forum, with details such as card type, country of origin and asking price. Holt said a Visa Classic card, for example, might go for $5 to $20 per card, with a price discount for buying large amounts of data.

The winning buyer finalizes the deal online and sends the money through an electronic payment service. If the seller isn’t known or trusted, a middleman, called a guarantor, is used to assure the data is good before payment is sent – minus a fee.
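The guarantor arrangement is the familiar escrow pattern: the buyer pays a trusted third party, who forwards the money only after the goods check out. The sketch below models that flow as a small state machine. The class and state names and the 5 % fee in the usage note are hypothetical, and charging no fee on a refund is our assumption, since the article only says the fee is deducted before payment is sent.

```python
from dataclasses import dataclass
from enum import Enum, auto


class EscrowState(Enum):
    AWAITING_PAYMENT = auto()
    AWAITING_CHECK = auto()
    RELEASED = auto()
    REFUNDED = auto()


@dataclass
class Escrow:
    """Hypothetical guarantor holding a buyer's payment until the goods are checked."""
    price: float
    fee_rate: float  # guarantor's commission as a fraction (assumed, e.g. 0.05)
    state: EscrowState = EscrowState.AWAITING_PAYMENT
    held: float = 0.0

    def deposit(self, amount: float) -> None:
        # The buyer pays the guarantor, never the seller directly.
        if self.state is not EscrowState.AWAITING_PAYMENT:
            raise RuntimeError(f"unexpected deposit in state {self.state.name}")
        if amount < self.price:
            raise ValueError("payment is less than the agreed price")
        self.held = amount
        self.state = EscrowState.AWAITING_CHECK

    def settle(self, goods_ok: bool) -> tuple[float, float, float]:
        """Return (paid_to_seller, refunded_to_buyer, fee_kept) after the check."""
        if self.state is not EscrowState.AWAITING_CHECK:
            raise RuntimeError(f"cannot settle in state {self.state.name}")
        if goods_ok:
            # Goods verified: forward the payment minus the guarantor's fee.
            fee = round(self.held * self.fee_rate, 2)
            self.state = EscrowState.RELEASED
            return (self.held - fee, 0.0, fee)
        # Goods failed the check: refund in full (assumption: no fee on refunds).
        self.state = EscrowState.REFUNDED
        return (0.0, self.held, 0.0)
```

For example, `Escrow(price=100.0, fee_rate=0.05)` followed by `deposit(100.0)` and `settle(True)` pays the seller 95.0 and leaves the guarantor 5.0.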

For the buyers, there is any number of illicit service providers to then help them make purchases in a way that doesn’t raise suspicion or to pull money directly from the accounts – minus a fee.

All of this is done in a rather democratic fashion – unlike, say, the hierarchical structure of the mafia, said Holt, who monitored two English-language and two Russian-language forums for the study.

Some policymakers have called for flooding the online forums with bogus comments in an attempt to sow mistrust and bring the forums down. But Holt said this strategy won’t necessarily work against organized forums, such as the ones he sampled, whose managers can monitor and remove comments.

A better strategy, he said, might be for law enforcement authorities to infiltrate the sophisticated networks with a long-term undercover operation. It’s a challenge, but one that might be more effective than other strategies called for by researchers.

Signs Of Dark Matter Detected

April 16th, 2013 By Alton Parrish.

The experiment, called the Alpha Magnetic Spectrometer, or AMS, was mounted on the side of the orbiting International Space Station (ISS) in May 2011, when it was delivered there by a space shuttle crew that included astronaut Michael Fincke ’89. Fincke has logged more time in space than any other active astronaut, having spent more than a year in space, including more than 50 hours on spacewalks.

Astronaut Michael Fincke ’89 captured this image of his own reflection during a spacewalk. The AMS, already installed on the International Space Station, is just behind him at top left.

So far, the magnetic detector has recorded more than 30 billion “events” — impacts from cosmic rays. Of those, 6.8 billion have been identified as impacts from electrons or their antimatter counterpart, positrons — identified through comparisons of their numbers, energies and directions of origin.

AMS’s most eagerly anticipated findings — observations that would either confirm or disprove the existence of theoretical particles that might be a component of dark matter — have yet to be made, but Ting expressed confidence that an answer to that question will be obtained once more data is collected. The experiment is designed to keep going for at least 10 years.

In the meantime, the results so far — showing more positrons than expected — already demonstrate that new physical phenomena are being observed, Ting and Kounine said. What’s not yet clear is whether this is proof of dark matter in the form of exotic particles called neutralinos, which have been theorized but never observed, or whether it can instead be explained by emissions from distant pulsars.

Kounine explained that in addition to its primary focus on identifying signs of dark matter, AMS is also capable of detecting a wide variety of phenomena involving particles in space. For example, he said, “it can identify all the species of ions that exist in space” — particles whose abundance may help to refine theories about the origins and interactions of matter in the universe. “It has great potential to produce a lot more physics results,” Kounine said.

An answer to whether the observed particles are being produced by collisions of dark matter will come from graphing the numbers of electrons and positrons against the energy of those particles. If the number of particles declines gradually toward higher energies, that would indicate their source is probably pulsars. But if it declines abruptly, that would be clear evidence of dark matter.
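The difference between a gradual and an abrupt decline can be made concrete by looking at the local slope of the spectrum on log-log axes. The toy heuristic below is not the AMS collaboration's analysis, which fits measured positron data with full uncertainties; the function names, the factor-of-3 threshold and any spectra passed in are assumptions for illustration only. Counts must be positive.

```python
import math
from statistics import median


def log_slopes(energies, counts):
    """Local spectral index between neighbouring bins: d ln N / d ln E."""
    pairs = list(zip(energies, counts))
    return [
        (math.log(n2) - math.log(n1)) / (math.log(e2) - math.log(e1))
        for (e1, n1), (e2, n2) in zip(pairs, pairs[1:])
    ]


def classify_falloff(energies, counts, jump=3.0):
    """Call the falloff 'abrupt' if the steepest local slope is more than `jump`
    times the median slope (hypothetical threshold), otherwise 'gradual'."""
    slopes = log_slopes(energies, counts)
    steepest = min(slopes)      # most negative slope = fastest decline
    typical = median(slopes)
    return "abrupt" if abs(steepest) > jump * abs(typical) else "gradual"
```

A pure power law such as N proportional to E^-2 has the same slope everywhere and is labelled gradual, while a power law followed by a sharp cutoff is labelled abrupt.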

“Clearly, these observations point to the existence of a new physical phenomenon,” Kounine said. “But we can’t tell [yet] whether it’s from a particle origin, or astrophysical.”

Fincke, one of two astronauts who actually attached the AMS to the exterior of the ISS, said he was honored to have had the opportunity to deliver such an important payload. He was joined on the mission — the last flight of space shuttle Endeavour, and the second-to-last of NASA’s entire shuttle program — by another MIT alum, Greg Chamitoff PhD ’92.

Before the mission, the Endeavour astronauts visited CERN in Switzerland, where AMS’s mission control center is located, to learn about the precious payload they would be installing, Fincke said. “That got our crew to be extremely motivated to ensure success,” he said.

The device itself, Fincke explained, “was built to have as little interaction with astronauts as possible”: Once bolted into place, it requires no further attention. And while it was designed to withstand inadvertent impacts, he said that he and his fellow astronauts were careful to give it a wide berth. “We didn’t want to even get close,” he said.

Ancient Bronze Warship Ram Reveals Secrets

April 16th, 2013 By Alton Parrish.

Dr Flemming said: “Casting a large alloy object weighing more than 20kg is not easy. To find out how it was done we needed specialists who could analyse the mix of metals in the alloys; experts who could study the internal crystal structure and the distribution of gas bubbles; and scholars who could examine the classical literature and other known examples of bronze castings.

“Although the Belgammel Ram was probably the first one ever found, other rams have since been found off the coast of Israel and off western Sicily. We have built a body of expertise and techniques that will help with future studies of these objects and improve the accuracy of past analysis.”

Dr Chris Hunt and Annita Antoniadou of Queen’s University Belfast used radiocarbon dating of burnt wood found inside the ram to date it to between 100 BC and 100 AD. This date is consistent with the decorative style of the tridents and bird motif on the top of the ram, which were revealed in detail by laser-scanned images taken by archaeologist Dr Jon Adams of the University of Southampton.
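The article does not give the measured carbon-14 values, so the sketch below shows only the standard first step of radiocarbon dating: converting a measured fraction of modern carbon into a conventional radiocarbon age using the Libby mean life of 8,033 years. Turning that age into a calendar range such as 100 BC to 100 AD requires a calibration curve (for example IntCal), which is not shown. The function name is hypothetical.

```python
import math

# Libby half-life (5,568 yr) divided by ln 2; conventional ages use this by definition.
LIBBY_MEAN_LIFE_YEARS = 8033.0


def conventional_radiocarbon_age(fraction_modern: float) -> float:
    """Uncalibrated radiocarbon age in years BP (before AD 1950).

    fraction_modern is the sample's carbon-14 content relative to the modern
    standard, after the usual isotopic-fractionation correction. Values above 1
    (post-1950 carbon) are rejected because they do not apply to ancient wood.
    """
    if not 0.0 < fraction_modern <= 1.0:
        raise ValueError("fraction_modern must be in (0, 1]")
    return -LIBBY_MEAN_LIFE_YEARS * math.log(fraction_modern)
```

A sample retaining half the modern carbon-14 comes out at about 5,568 years BP, one Libby half-life.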

It is possible that during its early history the bronze would have been remelted and mixed with other bronze on one or more occasions, perhaps when a warship was repaired or maybe captured.

The X-ray team produced a 3-D image of the ram’s internal structure using a machine capable of generating X-rays of 10 MeV to shine through 15 cm of solid bronze. By rotating the ram on a turntable and making 360 images they created a complete 3-D replica of the ram similar to a medical CT scan. An animation of the X-rays has been put together by Dr Richard Boardman of m-VIS (mu-VIS), a dedicated centre for computed tomography (CT) at the University of Southampton.
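Each of the 360 turntable images records how strongly the bronze attenuated the beam along every ray, and CT reconstruction works from the logarithm of that attenuation. The sketch below assumes a single uniform attenuation coefficient per ray; the function names are hypothetical, and any coefficient passed in is a placeholder rather than a measured value for this bronze at 10 MeV.

```python
import math


def projection_angles(n_images: int = 360) -> list[float]:
    """Turntable angles in degrees; 360 images gives one image per degree."""
    step = 360.0 / n_images
    return [i * step for i in range(n_images)]


def transmitted_fraction(mu_per_cm: float, thickness_cm: float) -> float:
    """Beer-Lambert law for a uniform material: I / I0 = exp(-mu * x)."""
    return math.exp(-mu_per_cm * thickness_cm)


def ray_sum(i_over_i0: float) -> float:
    """-ln(I / I0): the line integral of attenuation that CT reconstruction inverts."""
    return -math.log(i_over_i0)
```

Reconstruction (for example filtered back-projection) combines these ray sums from all angles into a 3-D attenuation map; that step is omitted here.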

Further analysis was carried out by geochemists Professor Ian Croudace, Dr Rex Taylor and Dr Richard Pearce at the University of Southampton Ocean and Earth Science (based at the National Oceanography Centre). Micro-drilled samples show that the composition of the bronze was 87 per cent copper, 6 per cent tin and 7 per cent lead. The concentrations of the different metals vary throughout the casting. Scanning electron microscopy (SEM) reveals that the lead was not dissolved with the other metals to make a composite alloy but that it had separated out into segregated intergranular blobs within the alloy as the metal cooled.

These results indicate the likelihood that the Belgammel Ram was cast in one piece and cooled as a single object. The thicker parts cooled more slowly than the thin parts so that the crystal structure and number of bubbles trapped in the metal varies from place to place.

The isotope characterisation of the lead component found in the bronze (an alloy of copper and tin) can be used as a fingerprint to reveal the origin of the lead ore used in making the metal alloy. Until now, this approach has only provided a general location in the Mediterranean, but recent advances in the analysis technique mean that the location can be identified with higher accuracy. The result shows that the lead component of the metal could have come from a district of Attica in Greece called Lavrion. Thanks to this improved technique, the method can now be applied to other ancient metal artefacts to discover where the ore was sourced.
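Lead-isotope provenancing amounts to finding which known ore field has isotope ratios closest to the sample's. The sketch below does a plain nearest-neighbour match on two ratios. The field names and every number in `ORE_FIELDS` are invented placeholders, not published values for Lavrion or any real deposit; real studies use more ratios and weight differences by measurement uncertainty. Because ore fields can overlap, a match supports "could have come from" rather than proof.

```python
import math

# Invented placeholder signatures: (208Pb/206Pb, 207Pb/206Pb).
# Not published values for Lavrion or any real ore field.
ORE_FIELDS = {
    "field_A": (2.060, 0.832),
    "field_B": (2.085, 0.845),
    "field_C": (2.100, 0.860),
}


def closest_source(sample, reference=ORE_FIELDS):
    """Return (field_name, distance) of the reference signature nearest the sample.

    Plain Euclidean distance on the ratios; real provenance work weights each
    difference by its measurement uncertainty and uses more isotope ratios.
    """
    return min(
        ((name, math.dist(sample, ratios)) for name, ratios in reference.items()),
        key=lambda pair: pair[1],
    )
```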

Micro-X-Ray fluorescence of the surface showed that corrosion by seawater had dissolved out some of the copper leaving it richer in tin and lead. It is significant that when comparing photographs from 1964 and 2008 there is no indication of change in the surface texture. This implies that the metal is stable and is not suffering from “Bronze Disease,” a corrosion process that can destroy bronze artefacts.
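Why copper loss leaves the surface "richer in tin and lead" can be checked with the bulk composition reported above (87 % copper, 6 % tin, 7 % lead): removing some copper and renormalising raises the other two percentages automatically. The 50 % copper loss in the sketch is hypothetical, and the function name is ours.

```python
def leached_surface_composition(bulk_wt_pct: dict[str, float],
                                copper_loss: float) -> dict[str, float]:
    """Renormalise weight percentages after a fraction of the copper dissolves."""
    remaining = dict(bulk_wt_pct)
    remaining["Cu"] *= 1.0 - copper_loss       # only copper is removed
    total = sum(remaining.values())
    return {metal: 100.0 * mass / total for metal, mass in remaining.items()}


BULK = {"Cu": 87.0, "Sn": 6.0, "Pb": 7.0}      # wt % reported for the micro-drilled samples
surface = leached_surface_composition(BULK, copper_loss=0.5)  # 50 % loss: hypothetical
# surface is roughly Cu 77.0, Sn 10.6, Pb 12.4
```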

The Belgammel Ram was found by a group of three British service sports divers off the coast of Libya at the mouth of a valley called Waddi Belgammel, near Tobruk. Using a rubber dinghy and rope they dragged it 25 metres to the surface. It was brought home to the UK as a souvenir but when the divers discovered that it was a rare antiquity, the ram was loaned to the Fitzwilliam Museum, Cambridge.

Ken Oliver is the only surviving member of that group of three and the effective owner. He decided in 2007 that it should be returned to a museum in Libya. With the help of the British Society for Libyan Studies this was arranged in 2010. During the intervening period Dr Nic Flemming invited experts to undertake scientific investigations prior to its return to Libya. These services were offered freely and would have cost many tens of thousands of pounds if conducted commercially. The team’s objective was to understand how such a large bronze was cast, the history and composition of the alloy, its strength, how it was used in naval warfare, and how it survived 2000 years under the sea.

Since the Belgammel Ram was discovered, other rams have been found, some off the coast of Israel near Athlit, and more recently, off western Sicily. The latter finds look to be the remains of a battle site. On the 8th April there is a one-day colloquium hosted by the Faculty of Classics, University of Oxford, to discuss the finds of the Egadi Islands Project.

Nic Flemming continued: “We have learned such a huge amount from the Belgammel Ram and have developed new techniques which will help us unpick future mysteries.

“We will never know why the Belgammel Ram was on the seabed near Tobruk. There may have been a battle in the area, or a skirmish with pirates. It could be that it was cargo from an ancient commercial vessel, about to be sold as salvage. The fragments of wood inside the ram show signs of fire, and we now know that parts of the bronze had been heated to a high temperature since it was cast, which caused the crystal structure to change. The ship may have caught fire and the ram fell into the sea as the flames licked towards it. Some things will always remain a mystery. But we are pleased that we have gleaned so many details from this study that will help future work.”

The Libyan uprising of 2011 resulted in many battles in the area around the museum. Fortunately the museum suffered no damage. The Belgammel Ram is safe.

Cellphones That Detect Asthma

April 11th, 2013 By Alton Parrish.

An interdisciplinary team of Stony Brook University researchers has been selected to receive a three-year National Science Foundation (NSF) award for the development of a personalized asthma monitor that uses nanotechnology to detect known airway inflammation biomarkers in the breath. The project, “Personalized Asthma Monitor Detecting Nitric Oxide in Breath,” comes with a $599,763 award funded through August 31, 2015.

The researchers, led by Perena Gouma, PhD, Professor in the Department of Materials Science and Engineering and Director of the Center for Nanomaterials and Sensor Development at Stony Brook, and her research collaborators, Milutin Stanacevic, PhD, Associate Professor, Department of Electrical and Computer Engineering; and Sanford Simon, PhD, Professor, Departments of Biochemistry and Cell Biology and Pathology; are developing a nanosensor-based microsystem that captures, quantifies, and displays an accurate measure of the nitric oxide concentration in a single exhaled breath.

Through the Smart Health and Wellbeing Program under which this grant was issued, the NSF seeks to address fundamental technical and scientific issues that support much needed transformation of healthcare from reactive and hospital-centered to preventive, proactive, evidence-based, person-centered and focused on wellbeing rather than disease. This is a Type I: Exploratory Project, which means that it will investigate the proof-of-concept or feasibility of a novel technology, including processes and approaches that promote smart health and wellbeing.

“We are very excited about the NSF’s support of our research, which will enable us to make the leap from breath-gas testing devices to actual breath-test diagnostics for asthma and other airway diseases,” said Professor Gouma. “Our team brings together multidisciplinary expertise that spans the science, engineering and medical fields and aims to use the latest nanotechnologies to provide the public with affordable, personalized, non-invasive, nitric oxide breath diagnostic devices.”

According to Professor Gouma, the technology studied in this project provides an effective and practical means to quantitate nitric oxide levels in breath in a relatively simple and noninvasive way – detecting fractional exhaled nitric oxide, a known biomarker for measuring airway inflammation. “The device will be especially suitable for use by a wide range of compromised individuals, such as the elderly, young children and otherwise incapacitated patients,” she added.
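For context on what a fractional exhaled nitric oxide reading means, the American Thoracic Society's 2011 clinical practice guideline on interpreting exhaled nitric oxide uses cut points of 25 and 50 parts per billion (ppb) for adults, and 20 and 35 ppb for children under 12, to separate low, intermediate and high values. The sketch below encodes only those cut points; it is not the Stony Brook device's algorithm, and the function name is hypothetical.

```python
def feno_category(ppb: float, child: bool = False) -> str:
    """Classify a fractional exhaled nitric oxide reading given in ppb.

    Cut points as in the 2011 ATS guideline: adults below 25 low, above 50 high;
    children under 12 below 20 low, above 35 high; values in between intermediate.
    """
    low, high = (20.0, 35.0) if child else (25.0, 50.0)
    if ppb < low:
        return "low"
    if ppb > high:
        return "high"
    return "intermediate"
```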

Since 2002, Professor Gouma has received funding from the NSF to develop sensor nanotechnologies for medical applications. In 2003, she received an NSF NIRT (Nanoscale Interdisciplinary Research Team) award, which helped to establish the Center for Nanomaterials and Sensor Development. Professor Gouma’s research has been featured by the NSF Science Nation and has been widely publicized in the media, including Fox News’ Sunday Housecall; Scientific American; Personalized Medicine; Women’s Health and more. She is a Fulbright Scholar, serves as an Associate Editor of IEEE Sensors Journal and the Journal of the American Ceramic Society.

Asthma – A chronic disease that causes an inflammation and narrowing of the lung’s airways. Symptoms include chest tightness, wheezing, shortness of breath and coughing.

Contacts and sources:

Inside Science TV (ISTV)

Transiting Exoplanet Survey Satellite (TESS) To See Exoplanets

April 8th, 2013 By Alton Parrish.

TESS team partners include the MIT Kavli Institute for Astrophysics and Space Research (MKI) and MIT Lincoln Laboratory; NASA’s Goddard Spaceflight Center; Orbital Sciences Corporation; NASA’s Ames Research Center; the Harvard-Smithsonian Center for Astrophysics; The Aerospace Corporation; and the Space Telescope Science Institute.

The project, led by principal investigator George Ricker, a senior research scientist at MKI, will use an array of wide-field cameras to perform an all-sky survey to discover transiting exoplanets, ranging from Earth-sized planets to gas giants, in orbit around the brightest stars in the sun’s neighborhood.

An exoplanet is a planet orbiting a star other than the sun; a transiting exoplanet is one that periodically eclipses its host star.
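The size of the dip shows why Earth-sized planets are hard to find: during a full transit, the fraction of starlight blocked is roughly the ratio of the planet's and the star's disk areas, (Rp/Rs)^2. This sketch ignores limb darkening and grazing transits and uses mean radii for the Sun, Earth and Jupiter; the function name is ours.

```python
R_SUN_KM = 695_700.0      # nominal solar radius
R_EARTH_KM = 6_371.0      # mean Earth radius
R_JUPITER_KM = 69_911.0   # mean Jupiter radius


def transit_depth(planet_radius_km: float, star_radius_km: float = R_SUN_KM) -> float:
    """Fraction of starlight blocked during a full transit, approximately (Rp / Rs)**2."""
    return (planet_radius_km / star_radius_km) ** 2


earth_like = transit_depth(R_EARTH_KM)      # about 8.4e-5, i.e. roughly 84 parts per million
jupiter_like = transit_depth(R_JUPITER_KM)  # about 0.010, i.e. roughly 1 %
```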

“TESS will carry out the first space-borne all-sky transit survey, covering 400 times as much sky as any previous mission,” Ricker says. “It will identify thousands of new planets in the solar neighborhood, with a special focus on planets comparable in size to the Earth.”

TESS relies upon a number of innovations developed by the MIT team over the past seven years. “For TESS, we were able to devise a special new ‘Goldilocks’ orbit for the spacecraft — one which is not too close, and not too far, from both the Earth and the moon,” Ricker says.

As a result, every two weeks TESS approaches close enough to the Earth for high data-downlink rates, while remaining above the planet’s harmful radiation belts. This special orbit will remain stable for decades, keeping TESS’s sensitive cameras in a very stable temperature range.
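The "every two weeks" figure follows from the published design of the TESS orbit, which is in 2:1 resonance with the Moon: the spacecraft completes two orbits per lunar sidereal month, about 13.7 days each. Kepler's third law then gives the orbit's size. The sketch below is a back-of-the-envelope check that ignores lunar and solar perturbations; the names are ours.

```python
import math

GM_EARTH = 3.986004418e14          # Earth's gravitational parameter, m^3 s^-2
SIDEREAL_MONTH_DAYS = 27.321661    # Moon's orbital period relative to the stars


def semi_major_axis_m(period_s: float, gm: float = GM_EARTH) -> float:
    """Kepler's third law solved for the semi-major axis: a = (GM T^2 / 4 pi^2)^(1/3)."""
    return (gm * period_s ** 2 / (4.0 * math.pi ** 2)) ** (1.0 / 3.0)


period_days = SIDEREAL_MONTH_DAYS / 2.0          # 2:1 resonance: two orbits per lunar month
a = semi_major_axis_m(period_days * 86_400.0)    # roughly 2.4e8 m (about 241,000 km)
```

The result is about 0.63 of the Moon's distance, as expected from (1/2)^(2/3) for an orbit with half the Moon's period.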

With TESS, it will be possible to study the masses, sizes, densities, orbits and atmospheres of a large cohort of small planets, including a sample of rocky worlds in the habitable zones of their host stars. TESS will provide prime targets for further characterization by the James Webb Space Telescope, as well as other large ground-based and space-based telescopes of the future.

TESS project members include Ricker; Josh Winn, an associate professor of physics at MIT; and Sara Seager, a professor of planetary science and physics at MIT.

“We’re very excited about TESS because it’s the natural next step in exoplanetary science,” Winn says.

“The selection of TESS has just accelerated our chances of finding life on another planet within the next decade,” Seager adds.

MKI research scientists Roland Vanderspek and Joel Villasenor will serve as deputy principal investigator and payload scientist, respectively. Principal research scientist Alan Levine serves as a co-investigator. Tony Smith of Lincoln Lab will manage the TESS payload effort; Lincoln Lab will develop the optical cameras and custom charge-coupled devices required by the mission.

“NASA’s Explorer Program gives us a wonderful opportunity to carry out forefront space science with a relatively small university-based group and on a time scale well-matched to the rapidly evolving field of extrasolar planets,” says Jackie Hewitt, a professor of physics and director of the Kavli Institute for Astrophysics and Space Research. “At MIT, TESS has the involvement of faculty and research staff of the Kavli Institute, the Department of Physics, and the Department of Earth, Atmospheric, and Planetary Sciences, so we will be actively engaging students in this exciting work.”

Previous sky surveys with ground-based telescopes have mainly picked out giant exoplanets. NASA’s Kepler spacecraft has recently uncovered the existence of many smaller exoplanets, but the stars Kepler examines are faint and difficult to study. In contrast, TESS will examine a large number of small planets around the very brightest stars in the sky.
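Why bright stars matter can be quantified with the astronomical magnitude scale: a star Δm magnitudes brighter delivers 10^(0.4 × Δm) times more light, and when photon noise dominates, the signal-to-noise ratio of a transit measurement grows as the square root of that. The magnitude difference is left as a parameter; no specific Kepler or TESS star magnitudes are assumed, and real instruments have other noise sources as well.

```python
import math


def flux_ratio(delta_mag: float) -> float:
    """Pogson's relation: delta_mag magnitudes brighter means 10**(0.4 * delta_mag) times the flux."""
    return 10.0 ** (0.4 * delta_mag)


def photon_limited_snr_gain(delta_mag: float) -> float:
    """Signal-to-noise gain when photon (shot) noise dominates: sqrt of the photon-count ratio."""
    return math.sqrt(flux_ratio(delta_mag))


# Example: 5 magnitudes brighter -> 100x the light -> 10x the signal-to-noise ratio.
```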

“The TESS legacy will be a catalog of the nearest and brightest main-sequence stars hosting transiting exoplanets, which will forever be the most favorable targets for detailed investigations,” Ricker said.

The other mission selected today by NASA is the Neutron Star Interior Composition Explorer (NICER). It will be mounted on the International Space Station and measure the variability of cosmic X-ray sources, a process called X-ray timing, to explore the exotic states of matter within neutron stars and reveal their interior and surface compositions. NICER’s principal investigator is Keith Gendreau of NASA’s Goddard Space Flight Center in Greenbelt, Md. The MKI group, led by Ricker, is also a partner in the NICER mission.

“The Explorer Program has a long and stellar history of deploying truly innovative missions to study some of the most exciting questions in space science,” John Grunsfeld, NASA’s associate administrator for science, said in the space agency’s statement today. “With these missions we will learn about the most extreme states of matter by studying neutron stars and we will identify many nearby star systems with rocky planets in the habitable zone for further study by telescopes such as the James Webb Space Telescope.”

The Explorer Program is NASA’s oldest continuous program and has launched more than 90 missions. It began in 1958 with Explorer 1, which discovered the Earth’s radiation belts. Another Explorer mission, the Cosmic Background Explorer, led to a Nobel Prize. NASA’s Goddard Space Flight Center manages the program for the agency’s Science Mission Directorate in Washington.

Global Solar Photovoltaic Industry Is Likely Now A Net Energy Producer

April 8th, 2013 By Alton Parrish.

The rapid growth of the solar power industry over the past decade may have exacerbated the global warming situation it was meant to soothe, simply because most of the energy used to manufacture the millions of solar panels came from burning fossil fuels. That irony, according to Stanford University researchers, is coming to an end.

For the first time since the boom started, the electricity generated by all of the world’s installed solar photovoltaic (PV) panels last year probably surpassed the amount of energy going into fabricating more modules, according to Michael Dale, a postdoctoral fellow at Stanford’s Global Climate & Energy Project (GCEP). With continued technological advances, the global PV industry is poised to pay off its energy debt as early as 2015, and no later than 2020.
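The tension between rapid growth and net energy can be seen in a toy model. If a fleet grows by a fraction g per year and each new panel needs T years of its own output to repay the energy used to make it (the energy payback time), the fleet's yearly output exceeds the energy spent on new panels only when g × T < 1. The sketch below is our simplification, not the method of the Stanford study; it ignores the lag between manufacture and operation and panel degradation, and the function names and example numbers are hypothetical.

```python
def net_energy_per_year(capacity: float, growth_rate: float,
                        payback_years: float, output_per_unit: float = 1.0) -> float:
    """Toy model of a PV fleet in steady exponential growth.

    capacity        installed capacity (arbitrary units)
    growth_rate     new capacity added per year as a fraction of capacity (0.4 = 40 %)
    payback_years   energy payback time: embodied energy of a unit / its annual output
    Returns this year's fleet output minus the energy spent building new units.
    """
    produced = capacity * output_per_unit
    invested = growth_rate * capacity * payback_years * output_per_unit
    return produced - invested


def is_net_producer(growth_rate: float, payback_years: float) -> bool:
    """Equivalent closed-form test: output exceeds investment when g * T < 1."""
    return growth_rate * payback_years < 1.0
```

For example, with a two-year payback time, 40 % annual growth leaves an energy surplus, while 60 % growth runs a deficit; shrinking the payback time is what lets fast growth and net production coexist.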

“This analysis shows that the industry is making positive strides,” said Dale, who developed a novel way of assessing the industry’s progress globally in a study published in the current edition of Environmental Science & Technology. “Despite its fantastically fast growth rate, PV is producing – or just about to start producing – a net energy benefit to society.”

Brian Webster (left) and Mario Richard install photovoltaic (PV) modules on an Englewood, Colo., home. Manufacturing and installing solar panels require large amounts of electricity. But Stanford scientists have found that the global PV industry likely produces more electricity than it consumes.

Credit: Dennis Schroeder/NREL

The achievement is largely due to steadily declining energy inputs required to manufacture and install PV systems, according to co-author Sally Benson, GCEP’s director. The new study, Benson said, indicates that the amount of energy going into the industry should continue to decline, while the issue remains an important focus of research.

“GCEP is focused on developing game-changing energy technologies that can be deployed broadly. If we can continue to drive down the energy inputs, we will derive greater benefits from PV,” she said. “Developing new technologies with lower energy requirements will allow us to grow the industry at a faster rate.”

Producing solar panels is energy-intensive. The initial step in producing the silicon at the heart of most panels is to melt silica rock at 3,000 degrees Fahrenheit using electricity, commonly from coal-fired power plants.

As investment and technological development have risen sharply with the number of installed panels, the energetic costs of new PV modules have declined. Thinner silicon wafers are now used to make solar cells, less highly refined materials are now used as the silicon feedstock, and less of the costly material is lost in the manufacturing process. Thin-film solar cells that rely on earth-abundant materials such as copper, zinc, tin and carbon have the potential for even greater improvements.

To be considered a success – or simply a positive energy technology – PV panels must ultimately pay back all the energy that went into them, said Dale. The PV industry has run an energy deficit since 2000; as recently as five years ago, it consumed 75 percent more energy than it produced. The researchers expect this energy debt to be paid off as early as 2015, thanks to declining energy inputs, more durable panels and more efficient conversion of sunlight into electricity.

Strategic implications

If current rapid growth rates persist, by 2020 about 10 percent of the world’s electricity could be produced by PV systems. At today’s energy payback rate, producing and installing the new PV modules would consume around 9 percent of global electricity. However, if the energy intensity of PV systems continues to drop at its current learning rate, then by 2020 less than 2 percent of global electricity will be needed to sustain growth of the industry.

This may not happen unless special attention is given to reducing energy inputs. The PV industry’s energetic costs can differ significantly from its financial costs. For example, installation and the components outside the solar cells, like wiring and inverters, as well as soft costs like permitting, account for a third of the financial cost of a system, but only 13 percent of the energy inputs. Yet the industry is focused primarily on reducing financial costs.

Continued reduction of the energetic costs of producing PV panels can be accomplished in a variety of ways, such as using less material or switching to producing panels that have much lower energy costs than technologies based on silicon. The study’s data covers the various silicon-based technologies as well as newer ones using cadmium telluride and copper indium gallium diselenide as semiconductors. Together, these types of PV panels account for 99 percent of installed panels.

The energy payback time can also be reduced by installing PV panels in locations with high quality solar resources, like the desert Southwest in the United States and the Middle East. “At the moment, Germany makes up about 40 percent of the installed market, but sunshine in Germany isn’t that great,” Dale said. “So from a system perspective, it may be better to deploy PV systems where there is more sunshine.”

This accounting of energetic costs and benefits, say the researchers, should be applied to any new energy-producing technology, as well as to energy conservation strategies that have large upfront energetic costs, such as retrofitting buildings. GCEP researchers have begun applying the analysis to energy storage and wind power.

Star Formation Surprisingly Close To Galaxy’s Supermassive Black Hole

April 4th, 2013 By Alton Parrish.

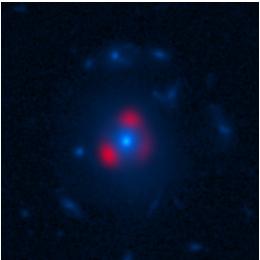

Credit: Vieira et al., ALMA (ESO, NAOJ, NRAO), NASA, NRAO/AUI/NSF.

The results, published in a set of papers to appear in the journal Nature and in the Astrophysical Journal, will help astronomers better understand when and how the earliest massive galaxies formed.

The most intense bursts of star birth are thought to have occurred in the early Universe in massive, bright galaxies. These starburst galaxies converted vast reservoirs of gas and dust into new stars at a furious pace – many thousands of times faster than stately spiral galaxies like our own Milky Way.

The international team of researchers first discovered these distant starburst galaxies with the National Science Foundation’s 10-meter South Pole Telescope. Though dim in visible light, they were glowing brightly in millimeter wavelength light, a portion of the electromagnetic spectrum that the new ALMA telescope was designed to explore.

Using only 16 of ALMA’s eventual full complement of 66 antennas, the researchers were able to precisely determine the distance to 18 of these galaxies, revealing that they were among the most distant starburst galaxies ever detected, seen when the Universe was only one to three billion years old. These results were surprising because very few similar galaxies had previously been discovered at similar distances, and it wasn’t clear how galaxies that early in the history of the Universe could produce stars at such a prodigious rate.

“The more distant the galaxy, the further back in time one is looking, so by measuring their distances we can piece together a timeline of how vigorously the Universe was making new stars at different stages of its 13.7 billion-year history,” said Joaquin Vieira, a postdoctoral scholar at Caltech who led the team and is lead author of the Nature paper.

In fact, two of these galaxies are the most distant starburst galaxies published to date — so distant that their light began its journey when the Universe was only one billion years old. Intriguingly, emission from water molecules was detected in one of these record-breakers, making it the most distant detection of water in the Universe published to date.

“ALMA’s sensitivity and wide wavelength range mean we could make our measurements in just a few minutes per galaxy — about one hundred times faster than before,” said Axel Weiss of the Max-Planck-Institute for Radioastronomy in Bonn, Germany, who led the work to measure the distances to the galaxies. “Previously, a measurement like this would be a laborious process of combining data from both visible-light and radio telescopes.”

The galaxies found in this study have relatives in the local Universe, but the intensity of star formation in these distant objects is unlike anything seen nearby. “Our most extreme galactic neighbors are not forming stars nearly as energetically as the galaxies we observed with ALMA,” said Vieira. “These are monstrous bursts of star formation.”

The new results indicate these galaxies are forming 1,000 stars per year, compared to just 1 per year for our Milky Way galaxy.

This unprecedented measurement was made possible by gravitational lensing, in which the light from a distant galaxy is distorted and magnified by the gravitational force of a nearer foreground galaxy. “These beautiful pictures from ALMA show the background galaxies warped into arcs of light known as Einstein rings, which encircle the foreground galaxies,” said Yashar Hezaveh of McGill University in Montreal, Canada, who led the study of the gravitational lensing. “The dark matter surrounding galaxies half-way across the Universe effectively provides us with cosmic telescopes that make the very distant galaxies appear bigger and brighter.”

Analysis of this gravitational distortion reveals that some of the distant star-forming galaxies are as bright as 40 trillion Suns, and that gravitational lensing has magnified this light by up to 22 times. Future observations with ALMA using gravitational lensing can take a more detailed look at the distribution of dark matter surrounding galaxies.

“This is an amazing example of astronomers from around the world collaborating to make an exciting discovery with this new facility,” said Daniel Marrone with the University of Arizona, principal investigator of the ALMA gravitational lensing study. “This is just the beginning for ALMA and for the study of these starburst galaxies. Our next step is to study these objects in greater detail and figure out exactly how and why they are forming stars at such prodigious rates.”

ALMA, an international astronomy facility, is a partnership of Europe, North America and East Asia in cooperation with the Republic of Chile. ALMA construction and operations are led on behalf of Europe by ESO, on behalf of North America by the National Radio Astronomy Observatory (NRAO), and on behalf of East Asia by the National Astronomical Observatory of Japan (NAOJ). The Joint ALMA Observatory (JAO) provides the unified leadership and management of the construction, commissioning and operation of ALMA.

The National Radio Astronomy Observatory is a facility of the National Science Foundation, operated under cooperative agreement by Associated Universities, Inc.

Toxin Crops A Bad Idea, Say Scientists

April 1st, 2013 By Alton Parrish.

A major agricultural pest, the moth Helicoverpa zea and its caterpillar go by many common names, depending on the crop they feed on: shown here is a “corn earworm.”

Credit: Jose Roberto Peruca

A strategy widely used to prevent pests from quickly adapting to crop-protecting toxins may fail in some cases unless better preventive actions are taken, suggests new research by University of Arizona entomologists published in the Proceedings of the National Academy of Sciences.

Corn and cotton have been genetically modified to produce pest-killing proteins from the bacterium Bacillus thuringiensis, or Bt for short. Compared with typical insecticide sprays, the Bt toxins produced by genetically engineered crops are much safer for people and the environment, explained Yves Carrière, a professor of entomology in the UA College of Agriculture and Life Sciences who led the study.

Although Bt crops have helped to reduce insecticide sprays, boost crop yields and increase farmer profits, their benefits will be short-lived if pests adapt rapidly, said Bruce Tabashnik, a co-author of the study and head of the UA department of entomology. “Our goal is to understand how insects evolve resistance so we can develop and implement more sustainable, environmentally friendly pest management,” he said.

Bt crops were first grown widely in 1996, and several pests have already become resistant to plants that produce a single Bt toxin. To thwart further evolution of pest resistance to Bt crops, farmers have recently shifted to the “pyramid” strategy: each plant produces two or more toxins that kill the same pest. As reported in the study, the pyramid strategy has been adopted extensively, with two-toxin Bt cotton completely replacing one-toxin Bt cotton since 2011 in the U.S.

Most scientists agree that two-toxin plants will be more durable than one-toxin plants. The extent of the advantage of the pyramid strategy, however, rests on assumptions that are not always met, the study reports. Based on lab experiments, computer simulations and analysis of published experimental data, the new results help explain why one major pest has started to become resistant faster than anticipated.

“The pyramid strategy has been touted mostly on the basis of simulation models,” said Carrière. “We tested the underlying assumptions of the models in lab experiments with a major pest of corn and cotton. The results provide empirical data that can help to improve the models and make the crops more durable.”

One critical assumption of the pyramid strategy is that the crops provide redundant killing, Carrière explained. “Redundant killing can be achieved by plants producing two toxins that act in different ways to kill the same pest,” he said, “so, if an individual pest has resistance to one toxin, the other toxin will kill it.”

Credit: Thierry Brevault/CIRAD

“We obviously can’t release resistant insects into the field, so we breed them in the lab and bring in the crop plants to do feeding experiments,” Carrière said. For their experiments, the group collected cotton bollworm (also known as corn earworm, Helicoverpa zea), a moth species that is a major agricultural pest, and selected it for resistance against one of the Bt toxins, Cry1Ac.

As expected, the resistant caterpillars survived after munching on cotton plants producing only that toxin. The surprise came when Carrière’s team put them on pyramided Bt cotton containing Cry2Ab in addition to Cry1Ac.

If the assumption of redundant killing is correct, caterpillars resistant to the first toxin should survive on one-toxin plants, but not on two-toxin plants, because the second toxin should kill them, Carrière explained.

“But on the two-toxin plants, the caterpillars selected for resistance to one toxin survived significantly better than caterpillars from a susceptible strain.”

These findings show that the crucial assumption of redundant killing does not apply in this case and may also explain the reports indicating some field populations of cotton bollworm rapidly evolved resistance to both toxins.

Moreover, the team’s analysis of published data from eight species of pests reveals that some degree of cross-resistance between Cry1 and Cry2 toxins occurred in 19 of 21 experiments. Contradicting the concept of redundant killing, cross-resistance means that selection with one toxin increases resistance to the other toxin.

According to the study’s authors, even low levels of cross-resistance can reduce redundant killing and undermine the pyramid strategy. Carrière explained that this is especially problematic with cotton bollworm and some other pests that are not highly susceptible to Bt toxins to begin with.

The team found violations of other assumptions required for optimal success of the pyramid strategy. In particular, inheritance of resistance to plants producing only Bt toxin Cry1Ac was not recessive, which is expected to reduce the ability of refuges to delay resistance.

Insects can carry two forms (alleles) of a gene involved in resistance to Bt: one confers susceptibility and the other resistance. When resistance to a toxin is recessive, a single resistance allele is not sufficient to increase survival. In other words, offspring that inherit one allele of each type will not be resistant, while offspring that inherit two resistance alleles will be resistant.

Refuges consist of standard plants that do not make Bt toxins and thus allow survival of susceptible pests. Under ideal conditions, inheritance of resistance is recessive and the susceptible pests emerging from refuges greatly outnumber the resistant pests. If so, the matings between two resistant pests needed to produce resistant offspring are unlikely. But if inheritance of resistance is dominant instead of recessive, as seen with cotton bollworm, matings between a resistant moth and a susceptible moth can produce resistant offspring, which hastens resistance.
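Why dominance matters can be illustrated with a minimal sketch. It assumes a single resistance gene with two alleles, random mating and Hardy-Weinberg genotype proportions, and it ignores refuges, fitness costs and partial survival; these are textbook simplifications, not the models used in the study. The function name `resistant_fraction` and the 1 percent allele frequency in the example are hypothetical.

```python
def resistant_fraction(q: float, dominant: bool) -> float:
    """Fraction of offspring expected to survive on Bt plants (hypothetical helper).

    Assumes random mating and Hardy-Weinberg proportions: with resistance-allele
    frequency q and susceptibility-allele frequency p = 1 - q, offspring are
    RR with probability q**2, RS with probability 2*p*q and SS with probability p**2.
    """
    if not 0.0 <= q <= 1.0:
        raise ValueError("allele frequency must be between 0 and 1")
    p = 1.0 - q
    homozygous_resistant = q * q   # two resistance alleles: survives in both cases
    heterozygous = 2.0 * p * q     # one allele of each type
    if dominant:
        # One resistance allele is enough, so heterozygotes survive as well.
        return homozygous_resistant + heterozygous
    # Recessive: heterozygotes are killed; only RR offspring survive.
    return homozygous_resistant


if __name__ == "__main__":
    q = 0.01  # hypothetical resistance-allele frequency, for illustration only
    recessive = resistant_fraction(q, dominant=False)
    dominant = resistant_fraction(q, dominant=True)
    print(f"recessive: {recessive:.4%} survive")
    print(f"dominant:  {dominant:.4%} survive")
    print(f"ratio:     {dominant / recessive:.0f}x")
```

With a resistance-allele frequency of 1 percent, about 0.01 percent of offspring survive when resistance is recessive, but about 1.99 percent survive when it is dominant, roughly 199 times as many, because the heterozygous offspring of resistant-by-susceptible matings now survive too.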

According to Tabashnik, overly optimistic assumptions have led the EPA to greatly reduce requirements for planting refuges to slow evolution of pest resistance to two-toxin Bt crops.

The new results should come as a wake-up call to consider larger refuges to push resistance further into the future, Carrière pointed out. “Our simulations tell us that with 10 percent of acreage set aside for refuges, resistance evolves quite fast, but if you put 30 or 40 percent aside, you can substantially delay it.”

“Our main message is to be more cautious, especially with a pest like the cotton bollworm,” Carrière said. “We need more empirical data to refine our simulation models, optimize our strategies and really know how much refuge area is required. Meanwhile, let’s not assume that the pyramid strategy is a silver bullet.”

Contacts and sources:

Daniel Stolte

University of Arizona

Programming Synthetic Life: The Coming Biology Revolution

March 28th, 2013 By Alton Parrish.

Christine Fu photo.

Keynote speaker Juan Enriquez, a self-described “curiosity expert” and co-founder of the company Synthetic Genomics, compared the digital revolution spawned by thinking of information as a string of ones and zeros to the coming synthetic biology revolution, premised on thinking about life as a mix of interchangeable parts – genes and gene networks – that can be learned and manipulated like any language.

At the moment, this genetic manipulation, a natural outgrowth of genetic engineering, focuses on altering bacteria and yeast to produce products they wouldn’t normally make, such as fuels or drugs. The aim, in the words of moderator Corey Powell, editor at large of Discover, is “to do with biology what you would do if you were designing a piece of software.” Discover plans to publish a story about the conference and post the video online.

UC Berkeley chemical engineer Jay Keasling has been a key player in developing the field of synthetic biology over the last decade. Enriquez introduced Keasling as someone “who in his spare time goes out and tries to build stuff that will cure malaria, and biofuels and the next generation of clean tech, all while mentoring students at this university and at the national labs and creating whole new fields of science.”

Keasling, director of SynBERC, a UC Berkeley-led multi-institution collaboration that is laying the foundations for the field, expressed excitement about the newest development: the release next month by the pharmaceutical company sanofi aventis of a synthetic version of artemisinin, “the world’s best antimalarial drug,” he said. Sparked by discoveries in Keasling’s lab more than a decade ago, the drug is produced by engineered yeast and will be the first product from synthetic biology to reach the market.

“There are roughly 300 to 500 million cases of malaria each year,” he said. “Sanofi will initially produce about 100 million treatments, which will cover one-third to one-quarter of the need.”

Biofuels from yeast

As CEO of the Joint BioEnergy Institute, Keasling is now focused on engineering microbes to turn “a billion tons of biomass that go unutilized in the U.S. on an annual basis … into fuel, producing roughly a third of the need in the U.S.”

But other advances are on the horizon, he said, such as engineering new materials and engineering “green” replacements for all the products now made from petroleum. “Some of these have the potential to significantly reduce our carbon footprint by, say, 80 percent,” he said.

Christine Fu photo.

Virginia Ursin, Technology Prospecting Lead and Science Fellow at Monsanto Corp., noted that industry sees synthetic biology’s triumphs as being 10-20 years down the road, but anticipated, for example, producing enzymes used in manufacturing or even engineering microbes that live on plants to improve plant growth.

“Engineering (microbes) to increase their impact on (plant) health or protection against disease is probably going to be one of the nearer term impacts of synthetic biology on agriculture,” she said. Ultimately, she said, the field could have a revolutionary impact on agriculture similar to the green revolution sparked by the development of chemical fertilizers.

But the implications of being able to engineer cells go deeper, according to Enriquez.

“This isn’t just about economic growth, this is also about where we are going as a human species,” he said. Humans will no longer merely adapt to or adopt the environment, but “begin to understand how life is written, how life is coded, how life is copied, and how you can rewrite life.”

Scientists’ moral choices

Directly guiding “the evolution of microbes, bacteria, plants, animals and even ourselves,” as Enriquez put it, sounds like science fiction. George Church, a biologist at Harvard Medical School, suggested that we might want to bring back extinct animals, such as the mammoth, to help restore Arctic permafrost that is disintegrating under the impact of global warming.

In response, ethicist Laurie Zoloth of Northwestern University urged caution in exploiting the technology of synthetic biology.

“You could change the world, and you have a powerful technology,” she said. “I am more interested in what this technology makes of the women and men doing it. What sorts of interior moral choices they need to be making and how you create scientists who aren’t only good at all these technical skills but very good at asking and thinking seriously about ethical and moral questions and coming to terms with the implications of their work.”

Given the current crises of climate change and ecological change, she added, “frankly, without this work, I don’t think we have such great answers for (them).”

Church acknowledged that “we have an obligation to do it right. But because our environment, our world is changing, the decision to do nothing is a gigantic risk. The decision to do a particular new thing is a risk. We have to get better at risk assessment and safety engineering.”

Drew Endy, a bioengineering professor at Stanford, summed up his hopes for synthetic biology. “What I would like to imagine as a longer-term encompassing vision is that humanity figured out how to reinvent the manufacturing of the things we need so that we can do it in partnership with nature; not to replace nature, but to dance better with it in sustaining what it means to be a flourishing human civilization.”

By Robert Sanders,

University of California Berkeley

Dawn Of Time Mapped

March 25th, 2013 By Alton Parrish.

“Roughly speaking, the things that we are finding that are not as we expect are features that are across the whole sky. When you look only at the large features on this map, you find that our best-fitting model, our best theory, has a problem fitting the data; there is a lack of signal that we would expect to see,” he says.

The news that the early universe is not quite as was thought has left the greatest minds in cosmology spinning with excitement.

George Efstathiou, Professor of Astrophysics, University of Cambridge, is a key member of the Planck Science Team.

“The idea that you can actually experimentally test what happened at the Big Bang still amazes me,” he says.

The Big Bang theory remains intact, of course, but the concept of inflation could be put to the test by the Planck data.

“We see these strange patterns that are not expected in inflationary theory, the simplest inflationary theories,” explains Efstathiou.

“So there’s a real possibility that we have an incomplete picture. It may be that we have been fooled, that inflation didn’t happen. It’s perfectly possible that there was some phase of the universe before the Big Bang actually happened where you can track the history of the universe to a pre-Big Bang period.”

The Planck mission could test ideas about how the early universe was formed.

The puzzle is that at small scales the data fits the theoretical model very nicely, but at larger scales the signal from the cosmic microwave background is much weaker than expected.

Efstathiou is looking for answers: “Can we find a theoretical explanation that links together the different phenomena that we have seen, the different little discrepancies, with inflationary theory? That’s where there’s the potential for a paradigm shift, because at the moment there’s no obvious theoretical explanation that links together these anomalies that we have seen. But if you found a theory that links phenomena that were previously unrelated, then that’s a pointer to new physics.”

It appears that the audacious Planck mission really will shed new light on the dawn of time.

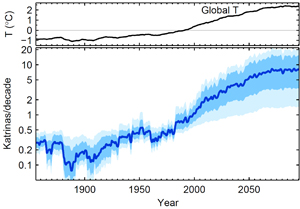

Ten Times More Hurricanes Like Katrina Expected

March 19th, 2013 By Alton Parrish.

“Instead of choosing between the two methods, I have chosen to use temperatures from all around the world and combine them into a single model,” explains climate scientist Aslak Grinsted, Centre for Ice and Climate at the Niels Bohr Institute at the University of Copenhagen.

He takes into account the individual statistical models and weights them according to how good they are at explaining past storm surges. In this way, he sees that the model reflects the known physical relationships, for example, how the El Niño phenomenon affects the formation of cyclones. The research was performed in collaboration with colleagues from China and England.

Credit: Background image: NASA/GSFC

Now 10 times as many storms like Katrina are expected

“We find that 0.4 degrees Celsius warming of the climate corresponds to a doubling of the frequency of extreme storm surges like the one following Hurricane Katrina. With the global warming we have had during the 20th century, we have already crossed the threshold where more than half of all ‘Katrinas’ are due to global warming,” explains Aslak Grinsted.
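Grinsted’s “more than half” threshold follows from the doubling relationship alone. As a rough sketch, assuming extreme-surge frequency doubles for every 0.4 °C of warming (a constant exponential scaling used here only for illustration; the study’s projections come from its full statistical model), the frequency multiplier for a warming ΔT is 2^(ΔT/0.4), and the fraction of events attributable to that warming is 1 − 1/multiplier. At 0.4 °C the multiplier is 2 and the attributable fraction is exactly one half, so any warming beyond that means more than half of such events are due to it. The function names below are hypothetical.

```python
DOUBLING_WARMING_C = 0.4  # warming (deg C) that doubles extreme-surge frequency, per the quote above


def frequency_multiplier(warming_c: float) -> float:
    """Factor by which extreme-surge frequency rises for a given warming,
    assuming a doubling for every DOUBLING_WARMING_C of warming."""
    return 2.0 ** (warming_c / DOUBLING_WARMING_C)


def attributable_fraction(warming_c: float) -> float:
    """Share of extreme surges that would not have occurred without the warming:
    1 - baseline_frequency / warmed_frequency."""
    return 1.0 - 1.0 / frequency_multiplier(warming_c)


if __name__ == "__main__":
    # Illustrative warming levels only; these are not values reported in the study.
    for warming in (0.2, 0.4, 0.6):
        print(f"{warming:.1f} deg C: frequency x{frequency_multiplier(warming):.2f}, "
              f"{attributable_fraction(warming):.0%} attributable")
```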

Credit: Niels Bohr Institute

The extreme storm surge from Superstorm Sandy in autumn 2012 flooded large sections of New York and other coastal cities in the region – here you see Marblehead, Massachusetts. New research shows that such hurricane surges will become more frequent in a warmer climate.

Article in PNAS

Mysterious And Intriguing Zw II 28

March 16th, 2013

Credit: ESA/Hubble and NASA

The sparkling pink and purple loop of Zw II 28 is not a typical ring galaxy due to its lack of a visible central companion. For many years it was thought to be a lone circle on the sky, but observations using Hubble have shown that there may be a possible companion lurking just inside the ring, where the loop appears to double back on itself. The galaxy has a knot-like, swirling ring structure, with some areas appearing much brighter than others.

3-D Image Of Buried Mars Flood Channels

March 8th, 2013 By Alton Parrish.

The spacecraft took numerous images during the past few years that showed channels attributed to catastrophic flooding in the last 500 million years. Mars during this period had been considered cold and dry. These channels are essential to understanding the extent to which recent hydrologic activity prevailed during such arid conditions. They also help scientists determine whether the floods could have induced episodes of climate change.

This illustration schematically shows where the Shallow Radar instrument on NASA’s Mars Reconnaissance Orbiter detected flood channels that had been buried by lava flows in the Elysium Planitia region of Mars.

Marte Vallis consists of multiple perched channels formed around streamlined islands. These channels feed a deeper and wider main channel.

In this illustration, the surface has been elevated, and scaled by a factor of one to 100 for clarity. The color scale represents the elevation of the buried channels relative to a Martian datum, or reference elevation. The values are negative because the surface of Mars in this region is itself below the average global elevation.

Credit: NASA/JPL-Caltech/Sapienza University of Rome/Smithsonian Institution/USGS

SHARAD was provided by the Italian Space Agency. Its operations are led by Sapienza University of Rome, and its data are analyzed by a joint U.S.-Italian science team. NASA’s Jet Propulsion Laboratory, a division of the California Institute of Technology in Pasadena, manages the Mars Reconnaissance Orbiter for the NASA Science Mission Directorate, Washington. Lockheed Martin Space Systems, Denver, built the spacecraft.

The estimated size of the flooding appears to be comparable to the ancient mega flood that created the Channeled Scablands in the Pacific Northwest region of the United States in eastern Washington.

The findings are reported in the March 7 issue of Science Express by a team of scientists from NASA, the Smithsonian Institution, and the Southwest Research Institute in Houston.

“Our findings show the scale of erosion that created the channels previously was underestimated and the channel depth was at least twice that of previous approximations,” said Gareth Morgan, a geologist at the National Air and Space Museum’s Center for Earth and Planetary Studies in Washington and lead author on the paper. “This work demonstrates the importance of orbital sounding radar in understanding how water has shaped the surface of Mars.”

The channels lie in Elysium Planitia, an expanse of plains along the Martian equator and the youngest volcanic region on the planet. Extensive volcanism throughout the last several hundred million years covered most of the surface of Elysium Planitia, and this buried evidence of Mars’ older geologic history, including the source and most of the length of the 620-mile-long (1000-kilometer-long) Marte Vallis channel system. To probe the length, width and depth of these underground channels, the researchers used MRO’s Shallow Radar (SHARAD).

Marte Vallis’ morphology is similar to more ancient channel systems on Mars, especially those of the Chryse basin. Many scientists think the Chryse channels likely were formed by the catastrophic release of ground water, although others suggest lava can produce many of the same features. In comparison, little is known about Marte Vallis.

With the SHARAD radar, the team was able to map the buried channels in three dimensions with enough detail to see evidence suggesting two different phases of channel formation. One phase etched a series of smaller branching, or “anastomosing,” channels that are now on a raised “bench” next to the main channel. These smaller channels flowed around four streamlined islands. A second phase carved the deep, wide channels.

“In this region, the radar picked up multiple ‘reflectors,’ which are surfaces or boundaries that reflect radio waves, so it was possible to see multiple layers,” said Lynn Carter, the paper’s co-author from NASA’s Goddard Space Flight Center in Greenbelt, Md. “We have rarely seen that in SHARAD data outside of the polar ice regions of Mars.”

The mapping also provided sufficient information to establish that the floods that carved the channels originated from a now-buried portion of the Cerberus Fossae fracture system. The water could have accumulated in an underground reservoir and been released by tectonic or volcanic activity.

“While the radar was probing thick layers of dry, solid rock, it provided us with unique information about the recent history of water in a key region of Mars,” said co-author Jeffrey Plaut of NASA’s Jet Propulsion Laboratory (JPL), Pasadena, Calif.

The Italian Space Agency provided the SHARAD instrument on MRO and Sapienza University of Rome leads its operations. JPL manages MRO for NASA’s Science Mission Directorate in Washington. Lockheed Martin Space Systems of Denver built the orbiter and supports its operations.

This image shows the entire distance traveled by the rover from the landing site (dark smudge at left) to its location as of 2 January 2013 (the rover is the bright feature at right). The tracks are not visible where the rover has recently driven over the lighter-toned surface, which may be more indurated than the darker soil.

Credit: NASA/JPL/University of Arizona

Source: NASA

Helicoprion: 270 Million Year Old Mystery Of Teeth Like Circular Saw Blade

March 4th, 2013. By Alton Parrish.

The Idaho State University (ISU) Museum of Natural History has the largest public collection of Helicoprion spiral-teeth fossils in the world. The fossils of this 270-million-year-old fish have long mystified scientists because, for the most part, the only remains of the fish are its teeth: its skeleton was made of cartilage, which doesn’t preserve well. No one could determine how these teeth, which look similar to a spiral saw blade, fit into a prehistoric fish with a poor fossil record that was long assumed to be a species of shark.

“New CT scans of a unique specimen from Idaho show the spiral of teeth within the jaws of the animal, giving new information on what the animal looked like, how it ate,” said Leif Tapanila, principal investigator of the study, who is an ISU Associate Professor of Geosciences and Idaho Museum of Natural History division head and research curator.

Scientists at Idaho State University used CT scans of newly discovered specimens to make three-dimensional virtual reconstructions of the jaws of the ancient fish Helicoprion.



“We were able to answer where the set of teeth fit in the animal,” Tapanila said. “They fit in the back of the mouth, right next to the back joint of the jaw. We were able to refute that it might have been located at the front of the jaw.”

Located in the back of the jaw, the teeth were “saw-like,” with the jaw creating a rolling-back and slicing mechanism. Helicoprion also likely ate soft-tissued prey such as squid, rather than hunting creatures with hard shells.

Another major find was that this famous fish, presumed to be a shark, is more closely related to ratfish. “The main thing it has in common with sharks is the structure of its teeth; everything else is Holocephalan (like a ratfish),” Tapanila said.

Based on the three-dimensional virtual reconstruction of Helicoprion’s jaw, the Idaho Museum of Natural History is creating a full-bodied reconstruction of a modest-sized, 13-foot-long Helicoprion. Larger specimens are thought to have grown as long as 25 feet.

Photo by Ray Troll

Most of the authors of the paper. Left to right: Jason Ramsay, Cheryl Wilga, Alan Pradel, Robert Schlader, Jesse Pruitt and Leif Tapanila working on the CT specimen of Helicoprion.

Photo by Ray Troll



Ratfish and sharks are both fish with cartilage, rather than bone, for a skeletal structure, but they are classified differently.

From the 3-D virtual reconstruction of Helicoprion’s jaw, the ISU researchers can also infer other characteristics of the fish. The museum’s full-bodied model will be part of the IMNH’s new Helicoprion exhibit opening this summer, which includes artwork by Ray Troll, a well-regarded scientific illustrator and fine artist.

The ISU team of researchers on this project included Tapanila, Jesse Pruitt, Alan Pradel, Cheryl D. Wilga, Jason B. Ramsay, Robert Schlader and Dominique Didier. Support for the project, which will include three more scientific studies on different aspects of the Helicoprion, was provided by the National Science Foundation, Idaho Museum of Natural History, American Museum of Natural History, University of Rhode Island and Millersville University.

Contacts and sources:

For more information about the Idaho Virtualization Laboratory, visit vl.imnh.isu.edu.

DNA Building Blocks Found Between Stars

March 1st, 2013. By Alton Parrish.

Using new technology at the telescope and in laboratories, researchers have discovered an important pair of prebiotic molecules in interstellar space. The discoveries indicate that some basic chemicals that are key steps on the way to life may have formed on dusty ice grains floating between the stars.

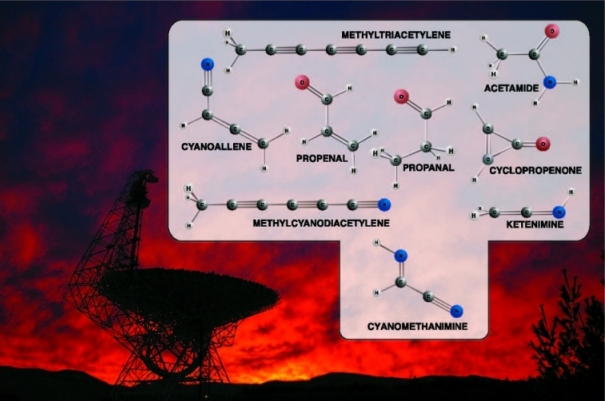

In the video below, students and their astronomer-adviser share the excitement of discovery.

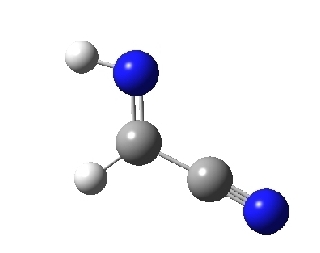

One of the newly-discovered molecules, called cyanomethanimine, is one step in the process that chemists believe produces adenine, one of the four nucleobases that form the “rungs” in the ladder-like structure of DNA. The other molecule, called ethanamine, is thought to play a role in forming alanine, one of the twenty amino acids in the genetic code.

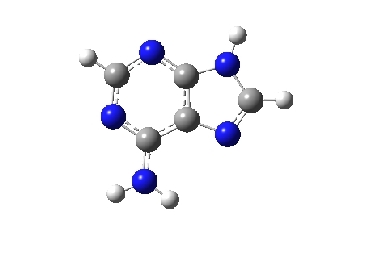

Structure of cyanomethanimine, newly discovered in interstellar space. Blue=nitrogen, grey=carbon, white=hydrogen.

Credit: NRAO/AUI/NSF.

“Finding these molecules in an interstellar gas cloud means that important building blocks for DNA and amino acids can ‘seed’ newly-formed planets with the chemical precursors for life,” said Anthony Remijan, of the National Radio Astronomy Observatory (NRAO).

In each case, the newly discovered interstellar molecules are intermediate stages in multi-step chemical processes leading to the final biological molecule. Details of the processes remain unclear, but the discoveries give new insight into where these processes occur.


Previously, scientists thought such processes took place in the very tenuous gas between the stars. The new discoveries, however, suggest that the chemical formation sequences for these molecules occurred not in gas, but on the surfaces of ice grains in interstellar space.

“We need to do further experiments to better understand how these reactions work, but it could be that some of the first key steps toward biological chemicals occurred on tiny ice grains,” Remijan said.

The discoveries were made possible by new technology that speeds the process of identifying the “fingerprints” of cosmic chemicals. Each molecule has a specific set of rotational states that it can assume. When it changes from one state to another, a specific amount of energy is either emitted or absorbed, often as radio waves at specific frequencies that can be observed with the Green Bank Telescope (GBT).

New laboratory techniques have allowed astrochemists to measure the characteristic patterns of such radio frequencies for specific molecules. Armed with that information, they can then match that pattern against the data received by the telescope. Laboratories at the University of Virginia and the Harvard-Smithsonian Center for Astrophysics measured radio emission from cyanomethanimine and ethanamine, and the frequency patterns from those molecules were then matched to publicly available data from a survey done with the GBT from 2008 to 2011.
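The pattern-matching step described above can be illustrated with a toy sketch: given a molecule's laboratory-measured line frequencies and a list of peak frequencies picked out of a telescope spectrum, find which lab lines have an observed peak within a tolerance. The function names, the 0.5 MHz tolerance and the frequency values below are hypothetical, chosen only for illustration; they are not the team's data or software. A real search must also correct for the source's Doppler shift, which this sketch ignores.

```python
from bisect import bisect_left


def match_lines(lab_mhz, observed_mhz, tol_mhz=0.5):
    """Pair each lab line with an observed peak lying within tol_mhz of it.

    Hypothetical helper: both inputs are frequencies in MHz, and
    observed_mhz does not need to be sorted.
    """
    peaks = sorted(observed_mhz)
    matches = []
    for f in lab_mhz:
        # Index of the first observed peak at or above the window's lower edge.
        i = bisect_left(peaks, f - tol_mhz)
        # Accept it only if it also falls below the window's upper edge.
        if i < len(peaks) and peaks[i] <= f + tol_mhz:
            matches.append((f, peaks[i]))
    return matches


def match_fraction(lab_mhz, observed_mhz, tol_mhz=0.5):
    """Fraction of lab lines that have an observed counterpart."""
    if not lab_mhz:
        return 0.0
    return len(match_lines(lab_mhz, observed_mhz, tol_mhz)) / len(lab_mhz)


# Made-up toy values, not real spectral lines.
lab = [9000.0, 12000.0, 15000.0]
observed = [9000.2, 11000.0, 15000.4, 20000.0]
print(match_lines(lab, observed))     # [(9000.0, 9000.2), (15000.0, 15000.4)]
print(match_fraction(lab, observed))  # 0.666...
```

A molecule is a convincing candidate only when many of its predicted lines line up with observed peaks, which is why a high match fraction across a full lab-measured pattern matters more than any single coincidence.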


A team of undergraduate students participating in a special summer research program for minority students at the University of Virginia (U.Va.) conducted some of the experiments leading to the discovery of cyanomethanimine. The students worked under U.Va. professors Brooks Pate and Ed Murphy, and Remijan. The program, funded by the National Science Foundation, brought students from four universities for summer research experiences. They worked in Pate’s astrochemistry laboratory, as well as with the GBT data.

“This is a pretty special discovery and proves that early-career students can do remarkable research,” Pate said.

The researchers are reporting their findings in the Astrophysical Journal Letters.

The National Radio Astronomy Observatory is a facility of the National Science Foundation, operated under cooperative agreement by Associated Universities, Inc.

Flu Pandemic Threat Ended: Breakthrough New Drug Stops Virus Spread, Works On Drug Resistant Strains

February 25th, 2013. By Alton Parrish.

The breakthrough drug is the result of a global collaboration between scientists from CSIRO, the University of British Columbia and the University of Bath. CSIRO scientists helped to design the new drug to protect against epidemic and pandemic flu strains.

In laboratory testing, the new drug stopped the spread of virus strains in their tracks, including drug-resistant strains.

The drug works by binding to neuraminidase, an enzyme on the surface of the virus, like a key fitting into a lock, blocking the enzyme from its normal role.

Credit: CSIRO/Magipics

Credit: Tom Wennekes, UBC


“By taking advantage of the virus’s own ‘molecular machinery’ to attach itself, the new drug could remain effective longer, since resistant virus strains cannot arise without destroying their own mechanism for infection,” Withers adds.

NB: An animation and step-by-step cartoon of the new drug’s mechanism are available at

Background of New Flu Drug

Partners and funders

The research is funded by the Canadian Institutes of Health Research, the Canada Foundation for Innovation and the British Columbia Knowledge Development Fund.

The research team includes scientists from UBC, Simon Fraser University, the Centre for Disease Control in B.C., the University of Bath in the U.K. and CSIRO Materials Science and Engineering in Australia.

The new drug technology was developed in collaboration with The Centre for Drug Research and Development (CDRD) and has been advanced into CDRD Ventures Inc., CDRD’s commercialization vehicle, in order to secure private-sector partners and investors to develop it through clinical trials.

CDRD is Canada’s national drug development and commercialization centre, which provides expertise and infrastructure to enable researchers from leading health research institutions to advance promising early-stage drug candidates. CDRD’s mandate is to de-risk discoveries stemming from publicly funded health research and transform them into viable investment opportunities for the private sector, bridging the commercialization gap between academia and industry and translating research discoveries into new therapies for patients.

Flu viruses bind to sugars on the cell surface, and to spread they need to remove these sugars. The new drug works by preventing the virus from removing the sugars, which blocks it from infecting more cells. It is hoped the drug will also be effective against future strains of the virus.

Dr Jenny McKimm-Breschkin, a researcher on the team that developed Relenza, the first neuraminidase-inhibitor flu drug, explains to us how it all works.

Watch the CSIRO chat with Dr McKimm-Breschkin:

Six Years In Space For THEMIS: Understanding The Magnetosphere Better Than Ever

February 21st, 2013. By Alton Parrish.

“Scientists have been trying to understand what drives changes in the magnetosphere since the 1958 discovery by James Van Allen that Earth was surrounded by rings of radiation,” says David Sibeck, project scientist for THEMIS at NASA’s Goddard Space Flight Center in Greenbelt, Md. “Over the last six years, in conjunction with other key missions such as Cluster and the recently launched Van Allen Probes to study the radiation belts, THEMIS has dramatically improved our understanding of the magnetosphere.”

Since that 1958 discovery, observations of the radiation belts and near-Earth space have shown that, in response to different kinds of activity on the sun, energetic particles can appear almost instantaneously around Earth, while in other cases they can be wiped out completely. Electromagnetic waves course through the area too, kicking particles along, pushing them ever faster, or dumping them into Earth’s atmosphere. The bare bones of how particles and waves interact have been described, but with only one spacecraft traveling through a given area at a time, it’s impossible to discern what causes the observed changes during any given event.

“Trying to understand this very complex system over the last 40 years has been quite difficult,” says Vassilis Angelopoulos, the principal investigator for THEMIS at the University of California, Los Angeles (UCLA). “But very recently we have learned how even small variations in the solar wind, which buffets Earth’s space environment at a million miles an hour, can sometimes cause extreme responses, causing more particles to arrive or to be lost.”

An artist’s concept of the THEMIS spacecraft orbiting around Earth.

“The interesting thing about this paper is that it shows how the magnetosphere actually gets quite a bit of energy from the solar wind, even by seemingly innocuous rotations in the magnetic field,” says Angelopoulos. “People hadn’t realized that you could get waves from these types of events, but there was a one-to-one correspondence. One THEMIS spacecraft saw an instability at the bow shock and another THEMIS spacecraft then saw the waves closer to Earth.”

Since the various waves in the magnetosphere are what impart energy to the particles surrounding Earth, knowing what causes each kind of wave is another important piece of the space weather puzzle.