Posts by Alton Parrish:

Aztec Conquest Altered Genetics Among Early Mexico Inhabitants, New DNA Study Shows

February 3rd, 2013 By Alton Parrish.

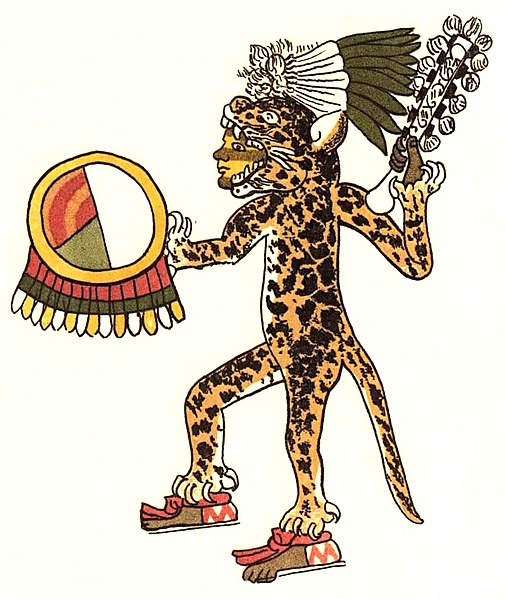

Learning more about changes in the size, composition, and structure of past populations helps anthropologists understand the impact of historical events, including imperial conquest, colonization, and migration, Mata-Míguez says. The case of Xaltocan is extremely valuable because it provides insight into the effects of Aztec imperialism on Mesoamerican populations.

Historical documents suggest that residents fled Xaltocan in 1395 AD, and that the Aztec ruler sent taxpayers to resettle the site in 1435 AD. Yet archaeological evidence indicates some degree of population stability across the imperial transition, deepening the mystery. Recently unearthed human remains from before and after the Aztec conquest at Xaltocan provide the rare opportunity to examine this genetic transition.

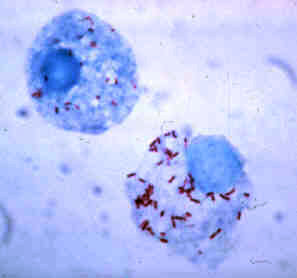

Photo provided by Lisa Overholtzer, Wichita State University.

In focusing on mitochondrial DNA, this study only traced the history of maternal genetic lines at Xaltocan. Future aDNA analyses will be needed to clarify the extent and underlying causes of the genetic shift, but this study suggests that Aztec imperialism may have significantly altered at least some Xaltocan households.

Credit: Wikipedia

Global Warming Less Extreme Than Feared?

January 25th, 2013 By Alton Parrish.

Climate sensitivity is a measure of how much the global mean temperature is expected to rise if we continue increasing our emissions of greenhouse gases into the atmosphere. CO2 is the primary greenhouse gas emitted by human activity. A simple way to measure climate sensitivity is to calculate how much the mean air temperature would rise if we were to double the atmospheric CO2 level compared to the world’s pre-industrialised level around the year 1750. If we continue to emit greenhouse gases at our current rate, we risk doubling that atmospheric CO2 level in roughly 2050.
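As a rough illustration of this definition, equilibrium warming is commonly approximated as scaling with the logarithm of the CO2 concentration ratio, so that each doubling adds the same number of degrees. The sketch below assumes that logarithmic relation and a pre-industrial level of about 280 ppm; neither is stated in the article, and the function name is hypothetical. It is not the Norwegian project's model.

```python
import math

# Assumed pre-industrial CO2 concentration in ppm (not given in the article).
PREINDUSTRIAL_CO2_PPM = 280.0

def equilibrium_warming(co2_ppm, sensitivity_c, baseline_ppm=PREINDUSTRIAL_CO2_PPM):
    """Approximate long-term warming in degrees C for a given CO2 level.

    Assumes warming scales with log2 of the concentration ratio, so one
    doubling of CO2 yields exactly `sensitivity_c` degrees.
    """
    return sensitivity_c * math.log2(co2_ppm / baseline_ppm)

# A doubled CO2 level with an example sensitivity of 1.9 degrees C per doubling.
doubled_ppm = 2 * PREINDUSTRIAL_CO2_PPM
print(equilibrium_warming(doubled_ppm, 1.9))
```

By construction, a doubled concentration returns the sensitivity itself, which is why the article can quote climate sensitivity as a single temperature figure.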

In the Norwegian project, however, researchers have arrived at an estimate of 1.9°C as the most likely level of warming.

For their analysis, Professor Berntsen and his colleagues entered all the factors contributing to human-induced climate forcings since 1750 into their model. In addition, they entered fluctuations in climate caused by natural factors such as volcanic eruptions and solar activity. They also entered measurements of temperatures taken in the air, on ground, and in the oceans.

The researchers used a single climate model that repeated calculations millions of times in order to form a basis for statistical analysis. Highly advanced calculations based on Bayesian statistics were carried out by statisticians at the Norwegian Computing Center.

Professor Berntsen says this temperature increase will be upon us only after we reach the doubled level of CO2 concentration (compared to 1750) and maintain that level for an extended time, because the oceans delay the effect by several decades. The researchers have arrived at an estimate of 1.9°C as the most likely level of warming.

Professor Berntsen explains the changed predictions: “The Earth’s mean temperature rose sharply during the 1990s. This may have caused us to overestimate climate sensitivity.

“We are most likely witnessing natural fluctuations in the climate system – changes that can occur over several decades – and which are coming on top of a long-term warming. The natural changes resulted in a rapid global temperature rise in the 1990s, whereas the natural variations between 2000 and 2010 may have resulted in the levelling off we are observing now.”

Climate issues must be dealt with

Terje Berntsen emphasises that his project’s findings must not be construed as an excuse for complacency in addressing human-induced global warming. The results do indicate, however, that it may be more within our reach to achieve global climate targets than previously thought.

Regardless, the fight cannot be won without implementing substantial climate measures within the next few years.

But the findings of the Norwegian project indicate that particulate emissions probably have less of an impact on climate through indirect cooling effects than previously thought.

So the good news is that even if we do manage to cut emissions of sulphate particulates in the coming years, global warming will probably be less extreme than feared.

About the project

Geophysicists at the research institute CICERO collaborated with statisticians at the Norwegian Computing Center on a novel approach to global climate calculations in the project “Constraining total feedback in the climate system by observations and models”. The project received funding from the Research Council of Norway’s NORKLIMA programme.

The researchers succeeded in reducing uncertainty around the climatic effects of feedback mechanisms, and their findings indicate a lowered estimate of probable global temperature increase as a result of human-induced emissions of greenhouse gases.

The project researchers were able to carry out their calculations thanks to the free use of the high-performance computing facility in Oslo under the Norwegian Metacenter for Computational Science (Notur). The research project is a prime example of how collaboration across subject fields can generate surprising new findings.

Contacts and sources:

Thomas Keilman

The Research Council of Norway

Japan Tsunami Debris Hits Hawaii, NOAA RFP To Remove Dock From Japan In Washington Olympic National Park

January 21st, 2013 By Alton Parrish.

Credit: DLNR

Llanes contacted the Department of Land and Natural Resources’ (DLNR) marine debris call-in line at (808) 587-0400 and kept in touch with Hawaii District Boating Manager, Nancy Murphy, to coordinate his arrival Tuesday afternoon at Honokohau.

DLNR immediately notified the National Oceanic and Atmospheric Administration’s Marine Debris Program and kept the program informed at all times. NOAA in turn notified its U.S. Coast Guard and National Park Service contacts. The state Department of Health has been contacted regarding testing for radiation levels.

While still at sea, Llanes spoke by phone with DLNR’s aquatic invasive species specialist, Jonathan Blodgett, who determined that Llanes had already scraped off blue mussels, an alien species in Hawaii, well out at sea, leaving only typical gooseneck barnacles that are common pelagic species and not harmful to native marine species.

Llanes told DLNR officials the skiff appeared identical to the four other small boats that have arrived in Hawaii waters since October 2012. He said he found it upside down and flipped it over.

“On behalf of NOAA and the State of Hawaii, we ask that anyone who finds personal items, which may have come from the tsunami, to please report them to county, state and/or federal officials,” said William J. Aila, Jr., DLNR chairperson. “Please show aloha and respect to the people of Japan, and the regions that suffered devastation from the 2011 tsunami. Remember, these items may be all someone has left.”

By being able to communicate with this boater in advance of his arrival, DLNR was able to quickly provide important guidance to prevent introduction of possible invasive marine species to island waters, and to ensure the skiff was met on arrival and properly handled and stored pending ownership verification.

DLNR recommends that boaters, fishers and coastal users view online guidelines for reporting and handling marine debris, including possible Japan tsunami marine debris (JTMD). They can be found on DLNR’s updated website at www.hawaii.gov/dlnr.

Chronology

As of Jan. 10, 2013 — NOAA has received more than 1,400 reports of potential Japan tsunami marine debris to disasterdebris@noaa.gov from the U.S., Canada, and Mexico. With the assistance of the Consulates and Government of Japan, 18 items have been confirmed as lost during the March 2011 tsunami.

Prior to this recent boat, five confirmed items have arrived in Hawaii since Sept. 18, 2012: 1) a large blue plastic bin; 2) a fishing boat recovered 700 miles north of Maui by a Hawaii longline fisherman; 3) a skiff found at Midway Atoll; 4) a skiff found at Kahana Bay, Oahu; and 5) a skiff found at Punaluu, Oahu (four boats, one plastic bin).

The dock weighs about 185 tons and is 65 feet long, 20 feet wide and 7.5 feet tall. Although the dock has stayed in the same general location since its arrival on the beach, it is still quite mobile in the surf. As changing tides and waves continue to shift and move the dock, the dock will continue to batter the coastline, creating a hazard for visitors and wildlife and damaging both the coastal environment and the dock. The intertidal area of the Olympic Coast is home to the most diverse ecosystem of marine invertebrates and seaweeds on the west coast of North America.

Most of the dock’s volume is Styrofoam-type material, which is encased in steel reinforced concrete. The concrete has already been damaged, exposing rebar and releasing foam into the ocean and onto the beach where it can potentially be ingested by fish, birds and marine mammals. Leaving the dock in place could result in the release of over 200 cubic yards of foam into federally protected waters and wilderness coast.

Japan Confirms dock’s origin

The Japanese government has confirmed that the dock was washed into the Pacific Ocean during the March 11, 2011, tsunami. Based on the fender production serial number in a picture, the Japanese government positively identified the dock as coming from Aomori Prefecture.

Credit: NOAA

Determining the origin of marine debris is challenging. When possible, NOAA works closely with the government of Japan to determine whether an item originated in the tsunami impact zone. To date, 19 items have been definitively traced back to the tsunami, typically by registration number or some other unique marking. This is the fourth confirmed item found in Washington.

In this case, NOAA worked with, and greatly appreciates the assistance from, many Japanese agencies to identify where the dock came from, including:

NOAA has announced a Request for Proposal (RFP) to solicit proposals from professional marine salvage contractors. Information can be found at: https://www.fbo.gov/spg/DOC/NOAA/WASC/AB133C-13-RP-0144/listing.html.

The deadline for submitting proposals is Jan 22, 2013 2:00 pm Pacific.

For the latest updates on the 65-foot dock that washed ashore in Olympic National Park, visit the Washington Department of Ecology’s incident webpage.

For the latest information on tsunami debris, please visit the NOAA Marine Debris Program website at http://marinedebris.noaa.gov/tsunamidebris/.

To report findings of possible tsunami marine debris, please call DLNR at (808) 587-0400 or send an email to dlnr.marine.debris@hawaii.gov and disasterdebris@noaa.gov.

Infant Formula Can Be Fatal To Premature Babies Say UC San Diego Scientists

January 13th, 2013 By Alton Parrish.

Image Credit: Alexander Penn, Department of Bioengineering, UC San Diego Jacobs School of Engineering. Blue tint added for visual clarity.

Penn and others had previously determined that the partially digested food in a mature, adult intestine is capable of killing cells, due to the presence of free fatty acids which have a “detergent” capacity that damages cell membranes. The intestines of healthy adults and older children have a mature mucosal barrier that may prevent damage due to free fatty acids. However, the intestine is leakier at birth, particularly for preterm infants, which could be why they are more susceptible to necrotizing enterocolitis.

Therefore, the researchers wanted to know what happens to breast milk as compared to infant formula when they are exposed to digestive enzymes. They “digested,” in vitro, infant formulas marketed for full term and preterm infants as well as fresh human breast milk using pancreatic enzymes or fluid from an intestine. They then tested the formula and milk for levels of free fatty acids. They also tested whether these fatty acids killed off three types of cells involved in necrotizing enterocolitis: epithelial cells that line the intestine, endothelial cells that line blood vessels, and neutrophils, a type of white blood cell that is a kind of “first responder” to inflammation caused by trauma in the body.

Overwhelmingly, the digestion of formula led to cellular death, or cytotoxicity – in less than 5 minutes in some cases – while breast milk did not. For example, digestion of formula caused death in 47 percent to 99 percent of neutrophils while only 6 percent of them died as a result of milk digestion. The study found that breast milk appears to have a built-in mechanism to prevent cytotoxicity. The research team believes most food, like formula, releases high levels of free fatty acids during digestion, but that breast milk is digested in a slower, more controlled, process.

Credit: Alexander Penn, Department of Bioengineering, UC San Diego Jacobs School of Engineering.

Currently, many neonatal intensive care units are moving towards formula-free environments, but breastfeeding a premature infant can be challenging or physically impossible and supplies of donor breast milk are limited. To meet the demand if insufficient breast milk is available, less cytotoxic milk replacements will need to be designed in the future that pose less risk for cell damage and for necrotizing enterocolitis, the researchers concluded.

This may be of benefit not only to premature infants, but also to full-term infants at higher risk for disorders that are associated with gastrointestinal problems and more leaky intestines, such as autism spectrum disorder. Dr. Sharon Taylor, a professor of pediatric medicine at UC San Diego School of Medicine and a pediatric gastroenterologist at Rady Children’s Hospital-San Diego, said the study offers more support to an already ongoing push by hospitals, including neonatal intensive care units, to encourage breastfeeding even in more challenging circumstances in the NICU. For patients who are too premature or frail to nurse, Dr. Taylor said hospital staff should provide consultation and resources to help mothers pump breast milk that can be fed to the baby through a tube.

The research was carried out in collaboration with Dr. Taylor, Karen Dobkins of the Department of Psychology, and Angelina Altshuler and James Small of the Department of Bioengineering at UC San Diego and was funded by the National Institutes of Health (NS071580 and GM85072). The researchers conclude that breast milk has a significant ability to reduce cytotoxicity that formula does not have. One next step is to determine whether these results are replicated in animal studies and whether intervention can prevent free fatty acids from causing intestinal damage or death from necrotizing enterocolitis.

Feed Your Family Fresh Food And Fish Year Round No Matter Where You Live

January 6th, 2013 By Alton Parrish.

GLOBE (hedron) has already entered the global design Buckminster Fuller Challenge 2012. Awards will be given to designs that have significant potential to solve humanity’s most pressing problems.

Having enough food to go around has always been a vexing issue for humanity, and while modern agriculture has greatly stabilized our food supply, it comes with a host of negative environmental consequences. Meanwhile, it is estimated that the world population will be over 9 billion people by 2050. More than half of those people will live in urban areas. So, how can we produce food in an economical and ecologically sustainable fashion as we shift away from agrarian societies?

This rooftop structure will house fish and plants, growing in harmony to nourish each other. It utilizes aquaponics, a marriage of aquaculture (fish cultivation) and hydroponics (soil-free, water-based agriculture). What makes aquaponics innovative is its closed-loop recycling of wastewater from fish tanks to fertilize plants. After the plants absorb the nutrients, clean aerated water returns to the fish tanks and the cycle repeats.

Recirculating aquaponics systems are relatively uncommon in the U.S., but in Australia, frequent drought and DIY enthusiasm have propelled aquaponics into a bona fide movement. It’s easy to see why, when you compare the costs and benefits of an aquaponics system to conventional methods. A single five-foot tower can produce over 200 heads of lettuce a year, while using 80–90% less water than a conventional soil-based system. Aquaponics systems are compact, cheap to construct, and require no fertilizers or pesticides.

The GLOBE (hedron) project is still in its prototyping phase, but could transform aquaponics from a niche gardening exercise to a mainstream urban farming system. The creators promise a bountiful harvest of 400 kg of vegetables and 100 kg of fish each year, providing food for 16 people. Insulating panels would ensure year-round production, even in cooler weather. The dome is designed for easy shipping and assembly, and will be constructed with renewable materials where possible, like bamboo.
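To put the promised harvest in per-person terms, the arithmetic can be sketched as follows. The totals and the figure of 16 people come from the paragraph above; the helper name and the 365-day year used for the daily figure are illustrative assumptions.

```python
# Figures quoted by the project's creators.
VEGETABLES_KG_PER_YEAR = 400
FISH_KG_PER_YEAR = 100
PEOPLE_FED = 16

def per_person(total_kg, people=PEOPLE_FED):
    """Annual share of a harvest for one person, in kilograms."""
    return total_kg / people

veg_share = per_person(VEGETABLES_KG_PER_YEAR)   # 25.0 kg per person per year
fish_share = per_person(FISH_KG_PER_YEAR)        # 6.25 kg per person per year

# Convert the annual shares to grams per day, assuming a 365-day year.
veg_g_per_day = veg_share * 1000 / 365
fish_g_per_day = fish_share * 1000 / 365

print(f"Vegetables: {veg_share} kg/year, about {veg_g_per_day:.0f} g/day")
print(f"Fish: {fish_share} kg/year, about {fish_g_per_day:.0f} g/day")
```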

Do you have a flat, unused roof that you’d be willing to install an aquaponics dome on? Would you be willing to get your hands wet in return for hyper-fresh veggies and seafood? The GLOBE (hedron) dome is a wonderfully creative alternative to our current food system, and we’re excited to see where it progresses.

Amazonian Tribal Warfare Sheds Light On Modern Violence, Says MU Anthropologist

October 4th, 2012 By Alton Parrish.

In the tribal societies of the Amazon forest, violent conflict accounted for 30 percent of all deaths before contact with Europeans, according to a recent study by University of Missouri anthropologist Robert Walker. Understanding the reasons behind those altercations in the Amazon sheds light on the instinctual motivations that continue to drive human groups to violence, as well as the ways culture influences the intensity and frequency of violence.

“The same reasons – revenge, honor, territory and jealousy over women – that fueled deadly conflicts in the Amazon continue to drive violence in today’s world,” said Walker, lead author and assistant professor of anthropology in MU’s College of Arts and Science. “Humans’ evolutionary history of violent conflict among rival groups goes back to our primate ancestors. It takes a great deal of social training and institutional control to resist our instincts and solve disputes with words instead of weapons. Fortunately, people have developed ways to channel those instincts away from actual deadly conflict. For example, sports and video games often involve the same impulses to defeat a rival group.”

Walker examined records of 1,145 violent deaths in 44 societies in the Amazon River basin of South America by reviewing 11 previous anthropological studies. He analyzed the deaths on a case-by-case basis to determine what cultural factors influenced the body counts. Internal raids among tribes with similar languages and cultures were found to be more frequent, but with fewer fatalities, when compared to the less frequent, but deadlier, external raids on tribes of different language groups.

The study, “Body counts in lowland South American violence,” was published in the journal Evolution & Human Behavior. Drew Bailey, a recent doctoral graduate in psychological science from MU, was co-author.

Contacts and sources:

Tim Wall

University of Missouri-Columbia

Second Mona Lisa Revealed, Called Isleworth Mona Lisa, Painted First

October 4th, 2012

A consortium unveiled last week what it claims to be Leonardo’s original painting of the Mona Lisa, sparking controversy in the art world.

The Mona Lisa Foundation will present evidence for the ‘Isleworth Mona Lisa’ having been painted by Leonardo da Vinci, saying they are backed up by art historians Alessandro Vezzosi and Carlo Pedretti.

They claim it was painted a decade before the famous portrait of Lisa Gherardini del Giocondo, who is thought to have sat between 1503 and 1506 for the painting that now hangs in the Louvre. The claim is based on regression tests, mathematical comparisons, and historical and archival records.

But although this announcement is generating a lot of excitement, an Oxford University art historian is sceptical about the claims.

‘The reliable primary evidence provides no basis for thinking that there was “an earlier” portrait of Lisa del Giocondo,’ says Martin Kemp, emeritus professor of the History of Art.

‘The Isleworth Mona Lisa mistranslates subtle details of the original, including the sitter’s veil, her hair, the translucent layer of her dress, the structure of the hands. The landscape is devoid of atmospheric subtlety.

‘The head, like all other copies, does not capture the profound elusiveness of the original.’

Much of the Foundation’s claim rests on scientific analysis which produced deep images of the painting by infrared reflectography and X-ray, but Professor Kemp says this evidence does not add up.

‘The scientific analysis can at most state that there is nothing to say that this cannot be by Leonardo,’ he says. ‘The infrared reflectography and X-ray points very strongly to its not being by Leonardo.’

In fact, the X-ray and infrared images suggest the Isleworth Mona Lisa was painted after Leonardo’s famous portrait, contrary to the claims of the Foundation.

Professor Kemp explains: ‘The images produced by infrared reflectography and X-ray are not at all characteristic of what lies below Leonardo’s autograph paintings. We know that changes were made in the Louvre painting.

‘The Isleworth picture follows the final state of the Louvre painting. It does not therefore precede the Louvre painting.’

Arts blog readers may not have access to infrared or X-ray technology, but they can judge the paintings for themselves (above).

Credit: University of Oxford

Matt Pickles

University of Oxford

The Universe: Most Accurate And Precise Measurement Of The Expansion Of Space

October 3rd, 2012

A team of astronomers, led by Wendy Freedman, director of the Carnegie Observatories, have used NASA’s Spitzer Space Telescope to make the most accurate and precise measurement yet of the Hubble constant, a fundamental quantity that measures the current rate at which our universe is expanding. These results will be published in the Astrophysical Journal and are available online.

Spitzer was able to improve upon past measurements of Hubble’s constant due to its infrared vision, which sees through dust to provide better views of variable stars called Cepheids. These pulsating stars are vital “rungs” in what astronomers call the cosmic distance ladder: a set of objects with known distances that, when combined with the speeds at which the objects are moving away from us, reveal the expansion rate of the universe.

Cepheids are crucial to these calculations because their distances from Earth can be readily measured. In 1908, Henrietta Leavitt discovered that these stars pulse at a rate that is directly related to their intrinsic brightness. To visualize why this is important, imagine somebody walking away from you while carrying a candle. The candle would dim the farther it traveled, and its apparent brightness would reveal just how far.

The same principle applies to Cepheids, standard candles in our cosmos. By measuring how bright they appear on the sky, and comparing this to their known brightness as if they were close up, astronomers can calculate their distance from Earth.
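The candle analogy corresponds to the inverse-square law: observed brightness falls off with the square of the distance. A minimal sketch of the resulting distance calculation is shown below, assuming the intrinsic luminosity is already known (in practice it is inferred from the pulsation rate) and ignoring any dimming by dust. The function name and the numerical values are hypothetical and chosen only for illustration.

```python
import math

def distance_from_brightness(luminosity_w, apparent_flux_w_m2):
    """Distance in metres to a source of known luminosity.

    Uses the inverse-square law, flux = luminosity / (4 * pi * distance**2),
    solved for distance.
    """
    return math.sqrt(luminosity_w / (4.0 * math.pi * apparent_flux_w_m2))

# Two hypothetical stars with the same intrinsic luminosity (assumed value).
luminosity = 1.0e30  # watts
near = distance_from_brightness(luminosity, 4.0e-10)
far = distance_from_brightness(luminosity, 1.0e-10)

# The star that appears four times fainter is about twice as far away.
print(far / near)
```

Because the distance depends on the square root of the brightness ratio, a small error in measured brightness produces an even smaller relative error in distance, which is one reason cleaner brightness measurements tighten the distance estimate.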

Spitzer observed ten Cepheids in our own Milky Way galaxy and 80 in a nearby neighboring galaxy called the Large Magellanic Cloud. Without the cosmic dust blocking their view at the infrared wavelengths, the research team was able to obtain more precise measurements of the stars’ apparent brightness, and thus their distances, than previous studies had done. With these data, the researchers could then tighten up the rungs on the cosmic distance ladder, opening the way for a new and improved estimate of our universe’s expansion rate.

“Just over a decade ago, using the words ‘precision’ and ‘cosmology’ in the same sentence was not possible, and the size and age of the universe was not known to better than a factor of two,” Freedman said. “Now we are talking about accuracies of a few percent. It is quite extraordinary.”

The study appears in the Astrophysical Journal. Freedman’s co-authors are Barry Madore, Victoria Scowcroft, Chris Burns, Andy Monson, S. Eric Persson and Mark Seibert of the Observatories of the Carnegie Institution and Jane Rigby of NASA’s Goddard Space Flight Center in Greenbelt, Md.

NASA’s Jet Propulsion Laboratory, Pasadena, Calif., manages the Spitzer Space Telescope mission for NASA’s Science Mission Directorate, Washington. Science operations are conducted at the Spitzer Science Center at the California Institute of Technology in Pasadena. Data are archived at the Infrared Science Archive housed at the Infrared Processing and Analysis Center at Caltech. Caltech manages JPL for NASA.

Wendy Freedman

Carnegie Institution

NASA

Galaxy Altering Quasars Ignite As Galaxies Collide

October 3rd, 2012

NASA’s Spitzer and Hubble Space Telescopes have caught sight of luminous quasars igniting after galaxies collide. Quasars are bright, energetic regions around giant, active black holes in galactic centers.

“For the first time in a large sample, we are catching galactic systems when feedback between the galaxy and the quasar is still in action,” said Tanya Urrutia, a postdoctoral researcher at the Leibniz Institute for Astrophysics in Potsdam, Germany and lead author of a study appearing in the Astrophysical Journal. “Quasars profoundly influence galaxy evolution and they shape the properties of the massive galaxies we see today.”

Although immensely powerful and visible across billions of light years, quasars are actually quite tiny, at least compared to an entire galaxy. Quasars span a few light years, and their inner regions, which cast out high-velocity winds, are roughly the size of our solar system. It takes a beam of light about ten hours to cross that distance. A large galaxy, however, stretches across tens of thousands of light years, or an area many millions of times larger.

“An amazing aspect of this work is that something that is happening on a very small scale can affect the host galaxy so much,” said Urrutia. “To put it in context, it is a bit like if somebody playing around with a stick at the beach could affect the behavior of all the oceans in the world.”

The transition of young, star-making galaxies to the old, quiet, elliptical galaxies we see in the modern Universe is strongly linked to the activity of central supermassive black holes, astronomers have learned. When galaxies merge together into a bigger galaxy, central black holes spark up as quasars, send out powerful winds and beam energy across the cosmos. The new study probes how the quasars work in altering the host galaxies’ star-making abilities.

Urrutia and her team looked at 13 particularly jazzed-up quasars at a distance of about six billion light-years or so, back when the universe was a little more than half its current age. The quasars’ light was reddened by the presence of lots of dust. Cosmic dust absorbs visible light and then re-emits it in longer, redder wavelengths, including the infrared light that Spitzer sees.

The dustier, redder quasars turned up in galaxies with more disturbed shapes, as revealed in observations by Hubble. This evidence pointed to those luminous quasars having been ignited by a recent major merger between two sizeable galaxies.

The astronomers also gauged how voraciously the supermassive black holes at the hearts of the quasars were feeding. In further Spitzer observations, the researchers saw that the reddest quasars most actively slurping up matter occurred in the most disturbed galaxies. In essence, Spitzer and Hubble witnessed the galaxies and quasars in a stage of co-evolution, with the state of one connected to the state of the other.

Other findings of the new study bolster theories about where this shared evolution will lead. The galactic mergers, which ignited central quasars and shrouded them in dust, also kicked off waves of star formation. Stars form from pockets of cold gas and dust, and galaxy collisions are known to trigger bursts of star birth.

Notably, the fast-feeding black holes that sport prominent quasars in the study appear to be growing still in size. Astronomers have previously established a relationship between a central black hole’s mass and the brightness of a host galaxy. However, in the young quasars studied, the black holes did not turn out to be as massive as would be expected. The black holes still have some catching up to do, it seems, with the rest of the processes spurred by the merger.

As the black holes grow, high-velocity winds from these monsters will scatter the cold gas needed to create new stars. In the process, the galaxies will start to transition from star-generating youth to an old age populated by dying stars. Urrutia and her team noted winds already rushing from some of the observed galaxies’ central supermassive black holes.

In the overall chronology of galactic evolution, then, it looks like waves of new star birth happen before the central holes grow and their quasars flare. “According to our results, the onset of star formation preceded the ignition of the quasar,” said Urrutia. “The evolution of quasars is intimately linked with the evolution of galaxies and the formation of their stars.”

Holy Snake Lord: Tomb Of Maya Queen K’abel Discovered In Guatemala

October 3rd, 2012 By Alton Parrish

WUSTL archaeologist part of a team to discover tomb containing rare combination of Maya archaeological, historical records


Credit: WUSTL

Based on this and other evidence, including ceramic vessels found in the tomb and stela (large stone slab) carvings on the outside, the tomb is likely that of K’abel, says Freidel, PhD, professor of anthropology in Arts & Sciences and Maya scholar.

Freidel says the discovery is significant not only because the tomb is that of a notable historical figure in Maya history, but also because the newly uncovered tomb is a rare situation in which Maya archaeological and historical records meet.

Credit: El Peru Waka Regional Archaeological Project

The burial chamber. The queen’s skull is above the plate fragments.

The discovery of the tomb of the great queen was “serendipitous, to put it mildly,” Freidel says.

The team at El Perú-Waka’ has focused on uncovering and studying “ritually-charged” features such as shrines, altars and dedicatory offerings rather than on locating burial locations of particular individuals.

“In retrospect, it makes a lot of sense that the people of Waka’ buried her in this particularly prominent place in their city,” Freidel says.

Olivia Navarro-Farr, PhD, assistant professor of anthropology at the College of Wooster in Ohio, originally began excavating the locale while still a doctoral student of Freidel’s. Continuing to investigate this area this season was of major interest to both her and Freidel because it had been the location of a temple that received much reverence and ritual attention for generations after the fall of the dynasty at El Perú.

With the discovery, archaeologists now understand the likely reason why the temple was so revered: K’abel was buried there, Freidel says.

Credit: WUSTL

K’abel, considered the greatest ruler of the Late Classic period, ruled with her husband, K’inich Bahlam, for at least 20 years (672-692 AD), Freidel says. She was the military governor of the Wak kingdom for her family, the imperial house of the Snake King, and she carried the title “Kaloomte’,” translated to “Supreme Warrior,” higher in authority than her husband, the king.

Prehistoric Builders Reveal Trade Secrets

October 3rd, 2012

A fossil that has lain in a museum drawer for over a century has been recognized by a University of Leicester geologist as a unique clue to the long-lost skills of some of the most sophisticated animal architects that have ever lived on this planet.

The fossil is a graptolite, a planktonic colony from nearly half a billion years ago, found by nineteenth century geologists in the Southern Uplands of Scotland. Graptolites are common in rocks of this age, but only as the beautifully intricate multistory floating ‘homes’ that these animals constructed – the animals that made them were delicate creatures with long tentacle-bearing arms, but these have long rotted away.

These connections indicate that the animals of the colony could not have been all basically the same, as had been assumed. Rather, they must have been very different in shape and organization in different parts of the colony.

Dr Zalasiewicz said, “The light caught one of the fossils in just the right way, and it showed complex structures I had never seen in a graptolite before. It was a sheer stroke of luck…one of those Eureka moments.

“In some parts of the colony, these fossilized connections look like slender criss-crossing branches; others look like little hourglasses.

“Hence, a key element in the ancient success of these animals must have been an elaborate division of labour, in which different members of the colony took on different tasks, for feeding, building and so on. This amazing fossil shows sophisticated prehistoric co-operation, preserved in stone.”

It has been a mystery how such tiny ‘lowly’ prehistoric creatures could have co-operated to build such impressively sophisticated living quarters – it is a skill that has long been lost among the animals of the world’s oceans. Now, this single fossil, which has been carefully preserved in the collections of the British Geological Survey since 1882, sheds light on these ancient master builders.

Remarkably, over the past century, the fossil slab had been examined by some of the world’s best experts on these fossils because it includes key specimens of a rare and unusual species.

Dr. Mike Howe, manager of the British Geological Survey’s fossil collections and a co-author of the study, commented, “This shows that museum collections are a treasure trove, where fossils collected long ago can drive new science.”

Superman-Strength Bacteria Produce Gold

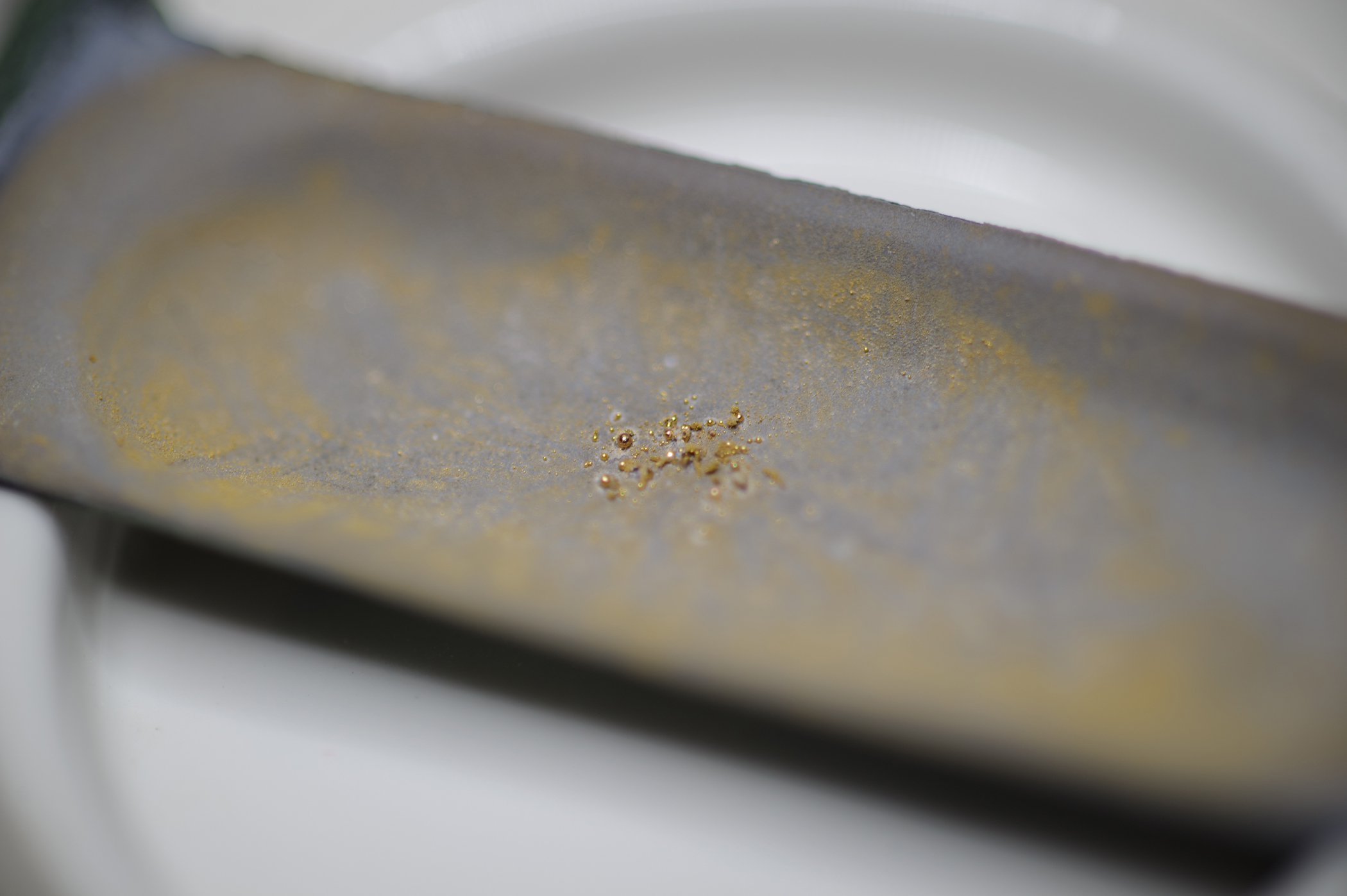

October 3rd, 2012

At a time when the value of gold has reached an all-time high, Michigan State University researchers have discovered a bacterium’s ability to withstand incredible amounts of toxicity is key to creating 24-karat gold.

Kashefi and Adam Brown, associate professor of electronic art and intermedia, found the metal-tolerant bacteria Cupriavidus metallidurans can grow on massive concentrations of gold chloride – or liquid gold, a toxic chemical compound found in nature.

In fact, the bacteria are at least 25 times stronger than previously reported among scientists, the researchers determined in their art installation, “The Great Work of the Metal Lover,” which uses a combination of biotechnology, art and alchemy to turn liquid gold into 24-karat gold. The artwork contains a portable laboratory made of 24-karat gold-plated hardware, a glass bioreactor and the bacteria, a combination that produces gold in front of an audience.

Brown and Kashefi fed the bacteria unprecedented amounts of gold chloride, mimicking the process they believe happens in nature. In about a week, the bacteria transformed the toxins and produced a gold nugget.

“The Great Work of the Metal Lover” uses a living system as a vehicle for artistic exploration, Brown said.

In addition, the artwork consists of a series of images made with a scanning electron microscope. Using ancient gold illumination techniques, Brown applied 24-karat gold leaf to regions of the prints where a bacterial gold deposit had been identified so that each print contains some of the gold produced in the bioreactor.

“This is neo-alchemy. Every part, every detail of the project is a cross between modern microbiology and alchemy,” Brown said. “Science tries to explain the phenomenological world. As an artist, I’m trying to create a phenomenon. Art has the ability to push scientific inquiry.”

It would be cost prohibitive to reproduce their experiment on a larger scale, he said. But the researchers’ success in creating gold raises questions about greed, economy and environmental impact, focusing on the ethics related to science and the engineering of nature.

“The Great Work of the Metal Lover” was selected for exhibition and received an honorable mention at the world-renowned cyber art competition, Prix Ars Electronica, in Austria, where it’s on display until Oct. 7. Prix Ars Electronica is one of the most important awards for creativity and pioneering spirit in the field of digital and hybrid media, Brown said.

“Art has the ability to probe and question the impact of science in the world, and ‘The Great Work of the Metal Lover’ speaks directly to the scientific preoccupation while trying to shape and bend biology to our will within the postbiological age,” Brown said.

Egyptian Artificial Toes Are Likely The World’s Oldest Prosthetic Body Parts

October 3rd, 2012

The results of scientific tests using replicas of two ancient Egyptian artificial toes, including one that was found on the foot of a mummy, suggest that they’re likely to be the world’s first prosthetic body parts.

University of Manchester researcher, Dr Jacky Finch, has shown that a three-part wood and leather artefact housed in the Egyptian Museum in Cairo, along with a second one, the Greville Chester artificial toe on display in the British Museum, not only looked the part but also helped their toeless owners walk like Egyptians.

Credit: University of Manchester

The toes date from before 600 BC, predating what was hitherto thought to be the earliest known practical prosthesis – the Roman Capua Leg – by several hundred years.

Dr Finch wanted to find out whether two artefacts could have served as practical tools to help their owners walk: a three-part wood and leather toe, dating from between 950 and 710 BC, found on a female mummy buried near Luxor in Egypt; and the Greville Chester artificial toe, dating from before 600 BC and made of cartonnage (a sort of papier-mâché mixture made using linen, glue and plaster). Both display significant signs of wear, and their design features also suggest they may have been more than cosmetic additions.

Dr Finch says: “Several experts have examined these objects and had suggested that they were the earliest prosthetic devices in existence. There are many instances of the ancient Egyptians creating false body parts for burial but the wear plus their design both suggest they were used by people to help them to walk. To try to prove this has been a complex and challenging process involving experts in not only Egyptian burial practices but also in prosthetic design and in computerized gait assessment.”

When the volunteers wore the replicas, the pressure measurements showed no overly high pressure points for either of them. This indicated that the false toes were not causing any undue discomfort or possible tissue damage. However, when the volunteers wore just the replica sandals without the false toes, the pressure being applied under the foot rose sharply.

Dr Finch says: “The pressure data tells us that it would have been very difficult for an ancient Egyptian missing a big toe to walk normally wearing traditional sandals. They could of course have remained barefoot or perhaps have worn some sort of sock or boot over the false toe, but our research suggests that wearing these false toes made walking in a sandal more comfortable.”

Alongside the test data, Dr Finch asked her volunteers to fill in a questionnaire about how they felt during the trials in the gait laboratory. Although the cartonnage replica performed well and was felt to be an excellent cosmetic replacement, its comfort scores were disappointing. Both volunteers found the three-part wood and leather toe extremely comfortable and scored it highly, with one commenting that with time he could get used to walking in it.

Assessing the volunteers’ experience Dr Finch said: “It was very encouraging that both volunteers were able to walk wearing the replicas. Now that we have the gait analysis data and volunteer feedback alongside the obvious signs of wear we can provide a more convincing argument that the original artefacts had some intended prosthetic function.”

Writing in February 2011 in the Lancet, Dr Finch said: “To be classed as true prosthetic devices any replacement must satisfy several criteria. The material must withstand bodily forces so that it does not snap or crack with use. Proportion is important and the appearance must be sufficiently lifelike as to be acceptable to both the wearer and those around them. The stump must also be kept clean, so it must be easy to take on and off. But most importantly it must assist walking.”

She continued: “The big toe is thought to carry some 40% of the bodyweight and is responsible for forward propulsion, although those without it can adapt well. To accurately determine any level of function requires the application of gait analysis techniques involving integrated cameras and pressure devices placed along a walkway.”

The findings from this study, which have been published in full in the Journal of Prosthetics and Orthotics, mean the earliest known prosthetic is now more likely to come from ancient Egypt. The three-part example pre-dates by some 400 years what is currently thought to be the oldest, although untested, prosthetic device: a bronze and wooden leg found in a Roman burial in Capua, southern Italy. That has been dated to 300 BC, although only a replica now remains, as the original was destroyed in a bombing raid over London during the Second World War.

Copy of Roman Capua Leg

Cosmic Hurricane: Most Powerful Winds In The Universe Found: Giant Mystery Solved

October 2nd, 2012

If this were a movie the title might be: Gone, With The Quasar Wind. The most powerful winds in the universe have been found and a gigantic “what done it” mystery has been solved.

Credit: NASA/CXC/M. Weiss, Nahks Tr’Ehnl, Nurten Filiz Ak

The inset at the top right shows two SDSS spectra for the same quasar (named SDSS J093620.52+004649.2). The upper spectrum (blue) was taken in 2002, while the lower spectrum (red) was taken in 2011. The deep, wide valley in the 2002 spectrum is a so-called “broad absorption line,” a feature that had disappeared from the spectrum by 2011.

Quasars are powered by gas falling into supermassive black holes at the centers of galaxies. As the gas falls into the black hole, it heats up and gives off light. The gravitational force from the black hole is so strong, and is pulling so much gas, that the hot gas glows brighter than the entire surrounding galaxy.

But with so much going on in such a small space, not all the gas is able to find its way into the black hole. Much of it instead escapes, carried along by strong winds blowing out from the center of the quasar.

“These winds blow at thousands of miles per second, far faster than any winds we see on Earth,” says Niel Brandt, a professor at Penn State and Filiz Ak’s Ph.D. advisor. “The winds are important because we know that they play an important role in regulating the quasar’s central black hole, as well as star formation in the surrounding galaxy.”

An SDSS image of the quasar SDSS J093620.52+004649.2, one of the 19 quasars with disappearing BAL troughs. The constellation map on the bottom left shows the quasar’s position in the constellation Hydra. Three successive views zoom in closer and closer to the quasar.

Credit: Jordan Raddick (Johns Hopkins University) and the SDSS-III collaboration. Hydra constellation chart from The Constellations, produced by the International Astronomical Union and Sky and Telescope magazine (Roger Sinnott, Rick Fienberg, and Alan MacRobert).

See it for yourself!

The quasar above is part of the SDSS-III’s Data Release 9, which means that all its data are available free of charge online. Use the links below to see the quasar change right before your eyes!

The three links below will take you to an interactive spectrum viewer for three spectra of this quasar, measured by the Sloan Digital Sky Survey on three different nights. The spectra are labeled at the bottom in Ångströms (one Ångström equals one ten-billionth of a meter).

Zoom in on the area of each spectrum around 4000 Ångströms. In that area, you should see a broad valley in the 2001 and 2002 spectra — a valley that is gone from the 2011 spectrum!

Spectrum measured on April 28, 2001

Spectrum measured on February 9, 2002

Spectrum measured on January 2, 2011

Many quasars show evidence of these winds in their spectra — measurements of the amount of light that the quasar gives off at different wavelengths. Just outside the center of the quasar are clouds of hot gas flowing away from the central black hole. As light from deeper in the quasar passes through these clouds on its way to Earth, some of the light gets absorbed at particular wavelengths corresponding to the elements in the clouds.

As gas clouds are accelerated to high speeds by the quasar, the Doppler effect spreads the absorption over a broad range of wavelengths, leading to a wide valley visible in the spectrum. The width of this “broad absorption line (BAL)” measures the speed of the quasar’s wind. Quasars whose spectra show such broad absorption lines are known as “BAL quasars.”
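To see how the width of an absorption trough translates into a wind speed, a rough calculation helps. The sketch below uses the non-relativistic Doppler approximation, v ≈ c × Δλ/λ, which is a simplification and not necessarily the method the SDSS team used. The function name and the example numbers (absorption spread over 40 Å near 4000 Å) are hypothetical, chosen only for illustration.

```python
# Illustrative only: non-relativistic Doppler estimate, v ≈ c * (Δλ / λ).
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition of the metre
KM_PER_MILE = 1.609344             # exact, international mile


def doppler_speed_km_s(shift_angstrom: float, wavelength_angstrom: float) -> float:
    """Approximate line-of-sight speed implied by a wavelength shift."""
    if wavelength_angstrom <= 0:
        raise ValueError("wavelength must be positive")
    return SPEED_OF_LIGHT_KM_S * shift_angstrom / wavelength_angstrom


if __name__ == "__main__":
    # Hypothetical example: absorption spread over 40 Å near 4000 Å.
    speed_km_s = doppler_speed_km_s(40.0, 4000.0)
    speed_mi_s = speed_km_s / KM_PER_MILE
    print(f"{speed_km_s:.0f} km/s, about {speed_mi_s:.0f} miles per second")
```

A spread of one percent in wavelength corresponds to roughly one percent of the speed of light, which falls in the range of thousands of miles per second that Brandt describes.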

But the hearts of quasars are chaotic, messy places. Quasar winds blow at thousands of miles per second, and the disk around the central black hole is rotating at speeds that approach the speed of light. All this adds up to an environment that can change quickly.

Previous studies had found a few examples of quasars whose broad absorption lines seemed to have disappeared between one observation and the next. But these quasars had been found one at a time, and largely by chance — no one had ever done a systematic search for them. Undertaking such a search would require measuring spectra for hundreds of quasars, spanning several years.

Enter the Sloan Digital Sky Survey (SDSS). Since 1998, SDSS has been regularly measuring spectra of quasars. Over the past three years, as part of SDSS-III’s Baryon Oscillation Spectroscopic Survey (BOSS), the survey has been specifically seeking out repeated spectra of BAL quasars through a program proposed by Brandt and colleagues.

Their persistence paid off — the research team gathered a sample of 582 BAL quasars, each of which had repeat observations over a period of between one and nine years – a sample about 20 times larger than any that had been previously assembled. The team then began to search for changes, and were quickly rewarded. In 19 of the quasars, the broad absorption lines had disappeared.

What’s going on here? There are several possible explanations, but the simplest is that, in these quasars, gas clouds that we had seen previously are literally “gone with the wind” —the rotation of the quasar’s disk and wind have carried the clouds out of the line-of-sight between us and the quasar.

And because the sample of quasars is so large, and had been gathered in such a systematic manner, the team can go beyond simply identifying disappearing gas clouds. “We can quantify this phenomenon,” says Filiz Ak.

Finding nineteen such quasars out of 582 total indicates that about three percent of quasars show disappearing gas clouds over a three-year span, which in turn suggests that a typical quasar cloud spends about a century along our line of sight. “Since the universe is 14 billion years old, we’re used to astronomical phenomena lasting a very long time,” says Pat Hall of York University in Toronto, another team member. “It’s fascinating to discover something that changes within a human lifetime.”
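The step from "three percent in three years" to "about a century" can be reproduced with a back-of-envelope calculation. The sketch below assumes, as a simplification not stated in the article, that clouds leave the line of sight at a steady rate, so the typical time a cloud spends there is roughly the observing span divided by the fraction that disappeared. The function names are hypothetical.

```python
# Back-of-envelope check of the figures quoted in the article.
def disappearing_fraction(n_disappeared: int, n_total: int) -> float:
    """Fraction of the sample whose absorption troughs vanished."""
    return n_disappeared / n_total


def typical_time_in_sight_years(fraction: float, span_years: float) -> float:
    """Steady-rate assumption: if a fraction f vanishes in span T, a cloud lasts ~T / f."""
    return span_years / fraction


if __name__ == "__main__":
    fraction = disappearing_fraction(19, 582)            # figures from the article
    lifetime = typical_time_in_sight_years(fraction, 3)  # three-year span from the article
    print(f"fraction: {fraction:.1%}, typical time in sight: about {lifetime:.0f} years")
```

Under these assumptions the estimate comes out a little above 90 years, consistent with the "about a century" figure.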

Now, as other astronomers come up with models of quasar winds, their models will need to explain this 100-year timescale. As theorists begin to consider the results, the team continues to analyze their sample of quasars — more results are coming soon. “This is really exciting for me,” Filiz Ak says. “I’m sitting at my desk, discovering the nature of the most powerful winds in the Universe.”

Sloan Digital Sky Survey

Geoengineering The Sky Could Turn Earth Into “Lifeless, Ice-Encrusted Rock” Warns Scientist

October 2nd, 2012By Alton Parrish.

Prof. Jost Heintzenberg, Leibniz Institute Leipzig, is warning other scientists they don’t know enough to begin tinkering with Earth’s atmosphere with geoengineering experiments to influence climate change. If they do, the results could be disastrous for every living thing. Heintzenberg is a scientist at the prestigious Leibniz Institute for Tropospheric Research, a part of the Leibniz Association (WGL) in Germany, which is named after Gottfried Wilhelm Leibniz (1646 – 1716), philosopher and universal scholar.

Prof. Jost Heintzenberg

Credit: Leibniz Institute Leipzig

Carbon dioxide is not the only problem we must address if we are to understand and solve the problem of climate change. According to research published this month in the International Journal of Global Warming, we as yet do not understand adequately the role played by aerosols, clouds and their interaction and must take related processes into account before considering any large-scale geo-engineering.

There are 10 to the power of 40 molecules of the greenhouse gas carbon dioxide in the atmosphere. Those carbon dioxide molecules absorb and emit radiation mainly in the infrared region of the electromagnetic spectrum and their presence is what helps keep our planet at the relatively balmy temperatures we enjoy today.
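The order of magnitude quoted here can be checked with a rough estimate. The inputs below are approximate round values that do not come from the article: a total atmospheric mass of about 5.15 × 10^18 kg, a mean molar mass of dry air of about 28.97 g/mol, and a carbon dioxide mole fraction of about 390 parts per million. Treat it as a sanity check under those assumptions, not a precise inventory.

```python
# Rough order-of-magnitude estimate; all inputs are approximate assumptions.
AVOGADRO = 6.02214076e23          # molecules per mole (exact by definition)
ATMOSPHERE_MASS_KG = 5.15e18      # assumed approximate mass of the atmosphere
AIR_MOLAR_MASS_G_MOL = 28.97      # assumed mean molar mass of dry air
CO2_MOLE_FRACTION = 390e-6        # assumed, roughly 390 ppm


def co2_molecule_count() -> float:
    """Estimate the number of CO2 molecules in the atmosphere."""
    moles_of_air = ATMOSPHERE_MASS_KG * 1000.0 / AIR_MOLAR_MASS_G_MOL
    moles_of_co2 = moles_of_air * CO2_MOLE_FRACTION
    return moles_of_co2 * AVOGADRO


if __name__ == "__main__":
    print(f"about {co2_molecule_count():.1e} CO2 molecules")
```

With these inputs the estimate lands at a few times 10^40, in line with the figure given above.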

Too few absorbing molecules and the greenhouse effect would wane, bringing the kind of global cooling that would convert the whole planet into a lifeless, ice-encrusted rock floating in its orbit. Conversely, however, rising levels of atmospheric carbon dioxide lead to a rise in temperature. It is this issue that has given rise to the problem of anthropogenic climate change. Humanity has burned increasing amounts of fossil fuels since the dawn of the industrial revolution, releasing the carbon locked in those ancient stores into the atmosphere and boosting the number of carbon dioxide molecules there.

Nevertheless, Heintzenberg sees a conundrum in how to understand atmospheric aerosols and how they affect cloud formation and ultimately influence climate. There are multiple feedback loops to consider as well as the effect of climate forcing due to rising carbon dioxide levels on these species and vice versa. “The key role of aerosols and clouds in anthropogenic climate change make the high uncertainties related to them even more painful,” says Heintzenberg. It is crucial that we understand their effects.

A conceptualized image of an unmanned, wind-powered, remotely controlled ship that could be used to implement cloud brightening.

Contacts and sources:

Leibniz Institute for Tropospheric Research

Amazing! Plants That Flee From Predators Just Discovered

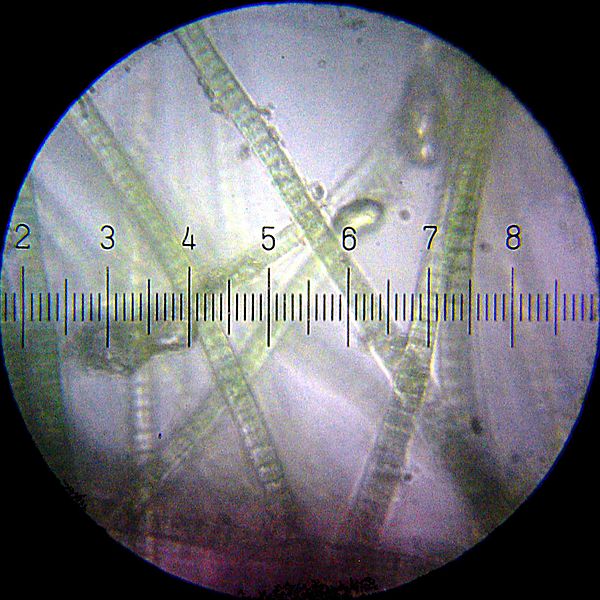

September 30th, 2012

First observation of predator avoidance behavior by phytoplankton. In the oceans, tiny plants swim away from tiny animals known as zooplankton that would eat them.

Significant modulation of phytoplankton swimming speed and vertical velocity was observed by Menden-Deuer and her colleagues when H. akashiwo was exposed to the actively grazing predator, Favella sp. They observed predator-induced defense behaviors previously unknown for phytoplankton. Modulation of individual phytoplankton movements during and after predator exposure resulted in an effective separation of predator and prey species. The strongest avoidance behaviors were observed when H. akashiwo co-occurred with an actively grazing predator. Predator-induced changes in phytoplankton movements resulted in a reduction in encounter rate and a 3-fold increase in net algal population growth rate.

Their discovery will be published in the September 28 issue of the journal PLOS ONE.

“It has been well observed that phytoplankton can control their movements in the water and move toward light and nutrients,” Menden-Deuer said. “What hasn’t been known is that they respond to predators by swimming away from them. We don’t know of any other plants that do this.”

While imaging 3-dimensional predator-prey interactions, the researchers noted that the phytoplankton Heterosigma akashiwo swam differently in the presence of predators, and groups of them shifted their distribution away from the predators.

“The phytoplankton can clearly sense the predator is there. They flee even from the chemical scent of the predator but are most agitated when sensing a feeding predator,” said Menden-Deuer.

When the scientists provided the phytoplankton with a refuge to avoid the predator – an area of low salinity water that the predators cannot tolerate – the phytoplankton moved to the refuge.

The important question these observations raise, according to Menden-Deuer, is how these interactions affect the survival of the prey species.

Measuring survival in the same experiments, the researchers found that fleeing helps the alga survive. Given a chance, the predators will eat all of the phytoplankton in one day if the algae have no safe place to which they can escape. With a refuge available, however, the algal population doubles every 48 hours. Fleeing makes the difference between life and death for this species, said Menden-Deuer.
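To get a feel for what doubling every 48 hours means, the sketch below assumes idealized exponential growth with no losses, which is a simplification and not the researchers' own model. The function name and the starting population of 1,000 cells are hypothetical.

```python
# Idealized exponential growth with a fixed doubling time (an assumption,
# not the model used in the study).
DOUBLING_TIME_HOURS = 48.0  # figure quoted in the article


def population_after(initial_cells: float, hours: float) -> float:
    """Population after `hours`, doubling once every DOUBLING_TIME_HOURS."""
    return initial_cells * 2.0 ** (hours / DOUBLING_TIME_HOURS)


if __name__ == "__main__":
    start = 1_000.0  # hypothetical starting population
    for day in (0, 2, 4, 6, 8):
        print(f"day {day}: {population_after(start, day * 24):.0f} cells")
```

Under this assumption a population with a refuge grows sixteen-fold in eight days, whereas the article reports that one without a refuge is eaten within a day.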

“One of the puzzling things about some phytoplankton blooms is that they suddenly appear,” she said. “Growth and nutrient availability don’t always explain the formation of blooms. Our observation of algal fleeing from predators is another mechanism for how blooms could form. Amazingly, looking at individual microscopic behaviors can help to explain a macroscopic phenomenon.”

The researchers say there is no way of knowing how common this behavior is or how many other species of phytoplankton also flee from predators, since this is the first observation of such a behavior.

“If it is common among phytoplankton, then it would be a very important process,” Menden-Deuer said. “I wouldn’t be surprised if other species had that capacity. It would be very beneficial to them.”

In future studies, she hopes to observe these behaviors in the ocean and couple them with genetic investigations.

Funding for this research was provided by the National Science Foundation, the National Oceanic and Atmospheric Administration, and the U.S. Department of Agriculture. The study was conducted, in part, at the URI Marine Life Science Facility, which is supported by the Rhode Island Experimental Program to Stimulate Competitive Research.

Contacts and sources:

Todd McLeish

University of Rhode Island

Microbial Bebop: Listen To The Music Of Undersea Microbes

September 30th, 2012

Soft horns and a tinkling piano form the backbone of “Fifty Degrees North, Four Degrees West,” a jazz number with two interesting twists: it has no composer and no actual musicians. Unless you count bacteria and other tiny microbes, that is.

When faced with an avalanche of microbial data collected from samples taken from the western English Channel, Larsen recognized he needed a way to make sense of it all. “Thinking of interesting ways to highlight interactions within data is part of my daily job,” he said. “I am always trying to find new ways to visualize those relationships in ways so that someone can make relevant biological conclusions.”

Credit: Wikipedia

“There are certain parameters like sunlight, temperature or the concentration of phosphorus in the water that give a kind of structure to the data and determine the microbial populations,” he said. “This structure provides us with an intuitive way to use music to describe a wide range of natural phenomena.”

A colleague of Larsen’s suggested that classical music could effectively represent the data, but Larsen wanted any patterns inherent in the information to emerge naturally and not to be imposed from without.

“For something as structured as classical music, there’s an insufficient amount of structure that you can infer without having to tweak the result to fit what you perceive it should sound like,” Larsen said. “We didn’t want to do that.”

While this is not the first attempt to “sonify” data, it is one of the more mellifluous examples of the genre. “We were astounded by just how musical it sounded,” Larsen said. “A large majority of attempts to convert linear data into sound succeed, but they really don’t obey the dictates of music – meter, tempo, harmony. To see these things in natural phenomena and to describe them was a wonderful surprise.”

According to Larsen, the musicality of the data is not limited to the organisms in the English Channel. In another set of analyses, he and his colleagues used a similar methodology to look at the relationship between a plant and a fungus.

“We expect to see the same intuitive patterns recurring in different environments,” he said. “Sometimes, it can sound a little avant-garde, but it’s not random because it reflects very real phenomena.”

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

Contacts and sources:

Jared Sagoff

DOE/Argonne National Laboratory

Time Bomb: Millions Of Pounds Of Unexploded Bombs In Gulf Of Mexico Pose Threat To Shipping

September 29th, 2012

By Alton Parrish.

Millions of pounds of unexploded bombs and other military ordnance that were dumped decades ago in the Gulf of Mexico, as well as off the coasts of both the Atlantic and Pacific oceans, could now pose serious threats to shipping lanes and the 4,000 oil and gas rigs in the Gulf, warn two Texas A&M University oceanographers.

Credit: Texas A&M University

Millions of pounds – no one, including the military, knows how many – were sent to the ocean floor as numerous bases tried to lessen the amount of ordnance at their respective locations.

“The best guess is that at least 31 million pounds of bombs were dumped, but that could be a very conservative estimate,” Bryant notes.

“And these were all kinds of bombs, from land mines to the standard military bombs, also several types of chemical weapons. Our military also dumped bombs offshore that they got from Nazi Germany right after World War II. No one seems to know where all of them are and what condition they are in today.”

Photos show that some of the chemical weapons canisters, such as those that carried mustard gas, appear to be leaking materials and are damaged.

“Is there an environmental risk? We don’t know, and that in itself is reason to worry,” explains Bryant. “We just don’t know much at all about these bombs, and it’s been 40 to 60 years that they’ve been down there.”

With the ship traffic needed to support the 4,000 energy rigs, not to mention commercial fishing, cruise lines and other activities, the Gulf can be a sort of marine interstate highway system of its own. There are an estimated 30,000 workers on the oil and gas rigs at any given moment.

The bombs are no stranger to Bryant and Slowey, who have come across them numerous times while conducting various research projects in the Gulf, and they have photographed many of them sitting on the Gulf floor like so many bowling pins, some in areas cleared for oil and gas platform installation.

“We surveyed some of them on trips to the Gulf within the past few years,” he notes. “Ten are about 60 miles out and others are about 100 miles out. The next closest dump site to Texas is in Louisiana, not far from where the Mississippi River delta area is in the Gulf. Some shrimpers have recovered bombs and drums of mustard gas in their fishing nets.”

Bombs used in the military in the 1940s through the 1970s ranged from 250- to 500- and even 1,000-pound explosives, some of them the size of file cabinets. The military has a term for such unused bombs: UXO, or unexploded ordnance.

“Record keeping of these dump sites seems to be sketchy and incomplete at best. Even the military people don’t know where all of them are, and if they don’t know, that means no one really knows,” Bryant adds. He believes that some munitions were “short dumped,” meaning they were discarded outside designated dumping areas.

The subject of the disposal of munitions at sea has been discussed at several offshore technology conferences in recent years, and it was a topic at an international conference several years ago in Poland, Bryant says.

“The bottom line is that these bombs are a threat today and no one knows how to deal with the situation,” Bryant says. “If chemical agents are leaking from some of them, that’s a real problem. If many of them are still capable of exploding, that’s another big problem.

“There is a real need to research the locations of these bombs and to determine if any are leaking materials that could be harmful to marine life and humans,” Bryant says.

For more information about the underwater munitions conference, go to http://www.underwatermunitions.org/

Contacts and sources:

Keith Randall

Texas A&M University

Stellar Shockwaves Shaped Our Solar System

September 29th, 2012

By Alton Parrish.

The early years of our Solar System were a turbulent time, and questions remain about its development. Dr Tagir Abdylmyanov, Associate Professor from Kazan State Power Engineering University, has been researching shockwaves emitted from our very young Sun, and has discovered that these would have caused the planets in our Solar System to form at different times.

Contacts and sources:

Dr Tagir Abdylmyanov, Kazan State Power Engineering University

Extraterrestrial “Iron Man”: Buddhist Statue, Discovered By Nazi Expedition, Is Made Of Meteorite, New Study Reveals

September 28th, 2012

By Alton Parrish.

Priceless thousand year old statue is first carving of a human in a meteorite.

It sounds like an artifact from an Indiana Jones film: a 1,000-year-old Buddhist statue, first recovered by a Nazi expedition in 1938, has been analysed by scientists and found to be carved from a meteorite. The findings, published in Meteoritics and Planetary Science, reveal that the meteorite belongs to the rare ataxite class.

A thousand year-old ancient Buddhist statue known as the Iron Man

Credit: Stuttgart University, Elmar Buchner

The statue, known as the Iron Man, weighs 10kg and is believed to represent a stylistic hybrid between the Buddhist and pre-Buddhist Bon culture that portrays the god Vaisravana, the Buddhist King of the North, also known as Jambhala in Tibet.

The statue was discovered in 1938 by an expedition of German scientists led by renowned zoologist Ernst Schäfer. The expedition was supported by Nazi SS Chief Heinrich Himmler and the entire expeditionary team were believed to have been SS members.

Schäfer would later claim that he accepted SS support to advance his scientific research into the wildlife and anthropology of Tibet. However, historians believe Himmler’s support may have been based on his belief that the origins of the Aryan race could be found in Tibet.

It is unknown how the statue was discovered, but it is believed that the large swastika carved into the centre of the figure may have encouraged the team to take it back to Germany. Once it arrived in Munich it became part of a private collection and only became available for study following an auction in 2007.

The first team to study the origins of the statue was led by Dr Elmar Buchner from Stuttgart University. The team was able to classify it as an ataxite, a rare class of iron meteorite with a high nickel content.

“The statue was chiseled from a fragment of the Chinga meteorite which crashed into the border areas between Mongolia and Siberia about 15,000 years ago,” said Dr Buchner. “While the first debris was officially discovered in 1913 by gold prospectors, we believe that this individual meteorite fragment was collected many centuries before.”

Meteorites have inspired worship in many ancient cultures, from the Inuit of Greenland to the Aborigines of Australia. Even today one of the most famous worship sites in the world, Mecca in Saudi Arabia, is centred on the Black Stone, which is believed to be a stony meteorite. Dr Buchner’s team believes the Iron Man originated from the Bon culture of the 11th century.

“The Iron Man statue is the only known illustration of a human figure to be carved into a meteorite, which means we have nothing to compare it to when assessing value,” concluded Dr Buchner. “Its origins alone may value it at $20,000; however, if our estimation of its age is correct and it is nearly a thousand years old it could be invaluable.”

The fall of meteorites has been interpreted as a divine message by many cultures since prehistoric times, and meteorites are still adored as heavenly bodies. Stony meteorites were used to carve birds and other works of art; jewelry and knives were made of meteoritic iron, for instance by the Inuit.

The approximately 10.6 kg Buddhist sculpture (the “iron man”) is made of an iron meteorite, which represents a particularity in religious art and meteorite science. The specific contents of the crucial main (Fe, Ni, Co) and trace (Cr, Ga, Ge) elements indicate that an ataxitic iron meteorite with high Ni (approximately 16 wt%) and Co (approximately 0.6 wt%) contents was used to produce the artifact.

In addition, the platinum group elements (PGEs), as well as the internal PGE ratios, exhibit a meteoritic signature. The geochemical data of the meteorite generally match the element values known from fragments of the Chinga ataxite (ungrouped iron) meteorite strewn field discovered in 1913. The provenance of the meteorite as well as of the piece of art strongly points to the border region of eastern Siberia and Mongolia, accordingly. The sculpture possibly portrays the Buddhist god Vaiśravana and might originate in the Bon culture of the eleventh century. However, the ethnological and art historical details of the “iron man” sculpture, as well as the timing of the sculpturing, currently remain speculative.

Citation: Elmar Buchner, Martin Schmieder, Gero Kurat, Franz Brandstaetter, Utz Kramar, Theo Ntaflos, Joerg Kroechert, “Buddha from space — An ancient object of art made of a Chinga iron meteorite fragment”, Meteoritics & Planetary Science, September 2012, DOI: 10.1111/j.1945-5100.2012.01409.x

http://onlinelibrary.wiley.com/doi/10.1111/j.1945-5100.2012.01409.x/abstract