Posts by AltonParrish:

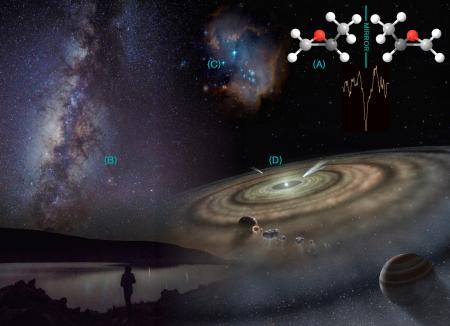

Prebiotic Molecule Detected in Interstellar Cloud

June 15th, 2016

By Alton Parrish.

Chiral molecules, compounds that come in otherwise identical mirror-image variations like a pair of human hands, are crucial to life as we know it. Living things are selective about which “handedness” of a molecule they use or produce. For example, all living things exclusively use the right-handed form of the sugar ribose (the backbone of RNA and, as deoxyribose, of DNA), and grapes exclusively synthesize the left-handed form of the molecule tartaric acid. While homochirality (the use of only one handedness of any given molecule) is evolutionarily advantageous, it is unknown how life chose the molecular handedness seen across the biosphere.

Now, Caltech researchers have detected, for the first time, a chiral molecule outside of our solar system, bringing them one step closer to understanding one of the most puzzling mysteries of the early origins of life.

A paper about the work appears in the June 17 issue of the journal Science.

The different forms, or enantiomers, of a chiral molecule have the same physical properties, such as the temperatures at which they boil and melt. Chemical interactions with other chiral species, however, can vary greatly between enantiomers. For instance, many chiral pharmaceutical chemicals are effective in only one handedness; in the other, they can be toxic.

“Homochirality is one of the most interesting properties of life as we know it,” says Geoffrey Blake (PhD ’86), professor of cosmochemistry and planetary sciences and professor of chemistry. “How did it come to be that all living things use one enantiomer of a particular amino acid, for example, over another? If we could run the tape of life again, would the same enantiomers be selected through a deterministic process, or is a random choice made that depends on a tiny imbalance of one handedness over the other? If there is life elsewhere in the universe, based on the biochemistry we know, will it use the same enantiomers?”

To help answer these questions, Blake and his colleagues at the National Radio Astronomy Observatory (NRAO) searched one particular molecular cloud, called Sagittarius B2(N), for chiral molecules. The team used data from the Prebiotic Interstellar Molecular Survey (PRIMOS) of Sagittarius B2(N), carried out with the Green Bank Telescope. The PRIMOS project, led by co-senior author Anthony Remijan of the NRAO, examines the spectrum of Sagittarius B2(N) across a broad range of radio frequencies. Every gas-phase molecule can tumble only in specific ways that depend on its size and shape, giving it a unique rotational spectrum, like a fingerprint, that makes it readily identifiable in the PRIMOS survey.
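To illustrate why a rotational spectrum acts as a fingerprint, here is a minimal sketch of the simplest textbook case, a linear rigid rotor. Its J → J+1 absorption lines fall at ν = 2B(J+1), where the rotational constant B = h/(8π²I) depends on the molecule's moment of inertia I, and therefore on its size and shape. Propylene oxide is actually an asymmetric-top molecule with a more complicated spectrum, and the moments of inertia below are hypothetical values chosen only for illustration.

```python
import math

PLANCK_H = 6.62607015e-34  # Planck constant, J*s


def rotational_constant_hz(moment_of_inertia: float) -> float:
    """Rigid-rotor rotational constant B = h / (8 * pi^2 * I), in Hz (I in kg*m^2)."""
    return PLANCK_H / (8 * math.pi ** 2 * moment_of_inertia)


def linear_rotor_lines(b_hz: float, n_lines: int) -> list[float]:
    """Frequencies (Hz) of the first n J -> J+1 transitions of a linear rigid rotor: 2B(J+1)."""
    return [2 * b_hz * (j + 1) for j in range(n_lines)]


# Hypothetical moments of inertia for two differently sized molecules.
molecules = {"smaller molecule": 1.0e-45, "larger molecule": 2.0e-45}
for name, inertia in molecules.items():
    b = rotational_constant_hz(inertia)
    lines_ghz = [round(f / 1e9, 2) for f in linear_rotor_lines(b, 4)]
    print(f"{name}: B = {b / 1e9:.2f} GHz, lines at {lines_ghz} GHz")
```

Because the line spacing (2B) shrinks as the moment of inertia grows, molecules of different size and shape produce different line patterns, which is what allows a broadband survey to pick out one specific molecule.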

The PRIMOS data revealed the signature of a chiral molecule called propylene oxide (CH3CHOCH2); follow-up studies with the Parkes radio telescope in Australia confirmed the findings. “It’s the first molecule detected in space that has the property of chirality, making it a pioneering leap forward in our understanding of how prebiotic molecules are made in space and the effects they may have on the origins of life,” says Brandon Carroll, co-first author on the paper and a graduate student in Blake’s group. “While the technique we used does not tell us about the abundance of each enantiomer, we expect this work to enable future observations that will let us understand a great deal more about chiral molecules, the origins of homochirality, and the origins of life in general.”

Propylene oxide is a useful molecule to study because it is relatively small compared to biomolecules such as amino acids; larger molecules are more difficult to detect with radio astronomy, but have been seen in meteorites and comets formed at the birth of the solar system. Though propylene oxide is not utilized in living organisms, its presence in space is a signpost for the existence of other chiral molecules.

“The next step is to detect an excess of one enantiomer over the other,” says Brett McGuire (PhD ’15), an NRAO Jansky Fellow and former member of the Blake lab, who shares first authorship on the work with Carroll. “By discovering a chiral molecule in space, we finally have a way to study where and how these molecules form before they find their way into meteorites and comets, and to understand the role they play in the origins of homochirality and life.”


“The past few years of exoplanetary science have told us there are millions of solar system-like environments in our galaxy alone, and thousands of nearby young stars around which planets are being born,” says Blake. “The detection of propylene oxide, and the future projects it enables, lets us begin to ask the question—does interstellar prebiotic chemistry plant the primordial cosmic seeds that determine the handedness of life?”

Additional coauthors on the paper, titled “Discovery of the interstellar chiral molecule propylene oxide (CH3CHOCH2),” include Caltech graduate student Ian Finneran, a member of the Blake group. The work is supported by the National Science Foundation Astronomy and Astrophysics and Graduate Fellowship grant programs and the NASA Astrobiology Institute through the Goddard Team and the Early Career Collaboration award program.


Citizen Scientists Discover Huge Galaxy Cluster

June 14th, 2016

By Alton Parrish.

Two volunteer participants in an international citizen science project have had a rare galaxy cluster that they found named after them.

The pair pieced together the huge C-shaped structure from much smaller images of cosmic radio waves shown to them as part of the web-based program Radio Galaxy Zoo.

The discovery surprised the astronomers running the program, said the study’s lead author, Dr Julie Banfield of the ARC Centre of Excellence for All-sky Astrophysics (CAASTRO) at The Australian National University (ANU).

“They found something that none of us had even thought would be possible,” said Dr Banfield.

More than 10,000 volunteers have joined in with Radio Galaxy Zoo, classifying over 1.6 million images from NASA’s Wide-Field Infrared Survey Explorer telescope and the NRAO Very Large Array in New Mexico, USA.

A radio contour overlay showing the newly-discovered Matorny-Terentev Cluster RGZ-CL J0823.2+0333

“The dataset is just too big for any individual or small team to plough through, but we have already reached almost 60% completeness,” said Dr Banfield.

The project is led by Dr Banfield and Dr Ivy Wong, who is based at the International Centre for Radio Astronomy Research (ICRAR) at The University of Western Australia.

“Although radio astronomy is not as pretty as optical images from the Hubble Space Telescope, people can find cool things, like black holes, quasars, spiral galaxies or clusters of galaxies.”

The astronomers classified the newly discovered feature as a wide angle tail (WAT) radio galaxy, named for the C-shaped tails formed by the highly energetic jets of plasma being ejected from it.

It is part of a previously unreported sparsely-populated galaxy cluster and one of the biggest ever found.

Their discovery has now been published in the Monthly Notices of the Royal Astronomical Society with the two volunteers included as co-authors.

“This radio galaxy might have had two distinct episodes of activity during its lifetime, with quiet times of approximately 1 million years in between,” said Radio Galaxy Zoo science team member and co-author Dr Anna Kapinska, also of CAASTRO / ICRAR at the University of Western Australia.

But the discovery of the Matorny-Terentev Cluster RGZ-CL J0823.2+0333, now bearing the names of the two citizen scientists, means even more than having added another piece to our cosmic puzzle.

While the unusual, bent shape of WATs has proven an excellent beacon for detecting galaxy clusters, such galaxies will always be difficult for algorithms to detect, which is where citizen science can play a huge part.

Through big projects such as Radio Galaxy Zoo, citizen science has established itself as a powerful research tool for astronomy, especially in view of future challenges such as the EMU survey in Australia (the “Evolutionary Map of the Universe” with the Australian Square Kilometre Array Pathfinder, ASKAP) and MeerKAT MIGHTEE in South Africa.

“Expanding on projects such as Radio Galaxy Zoo or on machine-learning techniques will be key to finding these unusual structures and to studying galaxy clusters,” said Dr Banfield.

The Radio Galaxy Zoo team has entered the project in this year’s Australian Museum Eureka Prizes, whose winners are expected to be announced during National Science Week.


‘Tatooine’ Planet Confirmed by Crowdsourcing

June 14th, 2016

By Alton Parrish.

Crowdsourcing is used for everything from raising funds to locating the best burger in town. The practice of enlisting a large group of people to provide a service, information or a contribution to a project, most often via the internet, is a defining feature of our era.

Though the term crowdsourcing was coined in 2005 by Wired magazine editors to describe how businesses were outsourcing work, one of crowdsourcing’s earliest uses was for science. A plea from Yale science professor Denison Olmsted, published in a newspaper article in 1833, asked the public to send in their observations about a major meteor storm. Olmsted then used the information to make significant advances in our understanding of the nature of such storms.

This is an artist’s impression of the simultaneous stellar eclipse and planetary transit events on Kepler-1647. Such a double eclipse event is known as a syzygy.

Now Joshua Pepper, an astronomer and assistant professor of physics at Lehigh University, is using crowdsourcing to gather observations worldwide, and the information is being used to verify the discovery of new planets. His network, known as the “KELT Follow-Up Network,” is made up of nearly 40 members in 10 countries across 4 continents. The group, the largest and most coordinated network of its kind, contributed key observations to confirm the existence of the recently identified planet Kepler-1647 b.

Joshua Pepper, an astronomer and assistant professor of physics at Lehigh University, leads the “KELT Follow-Up Network.” Made up of nearly 40 members in 10 countries across 4 continents, it is the largest, most coordinated network of its kind.

The new planet was discovered by a team at NASA’s Goddard Space Flight Center and San Diego State University using the Kepler Space Telescope. The discovery was announced today in San Diego, at a meeting of the American Astronomical Society. The research has been accepted for publication in the Astrophysical Journal with Veselin Kostov, a NASA Goddard postdoctoral fellow, as lead author.

Planets that orbit two stars are known as circumbinary planets, or sometimes “Tatooine” planets, after Luke Skywalker’s homeland in “Star Wars.” Using NASA’s Kepler telescope, astronomers search for slight dips in brightness suggesting that a planet might be transiting in front of a star, blocking a small portion of the star’s light.

“But finding circumbinary planets is much harder than finding planets around single stars,” said SDSU astronomer William Welsh, one of the paper’s coauthors. “The transits are not regularly spaced in time and they can vary in duration and even depth.”
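The “slight dips in brightness” described above can be estimated with a standard first-order approximation: a planet of radius R_p fully in front of a star of radius R_* blocks a fraction of roughly (R_p/R_*)² of that star’s light, ignoring limb darkening. The sketch below applies this to a Jupiter-sized planet and a Sun-sized star. It is a generic illustration, not the analysis used for Kepler-1647 b; for a binary, the light of the second star dilutes the dip further, modeled here with a hypothetical `host_light_fraction` parameter.

```python
R_JUPITER_KM = 71_492   # Jupiter equatorial radius
R_SUN_KM = 695_700      # nominal solar radius


def transit_depth(planet_radius_km: float, star_radius_km: float,
                  host_light_fraction: float = 1.0) -> float:
    """Fractional dip in total brightness while the planet is fully in front of the star.

    host_light_fraction is the share of the system's light that comes from the
    eclipsed star (1.0 for a single star, less than 1 when a companion dilutes
    the dip). Limb darkening is ignored.
    """
    return (planet_radius_km / star_radius_km) ** 2 * host_light_fraction


single = transit_depth(R_JUPITER_KM, R_SUN_KM)
print(f"single star: {single:.2%}")  # about 1%

# Hypothetical case: the eclipsed star supplies 60% of the binary's light.
binary = transit_depth(R_JUPITER_KM, R_SUN_KM, host_light_fraction=0.6)
print(f"diluted by companion: {binary:.2%}")
```

A dip of about one percent is easy for Kepler to see; the difficulty Welsh describes comes from the irregular timing, duration and depth of the transits, not from their size.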

This is a bird’s-eye view comparison of the orbits of the Kepler circumbinary planets. Kepler-1647 b’s orbit, shown in red, is much larger than those of the other planets (shown in gray). For comparison, the Earth’s orbit is shown in blue.

To help verify what they had seen, the researchers made use of the worldwide network of professional and amateur astronomers that Pepper, a coauthor on the paper, had created.

“Most members of our network have small telescopes that are not able to observe distant galaxies, but they are very well suited to observing bright stars, like the ones Kepler-1647 b is orbiting,” said Pepper.

The two network astronomers whose observations helped researchers to estimate the mass of the new planet are Eric L.N. Jensen, professor of astronomy at Swarthmore College, and Joao Gregorio, an amateur astronomer in Portugal; both are listed as co-authors on the paper.

“It’s really exciting for me to be part of this discovery, since I’ve been working on this problem for a long time,” said Professor Jensen. “As a graduate student in the early 1990s, I studied dusty disks around young binary stars. We thought that such disks should form planets, but that was before any planets had been discovered outside the solar system, so it was just speculation at that point. I never thought that one day I would help discover a circumbinary planet.”

“As an amateur being involved in the KELT follow-up network, giving my contribution to the discovery of ‘new worlds’ is amazing. I’m very proud to be able to contribute,” said Mr. Gregorio.

Kepler-1647 is 3,700 light-years away and approximately 4.4 billion years old, roughly the same age as the Earth. The stars are similar to the Sun, with one slightly larger than our home star and the other slightly smaller. The planet has a mass and radius nearly identical to those of Jupiter, making it the largest transiting circumbinary planet ever found.

“It’s a bit curious that this biggest planet took so long to confirm, since it is easier to find big planets than small ones,” said SDSU astronomer Jerome Orosz, another coauthor on the study. “But it is because its orbital period is so long.”

The planet takes 1,107 days (just over 3 years) to orbit its host stars, the longest period of any confirmed transiting exoplanet found so far. The planet is also much farther from its stars than any other circumbinary planet, breaking with the tendency for circumbinary planets to have close-in orbits. Interestingly, its orbit puts the planet within the so-called habitable zone. Like Jupiter, however, Kepler-1647 b is a gas giant, making the planet unlikely to host life. Yet if the planet has large moons, they could potentially be suitable for life.
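The link between the 1,107-day period and the planet’s wide orbit follows from Kepler’s third law: in units of astronomical units (AU), years and solar masses, a³ ≈ M·P² for a body orbiting a total mass M. The sketch below assumes a combined stellar mass of about 2 solar masses, a round number suggested by the description of two roughly Sun-like stars rather than the paper’s measured values, and it neglects the planet’s own mass.

```python
DAYS_PER_YEAR = 365.25


def semi_major_axis_au(period_days: float, total_mass_msun: float) -> float:
    """Kepler's third law in solar units: a^3 = M * P^2 (a in AU, P in years, M in solar masses)."""
    period_years = period_days / DAYS_PER_YEAR
    return (total_mass_msun * period_years ** 2) ** (1 / 3)


# Assumption: the two host stars together weigh roughly 2 solar masses.
a = semi_major_axis_au(1107, 2.0)
print(f"Kepler-1647 b semi-major axis ~ {a:.1f} AU")

# Sanity check: Earth around the Sun should come out at 1 AU.
print(f"Earth: {semi_major_axis_au(365.25, 1.0):.2f} AU")
```

Doubling the assumed mass changes the result by only a factor of 2^(1/3) ≈ 1.26, so this rough estimate is not very sensitive to the exact stellar masses.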

“Habitability aside, Kepler-1647 b is important because it is the tip of the iceberg of a theoretically predicted population of large, long-period circumbinary planets,” Welsh said.

The “KELT Follow-Up Network” was originally assembled to help Pepper and his colleagues identify exoplanets for a project he founded, the Kilodegree Extremely Little Telescope (KELT) survey. It uses two robotic programmable telescopes, one in Arizona called KELT North and the other in South Africa known as KELT South.

The survey has confirmed 15 exoplanets using the transit method. Lehigh, Vanderbilt University and Ohio State run the KELT project together. The project’s low-resolution telescopes are dwarfed by other telescopes, whose apertures of several meters let them stare at tiny sections of the sky at high resolution. The wide-angle KELT view of the universe, by contrast, comes from a mere 4.5-centimeter aperture with a high-quality digital camera and lens assembly that captures the light of 100,000 stars with each exposure.
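How much smaller is KELT’s light-collecting area? For a circular aperture, collecting area scales with the square of the diameter. The sketch compares the 4.5-centimeter KELT aperture from the text with a hypothetical 4-meter telescope standing in for the “several meters” class; the 4 m figure is an illustrative assumption.

```python
def collecting_area_ratio(big_aperture_m: float, small_aperture_m: float) -> float:
    """Ratio of light-collecting areas of two circular apertures (area scales with diameter^2)."""
    return (big_aperture_m / small_aperture_m) ** 2


KELT_APERTURE_M = 0.045   # 4.5 cm, as stated in the text
LARGE_APERTURE_M = 4.0    # hypothetical "several meters" telescope

ratio = collecting_area_ratio(LARGE_APERTURE_M, KELT_APERTURE_M)
print(f"A 4 m telescope collects ~{ratio:,.0f} times more light per unit time")
```

The trade-off runs the other way for sky coverage: the small aperture and wide-angle optics are what let KELT monitor about 100,000 bright stars in each exposure.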

“The goal of KELT is to discover more planets that are transiting the brightest stars we can see. In essence, those give us the very rare, very valuable planets,” Pepper says.


Light Pollution Blots Out The Stars for 99% of U.S. and Europe

June 13th, 2016

By Alton Parrish.

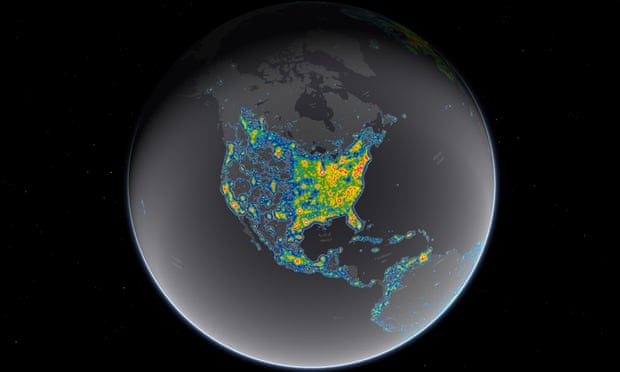

Artificial lights raise night sky luminance, creating the most visible effect of light pollution: artificial skyglow. Despite growing interest among scientists in fields such as ecology, astronomy, health care, and land-use planning, there has been no up-to-date quantification of the magnitude of light pollution on a global scale.

A new atlas of light pollution documents the degree to which the world is illuminated by artificial skyglow. In addition to being a scourge for astronomers, bright nights also affect nocturnal organisms and the ecosystems in which they live. The “New World Atlas of Artificial Night Sky Brightness” was published in the open access journal Science Advances on June 10, 2016.

The Milky Way disappears in Berlin’s light-dome.

Researchers from Italy, Germany, the USA, and Israel carried out the work, which was led by Fabio Falchi from the Italian Light Pollution Science and Technology Institute (ISTIL). “The new atlas provides a critical documentation of the state of the night environment as we stand on the cusp of a worldwide transition to LED technology,” explains Falchi. “Unless careful consideration is given to LED color and lighting levels, this transition could unfortunately lead to a 2-3 fold increase in skyglow on clear nights.”


This atlas shows that more than 80% of the world’s population and more than 99% of the U.S. and European populations live under light-polluted skies. The Milky Way is hidden from more than one-third of humanity, including 60% of Europeans and nearly 80% of North Americans. Moreover, 23% of the world’s land surfaces between 75°N and 60°S, 88% of Europe, and almost half of the United States experience light-polluted nights.

Major advances over a similar atlas from 2001 were possible thanks to a new satellite and to the recent development of inexpensive sky radiance meters. City lighting information for the atlas came from the American Suomi NPP satellite, which includes the first instrument intentionally designed to make accurate observations of urban lights from space. The atlas was calibrated using data from “Sky Quality Meters” at 20,865 individual locations around the world. The participation of citizen scientists in collecting the calibration data was critical, according to Dr. Christopher Kyba, a study co-author and researcher at the GFZ German Research Centre for Geosciences. “Citizen scientists provided about 20% of the total data used for the calibration, and without them we would not have had calibration data from countries outside of Europe and North America.”

“The community of scientists who study the night have eagerly anticipated the release of this new Atlas,” said Dr. Sibylle Schroer, who coordinates the EU-funded “Loss of the Night Network” and is not one of the study’s authors. The director of the International Dark-Sky Association, Scott Feierabend, also hailed the work as a major breakthrough, saying “the new atlas acts as a benchmark, which will help to evaluate the success or failure of actions to reduce light pollution in urban and natural areas.”

Milky Way from Fairy Meadows


Can Computers Do Magic?

June 11th, 2016

By Alton Parrish.

Magicians could join composers and artists in finding new ideas for their performances by using computers to create new magic effects, according to computer scientists at Queen Mary University of London (QMUL).

Writing in the open access journal Frontiers in Psychology, the scientists, one of whom is also a practicing magician, have looked at modelling particular human perceptual quirks and processes, and at building computer systems able to search for and find designs for new tricks based on these potential responses from the audience.

Co-author Professor Peter McOwan, from QMUL’s School of Electronic Engineering and Computer Science, said: “While there’s much speculation as to when computers might take over human jobs, it’s safe to assume magicians don’t have to worry… yet.

“Where computer science and artificial intelligence can help is in conjuring new tricks, which the magician could then perform.”

The internet and social media platforms, such as Snapchat, Instagram and Facebook, provide rich sets of ready-made psychological data about how people use language in day-to-day life.

This kind of data, the authors explain in the paper, could be exploited by computational systems that combine and search large datasets to automatically generate new tricks, by relying on the often ambiguous mental associations people have with particular words in certain contexts. For example, using clusters of words and their associated meanings could allow a magician to anticipate how a spectator might connect seemingly incongruous words in the right context, and so predict what they might say in a particular situation.
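As a toy illustration of the kind of search described above, the sketch below uses a small, hypothetical table of word associations (invented for this example, not data from the paper or from any social-media platform) and ranks “bridge” words that connect two cue words a spectator might hear. A real system would derive association strengths from large datasets; the scoring rule used here, where a bridge word is only as strong as its weaker link, is likewise an assumption.

```python
# Hypothetical association strengths between words (0 = none, 1 = very strong).
ASSOCIATIONS: dict[str, dict[str, float]] = {
    "ocean": {"blue": 0.9, "wave": 0.8, "salt": 0.6, "ship": 0.7},
    "sky": {"blue": 0.95, "cloud": 0.8, "bird": 0.5, "wave": 0.1},
    "kitchen": {"salt": 0.7, "knife": 0.6, "oven": 0.8},
}


def bridge_words(cue_a: str, cue_b: str) -> list[tuple[str, float]]:
    """Rank words linked to both cues; each word scores the weaker of its two links."""
    links_a = ASSOCIATIONS.get(cue_a, {})
    links_b = ASSOCIATIONS.get(cue_b, {})
    shared = set(links_a) & set(links_b)
    scored = [(word, min(links_a[word], links_b[word])) for word in shared]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


print(bridge_words("ocean", "sky"))      # "blue" ranks first
print(bridge_words("ocean", "kitchen"))
```

In this toy model, a trick designer would build around the top-ranked bridge word, since it is the connection a spectator is most likely to make.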

Co-author Dr Howard Williams, also from QMUL’s School of Electronic Engineering and Computer Science, said: “Magicians and trick designers, and those in other creative fields, such as music and design, already use machines as development aids; however, we point out that computers also have the potential to be creative aids, generating some aspects of the creative output themselves, though currently in a highly supervised way.”

Other areas of new magic might include:

Stage magic: where computers could be used to evaluate the range of comfortable positions a concealed body could take in a cabinet;


The GTC Obtains the Deepest Image of a Galaxy from Earth

June 11th, 2016

By Alton Parrish.

The telescope on La Palma produces an image 10 times deeper than any other taken from a ground-based telescope and observes the faint stellar halo of one of our neighboring galaxies, which supports the presently accepted model of galaxy formation.

Observing very distant objects in the universe is a challenge because the light which reaches us is extremely faint. Something similar occurs with objects which are not so distant but have very low surface brightness. Measuring this brightness is difficult due to the low contrast with the sky background. Recently a study led by the Instituto de Astrofísica de Canarias (IAC) set out to test the limit of observation which can be reached using the largest optical-infrared telescope in the world: the Gran Telescopio CANARIAS (GTC).

This image shows a weak halo composed of four thousand million stars around the galaxy UGC00180.

The observers managed to obtain an image 10 times deeper than any other obtained from the ground, revealing a faint halo of stars around the galaxy UGC00180, which is 500 million light-years away from us. With this measurement, recently published in The Astrophysical Journal, the existence of the stellar halos predicted by theoretical models is confirmed, and it has become possible to study low surface brightness phenomena.

The currently accepted model of galaxy formation predicts that galaxies have many stars in their outer zones, forming a stellar halo that is the result of the destruction of smaller galaxies. The problem is that these halos consist of very few stars spread over a very large volume. For example, in the Milky Way the halo holds only about 1% of the galaxy’s stars, distributed over a volume several times bigger than the rest of the Galaxy. This means that the surface brightness of galaxy halos is extremely low, and only a few of them have been studied, even in nearby galaxies. Because of this difficulty, scientists had doubted whether it was possible to observe halos farther away and obtain ultra-deep images, even though technological development has provided us with bigger and bigger telescopes capable of exploring the surface brightness of fainter and fainter galaxies.

For their recent experiment the observers used the GTC, which is at the Roque de los Muchachos Observatory in Garafía (La Palma, Canary Islands). They chose the galaxy UGC00180, which is quite similar to our neighbour, the Andromeda Galaxy, and to other galaxies for which they have references, and they used the OSIRIS camera on the GTC, whose field is big enough to cover a decent area of sky around the galaxy, in order to explore its possible halo. After 8.1 hours of exposure they could show that it does have a weak halo composed of four thousand million stars, about the same number as in the Magellanic Clouds, which are satellite galaxies of the Milky Way.

As well as beating the previous surface brightness limit by a factor of ten, this observation shows that it will be possible to explore the universe not only to the depth reachable with the conventional technique of star counts, but also out to distances where star counts cannot be used (UGC00180 is 200 times farther away than Andromeda).
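The two factors in this paragraph translate onto astronomers’ logarithmic magnitude scale with a short calculation: a brightness ratio r corresponds to a difference of 2.5·log₁₀(r) magnitudes, and the flux received from an individual star falls as the inverse square of its distance. The sketch uses only the factor of ten and the factor of 200 quoted above.

```python
import math


def ratio_to_magnitudes(brightness_ratio: float) -> float:
    """Convert a brightness ratio into a difference on the astronomical magnitude scale."""
    return 2.5 * math.log10(brightness_ratio)


# Beating the previous surface-brightness limit by a factor of ten:
print(f"depth gain: {ratio_to_magnitudes(10):.1f} mag")  # 2.5 mag

# A star 200 times farther away appears 200**2 = 40,000 times fainter,
# which is why resolving and counting individual halo stars breaks down.
flux_drop = 200 ** 2
print(f"single-star dimming: {ratio_to_magnitudes(flux_drop):.1f} mag")
```

To first approximation, surface brightness itself does not fade with distance at these relatively modest distances, because a more distant halo’s light is also concentrated into a proportionally smaller patch of sky. That is why measuring the integrated light of the halo can reach galaxies where counting individual stars cannot.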


Works in The Visible Spectrum, Sees Smaller Than a Wavelength of Light

June 10th, 2016

By Alton Parrish.

Curved lenses, like those in cameras or telescopes, are stacked in order to reduce distortions and resolve a clear image. That’s why high-power microscopes are so big and telephoto lenses so long.

While lens technology has come a long way, it is still difficult to make a compact and thin lens (rub a finger over the back of a cellphone and you’ll get a sense of how difficult). But what if you could replace those stacks with a single flat — or planar — lens?

Researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have demonstrated the first planar lens that works with high efficiency within the visible spectrum of light — covering the whole range of colors from red to blue. The lens can resolve nanoscale features separated by distances smaller than the wavelength of light. It uses an ultrathin array of tiny waveguides, known as a metasurface, which bends light as it passes through.

The research is described in the journal Science.

“This technology is potentially revolutionary because it works in the visible spectrum, which means it has the capacity to replace lenses in all kinds of devices, from microscopes to cameras, to displays and cell phones,” said Federico Capasso, Robert L. Wallace Professor of Applied Physics and Vinton Hayes Senior Research Fellow in Electrical Engineering and senior author of the paper. “In the near future, metalenses will be manufactured on a large scale at a small fraction of the cost of conventional lenses, using the foundries that mass produce microprocessors and memory chips.”

This is a schematic showing the ultra-thin meta-lens. The lens consists of titanium dioxide nanofins on a glass substrate. The meta-lens focuses incident light (entering from bottom and propagating upward) to a spot (yellow area) smaller than the incident wavelength.

“Correcting for chromatic spread over the visible spectrum in an efficient way, with a single flat optical element, was until now out of reach,” said Bernard Kress, Partner Optical Architect at Microsoft, who was not part of the research. “The Capasso group’s metalens developments enable the integration of broadband imaging systems in a very compact form, allowing for next generations of optical sub-systems addressing effectively stringent weight, size, power and cost issues, such as the ones required for high performance AR/VR wearable displays.”

In order to focus red, blue and green light — light in the visible spectrum — the team needed a material that wouldn’t absorb or scatter light, said Rob Devlin, a graduate student in the Capasso lab and co-author of the paper.

“We needed a material that would strongly confine light with a high refractive index,” he said. “And in order for this technology to be scalable, we needed a material already used in industry.”

The team used titanium dioxide, a ubiquitous material found in everything from paint to sunscreen, to create the nanoscale array of smooth, high-aspect-ratio nanostructures that form the heart of the metalens.

Scanning electron microscope micrograph of the fabricated meta-lens. The lens consists of titanium dioxide nanofins on a glass substrate. Scale bar: 2 µm

“We wanted to design a single planar lens with a high numerical aperture, meaning it can focus light into a spot smaller than the wavelength,” said Mohammadreza Khorasaninejad, a postdoctoral fellow in the Capasso lab and first author of the paper. “The more tightly you can focus light, the smaller your focal spot can be, which potentially enhances the resolution of the image.”

The team designed the array to resolve a structure smaller than a wavelength of light, around 400 nanometers across. At these scales, the metalens could provide better focus than a state-of-the-art commercial lens.
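The connection between numerical aperture (NA) and focal-spot size can be made concrete with the Abbe diffraction limit, d ≈ λ/(2·NA). The sketch below is a textbook estimate only; the NA values and the 532 nm green wavelength are illustrative assumptions, not figures reported in this article.

```python
def abbe_spot_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Approximate diffraction-limited focal spot size, d = lambda / (2 * NA)."""
    if not 0 < numerical_aperture <= 1:
        raise ValueError("NA in air must lie in (0, 1]")
    return wavelength_nm / (2 * numerical_aperture)


WAVELENGTH_NM = 532.0  # assumed green wavelength
for na in (0.3, 0.5, 0.8):  # illustrative numerical apertures
    d = abbe_spot_nm(WAVELENGTH_NM, na)
    print(f"NA={na}: spot ~{d:.0f} nm ({d / WAVELENGTH_NM:.2f} wavelengths)")
```

In this estimate the spot drops below one wavelength only once NA exceeds 0.5, which is why a high-NA design matters for sub-wavelength focusing.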

“Normal lenses have to be precisely polished by hand,” said Wei Ting Chen, coauthor and a postdoctoral fellow in the Capasso Lab. “Any kind of deviation in the curvature, any error during assembling makes the performance of the lens go way down. Our lens can be produced in a single step — one layer of lithography and you have a high performance lens, with everything where you need it to be.”

“The amazing field of metamaterials brought up lots of new ideas, but few real-life applications have come so far,” said Vladimir M. Shalaev, professor of electrical and computer engineering at Purdue University, who was not involved in the research. “The Capasso group with their technology-driven approach is making a difference in that regard. This new breakthrough solves one of the most basic and important challenges, making a visible-range meta-lens that satisfies the demands for high numerical aperture and high efficiency simultaneously, which is normally hard to achieve.”

One of the most exciting potential applications, said Khorasaninejad, is in wearable optics such as virtual reality and augmented reality.

This is a schematic showing the ultra-thin meta-lens. The lens consists of titanium dioxide nanofins on a glass substrate. The meta-lens focuses incident light (entering from bottom and propagating upward) to a spot (yellow area) smaller than the incident wavelength. The small meta-lens at the side (red) shows a different view of the meta-lens.

“Any good imaging system right now is heavy because the thick lenses have to be stacked on top of each other. No one wants to wear a heavy helmet for a couple of hours,” he said. “This technique reduces weight and volume and shrinks lenses thinner than a sheet of paper. Imagine the possibilities for wearable optics, flexible contact lenses or telescopes in space.”

The authors have filed patents and are actively pursuing commercial opportunities.

The paper was coauthored by Jaewon Oh and Alexander Zhu of SEAS. It was supported in part by a MURI grant from the Air Force Office of Scientific Research, Draper Laboratory and Thorlabs Inc.


Prototype Gravitational Wave Spacecraft Sets New Free Fall Record

June 10th, 2016

By Alton Parrish.

Hypothesized by Albert Einstein a century ago, gravitational waves are oscillations in the fabric of spacetime, moving at the speed of light and caused by the acceleration of massive objects.

They can be generated, for example, by supernovas, neutron star binaries spiralling around each other, and pairs of merging black holes.

Even from these powerful objects, however, the fluctuations in spacetime are tiny by the time they arrive at Earth – smaller than 1 part in 100 billion billion.

The successful LISA Pathfinder mission paves the way for LISA, the space-based gravitational wave observatory scheduled for launch in 2034.

At the heart of the experiment is a two-kilogram cube of a high-purity gold and platinum alloy, called a test mass. The cube is nestled inside the shell-like LISA Pathfinder spacecraft and has been in orbit since February 2016. The researchers found that the test mass can be kept stable and isolated from outside forces well enough for a space-based observatory using this technology to detect a whole new range of violent events that create gravitational waves.

The LISA Pathfinder spacecraft is equipped with electrodes adjacent to each side of the test mass cube to detect the relative position and orientation of the test mass with respect to the spacecraft. An array of tiny thrusters on the outside of the spacecraft compensates for forces on the spacecraft that could otherwise affect the test mass’s orbit, chiefly the pressure of sunlight (the solar photon flux).

The mission is a crucial test of systems that will be incorporated in three spacecraft that will comprise the Laser Interferometer Space Antenna (LISA) gravitational wave observatory scheduled to launch in 2034. The LISA observatory will follow a heliocentric orbit trailing fifty million kilometers behind the Earth. Each LISA spacecraft will contain two test masses like the one currently in the LISA Pathfinder spacecraft. The LISA Pathfinder mission’s success is a crucial step in developing the LISA observatory.

In the LISA observatory mission planned for 2034, laser interferometers will measure the distances between test masses housed in spacecraft flying in a triangular configuration roughly a million kilometers on a side. The LISA Pathfinder spacecraft contains a second test mass to form a minuscule equivalent of one leg of the triangular LISA formation. The second Pathfinder mass is electrostatically manipulated to maintain its position relative to the free-falling test mass. The masses are separated by only about a third of a meter, which is far too short for the detection of gravitational waves, but is vital for testing the systems that will eventually make up the LISA observatory.
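To see why the separation matters, one can combine two figures quoted in this post: a strain of "1 part in 100 billion billion" and LISA's arm length of "roughly a million kilometers". The sketch below is an order-of-magnitude estimate only; it assumes the change in separation is simply strain times length (geometric factors of order one are ignored) and is not how the mission actually models its sensitivity.

```python
# Order-of-magnitude estimate of the separation change a passing
# gravitational wave produces, using figures quoted in the article.
# Assumption: delta_L ~ h * L, ignoring factors of order one.

STRAIN = 1e-20           # "1 part in 100 billion billion" = 1 / 1e20
ARM_LENGTH_M = 1e9       # "roughly a million kilometers", in meters
PATHFINDER_SEP_M = 0.33  # "about a third of a meter" between Pathfinder masses


def length_change(strain: float, length_m: float) -> float:
    """Return the approximate change in separation, in meters."""
    return strain * length_m


lisa_dl = length_change(STRAIN, ARM_LENGTH_M)
pathfinder_dl = length_change(STRAIN, PATHFINDER_SEP_M)

print(f"LISA arm:        {lisa_dl:.1e} m")  # about 10 picometers
print(f"Pathfinder pair: {pathfinder_dl:.1e} m")
```

The million-kilometer arm turns the strain into a shift of roughly ten picometers, while the same strain across Pathfinder's third of a meter is some ten orders of magnitude smaller, which is why Pathfinder tests the hardware rather than looking for waves.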

Researchers report that the system reduces acceleration noise between the test masses to less than 0.54 × 10⁻¹⁵ g/√Hz over a frequency range of 0.7 mHz to 20 mHz. The noise in this range is five times lower than the LISA Pathfinder design threshold, and within a factor of 1.25 of the LISA observatory requirements. Above 60 mHz, acceleration noise is two orders of magnitude better than design requirements. According to the researchers, the measured performance of the Pathfinder mission systems would allow gravitational wave observations close to the original plan for the LISA Observatory.
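The noise figure above is quoted in units of Earth's gravity per root hertz. A minimal conversion to SI units, assuming the conventional value g = 9.80665 m/s², is a single multiplication; the per-root-hertz part of the unit carries through unchanged.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, the conventional value of g


def g_to_si(accel_in_g: float) -> float:
    """Convert an acceleration (or its amplitude spectral density) from g to m/s^2."""
    return accel_in_g * STANDARD_GRAVITY


noise_g = 0.54e-15            # quoted noise level, g/sqrt(Hz)
noise_si = g_to_si(noise_g)   # m s^-2 / sqrt(Hz)
noise_fm = noise_si / 1e-15   # femtometers per second squared per sqrt(Hz)

print(f"{noise_si:.2e} m s^-2 Hz^-1/2 (about {noise_fm:.1f} fm s^-2 Hz^-1/2)")
```

So the quoted level corresponds to roughly five femtometers per second squared per root hertz.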

ESA’s LISA Pathfinder mission has demonstrated the technology needed to build a space-based gravitational wave observatory.

Results from only two months of science operations show that the two cubes at the heart of the spacecraft are falling freely through space under the influence of gravity alone, unperturbed by other external forces, to a precision more than five times better than originally required.

In a paper published today in Physical Review Letters, the LISA Pathfinder team show that the test masses are almost motionless with respect to each other, with a relative acceleration lower than ten millionths of a billionth of Earth’s gravity.

Sophisticated technologies are needed to register such minuscule changes, and gravitational waves were directly detected for the first time only in September 2015 by the ground-based Laser Interferometer Gravitational-Wave Observatory (LIGO).

This experiment saw the characteristic signal of two black holes, each with some 30 times the mass of the Sun, spiralling towards one another in the final 0.3 seconds before they coalesced to form a single, more massive object.

The signals seen by LIGO have a frequency of around 100 Hz, but gravitational waves span a much broader spectrum. In particular, lower-frequency oscillations are produced by even more exotic events such as the mergers of supermassive black holes.

With masses of millions to billions of times that of the Sun, these giant black holes sit at the centres of massive galaxies. When two galaxies collide, these black holes eventually coalesce, releasing vast amounts of energy in the form of gravitational waves throughout the merger process, and peaking in the last few minutes.
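The inverse link between mass and frequency can be illustrated with a textbook estimate: the gravitational-wave frequency when a binary reaches the innermost stable circular orbit (ISCO) of a non-spinning black hole with the binary's total mass, f ≈ c³ / (6^(3/2) π G M). This is a rough proxy for where the inspiral signal peaks, not the analysis used by LIGO or planned for LISA; the constants are rounded standard values.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8       # speed of light, m/s
M_SUN = 1.989e30  # solar mass, kg


def isco_gw_frequency(total_mass_solar: float) -> float:
    """Approximate gravitational-wave frequency (Hz) at the innermost stable
    circular orbit of a non-spinning black hole of the given total mass:
    f = c^3 / (6^1.5 * pi * G * M). Twice the orbital frequency."""
    m = total_mass_solar * M_SUN
    return C**3 / (6**1.5 * math.pi * G * m)


# Two ~30-solar-mass black holes (LIGO-like), then supermassive pairs.
for mass in (60, 1e6, 1e9):
    print(f"{mass:>10.0e} solar masses -> {isco_gw_frequency(mass):.1e} Hz")
```

A 60-solar-mass pair lands near the ~100 Hz range LIGO observed, whereas a million-solar-mass pair comes out at a few millihertz, inside the 0.7 mHz to 20 mHz band in which LISA Pathfinder's noise was measured.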


Great Apes Communicate Cooperatively

June 9th, 2016

By Alton Parrish.

Gestural communication in bonobos and chimpanzees shows turn-taking and clearly distinguishable communication styles

Human language is a fundamentally cooperative enterprise, embodying fast-paced interactions. It has been suggested that it evolved as part of a larger adaptation of humans’ unique forms of cooperation. In a cross-species comparison of bonobos and chimpanzees, scientists from the Humboldt Research Group of the Max Planck Institute for Ornithology in Seewiesen have now shown that the communicative exchanges of our closest living relatives, the great apes, resemble cooperative turn-taking sequences in human conversation.

Human communication is one of the most sophisticated signalling systems, being highly cooperative and including fast interactions. The first step into this collective endeavour can already be observed in early infancy, well before children speak their first words, when they start to engage in turn-taking interactions that use gestures to communicate with other individuals. One of the predominant theories of language evolution therefore suggests that the first fundamental steps towards human communication were taken through gestures alone.

The results showed that communicative exchanges in both species resemble cooperative turn-taking sequences in human conversation. However, bonobos and chimpanzees differ in their communication styles. “For bonobos, gaze plays a more important role and they seem to anticipate signals before they have been fully articulated,” says Marlen Froehlich, first author of the study.

In chimps a single gesture can have different meanings depending on the context. In this case, the outstretched arm of the mother means: “Come to me!”

In contrast, chimpanzees engage in more time-consuming communicative negotiations and use clearly recognizable units such as signal, pause and response. Bonobos may therefore be the most representative model for understanding the prerequisites of human communication.

“Communicative interactions of great apes thus show the hallmarks of human social action during conversation and suggest that cooperative communication arose as a way of coordinating collaborative activities more efficiently,” says Simone Pika, head of the study.

Just like in humans, chimp mothers play a crucial role in the development of social skills in their offspring.


The Secret Life of the Orion Nebula

June 9th, 2016

By Alton Parrish.

The interplay of magnetic fields and gravitation in the gas cloud leads to the birth of new stars

Space bears witness to a constant stream of star births. And whole star clusters are often formed at the same time – and within a comparatively short period. Amelia Stutz and Andrew Gould from the Max Planck Institute for Astronomy in Heidelberg have proposed a new mechanism to explain this quick formation. The researchers have investigated a filament of gas and dust which also includes the well-known Orion nebula.

Star formation is basically a simple process: You take a very cold cloud consisting of hydrogen gas and a sprinkling of dust and leave the system to get on with it. Then, within the space of a few million years, the sufficiently cold regions will collapse under their own gravity and form new stars.

Reality is a bit more complicated. A particular feature is that there seem to be two types of star formation. In conventional, smaller molecular clouds, only one or a few stars form – until the gas has dispersed over a period of three million years or so. Larger clouds survive around ten times longer. Whole star clusters are born simultaneously in these clouds and very massive suns are formed.

Why is it that so many stars are created during these approximately 30 million years? In astronomical terms, this period is quite short. Most attempts at an explanation are based on a kind of chain reaction in which the formation of the first stars in the cloud triggers the formation of further stars. Supernova explosions of the most massive (and therefore shortest-lived) stars which have just formed could be one explanation, as their shock waves compress the cloud material and thus create the seeds for new stars.

Amelia Stutz and Andrew Gould from the Max Planck Institute for Astronomy in Heidelberg are pursuing a different approach and bringing gravity and magnetic fields into play. To test their idea, they undertook a detailed investigation of the Orion nebula, 1,300 light-years away. The bright red gas cloud with the complex pattern is one of the best-known celestial objects.

The starting point for Stutz and Gould’s considerations is a set of maps of the mass distribution in a structure known as an “integral-shaped filament” – so called because it resembles a curved integral sign – which includes the Orion nebula in its central section. The Heidelberg-based researchers also drew on studies of the magnetic fields in and around this object.

The data show that magnetic fields and gravitation have approximately the same effect on the filament. Taking this as their basis, the two astronomers developed a scenario in which the filament is a flexible structure undulating to and fro. The usual models of star formation, on the other hand, are based on gas clouds which collapse under their own gravitation.

Important evidence for the new idea is the distribution of protostars and infant suns in and around the filament. Protostars are the precursors of suns: they contract further until their cores reach densities and temperatures high enough for nuclear fusion reactions to start in earnest. This is the point at which a star is born.

Protostars are light enough to be dragged along when the filament undulates backwards and forwards. Infant stars, in contrast, are much more compact and are simply left behind by the filament or launched into the surrounding space as if fired from a slingshot. The model can thus explain what the observation data actually show: protostars are to be found only along the dense spine of the filament; infant stars, on the other hand, are found mainly outside the filament.

This scenario points to a possible new mechanism that could explain the formation of whole star clusters on short timescales (in astronomical terms). The observed positions of the star clusters suggest that the integral-shaped filament originally extended much further towards the north than it does today. Over millions of years, one star cluster after another seems to have formed, starting from the north. And each finished star cluster has scattered the gas-dust mixture surrounding it as time has passed.

This is why we now see three star clusters in and around the filament: the oldest cluster is furthest away from the northern tip of the filament; the second one is closer and is still surrounded by filament remnants; the third one, in the centre of the integral-shaped filament, is just in the process of growing.

The interaction of magnetic fields and gravity allows certain types of instabilities, some of which are familiar from plasma physics, and which could lead to the formation of one star cluster after another. This hypothesis is based on observational data for the integral-shaped filament. It is not a mature model for a new mode of star formation, however. Theoreticians first have to carry out appropriate simulations, and astronomers have to make further observations.

Only when this preparatory work is complete will it be clear whether the molecular cloud in Orion represents a special case, or whether the birth of star clusters in a medley of magnetically trapped filaments is the usual route to forming whole clusters of new stars in space within a short period.


Where Are The Milky Way’s Missing Red Giants?

June 8th, 2016

By Alton Parrish.

New computer simulations from the Georgia Institute of Technology provide a conclusive test for a hypothesis of why the center of the Milky Way appears to be filled with young stars but has very few old ones. According to the theory, the remnants of older, red giant stars are still there — they just aren’t bright enough to be detected with telescopes.

The Georgia Tech simulations investigate the possibility that these red giants were dimmed after they were stripped of tens of percent of their mass millions of years ago during repeated collisions with an accretion disk at the galactic center. The very existence of the young stars, seen in astronomical observations today, is an indication that such a gaseous accretion disk was present in the galactic center, because the young stars are thought to have formed from it as recently as a few million years ago.

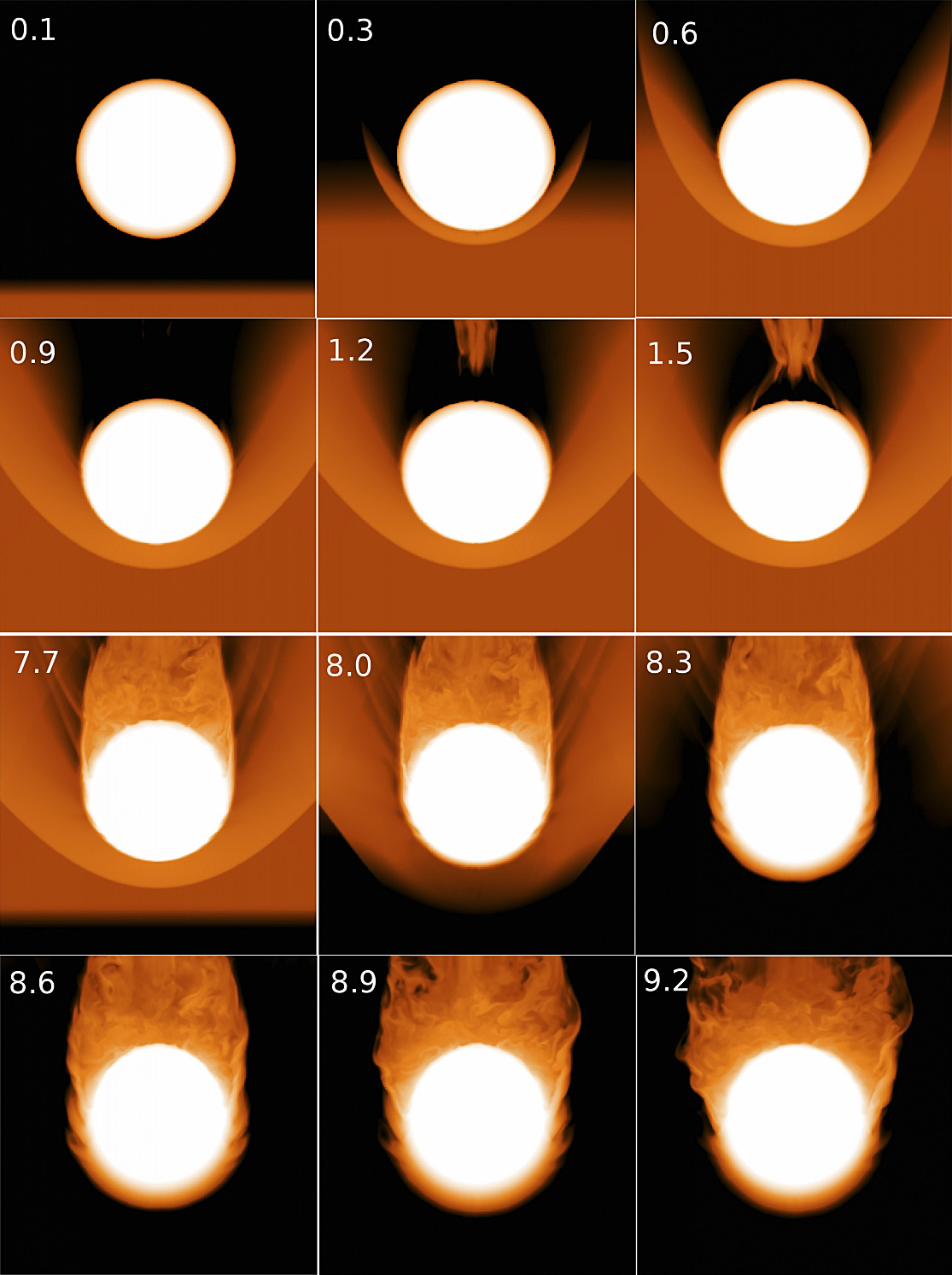

A sequence of snapshots from a simulation showing a red giant star tunneling through a high density gas clump. The star is moving downward in the illustration, as indicated by the bow-shaped “onion skin” surfaces of constant density. Soon after the star plunges into the clump, it develops a high temperature “blister” at the point of impact and a fully turbulent wake behind it.

The study is published in the June edition of The Astrophysical Journal. It is the first to run computer simulations on the theory, which was introduced in 2014.

Astrophysicists in Georgia Tech’s College of Sciences created models of red giants similar to those that are supposedly missing from the galactic center: stars that are more than a billion years old and tens of times larger than the Sun. They put them through a computerized version of a wind tunnel to simulate collisions with the gaseous disk that once occupied much of the space within 0.5 parsecs of the galactic center. They varied orbital velocities and the disk’s density to find the conditions required to cause significant damage to the red giant stars.


“Red giants could have lost a significant portion of their mass only if the disk was very massive and dense,” said Tamara Bogdanovic, the Georgia Tech assistant professor who co-led the study. “So dense, that gravity would have already fragmented the disk on its own, helping to form massive clumps that became the building blocks of a new generation of stars.”

The simulations suggest that each of the red giant stars orbited its way into and through the disk as many as dozens of times, sometimes taking as long as days to weeks to complete a single pass-through. Mass was stripped away with each collision as the star blistered the fragmenting disk’s surface.

According to former Georgia Tech undergraduate student Thomas Forrest Kieffer, the first author on the paper, it’s a process that would have taken place 4 to 8 million years ago, which is the same age as the young stars seen in the center of the Milky Way today.

A sequence of snapshots based on a simulation of a red giant entering and exiting a clump in a fragmenting accretion disk. In this case, it takes four days for the star to travel through the clump (each 0.1 unit of time is approximately one hour).

“The only way for this scenario to take place within that relatively short time frame,” Kieffer said, “was if, back then, the disk that fragmented had a much larger mass than all the young stars that eventually formed from it — at least 100 to 1,000 times more mass.”

The impacts also likely lowered the kinetic energy of the red giant stars by at least 20 to 30 percent, shrinking their orbits and pulling them closer to the Milky Way’s black hole. At the same time, the collisions may have torqued the surface and spun up the red giants, which are otherwise known to rotate relatively slowly in isolation.
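Why does losing kinetic energy shrink an orbit? The vis-viva equation, v² = GM(2/r − 1/a), gives a quick answer. The sketch below assumes, as a simplification of our own rather than the paper's model, that the star starts on a circular orbit of radius r around a point mass (the black hole), and loses a fraction f of its kinetic energy instantaneously. The new semi-major axis is then a = r / (1 + f).

```python
def semi_major_axis_ratio(ke_loss_fraction: float) -> float:
    """Return a_new / r for a star on a circular orbit of radius r around a
    point mass that suddenly loses a fraction f of its kinetic energy.

    Simplifying assumptions: point-mass potential, initially circular orbit,
    impulsive energy loss. Uses vis-viva: v^2 = GM (2/r - 1/a).
    """
    if not 0.0 <= ke_loss_fraction < 1.0:
        raise ValueError("fraction must be in [0, 1)")
    # On a circular orbit v^2 = GM/r; losing a fraction f of the kinetic
    # energy leaves v^2 = (1 - f) GM/r, expressed here in units of GM/r.
    new_v2 = 1.0 - ke_loss_fraction
    # Rearranged vis-viva: r/a = 2 - v^2 r / (GM)
    return 1.0 / (2.0 - new_v2)


for f in (0.20, 0.25, 0.30):
    print(f"{f:.0%} kinetic-energy loss -> a = {semi_major_axis_ratio(f):.3f} r")
```

Under these assumptions, the 20 to 30 percent losses quoted above shrink the semi-major axis to roughly 0.77 to 0.83 of its original value.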

“We don’t know very much about the conditions that led to the most recent episode of star formation in the galactic center or whether this region of the galaxy could have contained so much gas,” Bogdanovic said. “If it did, we expect that it would presently house under-luminous red giants with smaller orbits, spinning more rapidly than expected. If such population of red giants is observed, among a small number that are still above the detection threshold, it would provide direct support for the star-disk collision hypothesis and allow us to learn more about the origins of the Milky Way.”


Hot New Aluminum Alloys Can Jump-Start U.S. Rare Earth Production

June 7th, 2016

By Alton Parrish.

Researchers at the Department of Energy’s Oak Ridge National Laboratory and partners Lawrence Livermore National Laboratory and Wisconsin-based Eck Industries have developed aluminum alloys that are both easier to work with and more heat tolerant than existing products.

What may be more important, however, is that the alloys—which contain cerium—have the potential to jump-start the United States’ production of rare earth elements.

Aluminum–cerium-magnesium alloy engine head

ORNL scientists Zach Sims, Michael McGuire and Orlando Rios, along with colleagues from Eck, LLNL and Ames Laboratory in Iowa, discuss the technical and economic possibilities for aluminum–cerium alloys in an article in JOM, a publication of the Minerals, Metals & Materials Society.

The team is working as part of the Critical Materials Institute, an Energy Innovation Hub created by the U.S. Department of Energy (DOE) and managed out of DOE’s Advanced Manufacturing Office. Based at Ames, the institute works to increase the availability of rare earth metals and other materials critical for U.S. energy security.

Rare earths are a group of elements critical to electronics, alternative energy and other modern technologies. Modern wind turbines and hybrid autos, for example, rely on strong permanent magnets made with the rare earth elements neodymium and dysprosium. Yet there is currently no rare earth production in North America.

One problem is that cerium accounts for up to half of the rare earth content of many rare earth ores, including those in the United States, and it has been difficult for rare earth producers to find a market for all of the cerium mined. The United States’ most common rare earth ore, in fact, contains three times more cerium than neodymium and 500 times more cerium than dysprosium.

Aluminum–cerium alloys promise to boost domestic rare earth mining by increasing the demand and, eventually, the value of cerium.

Green sand mold of an aerospace engine head. Molten metal is poured in and allowed to cool.

“We have these rare earths that we need for energy technologies,” said Rios, “but when you go to extract rare earths, the majority is cerium and lanthanum, which have limited large-volume uses.”

If, for example, the new alloys find a place in internal combustion engines, they could quickly transform cerium from an inconvenient byproduct of rare earth mining to a valuable product in itself.

“The aluminum industry is huge,” Rios explained. “A lot of aluminum is used in the auto industry, so even a very small implementation into that market would use an enormous amount of cerium.” A 1 percent penetration into the market for aluminum alloys would translate to 3,000 tons of cerium, he added.

Rios said components made with aluminum-cerium alloys offer several advantages over those made from existing aluminum alloys, including low cost, high castability, reduced heat-treatment requirements and exceptional high-temperature stability.

“Most alloys with exceptional properties are more difficult to cast,” said David Weiss, vice president for engineering and research and development at Eck Industries, “but the aluminum-cerium system has equivalent casting characteristics to the aluminum-silicon alloys.”

The key to the alloys’ high-temperature performance is a specific aluminum-cerium compound, or intermetallic, which forms inside the alloys as they are melted and cast. This intermetallic melts only at temperatures above 2,000 degrees Fahrenheit.

That heat tolerance makes aluminum–cerium alloys very attractive for use in internal combustion engines, Rios noted. Tests have shown the new alloys to be stable at 300 degrees Celsius (572 degrees Fahrenheit), a temperature that would cause traditional alloys to begin disintegrating. In addition, the stability of this intermetallic sometimes eliminates the need for the heat treatments typically required for aluminum alloys.
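This post mixes Fahrenheit and Celsius. A small helper makes the figures easy to compare on one scale; the three inputs are the temperatures quoted in this post (the intermetallic's melting threshold, the stability test, and the engine exhaust test).

```python
def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def f_to_c(fahrenheit: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


print(c_to_f(300))          # alloy stability test temperature, in F
print(round(f_to_c(2000)))  # intermetallic melting threshold, in C
print(c_to_f(600))          # engine-test exhaust temperature, in F
```

The intermetallic's 2,000 °F threshold is therefore roughly 1,090 °C, well above both the 300 °C stability test and the 600 °C exhaust temperature.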

Alloyed metals being poured from a furnace into a ladle, to be used to fill molds

Not only would aluminum-cerium alloys allow engines to increase fuel efficiency directly by running hotter, they may also increase fuel efficiency indirectly, by paving the way for lighter engines that use small aluminum-based components or use aluminum alloys to replace cast iron components such as cylinder blocks, transmission cases and cylinder heads.

The team has already cast prototype aircraft cylinder heads in conventional sand molds. The team also cast a fully functional cylinder head for a fossil fuel-powered electric generator in 3D-printed sand molds. This first-of-a-kind demonstration led to a successful engine test performed at ORNL’s National Transportation Research Center. The engine was shown to handle exhaust temperatures of over 600 degrees Celsius.

“Three-dimensional printed molds are typically very hard to fill,” said ORNL physicist Zachary Sims, “but aluminum–cerium alloys can completely fill the mold thanks to their exceptional castability.”

The alloys were jointly invented by researchers at ORNL and Eck Industries. Colleagues at Eck Industries contributed expertise in aluminum casting, and LLNL researchers analyzed the aluminum-cerium castings using synchrotron source X-ray computed tomography.


Asteroseismology Listens to the Relics of the Milky Way: Sounds from the Oldest Stars in Our Galaxy

June 7th, 2016

By Alton Parrish.

Astrophysicists from the University of Birmingham have captured the sounds of some of the oldest stars in our galaxy, the Milky Way, according to research published today in Monthly Notices of the Royal Astronomical Society.

The research team, from the University of Birmingham’s School of Physics and Astronomy, has reported the detection of resonant acoustic oscillations of stars in ‘M4’, one of the oldest known clusters of stars in the Galaxy, some 13 billion years old.

M4 is relatively close to us, lying 7,200 light-years away, making it a prime object for study. It contains several tens of thousands of stars and is noteworthy for being home to many white dwarfs: the cores of ancient, dying stars whose outer layers have drifted away into space.

Using data from the NASA Kepler/K2 mission, the team has studied the resonant oscillations of stars using a technique called asteroseismology. These oscillations lead to minuscule changes or pulses in brightness, and are caused by sound trapped inside the stars. By measuring the tones in this ‘stellar music’, it is possible to determine the mass and age of individual stars.
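How do "tones" give a mass? A widely used shortcut is the solar-calibrated asteroseismic scaling relations, which combine the frequency of maximum oscillation power (ν_max), the spacing between overtones (Δν) and the effective temperature. The sketch below implements those standard relations with commonly used solar reference values; it is not necessarily the M4 team's exact method, and the red-giant inputs in the example are hypothetical. For low-mass red giants, the age then follows largely from the mass via stellar evolution models, which this sketch does not include.

```python
# Solar-calibrated asteroseismic scaling relations (a standard approximation).
NU_MAX_SUN = 3090.0   # frequency of maximum power for the Sun, microhertz
DELTA_NU_SUN = 135.1  # solar large frequency separation, microhertz
TEFF_SUN = 5777.0     # solar effective temperature, kelvin


def scaling_mass_radius(nu_max: float, delta_nu: float, teff: float):
    """Return (mass, radius) in solar units from nu_max and delta_nu (microhertz)
    and effective temperature (kelvin):
        M ~ (nu_max)^3 (delta_nu)^-4 (Teff)^1.5
        R ~ (nu_max)   (delta_nu)^-2 (Teff)^0.5
    each ratio taken relative to the solar value."""
    x = nu_max / NU_MAX_SUN
    y = delta_nu / DELTA_NU_SUN
    t = teff / TEFF_SUN
    mass = x**3 * y**-4 * t**1.5
    radius = x * y**-2 * t**0.5
    return mass, radius


# Hypothetical red giant (illustrative numbers, not data from the study).
example_mass, example_radius = scaling_mass_radius(30.0, 3.8, 4800.0)
print(f"M = {example_mass:.2f} M_sun, R = {example_radius:.1f} R_sun")
```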

This discovery opens the door to using asteroseismology to study the very early history of our Galaxy.

Dr Andrea Miglio, from the University of Birmingham’s School of Physics and Astronomy, who led the study, said: ‘We were thrilled to be able to listen to some of the stellar relics of the early universe. The stars we have studied really are living fossils from the time of the formation of our Galaxy, and we now hope to be able to unlock the secrets of how spiral galaxies, like our own, formed and evolved.’

Dr Guy Davies, from the University of Birmingham’s School of Physics and Astronomy, and co-author on the study, said: ‘The age scale of stars has so far been restricted to relatively young stars, limiting our ability to probe the early history of our Galaxy. In this research we have been able to prove that asteroseismology can give precise and accurate ages for the oldest stars in the Galaxy.’

M4: The Closest Known Globular Cluster

Professor Bill Chaplin, from the University of Birmingham’s School of Physics and Astronomy and leader of the international collaboration on asteroseismology, said: ‘Just as archaeologists can reveal the past by excavating the earth, so we can use sound inside the stars to perform Galactic archaeology.’


Polymer Opals For Smart Clothing, Buildings and Security

June 6th, 2016

By Alton Parrish.

The team, led by the University of Cambridge, have invented a way to make flexible, color-changing polymer sheets on industrial scales, opening up applications ranging from smart clothing for people or buildings to banknote security.

Using a new method called Bend-Induced-Oscillatory-Shearing (BIOS), the researchers are now able to produce hundreds of meters of these materials, known as ‘polymer opals’, in a roll-to-roll process. The results are reported in the journal Nature Communications.

Some of the brightest colors in nature can be found in opal gemstones, butterfly wings and beetles. These materials get their color not from dyes or pigments, but from the systematically ordered microstructures they contain.

Researchers at the University of Cambridge have devised a method to produce “Polymer Opals” on an industrial scale.

The team behind the current research, based at Cambridge’s Cavendish Laboratory, have been working on methods of artificially recreating this ‘structural color’ for several years, but to date, it has been difficult to make these materials using techniques that are cheap enough to allow their widespread use.

In order to make the polymer opals, the team starts by growing vats of transparent plastic nano-spheres. Each tiny sphere is solid in the middle but sticky on the outside. The spheres are then dried out into a congealed mass. When sheets containing a sandwich of these spheres are bent around successive rollers, the balls are magically forced into perfectly arranged stacks, by which stage they have intense color.

By changing the sizes of the starting nano-spheres, different colors (or wavelengths) of light are reflected. And since the material has a rubber-like consistency, when it is twisted and stretched, the spacing between the spheres changes, causing the material to change color. When stretched, the material shifts into the blue range of the spectrum, and when compressed, the color shifts towards red. When released, the material returns to its original color. Such chameleon materials could find their way into color-changing wallpapers, or building coatings that reflect away infrared thermal radiation.
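The link between sphere size, spacing and color can be sketched with the textbook Bragg condition for a face-centred-cubic stack of spheres at normal incidence: λ ≈ 2 · d₁₁₁ · n_eff, with layer spacing d₁₁₁ = D·√(2/3) for sphere diameter D. This is a simplified model, not the paper's analysis; the diameter, the effective refractive index, and the 5 % spacing change used to mimic stretching are all hypothetical values. The sketch assumes stretching happens in the plane of the sheet, which thins it and so shrinks the layer spacing normal to the surface.

```python
import math


def bragg_peak_nm(sphere_diameter_nm: float, n_eff: float) -> float:
    """Normal-incidence reflection peak (nm) of the (111) planes of a
    face-centred-cubic stack of spheres: lambda = 2 * d111 * n_eff,
    where d111 = D * sqrt(2/3) for touching spheres of diameter D."""
    d111 = sphere_diameter_nm * math.sqrt(2.0 / 3.0)
    return 2.0 * d111 * n_eff


D_NM = 220.0   # hypothetical sphere diameter, nm
N_EFF = 1.5    # hypothetical effective refractive index of the composite

relaxed = bragg_peak_nm(D_NM, N_EFF)
# Hypothetical in-plane stretch: model it as a 5 % smaller layer spacing.
stretched = bragg_peak_nm(D_NM * 0.95, N_EFF)

print(f"relaxed: {relaxed:.0f} nm, stretched: {stretched:.0f} nm")
```

With these illustrative inputs the relaxed sheet reflects green light and the stretched sheet shifts toward blue, matching the direction of the color change described above; larger spheres would push the peak toward red.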

“Finding a way to coax objects a billionth of a meter across into perfect formation over kilometer scales is a miracle,” said Professor Jeremy Baumberg, the paper’s senior author. “But spheres are only the first step, as it should be applicable to more complex architectures on tiny scales.”

In order to make polymer opals in large quantities, the team first needed to understand their internal structure so that it could be replicated. Using a variety of techniques, including electron microscopy, x-ray scattering, rheology and optical spectroscopy, the researchers were able to see the three-dimensional position of the spheres within the material, measure how the spheres slide past each other, and how the colors change.

“It’s wonderful to finally understand the secrets of these attractive films,” said PhD student Qibin Zhao, the paper’s lead author.

Cambridge Enterprise, the University’s commercialization arm which is helping to commercialize the material, has been contacted by more than 100 companies interested in using polymer opals, and a new spin-out, Phomera Technologies, has been founded. Phomera will look at ways of scaling up production of polymer opals, as well as selling the material to potential buyers. Possible applications the company is considering include coatings for buildings to reflect heat, smart clothing and footwear, and banknote security and packaging.

The research is funded as part of a UK Engineering and Physical Sciences Research Council (EPSRC) investment in the Cambridge NanoPhotonics Centre, as well as the European Research Council (ERC).


Cometary Belt around Distant Multi-planet System Hints at Hidden or Wandering Planets

June 6th, 2016

By Alton Parrish.

Astronomers using the Atacama Large Millimeter/submillimeter Array (ALMA) radio observatory in Chile have made the first high-resolution image of the belt of comets (a region analogous to the Kuiper belt in our own Solar System, where Pluto and many smaller objects are found) around HR 8799, the only star where multiple planets have been imaged directly.

The shape of this dusty disk, particularly its inner edge, is surprisingly inconsistent with the orbits of the planets, suggesting that either they changed position over time or there is at least one more planet in the system yet to be discovered. The astronomers reported their results in Monthly Notices of the Royal Astronomical Society in May.

“These data really allow us to see the inner edge of this disk for the first time,” explains Mark Booth from Pontificia Universidad Católica de Chile and lead author of the study. “By studying the interactions between the planets and the disk, this new observation shows that either the planets that we see have had different orbits in the past or there is at least one more planet in the system that is too small to have been detected.”

The disk, which fills a region 150 to 420 times the Sun-Earth distance, is produced by the ongoing collisions of cometary bodies in the outer reaches of this star system. ALMA was able to image the emission from millimetre-size pieces of debris in the disk; according to the researchers, the small size of these dust grains suggests that the planets in the system are larger than Jupiter. Previous observations with other telescopes did not detect this discrepancy in the disk.

It is not clear if this difference is due to the low resolution of the previous observations or because different wavelengths are sensitive to different grain sizes, which would be distributed slightly differently. HR 8799 is a young star approximately 1.5 times the mass of the Sun located 129 light-years from Earth in the direction of the constellation Pegasus.

“This is the very first time that a multi-planet system with orbiting dust has been imaged, allowing for direct comparison with the formation and dynamics of our own Solar System,” explains Antonio Hales, co-author of the study from the National Radio Astronomy Observatory in Charlottesville, Virginia, in the United States.


Six weighty facts about gravity

June 4th, 2016

By Alton Parrish.

Gravity: we barely ever think about it, at least until we slip on ice or stumble on the stairs. To many ancient thinkers, gravity wasn’t even a force–it was just the natural tendency of objects to sink toward the center of Earth, while planets were subject to other, unrelated laws.

Of course, we now know that gravity does far more than make things fall down. It governs the motion of planets around the Sun, holds galaxies together and determines the structure of the universe itself. We also recognize that gravity is one of the four fundamental forces of nature, along with electromagnetism, the weak force and the strong force.

The modern theory of gravity–Einstein’s general theory of relativity–is one of the most successful theories we have. At the same time, we still don’t know everything about gravity, including the exact way it fits in with the other fundamental forces. But here are six weighty facts we do know about gravity.

1. Gravity is by far the weakest force we know.

Gravity only attracts–there’s no negative version of the force to push things apart. And while gravity is powerful enough to hold galaxies together, it is so weak that you overcome it every day. If you pick up a book, you’re counteracting the force of gravity from all of Earth.

For comparison, the electric force between an electron and a proton inside an atom is roughly a thousand trillion trillion trillion (that’s a one with 39 zeroes after it) times stronger than the gravitational attraction between them. In fact, gravity is so weak that we don’t know exactly how weak it is: Newton’s gravitational constant is measured far less precisely than the other fundamental constants.
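The electron–proton comparison is easy to check. Both forces fall off as the inverse square of the separation, so the distance cancels and the ratio depends only on the charges, masses and the two force constants. The sketch uses rounded standard values of the constants.

```python
K_E = 8.988e9           # Coulomb constant, N m^2 C^-2
G = 6.674e-11           # gravitational constant, N m^2 kg^-2
E_CHARGE = 1.602e-19    # elementary charge, C
M_ELECTRON = 9.109e-31  # electron mass, kg
M_PROTON = 1.673e-27    # proton mass, kg

# F_electric / F_gravity = (k e^2 / r^2) / (G m_e m_p / r^2); r cancels.
ratio = (K_E * E_CHARGE**2) / (G * M_ELECTRON * M_PROTON)
print(f"{ratio:.1e}")  # roughly 2e39
```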

2. Gravity and weight are not the same thing.

Astronauts on the space station float, and sometimes we lazily say they are in zero gravity. But that’s not true. The force of gravity on an astronaut is about 90 percent of the force they would experience on Earth. However, astronauts are weightless, because weight is the force that the ground (or a chair or a bed or whatever) exerts back on you, and in orbit nothing is pushing back on them.
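The 90 percent figure follows from Newton’s inverse-square law. This sketch assumes a spherical Earth with a mean radius of 6,371 km and an orbital altitude of about 400 km, a typical height for the space station. Both numbers are our own illustrative assumptions.

```python
# Fraction of surface gravity felt at a given altitude, from Newton's
# inverse-square law: g(h) = g0 * (R / (R + h))^2.
EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius, spherical Earth


def gravity_fraction(altitude_km: float) -> float:
    """Return g(altitude) / g(surface)."""
    return (EARTH_RADIUS_KM / (EARTH_RADIUS_KM + altitude_km)) ** 2


# Assumed space-station altitude of ~400 km (the real orbit drifts).
frac = gravity_fraction(400.0)
print(f"gravity in orbit: {frac:.0%} of surface gravity")
```

At 400 km, this gives roughly 89 percent. The station’s altitude varies, so treat the result as a ballpark figure.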

Take a bathroom scale onto an elevator in a big fancy hotel and stand on it while riding up and down, ignoring any skeptical looks you might receive. Your weight fluctuates, and you feel the elevator accelerating and decelerating, yet the gravitational force is the same. In orbit, on the other hand, astronauts move along with the space station. There is nothing to push them against the side of the spaceship to make weight. Einstein turned this idea, along with his special theory of relativity, into general relativity.
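Newton’s second law puts numbers on the elevator experiment. The scale pushes you up with a force N and gravity pulls you down with mg. Their difference accelerates you, so N = m(g + a). The rider’s 70 kg mass and the 1 m/s² accelerations below are made-up values, chosen only for illustration.

```python
G_SURFACE = 9.81  # m/s^2, standard surface gravity (rounded)


def scale_reading_newtons(mass_kg: float, accel_up: float) -> float:
    """Force the scale exerts when the elevator accelerates upward at accel_up (m/s^2).

    Newton's second law for the rider: N - m*g = m*a, so N = m*(g + a).
    A negative accel_up means the acceleration points downward.
    """
    return mass_kg * (G_SURFACE + accel_up)


mass = 70.0  # hypothetical rider, kg
cases = [("at rest", 0.0), ("speeding up going up", 1.0),
         ("speeding up going down", -1.0), ("free fall", -G_SURFACE)]
for label, a in cases:
    n = scale_reading_newtons(mass, a)
    # A bathroom scale displays "kilograms", i.e. the force divided by g.
    print(f"{label:>24}: {n:7.1f} N  (scale shows {n / G_SURFACE:5.1f} kg)")
```

Only the scale reading changes; the gravitational pull, m × g, is the same on every line. In the free-fall case, the reading drops to zero, which is exactly the astronauts’ situation.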

3. Gravity makes waves that move at light speed.

General relativity predicts gravitational waves. If you have two stars or white dwarfs or black holes locked in mutual orbit, they slowly get closer as gravitational waves carry energy away. In fact, Earth also emits gravitational waves as it orbits the sun, but the energy loss is too tiny to notice.
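How tiny is Earth’s energy loss? For two masses in a circular orbit, the standard quadrupole formula of general relativity gives the power radiated as gravitational waves: P = (32/5) G⁴ (m₁m₂)² (m₁ + m₂) / (c⁵ r⁵). This sketch plugs in rounded values for the Sun, the Earth and their mean separation. It treats the orbit as perfectly circular, which is an approximation.

```python
# Gravitational-wave power of a circular two-body orbit (quadrupole formula).
# All inputs are rounded values; the Earth's orbit is treated as a circle.
G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8         # speed of light, m/s
M_SUN = 1.989e30    # kg
M_EARTH = 5.972e24  # kg
AU = 1.496e11       # mean Earth-Sun distance, m


def gw_power_circular(m1: float, m2: float, r: float) -> float:
    """Power (W) radiated in gravitational waves by masses m1, m2 at separation r."""
    return (32.0 / 5.0) * G**4 * (m1 * m2) ** 2 * (m1 + m2) / (C**5 * r**5)


p_earth = gw_power_circular(M_SUN, M_EARTH, AU)
print(f"Earth-Sun gravitational-wave power: {p_earth:.0f} W")
```

The answer is roughly 200 watts, about what a few light bulbs use. The Sun, by comparison, radiates about 4 × 10^26 watts as light.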

We’ve had indirect evidence for gravitational waves for 40 years, but the Laser Interferometer Gravitational-wave Observatory (LIGO) only confirmed the phenomenon this year. The detectors picked up a burst of gravitational waves produced by the collision of two black holes more than a billion light-years away.

One consequence of relativity is that nothing can travel faster than the speed of light in vacuum. That goes for gravity, too: If something drastic happened to the sun, the gravitational effect would reach us at the same time as the light from the event.
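As a quick illustration, the delay for both light and gravity from the Sun is simply the Earth-Sun distance divided by the speed of light. The distance used here is the rounded mean value. That is an approximation, because Earth’s orbit is slightly elliptical.

```python
C = 299_792_458.0   # speed of light in vacuum, m/s (exact by definition)
AU = 1.496e11       # mean Earth-Sun distance, m (rounded)

# Light and gravitational effects both travel at c, so they share this delay.
delay_s = AU / C
print(f"delay: {delay_s:.0f} s = {delay_s / 60:.1f} min")
```

That works out to about 8.3 minutes. If the Sun vanished, Earth would keep circling the empty spot for roughly eight minutes before feeling any change.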

4. Explaining the microscopic behavior of gravity has thrown researchers for a loop.

At the smallest scales, the other three fundamental forces of nature are described by quantum theories, specifically the Standard Model. However, we still don’t have a fully working quantum theory of gravity, though researchers are trying.

One avenue of research is called loop quantum gravity, which uses techniques from quantum physics to describe the structure of space-time. It proposes that space-time is particle-like on the tiniest scales, the same way matter is made of particles. Matter would be restricted to hopping from one point to another on a flexible, mesh-like structure. This allows loop quantum gravity to describe the effect of gravity on a scale far smaller than the nucleus of an atom.

A more famous approach is string theory, where particles–including gravitons–are considered to be vibrations of tiny strings, in a space-time whose extra dimensions are coiled up too small for experiments to reach. Neither loop quantum gravity nor string theory, nor any other theory, is currently able to provide testable details about the microscopic behavior of gravity.

5. Gravity might be carried by massless particles called gravitons.

In the Standard Model, particles interact with each other via other force-carrying particles. For example, the photon is the carrier of the electromagnetic force. The hypothetical particles for quantum gravity are gravitons, and we have some ideas of how they should work from general relativity. Like photons, gravitons are likely massless. If they had a sizable mass, experiments should have seen its effects, but the data so far don’t rule out a ridiculously tiny mass.

6. Quantum gravity appears at the smallest length anything can be.

Gravity is very weak, but the closer together two objects are, the stronger it becomes. Ultimately, it reaches the strength of the other forces at a very tiny distance known as the Planck length. That distance is about 1.6 × 10^-35 meters, roughly 10^20 times smaller than the nucleus of an atom.
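The Planck length is built from three constants: the reduced Planck constant ħ, the gravitational constant G and the speed of light c, combined as √(ħG/c³). This sketch evaluates it with rounded constants. It then compares the result with 10⁻¹⁵ m, which we use as an order-of-magnitude stand-in for the size of a proton.

```python
import math

HBAR = 1.0546e-34  # reduced Planck constant, J s (rounded)
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2 (rounded)
C = 2.998e8        # speed of light, m/s (rounded)

# Planck length: the only length that can be formed from hbar, G and c.
planck_length = math.sqrt(HBAR * G / C**3)

PROTON_SCALE_M = 1e-15  # assumed order-of-magnitude size of a proton
print(f"Planck length: {planck_length:.2e} m")
print(f"proton-sized object is ~{PROTON_SCALE_M / planck_length:.0e} times larger")
```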

That’s where quantum gravity’s effects would be strong enough to measure, but that distance is far too small for any experiment to probe. Some people have proposed theories that would let quantum gravity show up at close to the millimeter scale, but so far we haven’t seen those effects. Others have looked at creative ways to magnify quantum gravity effects, using vibrations in a large metal bar or collections of atoms kept at ultracold temperatures.

It seems that, from the smallest scale to the largest, gravity keeps attracting scientists’ attention. Perhaps that’ll be some solace the next time you take a tumble, when gravity grabs your attention too.

Quantum Satellite Device Tests Technology for Global Quantum Network

June 4th, 2016

By Alton Parrish.

You can’t sign up for the quantum internet just yet, but researchers have reported a major experimental milestone towards building a global quantum network – and it’s happening in space.

With a network that carries information in the quantum properties of single particles, you can create secure keys for secret messaging and potentially connect powerful quantum computers in the future. But scientists think you will need equipment in space to get global reach.

Researchers at the National University of Singapore and University of Strathclyde, UK, have launched a satellite that is testing technology for a global quantum network. This image combines a photograph of the quantum device with an artist’s illustration of nanosatellites establishing a space-based quantum network.

Researchers from the National University of Singapore (NUS) and the University of Strathclyde, UK, have become the first to test technology for satellite-based quantum network nodes in orbit.

They have put into orbit a compact device carrying components used in quantum communication and computing. And it works: the team report first data in a paper published 31 May 2016 in the journal Physical Review Applied.

The team’s device, dubbed SPEQS, creates and measures pairs of light particles, called photons. Results from space show that SPEQS is making pairs of photons with correlated properties – an indicator of performance.

Team-leader Alexander Ling, an Assistant Professor at the Centre for Quantum Technologies (CQT) at NUS, said “This is the first time anyone has tested this kind of quantum technology in space.”

The team had to be inventive to redesign a delicate, table-top quantum setup so that it was small and robust enough to fly inside a nanosatellite only the size of a shoebox. The whole satellite weighs just 1.65 kilogrammes.

Towards entanglement

Making correlated photons is a precursor to creating entangled photons. Described by Einstein as “spooky action at a distance”, entanglement is a connection between quantum particles that lends security to communication and power to computing.

Professor Artur Ekert, Director of CQT, invented the idea of using entangled particles for cryptography. He said “Alex and his team are taking entanglement, literally, to a new level. Their experiments will pave the road to secure quantum communication and distributed quantum computation on a global scale. I am happy to see that Singapore is one of the world leaders in this area.”

Local quantum networks already exist. The problem Ling’s team aims to solve is a distance limit. Losses limit quantum signals sent through air at ground level or optical fibre to a few hundred kilometres – but we might ultimately use entangled photons beamed from satellites to connect points on opposite sides of the planet. Although photons from satellites still have to travel through the atmosphere, going top-to-bottom is roughly equivalent to going only 10 kilometres at ground level.
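A very simple model reproduces the "roughly 10 kilometres" comparison. Assume that air density falls off exponentially with height, with a scale height of about 8.5 km. That is a textbook approximation which ignores how temperature changes with altitude. Under this model, the total air straight up equals a horizontal path of sea-level air one scale height long. The sketch integrates the density numerically to show this. It counts only the air in the way and ignores other losses, such as the beam spreading out over the long path from orbit.

```python
import math

SCALE_HEIGHT_KM = 8.5  # assumed density scale height (isothermal-atmosphere model)


def relative_density(h_km: float) -> float:
    """Air density at altitude h relative to sea level, exponential model."""
    return math.exp(-h_km / SCALE_HEIGHT_KM)


def equivalent_ground_path_km(top_km: float = 100.0, steps: int = 10_000) -> float:
    """Integrate relative density along a vertical path (trapezoidal rule).

    The result is the length of sea-level air containing the same amount of
    gas as the vertical column, i.e. a horizontal path with comparable
    absorption and scattering under this simplified model.
    """
    dh = top_km / steps
    total = 0.5 * (relative_density(0.0) + relative_density(top_km))
    total += sum(relative_density(i * dh) for i in range(1, steps))
    return total * dh


print(f"vertical path ~ {equivalent_ground_path_km():.1f} km of sea-level air")
```

The result is about 8.5 km, in line with the ballpark figure given above.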

The group’s first device is a technology pathfinder. It takes photons from a Blu-ray laser, splits each one into a pair, then measures the pair’s properties, all on board the satellite. To do this, it contains a laser diode, crystals, mirrors and photon detectors carefully aligned inside an aluminum block. This sits on top of a 10-centimeter by 10-centimeter printed circuit board packed with control electronics.
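Crystals usually split a photon in two through spontaneous parametric down-conversion, a process that conserves energy: the energies of the two daughter photons add up to the energy of the pump photon. Photon energy is inversely proportional to wavelength, so knowing one daughter’s wavelength fixes the other’s. The sketch assumes a pump near 405 nm, the standard Blu-ray wavelength. Whether SPEQS uses exactly the equal-split (degenerate) case shown here is our assumption; the article does not say.

```python
PUMP_NM = 405.0  # assumed pump wavelength: Blu-ray laser diodes emit near 405 nm


def partner_wavelength_nm(pump_nm: float, signal_nm: float) -> float:
    """Wavelength of the second photon when one pump photon splits into two.

    Energy conservation with E proportional to 1/wavelength:
    1/pump = 1/signal + 1/idler.
    """
    return 1.0 / (1.0 / pump_nm - 1.0 / signal_nm)


# Degenerate case (assumed): both daughters share the energy equally.
print(f"{partner_wavelength_nm(PUMP_NM, 2 * PUMP_NM):.1f} nm")
# Unequal split: a 780 nm photon must be paired with a longer-wavelength one.
print(f"{partner_wavelength_nm(PUMP_NM, 780.0):.1f} nm")
```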

Through a series of pre-launch tests – and one unfortunate incident – the team became more confident that their design could survive a rocket launch and space conditions. The team had a device on the October 2014 Orbital-3 rocket, which exploded on the launch pad. The satellite containing that first device was later found intact on a beach and still in working order.

Future plans

Even with the success of the more recent mission, a global network is still a few milestones away. The team’s roadmap calls for a series of launches, with the next space-bound SPEQS slated to produce entangled photons. SPEQS stands for Small Photon-Entangling Quantum System.

With later satellites, the researchers will try sending entangled photons to Earth and to other satellites. The team are working with standard “CubeSat” nanosatellites, which can get relatively cheap rides into space as rocket ballast. Ultimately, completing a global network would mean having a fleet of satellites in orbit and an array of ground stations.

In the meantime, quantum satellites could also carry out fundamental experiments – for example, testing entanglement over distances bigger than Earth-bound scientists can manage. “We are reaching the limits of how precisely we can test quantum theory on Earth,” said co-author Dr Daniel Oi at the University of Strathclyde.

This research is supported by the National Research Foundation (NRF) Singapore under its Competitive Research Programme (CRP Award No. NRF-CRP12-2013-02), and NRF Singapore and the Ministry of Education, Singapore under the Research Centres of Excellence programme. The authors also acknowledge the Scottish Quantum Information Network and the EU FP7 CONNECT2SEA project “Development of Quantum Technologies for Space Applications.”

Bugs Bunny’s Wisdom Confirmed by Science

June 3rd, 2016

By Alton Parrish.

Bugs Bunny hasn’t aged a day since his cartoon debut in 1940, and he rarely wears glasses. He can play all nine positions on a baseball field – at once. He also consistently outwits gangsters, hunters and water fowl.

That could be due to all the carrots he consumes. Carrots contain high quantities of carotenoids – plant pigments that have been shown to provide health benefits, including a reduced risk of eye disease. The orange carrot is the richest source of vitamin A in the American diet.

For the new paper, which reports the sequenced carrot genome, the researchers examined 35 different types of carrot. By comparing white and light-orange carrots, which have lower levels of carotenoids, with carotenoid-rich bright orange carrots, they found a specific gene that appears to control the accumulation of carotenoids in the vegetable.

In the paper, Iorizzo proposed a genetic mechanism that explains why carrot roots accumulate carotenoid pigments. “Orange carrots have co-opted light-induced genes to accumulate higher levels of carotenoids in their roots,” Iorizzo said. “Though grown in the dark, orange carrots act like they’re grown in the light.”

Evolutionarily, carrots split from grapes more than 100 million years ago, which is fortunate because Bugs enjoys carrot juice much more than wine. Carrots diverged from lettuce more than 70 million years ago.

The center of origin for carrot domestication was the Middle East and Central Asia; documentation shows that purple and yellow carrots were grown in Central Asia 1,100 years ago, with orange carrots first documented in the 16th century.

The paper also describes gene expansion contributing to flavor and carotenoid accumulation in modern carrots.

These colorful, high-carotenoid carrots have become popular, perhaps because of their health benefits: through breeding, the carotene content of carrots in the United States has increased by 50 percent since 1970. That’s a good thing, because vitamin A deficiency is a global problem, although less so in the United States.

Iorizzo says that the sequenced genome will significantly change the nature of research in carrot biology.

“It will serve as the basis in molecular breeding to assist in improving carrot traits such as enhanced levels of carotenoids, drought tolerance and disease resistance,” he said. “The primary focus of research of several laboratories around the world that conduct fundamental research on carrot genetics will shift more toward extensive genetic screens for genomewide association analysis and functional genomics.”

Hamid Ashrafi, an NC State assistant professor of horticultural science, is a co-author on the paper. Philipp Simon from the University of Wisconsin-Madison is the paper’s corresponding author.

Universe Expanding Faster Than Expected, Confounds Current Understanding of Physics

June 3rd, 2016

By Alton Parrish.

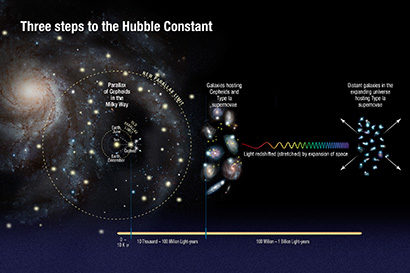

Astronomers have obtained the most precise measurement yet of how fast the universe is expanding, and it doesn’t agree with predictions based on other data and our current understanding of the physics of the cosmos.

The discrepancy — the universe is now expanding 9 percent faster than expected — means either that measurements of the cosmic microwave background radiation are wrong, or that some unknown physical phenomenon is speeding up the expansion of space, the astronomers say.

“If you really believe our number — and we have shed blood, sweat and tears to get our measurement right and to accurately understand the uncertainties — then it leads to the conclusion that there is a problem with predictions based on measurements of the cosmic microwave background radiation, the leftover glow from the Big Bang,” said Alex Filippenko, a UC Berkeley professor of astronomy and co-author of a paper announcing the discovery.

“Maybe the universe is tricking us, or our understanding of the universe isn’t complete,” he added.
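A few lines of arithmetic check the 9 percent figure and show how strong the disagreement is. The values below are the widely quoted 2016 numbers as we understand them: 73.24 ± 1.74 km/s per megaparsec from the new distance measurements, and 66.93 ± 0.62 predicted from Planck microwave-background data. The article itself does not state them. The sketch also treats the two uncertainties as independent and Gaussian, which is a simplification.

```python
import math

# Assumed input values (not given in the article), in km/s per megaparsec.
H0_LOCAL, SIGMA_LOCAL = 73.24, 1.74   # direct distance-ladder measurement
H0_CMB, SIGMA_CMB = 66.93, 0.62       # prediction from Planck CMB data

# How much faster the measured expansion is than predicted.
excess = H0_LOCAL / H0_CMB - 1.0

# Size of the gap in units of the combined uncertainty (independent errors).
tension = (H0_LOCAL - H0_CMB) / math.hypot(SIGMA_LOCAL, SIGMA_CMB)

print(f"faster than predicted by {excess:.1%}; tension {tension:.1f} sigma")
```

With these inputs, the expansion rate is about 9 percent higher than predicted. The gap is roughly three and a half times the combined uncertainty, which is too large to dismiss easily as a statistical fluke.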