Posts by Alton Parrish:

Jamesbondia: Four New Plant Species Named for Ornithologist James Bond

May 21st, 2016

By Alton Parrish.

A new subgenus of plants has officially been called Jamesbondia after the notable American ornithologist James Bond, whose name Ian Fleming is known to have used for his eponymous spy series.

An article published in Plant Biosystems formally proposes a new subgenus of plants, Jamesbondia, an infrageneric group within the Neotropical flowering-plant genus Alternanthera.

Jamesbondia

The four Jamesbondia plant species are mostly found in Central America and the Caribbean Islands. Authors I. Sánchez-del Pino and D. Iamonico have built on the research of J.M. Mears, who identified a group of Caribbean plant species as “Jamesbondia” from 1980 to 1982 in unpublished annotations on Alternanthera specimens. Molecular phylogenetic analyses and observations of the flower morphology justify formally naming this group as a separate subgenus.

The name Jamesbondia had never previously been validly published. Respecting Mears’ annotations, the authors named the subgenus in honour of the American ornithologist. Sánchez-del Pino and Iamonico suspect that Mears’ choice of name relates to the geographic distribution of the species: “‘Jamesbondia’ is clearly dedicated to the ornithologist James Bond (1900–1989), who focused his research on birds in the same Caribbean areas that are the primary home of the four putative species of subgenus Jamesbondia.”

Ian Fleming, a keen bird watcher, adopted the name for his series of spy novels about a fictional British Secret Service agent and is quoted as saying, “It struck me that this brief, unromantic, Anglo-Saxon and yet very masculine name was just what I needed, and so a second James Bond was born.”

NASA Directly Observes A Fundamental Process of Nature for 1st Time

May 20th, 2016

By Alton Parrish.

Like sending sensors up into a hurricane, NASA has flown four spacecraft through an invisible maelstrom in space called magnetic reconnection. Magnetic reconnection is one of the prime drivers of space radiation, so it is a key factor in the quest to learn more about our space environment and protect our spacecraft and astronauts as we explore farther and farther from our home planet.

The four Magnetospheric Multiscale, or MMS, spacecraft (shown here in an artist’s concept) have now made more than 4,000 trips through the boundaries of Earth’s magnetic field, gathering observations of our dynamic space environment.

Space is a better vacuum than any we can create on Earth, but it does contain some particles — and it’s bustling with activity. It overflows with energy and a complex system of magnetic fields. Sometimes, when two sets of magnetic fields connect, an explosive reaction occurs: As the magnetic fields re-align and snap into a new formation they send particles zooming off in jets.

A new paper published on May 12, 2016, in Science provides the first observations from inside a magnetic reconnection event. The research shows that magnetic reconnection is dominated by the physics of electrons, providing crucial information about what powers this fundamental process in nature.

The effects of this sudden release of particles and energy (such as giant eruptions on the sun, the aurora, radiation storms in near-Earth space, and high-energy cosmic particles that come from other galaxies) have been observed throughout the solar system and beyond. But we have never been able to witness the phenomenon of magnetic reconnection directly. Satellites have caught tantalizing glimpses of particles speeding by, but not the impetus: like seeing the debris flung out from a tornado, but never seeing the storm itself.

“We developed a mission, the Magnetospheric Multiscale mission, that for the first time would have the precision needed to gather observations in the heart of magnetic reconnection,” said Jim Burch, the principal investigator for MMS at the Southwest Research Institute in San Antonio, Texas, and the first author of the Science paper. “We received results faster than we could have expected. By seeing magnetic reconnection in action, we have observed one of the fundamental forces of nature.”

MMS is made of four identical spacecraft that launched in March 2015. They fly in a pyramid formation to create a full 3-D map of any phenomena they observe. On Oct. 16, 2015, the spacecraft traveled straight through a magnetic reconnection event at the boundary where Earth’s magnetic field bumps up against the sun’s magnetic field. In only a few seconds, the 25 sensors on each of the spacecraft collected thousands of observations. This unprecedented time cadence opened the door for scientists to track better than ever before how the magnetic and electric fields changed, as well as the speeds and direction of the various charged particles.

The science of reconnection springs from the basic science of electromagnetics, which dominates most of the universe and is a force as fundamental in space as gravity is on Earth. Any set of magnetic fields can be thought of as a row of lines. These field lines are always anchored to some body — a planet, a star — creating a giant magnetic network surrounding it. It is at the boundaries of two such networks where magnetic reconnection happens.

Magnetic reconnection, a phenomenon that happens throughout space, occurs when magnetic field lines come together, realign and send particles hurtling outward.

Imagine rows of magnetic field lines moving toward each other at such a boundary. (The boundary that MMS travels through, for example, is the one where Earth’s fields meet the sun’s.) The field lines are sometimes traveling in the same direction and don’t have much effect on each other, like two water currents flowing alongside each other.

But if the two sets of field lines point in opposite directions, the process of realigning is dramatic. It can be hugely explosive, sending particles hurtling off at near the speed of light. It can also be slow and steady. Either way it releases a huge amount of energy.

“One of the mysteries of magnetic reconnection is why it’s explosive in some cases, steady in others, and in some cases, magnetic reconnection doesn’t occur at all,” said Tom Moore, the mission scientist for MMS at NASA’s Goddard Space Flight Center in Greenbelt, Maryland.

Whether explosive or steady, the local particles are caught up in the event, hurled off to areas far away, crossing magnetic boundaries they never could have crossed otherwise. At the edges of Earth’s magnetic environment, the magnetosphere, such events allow solar radiation to enter near-Earth space.

“From previous satellites’ measurements, we know that the magnetic fields act like a slingshot, sending the protons accelerating out,” said Burch. “The decades-old mystery is what do the electrons do, and how do the two magnetic fields interconnect. Satellite measurements of electrons have been too slow by a factor of 100 to sample the magnetic reconnection region. The precision and speed of the MMS measurements, however, opened up a new window on the universe, a new ‘microscope’ to see reconnection.”

With this new set of observations, MMS tracked what happens to electrons during magnetic reconnection. As the four spacecraft flew across the magnetosphere’s boundary they flew directly through what’s called the dissipation region where magnetic reconnection occurred. The observations were able to track how the magnetic fields suddenly shifted, and also how the particles moved away.

Space is a better vacuum than any we can create on Earth, but it’s nonetheless bustling with activity, particles and magnetic field lines. NASA studies our space environment to protect our technology and astronauts as we explore farther and farther from our home planet.

The observations show that the electrons shot away in straight lines from the original event at hundreds of miles per second, crossing the magnetic boundaries that would normally deflect them. Once across the boundary, the particles curved back around in response to the new magnetic fields they encountered, making a U-turn. These observations align with a computer simulation known as the crescent model, named for the characteristic crescent shapes that appear in plots of the electron distributions; the crescents indicate how far across the magnetic boundary the electrons can be expected to travel before turning around again.

A surprising result was that, at the moment of interconnection between the sun’s magnetic field lines and those of Earth, the crescents turned abruptly so that the electrons flowed along the field lines. By watching these electron tracers, MMS made the first observation of the predicted breaking and interconnection of magnetic fields in space.

“The data showed the entire process of magnetic reconnection to be fairly orderly and elegant,” said Michael Hesse, a space scientist at Goddard who first developed the crescent model. “There doesn’t seem to be much turbulence present, or at least not enough to disrupt or complicate the process.”

Spotting the persistent characteristic crescent shape in the electron distributions suggests that it is the physics of electrons that is at the heart of understanding how magnetic field lines accelerate the particles.

“This shows us that the electrons move in such a way that electric fields are established and these electric fields in turn produce a flash conversion of magnetic energy,” said Roy Torbert, a scientist at the Space Science Center at the University of New Hampshire in Durham, who is a co-author on the paper. “The encounter that our instruments were able to measure gave us a clearer view of an explosive reconnection energy release and the role played by electron physics.”

Since it launched, MMS has made more than 4,000 trips through the magnetic boundaries around Earth, each time gathering information about the way the magnetic fields and particles move. After its first direct observation of magnetic reconnection, it has flown through such an event five more times, providing more information about this fundamental process.

As the mission continues, the team can adjust the formation of the MMS spacecraft, bringing them closer together, which provides better viewing of electron paths, or farther apart, which provides better viewing of proton paths. Each set of observations contributes to explaining different aspects of magnetic reconnection. Together, such information will help scientists map out the details of our space environment: crucial information as we journey ever farther beyond our home planet.

‘CardBoardiZer’ Allows Users to Create Robotic Models in Minutes

May 20th, 2016

By Alton Parrish.

A new computerized system allows novice designers to convert static three-dimensional objects into moving robotic versions made out of materials including cardboard, wood and sheet metal.

“We are taking inanimate objects and making them come alive,” said Karthik Ramani, the Donald W. Feddersen Professor of Mechanical Engineering at Purdue University.

The new system is called CardBoardiZer and has evolved from previous work based in Ramani’s C Design Lab.

“We wanted to create a system that’s much easier to use than other design programs, which are too complicated for the average person to learn,” Ramani said. “People can pick up CardBoardiZer in 10 minutes.”

For example, he said, an object like a plastic dinosaur with immovable parts can be scanned using a laser scanner and then turned into a folding cardboard version with moveable head, mouth, limbs and tail.

“Once I have the rough shape, this system can take over from there,” Ramani said.

The models can then be motorized using a commercial product called Ziro, which grew out of work in the Purdue lab. Ziro uses motorized “joint modules” equipped with wireless communicators and micro-controllers. The user controls the robotic creations with hand gestures while wearing a wireless “smart glove.”

“We want everybody to become more artistic, to democratize the interfaces and accessibility of these tools so that they are more universally accessible and to lower the barriers to entry of designing and making these kinds of more sophisticated models,” he said.

The system converts objects into flat versions similar to a tailor’s dress patterns.

“And then I can cut it, fold it and give it motion where I want,” said Ramani, who has been teaching a popular toy design class at Purdue for 18 years, refining ways to make toys both educational and fun.

The prototype technique represents a potential alternative to, and overcomes the limitations of, 3-D printing.

“I can make a dinosaur this big,” he said, holding his arms out wide. “With 3-D printing you’ll be spending one month printing it, but CardBoardiZer works quickly with standard cardboard, wood or sheet metal.”

The system was inspired by the do-it-yourself “maker” movement and has a design focus.

“Our geometric simplification algorithm can generate cardboardized models with a few folds, making it easy for children to fold and at the same time retaining a shape that the user desires,” said Yunbo Zhang, a postdoctoral research associate who led the project in the C Design Lab.

Ramani is a co-founder and chief scientist of the company ZeroUI (www.zeroui.com), which produces Ziro (ziro.io). The company is in the Purdue Research Park and in San Jose, California.

Ziro was recently showcased during the Consumer Electronics Show in Las Vegas, where it was named a “Best at CES 2016 Finalist” by Engadget.

Weather Shaped by City Shape Say Researchers

May 19th, 2016

By Alton Parrish.

The features that make cities unique are important to understanding how cities affect weather and disperse air pollutants, researchers highlight in a new study.

Compared to their surroundings, cities can be hot — hot enough to influence the weather. Industrial, domestic, and transportation-related activities constantly release heat, and after a warm day, concrete surfaces radiate stored heat long into the night. These phenomena can be strong enough to drive thunderstorms off course. But it isn’t only about the heat cities release; it’s also about their spatial layout. By channeling winds and generating turbulence hundreds of meters into the atmosphere, the presence and organization of buildings also affect weather and air quality.

London at night

In an EPFL-led study published in the journal Boundary-Layer Meteorology, researchers have shown that the way cities are represented in today’s weather and air quality models fails to capture the true magnitude of some important features, such as the transfer of energy and heat in the lower atmosphere. What’s more, they found that processes atmospheric sensors cannot detect are essential to representing cities more accurately in weather models.

Stirring up the air

When wind blows over a city, buildings interact with the moving air mass, generating turbulence, much like sticking your fingertips into a stream causes visible vortices to form on the water surface. This turbulence spreads up into the atmosphere and down into the streets. As a result, more heat, humidity, and pollutants are transported upwards from the ground. At the same time, more of the wind’s turbulent energy dissipates between streets, in gardens, or in other open spaces.

Animation of the results of a high resolution computer simulation of atmospheric turbulence in a neighborhood of the city of Basel, Switzerland

“What we showed in our work is the importance of taking into account the spatial variability of cities — the unique features that make Paris Paris and London London,” says Marco Giometto, the first author of the study. “Most city representations used in weather models are based on data obtained from tower measurements made at a particular location within the city, which current models approximate as a rough patch of land. The transport of heat, humidity, or pollutants is then computed using mathematical relationships. These relationships implicitly assume that the city is geometrically regular, which is a stringent assumption,” he says.

Giometto and his colleagues performed a series of detailed simulations of the wind flow over and within a neighborhood of the city of Basel and compared the results against wind tower measurements collected within the same area. By accounting for the spatial variability of streets and buildings in that Basel neighborhood, they were able to show that, for certain parameters that play a role in the local weather and the dispersal of smoke, smog, or other pollutants, approximating the city as a uniform patch of land can lead to errors of up to 200 percent.

Basel

“Weather models obviously can’t include detailed representations of all large cities,” says Giometto. High-resolution simulations require time and resources that are not available to weather forecasters. Instead, he argues, going beyond the status quo of likening cities to rough patches of land will require developing new, more accurate ways to translate specific urban settings to minimalist representations that can then be integrated into a computer model.

In particular, Giometto’s findings highlight the importance of accounting for dispersive terms that arise due to the spatial heterogeneity of cities. In the mathematical equations that govern the movement of the air, they do just what their name indicates: they disperse pollutants, heat, humidity, or even energy. “You can picture them as mini air-circulations, locked in between buildings that transport warm and polluted air up from ground-level on one side and draw down cleaner and cooler air on the other,” says Andreas Christen, a coauthor of the study. But unlike the wind speed or direction, a single weather station is unable to measure these dispersive terms directly, which is where the computer simulations come in.

“We need accurate computer simulations of the wind over cities to estimate dispersive terms for the prevailing wind directions,” says Giometto. Ultimately, this information will allow researchers to develop more accurate models that benefit urban residents. A better understanding of how cities affect the air within and above them, and better tools to account for these effects, would help improve urban weather forecasts and assessments of how pollutant plumes and smog spread in urban settings. In the future, they could also help make cities more energy efficient and, maybe one day, a little bit less hot.

NASA Satellites Image Fort McMurray Fires Day and Night

May 19th, 2016

By Alton Parrish.

The grayish-brown swirl combining with the clouds billowing over Alberta and Saskatchewan, Canada, is smoke that has risen from the Fort McMurray fire complex. This image was taken by the Terra satellite’s Moderate Resolution Imaging Spectroradiometer (MODIS) on May 17, 2016. Actively burning areas, detected by MODIS’s thermal bands, are outlined in red.

The Fort McMurray wildfire has destroyed one of the oilsands camps north of the city and is roaring eastward toward others in its path. This image from the Suomi NPP satellite taken on May 17, 2016 shows the fires heading in that direction. The leading eastern edge of the Alberta fires was expected to reach the Saskatchewan border by the end of Tuesday.

Huge columns of smoke rise up from the myriad of fires in the Fort McMurray complex in Alberta, Canada. NASA’s Suomi NPP satellite collected this natural-color image using the VIIRS (Visible Infrared Imaging Radiometer Suite) instrument on May 16, 2016. The actively burning areas are outlined in red. The fire north of Fort McMurray had retreated and some citizens had returned, but in the last few days this area has been threatened again. On May 15, warnings were issued that the wildfire was moving at 30-40 meters (98-131 feet) per minute to the north again.

The smoke released by any type of fire (forest, brush, crop, structure, tires, waste or wood burning) is a mixture of particles and chemicals produced by incomplete burning of carbon-containing materials. All smoke contains carbon monoxide, carbon dioxide and particulate matter (PM or soot).

Smoke can contain many different chemicals, including aldehydes, acid gases, sulfur dioxide, nitrogen oxides, polycyclic aromatic hydrocarbons (PAHs), benzene, toluene, styrene, metals and dioxins. The type and amount of particles and chemicals in smoke vary depending on what is burning, how much oxygen is available, and the burn temperature. Air pollution in and around Alberta remains dangerously high: current readings are at 38 on a scale where 10 is considered dangerously high.

Polluted Dust Can Impact Ocean Life Thousands of Miles Away Say Researchers

May 18th, 2016

By Alton Parrish.

As climatologists closely monitor the impact of human activity on the world’s oceans, researchers at the Georgia Institute of Technology have found yet another worrying trend impacting the health of the Pacific Ocean.

A new modeling study conducted by researchers in Georgia Tech’s School of Earth and Atmospheric Sciences shows that for decades, air pollution drifting from East Asia out over the world’s largest ocean has kicked off a chain reaction that contributed to oxygen levels falling in tropical waters thousands of miles away.

“There’s a growing awareness that oxygen levels in the ocean may be changing over time,” said Taka Ito, an associate professor at Georgia Tech. “One reason for that is the warming environment – warm water holds less gas. But in the tropical Pacific, the oxygen level has been falling at a much faster rate than the temperature change can explain.”

As iron is deposited from air pollution off the coast of East Asia, ocean currents carry the nutrient far and wide.

The study, which was published May 16 in Nature Geoscience, was sponsored by the National Science Foundation, a Georgia Power Faculty Scholar Chair and a Cullen-Peck Faculty Fellowship.

In the report, the researchers describe how air pollution from industrial activities had raised levels of iron and nitrogen – key nutrients for marine life – in the ocean off the coast of East Asia. Ocean currents then carried the nutrients to tropical regions, where they were consumed by photosynthesizing phytoplankton.

But while the tropical phytoplankton may have released more oxygen into the atmosphere, their consumption of the excess nutrients had a negative effect on the dissolved oxygen levels deeper in the ocean.

“If you have more active photosynthesis at the surface, it produces more organic matter, and some of that sinks down,” Ito said. “And as it sinks down, there’s bacteria that consume that organic matter. Like us breathing in oxygen and exhaling CO2, the bacteria consume oxygen in the subsurface ocean, and there is a tendency to deplete more oxygen.”

That process plays out all across the Pacific, but the effects are most pronounced in tropical areas, where dissolved oxygen is already low.

Athanasios Nenes, a professor in the School of Earth and Atmospheric Sciences and the School of Chemical and Biomolecular Engineering at Georgia Tech who worked with Ito on the study, said the research is the first to describe just how far reaching the impact of human industrial activity can be.

“The scientific community always thought that the impact of air pollution is felt in the vicinity of where it deposits,” said Nenes, who also serves as Georgia Power Faculty Scholar. “This study shows that the iron can circulate across the ocean and affect ecosystems thousands of kilometers away.”

While evidence had been mounting that global climate change may have an impact on future oxygen levels, Ito and Nenes were spurred to search for an explanation for why oxygen levels in the tropics had been declining since the 1970s.

To understand how the process worked, the researchers developed a model that combines atmospheric chemistry, biogeochemical cycles, and ocean circulation. Their model maps out how polluted, iron-rich dust that settles over the Northern Pacific gets carried by ocean currents east toward North America, down the coast and then back west along the equator.

In their model, the researchers accounted for other factors that can also impact oxygen levels, such as water temperature and ocean current variability.

Whether due to warming sea waters or an increase in iron pollution, the implications of growing oxygen-minimum zones are far reaching for marine life.

“Many living organisms depend on oxygen that is dissolved in seawater,” Ito said. “So if it gets low enough, it can cause problems, and it might change habitats for marine organisms.”

Occasionally, waters from low oxygen areas swell to the coastal waters, killing or displacing populations of fish, crabs and many other organisms. Those “hypoxic events” may become more frequent as the oxygen-minimum zones grow, Ito said.

The increasing phytoplankton activity is a double-edged sword, Ito said.

“Phytoplankton is an essential part of the living ocean,” he said. “It serves as the base of food chain and absorbs atmospheric carbon dioxide. But if the pollution continues to supply excess nutrients, the process of the decomposition depletes oxygen from the deeper waters, and this deep oxygen is not easily replaced.”

The study also expands on the understanding of dust as a transporter of pollution, Nenes said.

“Dust has always attracted a lot of interest because of its impact on the health of people,” Nenes said. “This is really the first study showing that dust can have a huge impact on the health of the oceans in ways that we’ve never understood before. It just raises the need to understand what we’re doing to marine ecosystems that feed populations worldwide.”

Discovered: Tiny Ocean Organism Has Big Role in Climate Regulation

May 18th, 2016

By Alton Parrish.

Scientists have discovered that a tiny, yet plentiful, ocean organism is playing an important role in the regulation of the Earth’s climate.

Research published in the journal Nature Microbiology has found that the bacterial group Pelagibacterales, thought to be among the most abundant organisms on Earth and accounting for up to half a million microbial cells in every teaspoon of seawater, plays an important role in stabilizing the Earth’s atmosphere.

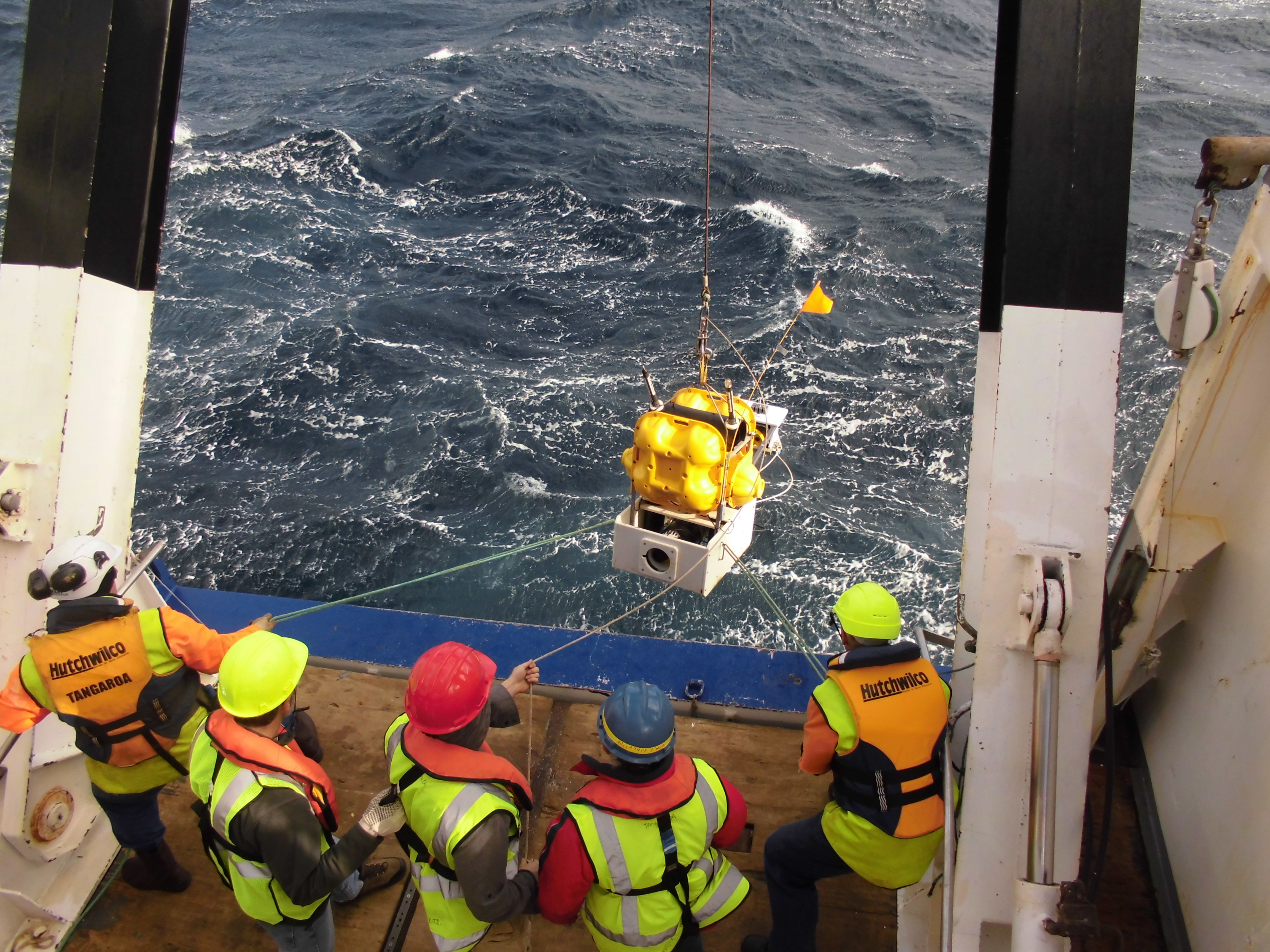

This is a rosette going into the water in Bermuda to sample SAR11.

Dr Ben Temperton, lecturer in the department of Biosciences at the University of Exeter, was a member of the international team of researchers that has, for the first time, identified Pelagibacterales as a likely source of dimethylsulfide (DMS), which is known to stimulate cloud formation and is integral to a negative feedback loop known as the CLAW hypothesis.

Under this hypothesis, the temperature of the Earth’s atmosphere is stabilised through a negative feedback loop where sunlight increases the abundance of certain phytoplankton, which in turn produce more dimethylsulfoniopropionate (DMSP). This is broken down into DMS by other members of the microbial community. Through a series of chemical processes, DMS increases cloud droplets, which in turn reduces the amount of sunlight hitting the ocean surface.

These latest findings reveal the significance of Pelagibacterales in this process and open up a path for further research.

Dr Temperton said: “This work shows that the Pelagibacterales are likely an important component in climate stability. If we are going to improve models of how DMS impacts climate, we need to consider this organism as a major contributor.”

The research also revealed new information about the way in which Pelagibacterales produce DMS.

Dr Temperton added: “What’s fascinating is the elegance and simplicity of DMS production in the Pelagibacterales. These organisms don’t have the genetic regulatory mechanisms found in most bacteria. Having evolved in nutrient-limited oceans, they have some of the smallest genomes of all free-living organisms, because small genomes take fewer resources to replicate.

“The production of DMS in Pelagibacterales is like a pressure release valve. When there is too much DMSP for Pelagibacterales to handle, it flows down a metabolic pathway that generates DMS as a waste product. This valve is always on, but only comes into play when DMSP concentrations exceed a threshold. Kinetic regulation like this is not uncommon in bacteria, but this is the first time we’ve seen it in play for such an important biogeochemical process.”

Dr Jonathan Todd from UEA’s School of Biological Sciences said: “These types of ocean bacteria are among the most abundant organisms on Earth – comprising up to half a million microbial cells found in every teaspoon of seawater.

“We studied it at a molecular genetic level to discover exactly how it generates a gas called dimethylsulfide (DMS), which is known for stimulating cloud formation.

“Our research shows how a compound called dimethylsulfoniopropionate that is made in large amounts by marine plankton is then broken down into DMS by these tiny ocean organisms called Pelagibacterales.

“The resultant DMS gas may then have a role in regulating the climate by increasing cloud droplets that in turn reduce the amount of sunlight hitting the ocean’s surface.”

Dr Emily Fowler from UEA’s School of Biological Sciences worked on the characterization of the Pelagibacterales DMS generating enzymes as part of her successful PhD at UEA. She said: “Excitingly, the way Pelagibacterales generates DMS is via a previously unknown enzyme, and we have found that the same enzyme is present in other hugely abundant marine bacterial species. This likely means we have been vastly underestimating the microbial contribution to the production of this important gas.”

Evidence of Ancient Giant Asteroid Strike Found in Australia, “Just the Tip of the Iceberg…”

May 17th, 2016

By Alton Parrish.

Scientists have found evidence of a huge asteroid that struck the Earth early in its life with an impact larger than anything humans have experienced.

Tiny glass beads called spherules, found in north-western Australia, were formed from vaporized material from the asteroid impact, said Dr Andrew Glikson from The Australian National University (ANU).

“The impact would have triggered earthquakes orders of magnitude greater than terrestrial earthquakes, it would have caused huge tsunamis and would have made cliffs crumble,” said Dr Glikson, from the ANU Planetary Institute.

“Material from the impact would have spread worldwide. These spherules were found in sea floor sediments that date from 3.46 billion years ago.”

The asteroid is the second oldest known to have hit the Earth and one of the largest.

Dr Glikson said the asteroid would have been 20 to 30 kilometers across and would have created a crater hundreds of kilometers wide.

About 3.8 to 3.9 billion years ago, the moon was struck by numerous asteroids, which formed the craters, called maria, that are still visible from Earth.

“Exactly where this asteroid struck the earth remains a mystery,” Dr Glikson said.

“Any craters from this time on Earth’s surface have been obliterated by volcanic activity and tectonic movements.”

Dr Glikson and Dr Arthur Hickman from the Geological Survey of Western Australia found the glass beads in a drill core from Marble Bar, in north-western Australia, in some of the oldest known sediments on Earth.

The sediment layer, which was originally on the ocean floor, was preserved between two volcanic layers, which enabled very precise dating of its origin.

Dr Glikson has been searching for evidence of ancient impacts for more than 20 years and immediately suspected the glass beads originated from an asteroid strike.

Subsequent testing found the levels of elements such as platinum, nickel and chromium matched those in asteroids.

There may have been many more similar impacts for which evidence has not yet been found, said Dr Glikson.

“This is just the tip of the iceberg. We’ve only found evidence for 17 impacts older than 2.5 billion years, but there could have been hundreds.”

“Asteroid strikes this big result in major tectonic shifts and extensive magma flows. They could have significantly affected the way the Earth evolved.”


Quark-Gluon Plasma Created: Scientists See Ripples of a Particle-Separating Wave

May 16th, 2016

By Alton Parrish.

Scientists in the STAR collaboration at the Relativistic Heavy Ion Collider (RHIC), a particle accelerator exploring nuclear physics and the building blocks of matter at the U.S. Department of Energy’s Brookhaven National Laboratory, have new evidence for what’s called a “chiral magnetic wave” rippling through the soup of quark-gluon plasma created in RHIC’s energetic particle smashups.

The STAR detector at the Relativistic Heavy Ion Collider tracks particles emerging from thousands of subatomic smashups per second.

The presence of this wave is one of the consequences scientists were expecting to observe in the quark-gluon plasma–a state of matter that existed in the early universe when quarks and gluons, the building blocks of protons and neutrons, were free before becoming inextricably bound within those larger particles. The tentative discovery, if confirmed, would provide additional evidence that RHIC’s collisions of energetic gold ions recreate nucleus-size blobs of the fiery plasma thousands of times each second. It would also provide circumstantial evidence in support of a separate, long-debated quantum phenomenon required for the wave’s existence. The findings are described in a paper that will be highlighted as an Editors’ Suggestion in Physical Review Letters.

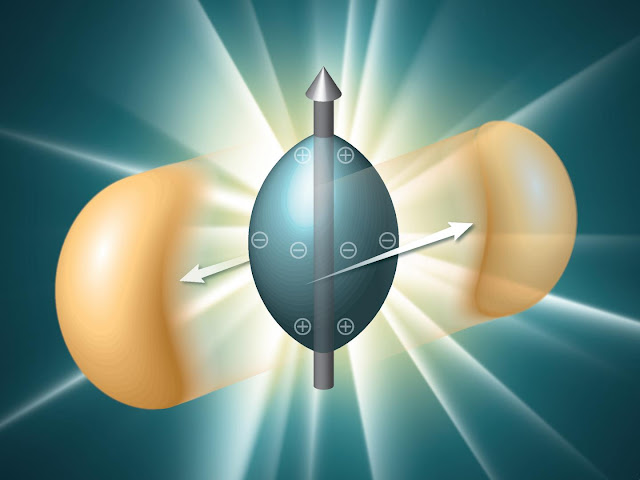

To try to understand these results, let’s take a look deep within the plasma to a seemingly surreal world where magnetic fields separate left- and right-“handed” particles, setting up waves that have differing effects on how negatively and positively charged particles flow.

“What we measure in our detector is the tendency of negatively charged particles to come out of the collisions around the ‘equator’ of the fireball, while positively charged particles are pushed to the poles,” said STAR collaborator Hongwei Ke, a postdoctoral fellow at Brookhaven. But the reasons for this differential flow, he explained, begin when the gold ions collide.

Off-center collisions of gold ions create a strong magnetic field and set up a series of effects that push positively charged particles to the poles of the football-shaped collision zone and negatively charged particles to the equator.


The ions are gold atoms stripped of their electrons, leaving 79 positively charged protons in a naked nucleus. When these ions smash into one another even slightly off center, the whole mix of charged matter starts to swirl. That swirling positive charge sets up a powerful magnetic field perpendicular to the circulating mass of matter, Ke explained. Picture a spinning sphere with north and south poles.

Within that swirling mass, there are huge numbers of subatomic particles, including quarks and gluons at the early stage, and other particles at a later stage, created by the energy deposited in the collision zone. Many of those particles also spin as they move through the magnetic field. The direction of their spin relative to their direction of motion is a property called chirality, or handedness; a particle moving away from you spinning clockwise would be right-handed, while one spinning counterclockwise would be left-handed.
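The handedness definition above can be made concrete. In this minimal sketch, handedness is read off from the sign of the spin's projection onto the momentum (the particle's helicity). Treating helicity as chirality is an assumption of the sketch, since the two coincide only for massless particles; the vectors are plain 3-tuples and the function name `handedness` is hypothetical.

```python
# Minimal sketch: classify a particle as right- or left-handed from the sign
# of its helicity, i.e. the projection of its spin onto its direction of motion.
# Assumption: helicity stands in for chirality (exact only for massless particles).

Vector = tuple[float, float, float]


def dot(a: Vector, b: Vector) -> float:
    """Ordinary 3D dot product."""
    return sum(x * y for x, y in zip(a, b))


def handedness(spin: Vector, momentum: Vector) -> str:
    """Return 'right' if spin points along the motion, 'left' if against it."""
    projection = dot(spin, momentum)
    if projection > 0:
        return "right"
    if projection < 0:
        return "left"
    return "undefined"  # spin perpendicular to the motion


# A particle moving away from the viewer along +z. Seen from behind, a clockwise
# rotation has its spin vector (right-hand rule) pointing along +z as well.
print(handedness(spin=(0.0, 0.0, 0.5), momentum=(0.0, 0.0, 1.0)))   # right
print(handedness(spin=(0.0, 0.0, -0.5), momentum=(0.0, 0.0, 1.0)))  # left
```

Using only the sign of the projection keeps the sketch independent of units, matching the qualitative definition in the text.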

According to Gang Wang, a STAR collaborator from the University of California at Los Angeles, if the numbers of particles and antiparticles are different, the magnetic field will affect these left- and right-handed particles differently, causing them to separate along the axis of the magnetic field according to their “chiral charge.”

“This ‘chiral separation’ acts like a seed that, in turn, causes particles with different charges to separate,” Wang said. “That triggers even more chiral separation, and more charge separation, and so on–with the two effects building on one another like a wave, hence the name ‘chiral magnetic wave.’ In the end, what you see is that these two effects together will push more negative particles into the equator and the positive particles to the poles.”


To look for this effect, the STAR scientists measured the collective motion of certain positively and negatively charged particles produced in RHIC collisions. They found that the collective elliptic flow of the negatively charged particles–their tendency to flow out along the equator–was enhanced, while the elliptic flow of the positive particles was suppressed, resulting in a higher abundance of positive particles at the poles. Importantly, the difference in elliptic flow between positive and negative particles increased with the net charge density produced in RHIC collisions.

According to the STAR publication, this is exactly what is expected from calculations using the theory predicting the existence of the chiral magnetic wave. The authors note that the results hold for all energies at which a quark-gluon plasma is believed to be created at RHIC, and that, so far, no other model can explain them.
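The measurement described above amounts to looking for a linear trend. This hypothetical sketch is not STAR's analysis code: it assumes each event's net charge is summarized by the asymmetry A_ch = (N+ - N-) / (N+ + N-), and that the elliptic-flow difference dv2 = v2(negative) - v2(positive) is fit as a straight line in A_ch. The function names and the binned numbers are made up for illustration.

```python
# Hypothetical sketch, not STAR's analysis code. Assumptions: each event's net
# charge is summarized by A_ch = (N+ - N-) / (N+ + N-), and the elliptic-flow
# difference dv2 = v2(negative) - v2(positive) is fit as dv2 = r * A_ch + c.


def charge_asymmetry(n_pos: int, n_neg: int) -> float:
    """Net charge of an event normalized by its charged-particle count."""
    total = n_pos + n_neg
    if total == 0:
        raise ValueError("event has no charged particles")
    return (n_pos - n_neg) / total


def linear_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope r and intercept c for y = r * x + c."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up illustrative bins (not measured values): A_ch centers and dv2 values.
a_ch_bins = [-0.02, 0.0, 0.02]
dv2_bins = [0.001, 0.002, 0.003]
r, c = linear_fit(a_ch_bins, dv2_bins)
print(f"slope r = {r:.3f}, intercept = {c:.4f}")
```

In this framing, a positive slope r (negative particles' elliptic flow growing relative to positive particles' as net positive charge increases) is the trend the article describes.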

The finding, says Aihong Tang, a STAR physicist from Brookhaven Lab, has a few important implications.

“First, seeing evidence for the chiral magnetic wave means the elements required to create the wave must also exist in the quark-gluon plasma. One of these is the chiral magnetic effect–the quantum physics phenomenon that causes the electric charge separation along the axis of the magnetic field–which has been a hotly debated topic in physics. Evidence of the wave is evidence that the chiral magnetic effect also exists,” Tang said.

The chiral magnetic effect is also related to another intriguing observation at RHIC of more-localized charge separation within the quark-gluon plasma. So this new evidence of the wave provides circumstantial support for those earlier findings.

Finally, Tang pointed out that the process resulting in propagation of the chiral magnetic wave requires that “chiral symmetry”–the independent identities of left- and right-handed particles–be “restored.”

“In the ‘ground state’ of quantum chromodynamics (QCD)–the theory that describes the fundamental interactions of quarks and gluons–chiral symmetry is broken, and left- and right-handed particles can transform into one another. So the chiral charge would be eliminated and you wouldn’t see the propagation of the chiral magnetic wave,” said nuclear theorist Dmitri Kharzeev, a physicist at Brookhaven and Stony Brook University. But QCD predicts that when quarks and gluons are deconfined, or set free from protons and neutrons as in a quark-gluon plasma, chiral symmetry is restored. So the observation of the chiral wave provides evidence for chiral symmetry restoration–a key signature that quark-gluon plasma has been created.

“How does deconfinement restore the symmetry? This is one of the main things we want to solve,” Kharzeev said. “We know from the numerical studies of QCD that deconfinement and restoration happen together, which suggests there is some deep relationship. We really want to understand that connection.”

Brookhaven physicist Zhangbu Xu, spokesperson for the STAR collaboration, added, “To improve our ability to search for and understand the chiral effects, we’d like to compare collisions of nuclei that have the same mass number but different numbers of protons–and therefore, different amounts of positive charge (for example, ruthenium, mass number 96 with 44 protons, and zirconium, mass number 96 with 40 protons). That would allow us to vary the strength of the initial magnetic field while keeping all other conditions essentially the same.”
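A quick ratio shows what the proposed isobar comparison would change. This back-of-the-envelope sketch assumes, beyond what the article states, that at the same energy and collision geometry the initial magnetic field scales linearly with the number of protons Z, and that a chiral-magnetic signal would scale roughly as the square of that field.

```python
# Back-of-the-envelope comparison of the two isobars named above.
# Assumptions (not from the article): at fixed collision energy and geometry the
# initial magnetic field scales linearly with nuclear charge Z, and a
# chiral-magnetic signal would scale roughly with the field squared.

RUTHENIUM_Z, ZIRCONIUM_Z, MASS_NUMBER = 44, 40, 96

field_ratio = RUTHENIUM_Z / ZIRCONIUM_Z   # B(Ru) / B(Zr)
signal_ratio = field_ratio ** 2           # if the signal goes like B^2

print(f"A = {MASS_NUMBER}: B ratio ~ {field_ratio:.2f}, B^2 ratio ~ {signal_ratio:.2f}")
# A = 96: B ratio ~ 1.10, B^2 ratio ~ 1.21
```

Because the mass number is identical, the background from the collision itself should be nearly the same, so under these assumptions a difference of roughly 10 to 20 percent is the lever the comparison provides.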


Mars: Boiling water could be carving slopes into the planet’s surface

May 16th, 2016

By Alton Parrish.

Active features observed on the surface of Mars could be the result of liquid water boiling whilst flowing under the low pressure of a thin atmosphere, according to an Open University study published online this week in Nature Geoscience.

The low pressure on the surface of Mars means that water is not stable for long and will either quickly freeze or boil; liquid water therefore exists only very temporarily. The streaks and slopes that have been observed and seen to lengthen on Mars during its summer had previously been attributed to flowing salty water. However, new research indicates the reason for this could be altogether more explosive, as sand particles on the planet’s surface are ejected by the rapidly boiling liquid.

Open University Mars simulation chamber

Scientists at The Open University used their unique Mars simulation chamber to conduct experiments in which water flowed down a slope of martian surface material under martian atmospheric temperatures and pressures. A block of ice was placed on top of a sandy slope; under Earth-like conditions, little change was observed to the slope as the ice melted and trickled downwards.

Dr Manish Patel, who was part of the research team and responsible for the Mars simulation chamber, says: “Water on Mars is generally unstable and it’s this sudden boiling during the flow of the water which is the key process that could be causing these small channels on the surface. This discovery has the potential to change how we interpret these kinds of geomorphological flow features on the surface of Mars, and clearly shows us that there are important differences in how water-related debris flows occur on Earth and Mars.”


Ingestible Robot Operates in Simulated Stomach

May 15th, 2016

By Alton Parrish.

In experiments involving a simulation of the human esophagus and stomach, researchers at MIT, the University of Sheffield, and the Tokyo Institute of Technology have demonstrated a tiny origami robot that can unfold itself from a swallowed capsule and, steered by external magnetic fields, crawl across the stomach wall to remove a swallowed button battery or patch a wound.

The new work, which the researchers are presenting this week at the International Conference on Robotics and Automation, builds on a long sequence of papers on origami robots from the research group of Daniela Rus, the Andrew and Erna Viterbi Professor in MIT’s Department of Electrical Engineering and Computer Science.

Pictured: an example of a capsule and the unfolded origami device.

“It’s really exciting to see our small origami robots doing something with potentially important applications to health care,” says Rus, who also directs MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). “For applications inside the body, we need a small, controllable, untethered robot system. It’s really difficult to control and place a robot inside the body if the robot is attached to a tether.”


Joining Rus on the paper are first author Shuhei Miyashita, who was a postdoc at CSAIL when the work was done and is now a lecturer in electronics at the University of York, in England; Steven Guitron, a graduate student in mechanical engineering; Shuguang Li, a CSAIL postdoc; Kazuhiro Yoshida of Tokyo Institute of Technology, who was visiting MIT on sabbatical when the work was done; and Dana Damian of the University of Sheffield, in England.

Although the new robot is a successor to one reported at the same conference last year, the design of its body is significantly different. Like its predecessor, it can propel itself using what’s called a “stick-slip” motion, in which its appendages stick to a surface through friction when it executes a move, but slip free again when its body flexes to change its weight distribution.

Also like its predecessor — and like several other origami robots from the Rus group — the new robot consists of two layers of structural material sandwiching a material that shrinks when heated. A pattern of slits in the outer layers determines how the robot will fold when the middle layer contracts.

Material difference

The robot’s envisioned use also dictated a host of structural modifications. “Stick-slip only works when, one, the robot is small enough and, two, the robot is stiff enough,” says Guitron. “With the original Mylar design, it was much stiffer than the new design, which is based on a biocompatible material.”

To compensate for the biocompatible material’s relative malleability, the researchers had to come up with a design that required fewer slits. At the same time, the robot’s folds increase its stiffness along certain axes.


But because the stomach is filled with fluids, the robot doesn’t rely entirely on stick-slip motion. “In our calculation, 20 percent of forward motion is by propelling water — thrust — and 80 percent is by stick-slip motion,” says Miyashita. “In this regard, we actively introduced and applied the concept and characteristics of the fin to the body design, which you can see in the relatively flat design.”

It also had to be possible to compress the robot enough that it could fit inside a capsule for swallowing; similarly, when the capsule dissolved, the forces acting on the robot had to be strong enough to cause it to fully unfold. Through a design process that Guitron describes as “mostly trial and error,” the researchers arrived at a rectangular robot with accordion folds perpendicular to its long axis and pinched corners that act as points of traction.

In the center of one of the forward accordion folds is a permanent magnet that responds to changing magnetic fields outside the body, which control the robot’s motion. The forces applied to the robot are principally rotational. A quick rotation will make it spin in place, but a slower rotation will cause it to pivot around one of its fixed feet. In the researchers’ experiments, the robot uses the same magnet to pick up the button battery.

Porcine precedents

The researchers tested about a dozen different possibilities for the structural material before settling on the type of dried pig intestine used in sausage casings. “We spent a lot of time at Asian markets and the Chinatown market looking for materials,” Li says. The shrinking layer is a biodegradable shrink wrap called Biolefin.

To design their synthetic stomach, the researchers bought a pig stomach and tested its mechanical properties. Their model is an open cross-section of the stomach and esophagus, molded from a silicone rubber with the same mechanical profile. A mixture of water and lemon juice simulates the acidic fluids in the stomach.

Every year, 3,500 swallowed button batteries are reported in the U.S. alone. Frequently, the batteries pass through the digestive tract harmlessly, but if they come into prolonged contact with the tissue of the esophagus or stomach, they can cause an electric current that produces hydroxide, which burns the tissue. Miyashita employed a clever strategy to convince Rus that the removal of swallowed button batteries and the treatment of consequent wounds was a compelling application of their origami robot.

“Shuhei bought a piece of ham, and he put the battery on the ham,” Rus says. “Within half an hour, the battery was fully submerged in the ham. So that made me realize that, yes, this is important. If you have a battery in your body, you really want it out as soon as possible.”
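The article does not spell out the chemistry behind the hydroxide burn. The usual explanation is that current leaking through moist tissue electrolyzes water at the battery's negative terminal, producing hydroxide ions; the reaction below is the standard textbook one, shown for orientation rather than taken from the study.

```latex
2\,\mathrm{H_2O} + 2\,e^- \;\longrightarrow\; \mathrm{H_2} + 2\,\mathrm{OH^-}
```

The hydroxide raises the local pH, which is why damage can build up quickly wherever the battery stays lodged against tissue.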

“This concept is both highly creative and highly practical, and it addresses a clinical need in an elegant way,” says Bradley Nelson, a professor of robotics at the Swiss Federal Institute of Technology Zurich. “It is one of the most convincing applications of origami robots that I have seen.”


Space Mission First to Observe Key Interaction Between Magnetic Fields of Earth and Sun

May 15th, 2016

By Alton Parrish.

A NASA mission, with help from University of Maryland (UMD) physicists, is the first ever to observe how magnetic reconnection takes place, a critical step in understanding space weather.

Most people do not give much thought to the Earth’s magnetic field, yet it is every bit as essential to life as air, water and sunlight. The magnetic field provides an invisible but crucial barrier that protects Earth from the solar wind, a stream of charged particles that flows outward from the sun’s outer layers and carries the sun’s magnetic field with it. The interaction between these two magnetic fields can cause explosive storms in the space near Earth, which can knock out satellites and cause problems here on Earth’s surface, despite the protection offered by Earth’s magnetic field.

This artist’s rendition shows the four identical MMS spacecraft flying near the sun-facing boundary of Earth’s magnetic field (blue wavy lines). The MMS mission has revealed the clearest picture yet of the process of magnetic reconnection between the magnetic fields of Earth and the sun — a driving force behind space weather, solar flares and other energetic phenomena.

A new study co-authored by University of Maryland physicists provides the first major results of NASA’s Magnetospheric Multiscale (MMS) mission, including an unprecedented look at the interaction between the magnetic fields of Earth and the sun. The paper describes the first direct and detailed observation of a phenomenon known as magnetic reconnection, which occurs when two opposing magnetic field lines break and reconnect with each other, releasing massive amounts of energy.

The discovery is a major milestone in understanding magnetism and space weather. The research paper appears in the May 13, 2016, issue of the journal Science.

“Imagine two trains traveling toward each other on separate tracks, but the trains are switched to the same track at the last minute,” said James Drake, a professor of physics at UMD and a co-author on the Science study. “Each track represents a magnetic field line from one of the two interacting magnetic fields, while the track switch represents a reconnection event. The resulting crash sends energy out from the reconnection point like a slingshot.”

Evidence suggests that reconnection is a major driving force behind events such as solar flares, coronal mass ejections, magnetic storms, and the auroras observed at both the North and South poles of Earth. Although researchers have tried to study reconnection in the lab and in space for nearly half a century, the MMS mission is the first to directly observe how reconnection happens.

The MMS mission provided more precise observations than ever before. Flying in a pyramid formation at the edge of Earth’s magnetic field with as little as 10 kilometers’ distance between four identical spacecraft, MMS images electrons within the pyramid once every 30 milliseconds. In contrast, MMS’ predecessor, the European Space Agency and NASA’s Cluster II mission, takes measurements once every three seconds–enough time for MMS to make 100 measurements.

“Just looking at the data from MMS is extraordinary. The level of detail allows us to see things that were previously a blur,” explained Drake, who served on the MMS science team and also advised the engineering team on the requirements for MMS instrumentation. “With a time interval of three seconds, seeing reconnection with Cluster II was impossible. But the quality of the MMS data is absolutely inspiring. It’s not clear that there will ever be another mission quite like this one.”

The four MMS spacecraft fly in a tightly controlled tetrahedral (pyramid) shape that can be re-scaled by changing the distances between the spacecraft. MMS can image the behavior of electrons within this tetrahedron once every 30 milliseconds, providing 100 times greater time resolution than previous efforts.

Simply observing reconnection in detail is an important milestone. But a major goal of the MMS mission is to determine how magnetic field lines briefly break, enabling reconnection and energy release to happen. Measuring the behavior of electrons in a reconnection event will enable a more accurate description of how reconnection works; in particular, whether it occurs in a neat and orderly process, or in a turbulent, stormlike swirl of energy and particles.

A clearer picture of the physics of reconnection will also bring us one step closer to understanding space weather–including whether solar flares and magnetic storms follow any sort of predictable pattern like weather here on Earth. Reconnection can also help scientists understand other, more energetic astrophysical phenomena such as magnetars, which are neutron stars with an unusually strong magnetic field.

“Understanding reconnection is relevant to a whole range of scientific questions in solar physics and astrophysics,” said Marc Swisdak, an associate research scientist in UMD’s Institute for Research in Electronics and Applied Physics. Swisdak is not a co-author on the Science paper, but he is actively collaborating with Drake and others on subsequent analyses of the MMS data.

“Reconnection in Earth’s magnetic field is relatively low energy, but we can get a good sense of what is happening if we extrapolate to more energetic systems,” Swisdak added. “The edge of Earth’s magnetic field is an excellent test lab, as it’s just about the only place where we can fly a spacecraft directly through a region where reconnection occurs.”

To date, MMS has focused only on the sun-facing side of Earth’s magnetic field. In the future, the mission is slated to fly to the opposite side to investigate the teardrop-shaped tail of the magnetic field that faces away from the sun.


Wine Could Help Counteract the Negative Impact of High Fat/High Sugar Diets

May 14th, 2016

By Alton Parrish.

Red wine lovers have a new reason to celebrate. Researchers have found a new health benefit of resveratrol, which occurs naturally in blueberries, raspberries, mulberries, grape skins and consequently in red wine.

While studying the effects of resveratrol in the diet of rhesus monkeys, Dr. J.P. Hyatt, an associate professor at Georgetown University, and his team of researchers hypothesized that a resveratrol supplement would counteract the negative impact of a high fat/high sugar diet on the hind leg muscles. In previous animal studies, resveratrol has already been shown to increase the life span of mice and slow the onset of diabetes. In one study, it mirrored the positive effects of aerobic exercise in mice fed a high fat/high sugar diet.

For Dr. Hyatt’s current study, which was published in the open access journal Frontiers in Physiology, a control group of rhesus monkeys was fed a healthy diet and another group was fed a high fat/high sugar diet, half of which also received a resveratrol supplement and half of which did not. The researchers wanted to know how different parts of the body responded to the benefits of resveratrol – specifically the muscles in the back of the leg.

Three types of muscles were examined: a “slow” muscle, a “fast” muscle and a “mixed” muscle. The study showed that each muscle responded differently to the diet and to the addition of resveratrol.

The soleus muscle, a large muscle spanning from the knee to the heel, is considered a “slow” muscle used extensively in standing and walking. Of the three lower hind leg muscles analyzed for this study, the soleus was the most affected by the high fat/high sugar diet and also the most affected by the resveratrol supplements; this may be partly because, on a daily basis, it is used much more than the other two muscles.

In the soleus muscle, myosin, a protein that drives muscle contraction and determines whether a muscle is slow or fast, shifted from slower toward faster forms with a high fat/high sugar diet. The addition of resveratrol to the diet counteracted this shift.

The plantaris muscle, a 5-10 cm long muscle along the back of the calf, did not have a negative response to the high fat/high sugar diet, but it did have a positive response to the addition of resveratrol, with a fast to slow myosin shift. The third muscle was not affected by the diet or addition of resveratrol.

Hyatt said it would be reasonable to expect other slow muscles to respond similarly to the soleus muscle when exposed to a high fat/high sugar diet and resveratrol.

“The maintenance or addition of slow characteristics in soleus and plantaris muscles, respectively, implies that these muscles are far more fatigue resistant than those without resveratrol. Skeletal muscles that are phenotypically slower can sustain longer periods of activity and could contribute to improved physical activity, mobility, or stability, especially in elderly individuals,” he said, when asked if this study could be applied to humans.

While these results are encouraging, and there might be a temptation to keep eating a high fat/high sugar diet and simply add a glass of red wine or a cup of fruit each day, the researchers stress that the importance of a healthy diet cannot be overemphasized. Still, for now, there’s one more reason to have a glass of red wine.


Advanced Astronomical Software Used to Date 2,500-Year-Old Lyric Poem

May 14th, 2016

By Alton Parrish.

Physicists and astronomers from the University of Texas at Arlington have used advanced astronomical software to accurately date lyric poet Sappho’s “Midnight Poem,” which describes the night sky over Greece more than 2,500 years ago.

The scientists described their research in the article “Seasonal dating of Sappho’s ‘Midnight Poem’ revisited,” published today in the Journal of Astronomical History and Heritage. Martin George, former president of the International Planetarium Society, now at the National Astronomical Research Institute of Thailand, also participated in the work.

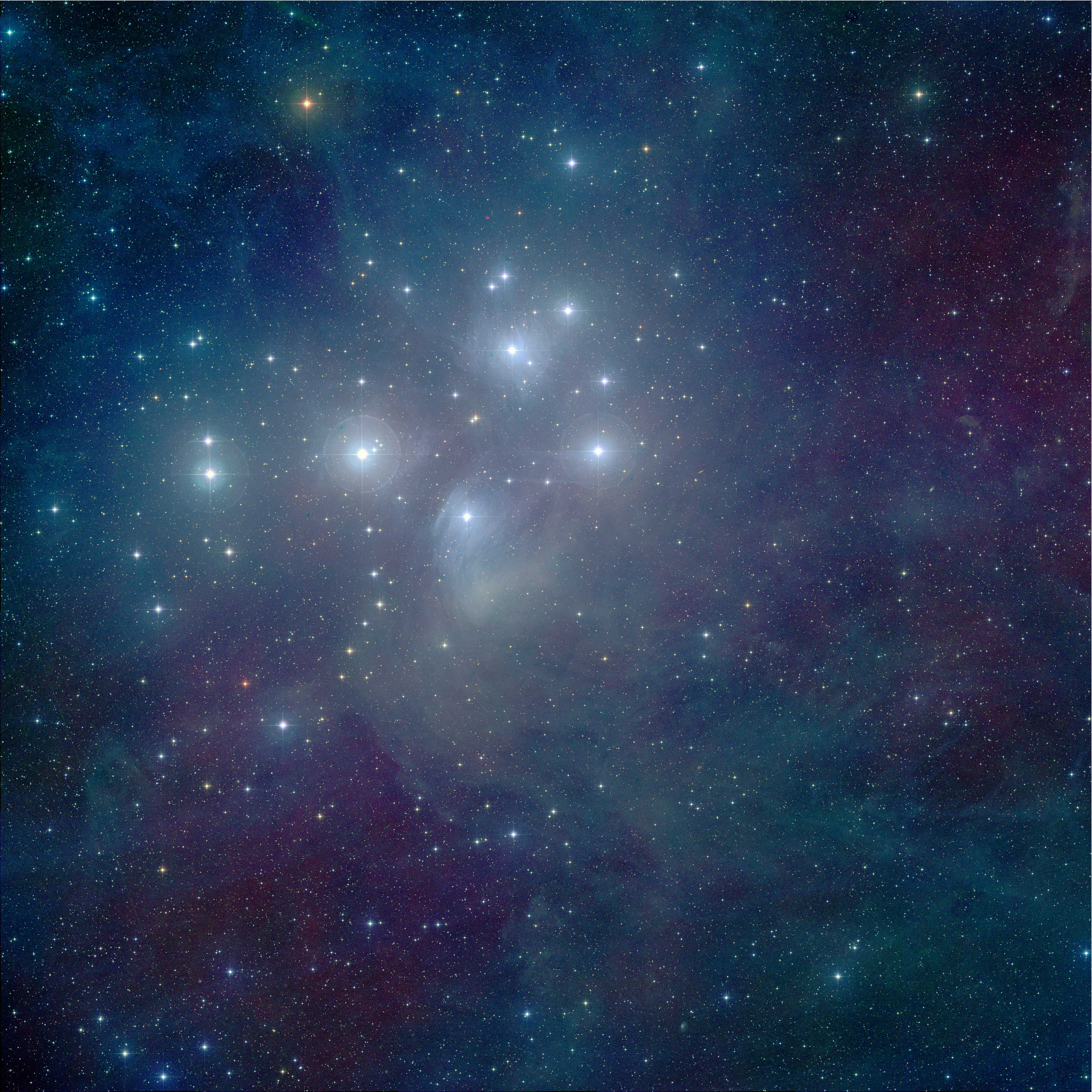

This is a color composite image of the Pleiades from the Digitized Sky Survey.

“This is an example of where the scientific community can make a contribution to knowledge described in important ancient texts,” said Manfred Cuntz, physics professor and lead author of the study. “Estimations had been made for the timing of this poem in the past, but we were able to scientifically confirm the season that corresponds to her specific descriptions of the night sky in the year 570 B.C.”

Sappho’s “Midnight Poem” describes a star cluster known as the Pleiades having set at around midnight, when supposedly observed by her from the Greek island of Lesbos.

The moon has set

And the Pleiades;

It is midnight,

The time is going by,

And I sleep alone.

(Henry Thornton Wharton, 1887:68)

Cuntz and co-author Levent Gurdemir, an astronomer and director of the Planetarium at UTA, used the advanced software Starry Night version 7.3 to identify the earliest date in 570 B.C. on which the Pleiades would have set at midnight or earlier, local time. The Planetarium’s Digistar 5 system can also recreate the night sky of ancient Greece for Sappho’s place and time.

“Use of Planetarium software permits us to simulate the night sky more accurately on any date, past or future, at any location,” said Levent Gurdemir. “This is an example of how we are opening up the Planetarium to research into disciplines beyond astronomy, including geosciences, biology, chemistry, art, literature, architecture, history and even medicine.”

The Starry Night software demonstrated that in 570 B.C., the Pleiades set at midnight on Jan. 25, which would be the earliest date that the poem could relate to. As the year progressed, the Pleiades set progressively earlier.

“The timing question is complex, as at that time they did not have accurate mechanical clocks as we do, only perhaps water clocks,” said Cuntz. “For that reason, we also identified the latest date on which the Pleiades would have been visible to Sappho from that location on different dates some time during the evening.”

The researchers also determined that the last date that the Pleiades would have been seen at the end of astronomical twilight – the moment when the Sun’s altitude is -18 degrees and the sky is regarded as perfectly dark – was March 31.

“From there, we were able to accurately seasonally date this poem to mid-winter and early spring, scientifically confirming earlier estimations by other scholars,” Cuntz said.
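Why the Pleiades set progressively earlier follows from the difference between the solar and the sidereal day. This back-of-the-envelope sketch is not the Starry Night calculation: it assumes a fixed sidereal day, ignores precession and refraction, and treats 570 B.C. as a common (non-leap) year, using a modern non-leap year as a calendar proxy because Python's `datetime` cannot represent B.C. dates. The helper names are hypothetical.

```python
# Rough check of "the Pleiades set progressively earlier": a star returns to the
# same place in the sky after one sidereal day (~23 h 56 min 4 s), so by the
# clock it sets about 3 min 56 s earlier each solar day.
# Assumptions: precession, refraction and the Moon are ignored, and 570 B.C. is
# treated as a common (non-leap) year, so 2015 serves as a calendar proxy.
from datetime import date

SOLAR_DAY_S = 86_400.0
SIDEREAL_DAY_S = 86_164.0905


def setting_shift_minutes(days: int) -> float:
    """How many minutes earlier a star sets after `days` solar days."""
    return days * (SOLAR_DAY_S - SIDEREAL_DAY_S) / 60.0


def clock_time(hours: float) -> str:
    """Format a time of day given in decimal hours as HH:MM."""
    h, m = divmod(round(hours * 60), 60)
    return f"{h:02d}:{m:02d}"


days = (date(2015, 3, 31) - date(2015, 1, 25)).days  # Jan 25 -> Mar 31
shift = setting_shift_minutes(days)
set_time = 24.0 - shift / 60.0  # the cluster set at midnight on Jan 25

print(days, round(shift), clock_time(set_time))  # 65 256 19:44
```

Under these assumptions, a cluster that set at midnight on Jan. 25 would set at roughly 7:44 p.m. by March 31, which is presumably why the late limit is framed in terms of evening visibility after twilight rather than midnight.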

Sappho was the leading female poet of her time and closely rivaled Homer. Her interest in astronomy was not restricted to the “Midnight Poem.” Other examples of her work make references to the Sun, the Moon, and the planet Venus.

“Sappho should be considered an informal contributor to early Greek astronomy as well as to Greek society at large,” Cuntz added. “Not many ancient poets comment on astronomical observations as clearly as she does.”

Morteza Khaledi, dean of UTA’s College of Science, congratulated the researchers on their work, which forms part of UTA’s strategic focus on data-driven discovery within the Strategic Plan 2020: Bold Solutions | Global Impact.

“This research helps to break down the traditional silos between science and the liberal arts, by using high-precision technology to accurately date ancient poetry,” Khaledi said. “It also demonstrates that the Planetarium’s reach can go way beyond astronomy into multiple fields of research.”

Dr. Manfred Cuntz is a professor of physics at UTA and active researcher in solar and stellar astrophysics, as well as astrobiology. In recent years he has focused on extra-solar planets, including stellar habitable zones and orbital stability analyses. He received his Ph.D. from the University of Heidelberg, Germany, in 1988.

Levent Gurdemir received his master of science degree in physics from UTA and is the current director of the university’s Planetarium. UTA uses the facility for research, teaching and community outreach, serving large numbers of K-12 students and members of the public.


Child’s Robot Learning Companion Serves as a Customizable Tutor

May 12th, 2016

By Alton Parrish.

Parents want the best for their children’s education and often complain about large class sizes and the lack of individual attention. A new social robot from MIT helps students learn through personalized interactions.

Goren Gordon, an artificial intelligence researcher from Tel Aviv University who runs the Curiosity Lab there, is no different.

He and his wife spend as much time as they can with their children, but there are still times when their kids are alone or unsupervised. At those times, they’d like their children to have a companion to learn and play with, Gordon says.

That holds even if the companion is a robot.


Working in the Personal Robots Group at MIT, led by Cynthia Breazeal, Gordon was part of a team that developed a socially assistive robot called Tega that is designed to serve as a one-on-one peer learner in or outside of the classroom.

Tega is the newest social robot platform designed and built by a diverse team of engineers, software developers, and artists at the Personal Robots Group at the MIT Media Lab. This robot, with its furry, brightly colored appearance, was developed specifically to enable long-term interactions with children.


Personal Robots Group, MIT Media Lab

Socially assistive robots for education aren’t new, but what makes Tega unique is that it can interpret the emotional responses of the student it is working with and, based on those cues, create a personalized motivational strategy.

Testing the setup in a preschool classroom, the researchers showed that the system can learn and improve itself in response to the unique characteristics of the students it worked with. It proved to be more effective at increasing students’ positive attitude towards the robot and activity than a non-personalized robot assistant.

The team reported its results at the 30th Association for the Advancement of Artificial Intelligence (AAAI) Conference in Phoenix, Arizona, in February.

Tega is the latest in a line of smartphone-based, socially assistive robots developed in the MIT Media Lab. The work is supported by a five-year, $10 million Expeditions in Computing award from the National Science Foundation (NSF), a program that supports long-term, multi-institutional research in areas with the potential for disruptive impact.

The classroom pilot

The researchers piloted the system with 38 students aged three to five in a Boston-area school last year. Each student worked individually with Tega for 15 minutes per session over the course of eight weeks.

Tega uses an Android device to process movement, perception and thinking, and it can respond appropriately to children’s behaviors.


A child plays an interactive language learning game with Tega, a socially assistive robot

Unlike previous iterations, Tega is equipped with a second Android phone running custom software that interprets the emotional content of facial expressions, an approach known as “affective computing.” The software was developed by Affectiva Inc., an NSF-supported company spun off from the work of MIT’s Rosalind Picard.

The students in the trial learned Spanish vocabulary from a tablet computer loaded with a custom-made learning game. Tega served not as a teacher but as a peer learner, encouraging students, providing hints when necessary and even sharing in students’ annoyance or boredom when appropriate.

The system began by mirroring the emotional responses of students, getting excited when they were excited and distracted when they lost focus, an approach that educational theory suggests is successful. However, it went further and tracked the impact of each of these cues on the student.

Over time, it learned how the cues influenced a student’s engagement, happiness and learning successes. As the sessions continued, it ceased to simply mirror the child’s mood and began to personalize its responses in a way that would optimize each student’s experience and achievement.
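The article does not spell out Tega’s learning algorithm. As a minimal sketch of the idea, assuming a simple epsilon-greedy multi-armed bandit (a swapped-in technique, not necessarily the team’s method), the learner below tries different affective cues and keeps a running average of how much each one changes a student’s measured engagement, using an immediate before-and-after comparison. The class and function names, cue names, effect sizes and noise level are all hypothetical, and the initial mirroring phase is omitted.

```python
import random


class CuePersonalizer:
    """Epsilon-greedy bandit that learns which robot cue most raises one student's engagement."""

    def __init__(self, cues, epsilon=0.1, seed=0):
        self.cues = list(cues)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {c: 0 for c in self.cues}
        self.values = {c: 0.0 for c in self.cues}  # running mean of engagement change per cue

    def choose(self):
        # Try every cue once before exploiting what has been learned.
        for c in self.cues:
            if self.counts[c] == 0:
                return c
        # Occasionally explore a random cue; otherwise use the best-looking one.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.cues)
        return self.best_cue()

    def update(self, cue, engagement_before, engagement_after):
        # Reward is the immediate before-and-after change in engagement.
        reward = engagement_after - engagement_before
        self.counts[cue] += 1
        self.values[cue] += (reward - self.values[cue]) / self.counts[cue]  # incremental mean

    def best_cue(self):
        return max(self.cues, key=lambda c: self.values[c])


# Hypothetical effect of each cue on one simulated student's engagement (illustrative only).
TRUE_EFFECT = {"excited": 0.3, "hint": 0.5, "yawn": -0.2, "sad_face": -0.3}


def run_sessions(rounds=500, seed=1):
    noise = random.Random(seed)
    learner = CuePersonalizer(TRUE_EFFECT, epsilon=0.1, seed=seed)
    for _ in range(rounds):
        cue = learner.choose()
        before = noise.uniform(0.3, 0.7)                          # simulated engagement reading
        after = before + TRUE_EFFECT[cue] + noise.gauss(0, 0.05)  # noisy response to the cue
        learner.update(cue, before, after)
    return learner


learner = run_sessions()
print(learner.best_cue(), {c: round(v, 2) for c, v in learner.values.items()})
```

Because the estimates are kept per student, two children who respond differently to the same cue would end up with different preferred responses, and cues like a yawn or a sad face are learned to be counterproductive for this simulated student.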


Personal Robots Group, MIT Media Lab

“We started with a very high-quality approach, and what is amazing is that we were able to show that we could do even better,” Gordon says.

Over the eight weeks, the personalization continued to increase. Compared with a control group that received only the mirroring reaction, students who received the personalized responses were more engaged by the activity, the researchers found.

In addition to tracking long-term impacts of the personalization, they also studied immediate changes that a response from Tega elicited from the student. From these before-and-after responses, they learned that some reactions, like a yawn or a sad face, had the effect of lowering the engagement or happiness of the student — something they had suspected but that had never been studied.

“We know that learning from peers is an important way that children learn not only skills and knowledge, but also attitudes and approaches to learning such as curiosity and resilience to challenge,” says Breazeal, associate professor of Media Arts and director of the Personal Robots Group at the MIT Media Laboratory. “What is so fascinating is that children appear to interact with Tega as a peer-like companion in a way that opens up new opportunities to develop next-generation learning technologies that not only address the cognitive aspects of learning, like learning vocabulary, but the social and affective aspects of learning as well.”

The experiment served as a proof of concept for the idea of personalized educational assistive robots and also for the feasibility of using such robots in a real classroom. The system, which is almost entirely wireless and easy to set up and operate behind a divider in an active classroom, caused very little disruption and was thoroughly embraced by the student participants and by teachers.

“It was amazing to see,” Gordon reports. “After a while the students started hugging it, touching it, making the expression it was making and playing independently with almost no intervention or encouragement.”

Tega is a new platform, developed at MIT, for research in socially assistive robotics. The Tega design expands on MIT’s past work with squash-and-stretch style robots such as DragonBot but introduces new capabilities and a more robust design, intended to support three-month deployments with children.

Although the experiment ran for eight weeks, the personalization process was still improving at the end of that period, suggesting that more time would be needed to arrive at an optimal interaction style.

The researchers plan to improve upon and test the system in a variety of settings, including with students with learning disabilities, for whom one-on-one interaction and assistance are particularly critical and hard to come by.

“A child who is more curious is able to persevere through frustration, can learn with others and will be a more successful lifelong learner,” Breazeal says. “The development of next-generation learning technologies that can support the cognitive, social and emotive aspects of learning in a highly personalized way is thrilling.”


Are Children Like Werewolves? Does the Moon Alter Moods?

May 12th, 2016

By Alton Parrish.

Are children like werewolves? Study of children’s sleeping patterns over lunar cycles.

Always surrounded by an aura of mystery, the moon and its possible influence on human behavior have been objects of ancestral fascination and mythical speculation for centuries. While the full moon cannot turn people into werewolves, some people do blame it for a bad night’s sleep or for physical and mental changes. But is there any science behind these myths?

To establish whether lunar phases affect humans, an international group of researchers studied children to see whether their sleep patterns or daily activities changed across the lunar cycle. The results were published in Frontiers in Pediatrics.

“We considered that performing this research on children would be particularly more relevant because they are more amenable to behavior changes than adults and their sleep needs are greater than adults,” said Dr. Jean-Philippe Chaput, from the Eastern Ontario Research Institute.

The study included 5,812 children from five continents. The children came from a wide range of economic and sociocultural backgrounds, and the analysis accounted for variables such as age, sex, highest parental education, day of measurement, body mass index score, nocturnal sleep duration, level of physical activity and total sedentary time.

Data collection took place over 28 months, roughly the same number of lunar cycles, and each cycle was divided into three phases: full moon, half-moon and new moon. Overall, nocturnal sleep around the full moon was on average 5 minutes (about 1%) shorter than around the new moon. No other activity behaviors changed substantially.

Moon Phases 2016, Northern Hemisphere

“Our study provides compelling evidence that the moon does not seem to influence people’s behavior. The only significant finding was the 1% sleep alteration in full moon, and this is largely explained by our large sample size that maximizes statistical power,” said Chaput.

Sleeping 5 minutes less around the full moon does not pose a considerable threat to health. “Overall, I think we should not be worried about the full moon. Our behaviors are largely influenced by many other factors like genes, education, income and psychosocial aspects rather than by gravitational forces,” he added.
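The reported figures imply a simple calculation. If 5 minutes is about 1% of nightly sleep, the baseline is about 5 / 0.01 = 500 minutes, or roughly 8.3 hours. Chaput’s point about sample size can also be illustrated: the standard error of a mean shrinks with the square root of the sample size, so a small, fixed difference becomes statistically significant once the sample is large enough. This is a one-sample simplification that ignores the study’s actual design and statistical model, and the 60-minute standard deviation is an assumed value, not one reported by the researchers.

```python
import math

# From the article: sleep about 5 minutes shorter, described as roughly a 1% change.
DIFF_MIN = 5.0
RELATIVE_CHANGE = 0.01

# Implied baseline nightly sleep: 5 / 0.01, about 500 minutes (roughly 8.3 hours).
baseline_min = DIFF_MIN / RELATIVE_CHANGE


def z_score(diff, sd, n):
    """Difference divided by its standard error sd / sqrt(n) (one-sample simplification)."""
    return diff / (sd / math.sqrt(n))


HYPOTHETICAL_SD = 60.0  # assumed spread of nightly sleep in minutes; NOT from the study

print(f"implied baseline: {baseline_min:.0f} min")
for n in (100, 1000, 5812):
    z = z_score(DIFF_MIN, HYPOTHETICAL_SD, n)
    # |z| > 1.96 corresponds to two-sided significance at the 5% level.
    print(f"n={n:5d}  z={z:5.2f}  significant at 5%: {abs(z) > 1.96}")
```

Under these assumptions the same 5-minute difference is far from significant with 100 children but clearly significant with 5,812, which is why a statistically significant result can still be clinically trivial.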

Although the results of this study are conclusive, the debate over the moon could prompt further research into whether our biology is in some way synchronized with the lunar cycle, or whether the full moon has a larger influence on people with mental disorders or physical ailments.

“Folklore and even certain instances of occupational lore suggest that mental health issues or behaviors of humans and animals are affected by lunar phases. Whether there is science behind the myth or not, the moon mystery will continue to fascinate civilizations in the years to come,” he said.


Five-Fingered Robot Hand Learns to Get a Grip On Its Own

May 11th, 2016

By Alton Parrish.

Robots today can perform space missions, solve a Rubik’s cube, sort hospital medication and even make pancakes. But most can’t manage the simple act of grasping a pencil and spinning it around to get a solid grip.

Intricate tasks that require dexterous in-hand manipulation — rolling, pivoting, bending, sensing friction and other things humans do effortlessly with our hands — have proved notoriously difficult for robots.

Now, a University of Washington team of computer scientists and engineers has built a robot hand that can not only perform dexterous manipulation but also learn from its own experience without needing humans to direct it. Their latest results are detailed in a paper to be presented May 17 at the IEEE International Conference on Robotics and Automation.

“Hand manipulation is one of the hardest problems that roboticists have to solve,” said lead author Vikash Kumar, a UW doctoral student in computer science and engineering. “A lot of robots today have pretty capable arms but the hand is as simple as a suction cup or maybe a claw or a gripper.”

By contrast, the UW research team spent years custom-building one of the most highly capable five-fingered robot hands in the world. They then developed an accurate simulation model that enables a computer to analyze movements in real time. In their latest demonstration, they apply the model to the hardware and to real-world tasks such as rotating an elongated object.

With each attempt, the robot hand gets progressively more adept at spinning the tube, thanks to machine learning algorithms that help it both model the basic physics involved and plan which actions it should take to achieve the desired result.
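As a toy illustration of “model the physics, then plan,” here is a minimal sketch assuming a one-dimensional stand-in for the tube’s rotation angle, a linear dynamics model fitted by least squares, and a one-step planner. The “true” dynamics, torque limit and all function names are hypothetical; the UW system works with a full five-fingered hand and far richer models, so this only shows the learn-then-plan loop, not the team’s method.

```python
import random


def true_step(angle, torque, rng):
    """Stand-in 'real world' dynamics, unknown to the learner (illustrative numbers only)."""
    return angle + 0.8 * torque - 0.1 + rng.gauss(0, 0.01)


def fit_model(data):
    """Least-squares fit of delta = b * torque + c from (angle, torque, next_angle) samples."""
    us = [u for _, u, _ in data]
    ds = [nxt - x for x, _, nxt in data]
    n = len(data)
    mean_u, mean_d = sum(us) / n, sum(ds) / n
    var_u = sum((u - mean_u) ** 2 for u in us)
    cov = sum((u - mean_u) * (d - mean_d) for u, d in zip(us, ds))
    b = cov / var_u
    c = mean_d - b * mean_u
    return b, c


def plan(angle, target, b, c, max_torque=1.0):
    """One-step planner: the torque the learned model predicts will reach the target, clipped."""
    u = (target - angle - c) / b
    return max(-max_torque, min(max_torque, u))


def learn_to_rotate(target=3.0, attempts=20, seed=0):
    rng = random.Random(seed)
    data = []
    angle = 0.0
    # A few random exploratory moves to seed the model.
    for _ in range(5):
        u = rng.uniform(-1, 1)
        nxt = true_step(angle, u, rng)
        data.append((angle, u, nxt))
        angle = nxt
    angle = 0.0  # start a fresh attempt, keeping the experience gathered so far
    for _ in range(attempts):
        b, c = fit_model(data)          # refit the physics model on all experience so far
        u = plan(angle, target, b, c)   # plan with the current model
        nxt = true_step(angle, u, rng)  # try the action on the simulated "hardware"
        data.append((angle, u, nxt))
        angle = nxt
    return angle, fit_model(data)


final_angle, (b, c) = learn_to_rotate()
print(f"final angle {final_angle:.3f}, learned b={b:.3f}, c={c:.3f}")
```

No movement is scripted by hand: every action comes from the planner querying a model that is refit after each try, which is the contrast with rule-by-rule programming that the researchers draw.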

This autonomous learning approach developed by the UW Movement Control Laboratory contrasts with robotics demonstrations that require people to program each individual movement of the robot’s hand in order to complete a single task.

“Usually people look at a motion and try to determine what exactly needs to happen —the pinky needs to move that way, so we’ll put some rules in and try it and if something doesn’t work, oh the middle finger moved too much and the pen tilted, so we’ll try another rule,” said senior author and lab director Emo Todorov, UW associate professor of computer science and engineering and of applied mathematics.

“It’s almost like making an animated film — it looks real but there was an army of animators tweaking it,” Todorov said. “What we are using is a universal approach that enables the robot to learn from its own movements and requires no tweaking from us.”

UW computer science and engineering doctoral student Vikash Kumar custom built this robot hand, which has 40 tendons, 24 joints and more than 130

Building a dexterous, five-fingered robot hand poses challenges in both design and control. The first is building a mechanical hand with enough speed, strength, responsiveness and flexibility to mimic the basic behaviors of a human hand.

The UW’s dexterous robot hand — which the team built at a cost of roughly $300,000 — uses a Shadow Hand skeleton actuated with a custom pneumatic system and can move faster than a human hand. It is too expensive for routine commercial or industrial use, but it allows the researchers to push core technologies and test innovative control strategies.

“There are a lot of chaotic things going on and collisions happening when you touch an object with different fingers, which is difficult for control algorithms to deal with,” said co-author Sergey Levine, UW assistant professor of computer science and engineering, who worked on the project as a postdoctoral fellow at the University of California, Berkeley. “The approach we took was quite different from a traditional controls approach.”