Posts by AltonParrish:

Computers in Your Clothes: Clothes that Receive and Transmit Digital Information Are Closer to Reality

May 9th, 2016

By Alton Parrish.

Researchers who are working to develop wearable electronics have reached a milestone: They are able to embroider circuits into fabric with 0.1 mm precision—the perfect size to integrate electronic components such as sensors and computer memory devices into clothing.

With this advance, the Ohio State University researchers have taken the next step toward the design of functional textiles—clothes that gather, store, or transmit digital information. With further development, the technology could lead to shirts that act as antennas for your smart phone or tablet, workout clothes that monitor your fitness level, sports equipment that monitors athletes’ performance, a bandage that tells your doctor how well the tissue beneath it is healing—or even a flexible fabric cap that senses activity in the brain.

That last item is one that John Volakis, director of the ElectroScience Laboratory at Ohio State, and research scientist Asimina Kiourti are investigating. The idea is to make brain implants, which are under development to treat conditions from epilepsy to addiction, more comfortable by eliminating the need for external wiring on the patient’s body.

“A revolution is happening in the textile industry,” said Volakis, who is also the Roy & Lois Chope Chair Professor of Electrical Engineering at Ohio State. “We believe that functional textiles are an enabling technology for communications and sensing, and one day even medical applications like imaging and health monitoring.”

Asimina Kiourti.

Recently, he and Kiourti refined their patented fabrication method to create prototype wearables at a fraction of the cost and in half the time they needed only two years ago. With new patents pending, they published the new results in the journal IEEE Antennas and Wireless Propagation Letters.

In Volakis’ lab, the functional textiles, also called “e-textiles,” are created in part on a typical tabletop sewing machine, the kind that fabric artisans and hobbyists might have at home. Like other modern sewing machines, it embroiders thread into fabric automatically based on a pattern loaded via a computer file. The researchers substitute the thread with fine silver metal wires that, once embroidered, feel the same as traditional thread to the touch.

“We started with a technology that is very well known, machine embroidery, and we asked, how can we functionalize embroidered shapes? How do we make them transmit signals at useful frequencies, like for cell phones or health sensors?” Volakis said. “Now, for the first time, we’ve achieved the accuracy of printed metal circuit boards, so our new goal is to take advantage of the precision to incorporate receivers and other electronic components.”

John Volakis (O.S.U )

The shape of the embroidery determines the frequency of operation of the antenna or circuit, explained Kiourti.

The shape of one broadband antenna, for instance, consists of more than half a dozen interlocking geometric shapes, each a little bigger than a fingernail, that form an intricate circle a few inches across. Each piece of the circle transmits energy at a different frequency, so that they cover a broad spectrum of energies when working together—hence the “broadband” capability of the antenna for cell phone and internet access.

“Shape determines function,” she said. “And you never really know what shape you will need from one application to the next. So we wanted to have a technology that could embroider any shape for any application.”

The researchers’ initial goal, Kiourti added, was just to increase the precision of the embroidery as much as possible, which necessitated working with fine silver wire. But that created a problem, in that fine wires couldn’t provide as much surface conductivity as thick wires. So they had to find a way to work the fine thread into embroidery densities and shapes that would boost the surface conductivity and, thus, the antenna/sensor performance.

Previously, the researchers had used silver-coated polymer thread with a 0.5-mm diameter, each thread made up of 600 even finer filaments twisted together. The new threads have a 0.1-mm diameter, made with only seven filaments. Each filament is copper at the center, enameled with pure silver.

They purchase the wire by the spool at a cost of 3 cents per foot; Kiourti estimated that embroidering a single broadband antenna like the one mentioned above consumes about 10 feet of thread, for a material cost of around 30 cents per antenna. That’s 24 times less expensive than when Volakis and Kiourti created similar antennas in 2014.
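The cost arithmetic above can be sketched in a few lines. The per-foot price, thread length and 24-fold ratio come from the article; the implied 2014 cost is only an inference, assuming the ratio refers to material cost per antenna, and is not a figure the researchers reported.

```python
# Figures quoted in the article.
COST_PER_FOOT_CENTS = 3   # price of the fine wire, in cents per foot
FEET_PER_ANTENNA = 10     # approximate thread used by one broadband antenna
COST_RATIO_VS_2014 = 24   # "24 times less expensive" than in 2014


def material_cost_cents(feet, cents_per_foot):
    """Material cost of one embroidered antenna, in cents."""
    return feet * cents_per_foot


cost_now_cents = material_cost_cents(FEET_PER_ANTENNA, COST_PER_FOOT_CENTS)

# Inference only: assumes the 24x ratio applies to material cost per antenna.
implied_2014_cents = cost_now_cents * COST_RATIO_VS_2014

print(f"Material cost today: {cost_now_cents} cents per antenna")
print(f"Implied 2014 cost:   ${implied_2014_cents / 100:.2f} per antenna")
```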

In part, the cost savings comes from using less thread per embroidery. The researchers previously had to stack the thicker thread in two layers, one on top of the other, to make the antenna carry a strong enough electrical signal. But by refining the technique that she and Volakis developed, Kiourti was able to create the new, high-precision antennas in only one embroidered layer of the finer thread. So now the process takes half the time: only about 15 minutes for the broadband antenna mentioned above.

She’s also incorporated some techniques common to microelectronics manufacturing to add parts to embroidered antennas and circuits.

One prototype antenna looks like a spiral and can be embroidered into clothing to improve cell phone signal reception. Another prototype, a stretchable antenna with an integrated RFID (radio-frequency identification) chip embedded in rubber, takes the applications for the technology beyond clothing. (The latter object was part of a study done for a tire manufacturer.)

Yet another circuit resembles the Ohio State Block “O” logo, with non-conductive scarlet and gray thread embroidered among the silver wires “to demonstrate that e-textiles can be both decorative and functional,” Kiourti said.

They may be decorative, but the embroidered antennas and circuits actually work. Tests showed that an embroidered spiral antenna measuring approximately six inches across transmitted signals at frequencies of 1 to 5 GHz with near-perfect efficiency. The performance suggests that the spiral would be well-suited to broadband internet and cellular communication.
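For a sense of scale, the quoted frequency range can be converted to free-space wavelengths and compared with the antenna's width. This is a rough illustration, not the researchers' design method: it assumes free-space propagation and ignores the effect of the fabric and the wearer's body.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition
CM_PER_INCH = 2.54                    # exact


def free_space_wavelength_cm(frequency_hz):
    """Free-space wavelength in centimeters for a given frequency in hertz."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 100


# "Approximately six inches across", per the article.
antenna_width_cm = 6 * CM_PER_INCH

for ghz in (1, 5):
    wavelength_cm = free_space_wavelength_cm(ghz * 1e9)
    print(f"{ghz} GHz: wavelength {wavelength_cm:.1f} cm "
          f"(antenna width {antenna_width_cm:.1f} cm)")
```

Across the 1 to 5 GHz band the wavelength shrinks by a factor of five, which gives some intuition for why a broadband design combines features of several different sizes.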

In other words, the shirt on your back could help boost the reception of the smart phone or tablet that you’re holding – or send signals to your devices with health or athletic performance data.

The work fits well with Ohio State’s role as a founding partner of the Advanced Functional Fabrics of America Institute, a national manufacturing resource center for industry and government. The new institute, which joins some 50 universities and industrial partners, was announced earlier this month by U.S. Secretary of Defense Ashton Carter.

Syscom Advanced Materials in Columbus provided the threads used in Volakis and Kiourti’s initial work. The finer threads used in this study were purchased from Swiss manufacturer Elektrisola. The research is funded by the National Science Foundation, and Ohio State will license the technology for further development.

Until then, Volakis is making out a shopping list for the next phase of the project.

“We want a bigger sewing machine,” he said.


Coastal Birds Know Tides and Moon Phases

May 9th, 2016

By Alton Parrish.

Coastal wading birds shape their lives around the tides, and new research in The Auk: Ornithological Advances shows that different species respond differently to shifting patterns of high and low water according to their size and daily schedules, even following prey cycles tied to the phases of the moon.

Many birds rely on the shallow water of the intertidal zone for foraging, but this habitat appears and disappears as the tide ebbs and flows, with patterns that go through monthly cycles of strong “spring” and weak “neap” tides. Leonardo Calle of Montana State University (formerly Florida Atlantic University) and his colleagues wanted to assess how wading birds respond to these changes, because different species face different constraints–longer-legged birds can forage in deeper water than those with shorter legs, and birds that are only active during the day have different needs than those that will forage day or night.

Great White Heron forages in intertidal habitat.

Changes in the daily schedules of tidal flooding affected smaller, daylight-dependent Little Blue Herons more than Great White Herons, which have longer legs and forage at night when necessary. The abundance of foraging wading birds was also tied to the phases of the moon, but this turned out not to be driven directly by changes in the availability of shallow-water habitat. Instead, the researchers speculate that the birds were responding to movements of their aquatic prey timed to the spring-neap tide cycle, a hypothesis that could be confirmed through a study jointly tracking predator and prey abundance.

Little Blue Heron

“Wading birds are a cog in the wheel that is the intertidal ecosystem, and the intertidal ecosystem is driven by tidal forces–everything depends on tides,” says Calle. “The nuances of how water levels rise and fall over time and space are very important to understand in order to assess how birds feed. Ultimately, this will help us determine if birds have enough area or enough time to fulfill their energy demands and which areas require greater attention or protection.”

Calle and his colleagues conducted their seasonal surveys of foraging wading birds from 2010 to 2013, working from a boat at low tide in Florida’s Great White Heron National Wildlife Refuge. “First the Great White Herons would arrive, followed by the other birds,” says Calle. “Sharks and rays would be on the edge of the flats. Once, not ten feet from the bow of my kayak, a green turtle popped its head above the water and drew a breath just as a grazing manatee drifted by.”

“The model developed by Calle et al. makes a significant contribution to our understanding of the factors that drive the abundance of herons and egrets in tidal areas,” according to John Brzorad of Lenoir-Rhyne University, an expert on egret ecology. “Although it has been long observed that abundance varies with tidal phase, these authors incorporate time of day, moon phase, and season and allow predictions to be made about bird abundance based on modeling hectares available for foraging birds. It will be exciting to apply this model to other tidal areas.”


Leopards Have Lost 75% of Their Historic Range

May 7th, 2016

By Alton Parrish.


Famously difficult to spot, this leopard almost dares the photographer to come take a closer look.

The leopard (Panthera pardus), one of the world’s most iconic big cats, has lost as much as 75 percent of its historic range, according to a paper published today in the scientific journal PeerJ. Conducted by partners including the National Geographic Society’s Big Cats Initiative, international conservation charities the Zoological Society of London (ZSL) and Panthera and the International Union for Conservation of Nature (IUCN) Cat Specialist Group, this study represents the first known attempt to produce a comprehensive analysis of leopards’ status across their entire range and all nine subspecies.

The research found that leopards historically occupied a vast range of approximately 35 million square kilometers (13.5 million square miles) throughout Africa, the Middle East and Asia. Today, however, they are restricted to approximately 8.5 million square kilometers (3.3 million square miles).
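The headline figure follows directly from the two range estimates. A minimal check using only the numbers quoted above (the variable names are illustrative):

```python
# Range estimates quoted in the article, in square kilometers.
HISTORIC_RANGE_KM2 = 35_000_000
CURRENT_RANGE_KM2 = 8_500_000

KM2_PER_MI2 = 1.609344 ** 2  # one mile is exactly 1.609344 km

percent_lost = 100 * (1 - CURRENT_RANGE_KM2 / HISTORIC_RANGE_KM2)
historic_mi2 = HISTORIC_RANGE_KM2 / KM2_PER_MI2
current_mi2 = CURRENT_RANGE_KM2 / KM2_PER_MI2

print(f"Range lost: {percent_lost:.1f}%")
print(f"Historic range: {historic_mi2 / 1e6:.1f} million square miles")
print(f"Current range:  {current_mi2 / 1e6:.1f} million square miles")
```

The result, a loss of roughly three quarters of the historic range, is consistent with the "as much as 75 percent" in the opening paragraph, and the square-mile conversions agree with the figures given in parentheses.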


In the warm glow of evening, a leopard rests on a tree limb

To obtain their findings, the scientists spent three years reviewing more than 1,300 sources on the leopard’s historic and current range. The results appear to confirm conservationists’ suspicions that, while the entire species is not yet as threatened as some other big cats, leopards are facing a multitude of growing threats in the wild, and three subspecies have already been almost completely eradicated.

Lead author Andrew Jacobson, of ZSL’s Institute of Zoology, University College London and the National Geographic Society’s Big Cats Initiative, stated: “The leopard is a famously elusive animal, which is likely why it has taken so long to recognize its global decline. This study represents the first of its kind to assess the status of the leopard across the globe and all nine subspecies. Our results challenge the conventional assumption in many areas that leopards remain relatively abundant and not seriously threatened.”

In addition, the research found that while African leopards face considerable threats, particularly in North and West Africa, leopards have also almost completely disappeared from several regions across Asia, including much of the Arabian Peninsula and vast areas of former range in China and Southeast Asia. The amount of habitat in each of these regions is plummeting, having declined by nearly 98 percent.

A leopard pauses in Pilanesberg National Park, South Africa, deciding between pursuing impala or warthog

“Leopards’ secretive nature, coupled with the occasional, brazen appearance of individual animals within megacities like Mumbai and Johannesburg, perpetuates the misconception that these big cats continue to thrive in the wild — when actually our study underlies the fact that they are increasingly threatened,” said Luke Dollar, co-author and program director of the National Geographic Society’s Big Cats Initiative.

Philipp Henschel, co-author and Lion Program survey coordinator for Panthera, stated: “A severe blind spot has existed in the conservation of the leopard. In just the last 12 months, Panthera has discovered the status of the leopard in Southeast Asia is as perilous as the highly endangered tiger.” Henschel continued: “The international conservation community must double down in support of initiatives protecting the species. Our next steps in this very moment will determine the leopard’s fate.”

Leopard skin, claws and other parts are common items in the global illegal wildlife trade.

Co-author Peter Gerngross, with the Vienna, Austria-based mapping firm BIOGEOMAPS, added: “We began by creating the most detailed reconstruction of the leopard’s historic range to date. This allowed us to compare detailed knowledge on its current distribution with where the leopard used to be and thereby calculate the most accurate estimates of range loss. This research represents a major advancement for leopard science and conservation.”

Leopards are capable of surviving in human-dominated landscapes provided they have sufficient cover, access to wild prey and tolerance from local people. In many areas, however, habitat is converted to farmland and native herbivores are replaced with livestock for growing human populations. This habitat loss, prey decline, conflict with livestock owners, illegal trade in leopard skins and parts and legal trophy hunting are all factors contributing to leopard decline.

Complicating conservation efforts for the leopard, Jacobson noted: “Our work underscores the pressing need to focus more research on the less studied subspecies, three of which have been the subject of fewer than five published papers during the last 15 years. Of these subspecies, one — the Javan leopard (P. p. melas) — is currently classified as critically endangered by the IUCN, while another — the Sri Lankan leopard (P. p. kotiya) — is classified as endangered, highlighting the urgent need to understand what can be done to arrest these worrying declines.”

Despite this troubling picture, some areas of the world inspire hope. Even with historic declines in the Caucasus Mountains and the Russian Far East/Northeast China, leopard populations in these areas appear to have stabilized and may even be rebounding with significant conservation investment through the establishment of protected areas and increased anti-poaching measures.

“Leopards have a broad diet and are remarkably adaptable,” said Joseph Lemeris Jr., a National Geographic Society’s Big Cats Initiative researcher and paper co-author. “Sometimes the elimination of active persecution by government or local communities is enough to jumpstart leopard recovery. However, with many populations ranging across international boundaries, political cooperation is critical.”


Gold Makes World’s Tiniest Engine, A Basis for Future Nano-Machines

May 6th, 2016

By Alton Parrish.

Researchers have developed the world’s tiniest engine – just a few billionths of a meter in size – which uses light to power itself. The nanoscale engine, developed by researchers at the University of Cambridge, could form the basis of future nano-machines that can navigate in water, sense the environment around them, or even enter living cells to fight disease.


The significance of the achievement is that it introduces a previously undefined paradigm for nanoactuation that is incredibly simple yet solves many problems. The approach is optically powered (although other modes are also possible) and potentially offers an unusually large force per unit mass. It looks to be widely generalizable, because the actuating nanotransducers can be selectively bound to designated active sites. The concept can underpin a plethora of future designs, and the researchers already produce a dramatic optical response over large areas at high speed.

The prototype device is made of tiny charged particles of gold, bound together with temperature-responsive polymers in the form of a gel. When the ‘nano-engine’ is heated to a certain temperature with a laser, it stores large amounts of elastic energy in a fraction of a second, as the polymer coatings expel all the water from the gel and collapse. This has the effect of forcing the gold nanoparticles to bind together into tight clusters. But when the device is cooled, the polymers take on water and expand, and the gold nanoparticles are strongly and quickly pushed apart, like a spring. The results are reported in the journal PNAS.

“It’s like an explosion,” said Dr Tao Ding from Cambridge’s Cavendish Laboratory, and the paper’s first author. “We have hundreds of gold balls flying apart in a millionth of a second when water molecules inflate the polymers around them.”

“We know that light can heat up water to power steam engines,” said study co-author Dr Ventsislav Valev, now based at the University of Bath. “But now we can use light to power a piston engine at the nanoscale.”

Nano-machines have long been a dream of scientists and public alike, but since ways to actually make them move have yet to be developed, they have remained in the realm of science fiction. The new method developed by the Cambridge researchers is incredibly simple, but can be extremely fast and exert large forces.

The forces exerted by these tiny devices are several orders of magnitude larger than those for any other previously produced device, with a force per unit weight nearly a hundred times better than any motor or muscle. According to the researchers, the devices are also bio-compatible, cost-effective to manufacture, fast to respond, and energy efficient.

Professor Jeremy Baumberg from the Cavendish Laboratory, who led the research, has named the devices ‘ANTs’, or actuating nano-transducers. “Like real ants, they produce large forces for their weight. The challenge we now face is how to control that force for nano-machinery applications.”

The research suggests how to turn van der Waals energy – the attraction between atoms and molecules – into elastic energy of polymers and release it very quickly. “The whole process is like a nano-spring,” said Baumberg. “The smart part here is we make use of van der Waals attraction of heavy metal particles to set the springs (polymers) and water molecules to release them, which is very reversible and reproducible.”

The team is currently working with Cambridge Enterprise, the University’s commercialization arm, and several other companies with the aim of commercializing this technology for microfluidics bio-applications.


Black Hole 660 Million Times as Massive as Our Sun

May 6th, 2016

By Alton Parrish.

It’s about 660 million times as massive as our sun, and a cloud of gas circles it at about 1.1 million mph.

This supermassive black hole sits at the center of a galaxy dubbed NGC 1332, which is 73 million light years from Earth. And an international team of scientists that includes Rutgers associate professor Andrew J. Baker has measured its mass with unprecedented accuracy.

This is NGC 1332, a galaxy with a black hole at its center whose mass has been measured at high precision by ALMA.

Their groundbreaking observations, made with the revolutionary Atacama Large Millimeter/submillimeter Array (ALMA) in Chile, were published in the Astrophysical Journal Letters. ALMA, the world’s largest astronomical project, is a telescope with 66 radio antennas about 16,400 feet above sea level.

Black holes – the most massive typically found at the centers of galaxies – are so dense that their gravity pulls in anything that’s close enough, including light, said Baker, an associate professor in the Astrophysics Group in Rutgers’ Department of Physics and Astronomy. The department is in the School of Arts and Sciences.

A black hole can form after matter, often from an exploding star, condenses via gravity. Supermassive black holes at the centers of massive galaxies grow by swallowing gas, stars and other black holes. But, said Baker, “just because there’s a black hole in your neighborhood, it does not act like a cosmic vacuum cleaner.”

Stars can come close to a black hole, but as long as they’re in stable orbits and moving fast enough, they won’t enter the black hole, said Baker, who has been at Rutgers since 2006.

“The black hole at the center of the Milky Way, which is the biggest one in our own galaxy, is many thousands of light years away from us,” he said. “We’re not going to get sucked in.”

Scientists think every massive galaxy, like the Milky Way, has a massive black hole at its center, Baker said. “The ubiquity of black holes is one indicator of the profound influence that they have on the formation of the galaxies in which they live,” he said.

Understanding the formation and evolution of galaxies is one of the major challenges for modern astrophysics. The scientists’ findings have important implications for how galaxies and their central supermassive black holes form. The ratio of a black hole’s mass to a galaxy’s mass is important in understanding their makeup, Baker said.

Research suggests that the growth of galaxies and the growth of their black holes are coordinated. And if we want to understand how galaxies form and evolve, we need to understand supermassive black holes, Baker said.

Part of understanding supermassive black holes is measuring their exact masses. That lets scientists determine if a black hole is growing faster or slower than its galaxy. If black hole mass measurements are inaccurate, scientists can’t draw any definitive conclusions, Baker said.

To measure NGC 1332’s central black hole, scientists tapped ALMA’s high-resolution observations of carbon monoxide emissions from a giant disc of cold gas orbiting the hole. They also measured the speed of the gas.
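The link between gas speed and black hole mass can be illustrated with the textbook relation for a circular orbit around a point mass, M = v²r/G. The sketch below is not the team's analysis: it assumes a perfectly circular orbit and ignores the mass of the galaxy's stars, and the orbital radius it prints is merely implied by the two headline numbers rather than reported in the article.

```python
G = 6.67430e-11            # gravitational constant, m^3 kg^-1 s^-2
SOLAR_MASS_KG = 1.989e30   # approximate mass of the sun
MPH_TO_M_PER_S = 0.44704   # exact conversion factor
LIGHT_YEAR_M = 9.4607e15   # approximate length of one light year


def enclosed_mass_kg(speed_m_per_s, radius_m):
    """Point mass needed to hold gas on a circular orbit: M = v^2 * r / G."""
    return speed_m_per_s ** 2 * radius_m / G


def orbit_radius_m(mass_kg, speed_m_per_s):
    """Radius of a circular orbit at a given speed: r = G * M / v^2."""
    return G * mass_kg / speed_m_per_s ** 2


# Headline figures from the article.
black_hole_mass_kg = 660e6 * SOLAR_MASS_KG
gas_speed_m_per_s = 1.1e6 * MPH_TO_M_PER_S

# Implied radius under the point-mass assumption (not a reported value).
radius_m = orbit_radius_m(black_hole_mass_kg, gas_speed_m_per_s)
radius_ly = radius_m / LIGHT_YEAR_M

# Round trip: the speed and radius together recover the input mass.
recovered_solar_masses = (
    enclosed_mass_kg(gas_speed_m_per_s, radius_m) / SOLAR_MASS_KG
)

print(f"Gas speed: {gas_speed_m_per_s / 1000:.0f} km/s")
print(f"Implied orbital radius: {radius_ly:.0f} light years")
print(f"Recovered mass: {recovered_solar_masses:.3g} solar masses")
```

Because the mass scales with the square of the speed, small errors in the measured gas velocity translate into larger errors in the mass, which is one reason high-resolution observations matter.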

“This has been a very active area of research for the last 20 years, trying to characterize the masses of black holes at the centers of galaxies,” said Baker, who began studying black holes as a graduate student. “This is a case where new instrumentation has allowed us to make an important new advance in terms of what we can say scientifically.”

He and his coauthors recently submitted a proposal to use ALMA to observe other massive black holes. Use of ALMA is granted after an annual international competition of proposals, according to Baker.

ALMA is an international partnership of the National Radio Astronomy Observatory, European Southern Observatory, and the National Astronomical Observatory of Japan in cooperation with Chile. U.S. access to ALMA comes via the National Radio Astronomy Observatory, which is supported by the National Science Foundation.

Coauthors of the study of NGC 1332’s central black hole include: lead author Aaron J. Barth, Benjamin D. Boizelle and David A. Buote of the University of California, Irvine; Jeremy Darling of the University of Colorado; Baker; Luis C. Ho of the Kavli Institute for Astronomy and Astrophysics at Peking University in Beijing, China; and Jonelle L. Walsh of Texas A&M University.


Cheaper, More Reliable Solar Power with New World Record for Polymer Solar Cells

May 4th, 2016

By Alton Parrish.

Polymer solar cells can be even cheaper and more reliable thanks to a breakthrough by scientists at Linköping University and the Chinese Academy of Sciences (CAS). This work is about avoiding costly and unstable fullerenes.

Polymer solar cells have in recent years emerged as a low cost alternative to silicon solar cells. In order to obtain high efficiency, fullerenes are usually required in polymer solar cells to separate charge carriers. However, fullerenes are unstable under illumination, and form large crystals at high temperatures.

Polymer solar cells manufactured using low-cost roll-to-roll printing technology, demonstrated here by professors Olle Inganäs (right) and Shimelis Admassie.

Now, a team of chemists led by Professor Jianhui Hou at the CAS has set a new world record for fullerene-free polymer solar cells by developing a unique combination of a polymer called PBDB-T and a small molecule called ITIC. With this combination, the sun’s energy is converted with an efficiency of 11%, a value that beats most solar cells with fullerenes and all those without.

Feng Gao, together with his colleagues Olle Inganäs and Deping Qian at Linköping University, has characterized the loss spectroscopy of the photovoltage (Voc), a key figure for solar cells, and proposed approaches to further improve device performance.

The two research groups are now presenting their results in the high-profile journal Advanced Materials.

“We have demonstrated that it is possible to achieve a high efficiency without using fullerene, and that such solar cells are also highly stable to heat. Because solar cells are working under constant solar radiation, good thermal stability is very important,” said Feng Gao, a physicist at the Department of Physics, Chemistry and Biology, Linköping University.

“The combination of high efficiency and good thermal stability suggests that polymer solar cells, which can be easily manufactured using low-cost roll-to-roll printing technology, now come a step closer to commercialization,” said Feng Gao.


Mediterranean Style Diet Might Slow Down Ageing and Reduce Bone Loss

May 4th, 2016

By Alton Parrish.

Sticking to a Mediterranean style diet might slow down ageing, finds the EU funded project NU-AGE. At a recent conference in Brussels, researchers reported that a NU-AGE Mediterranean style diet, tested in the project, significantly decreased levels of C-reactive protein, one of the main inflammatory markers linked with the ageing process.

“This is the first project that goes in such depths into the effects of the Mediterranean diet on health of elderly population. We are using the most powerful and advanced techniques including metabolomics, transcriptomics, genomics and the analysis of the gut microbiota to understand what effect the Mediterranean style diet has on the population of over 65 years old,” said Prof. Claudio Franceschi, project coordinator from the University of Bologna, Italy.

A new personally tailored, Mediterranean style diet was given to volunteers to assess whether it can slow down the ageing process. The project was conducted in five European countries (France, Italy, the Netherlands, Poland and the UK) and involved 1142 participants. The researchers found differences between men and women as well as among participants from the different countries. Volunteers from the five countries differed in genetics, body composition, compliance with the study, response to diet, blood measurements, cytomegalovirus positivity and inflammatory parameters.

NU-AGE’s researchers also looked at socio-economic factors of food choices and health information as well as the most significant barriers to the improvement of the quality of a diet. As with biological markers, considerable country differences were seen when comparing several aspects, for instance on the overall nutrition knowledge. In France and the UK, over 70% of participants thought they had high nutrition knowledge while in Poland only 31% believed so.

Also, when elderly people buy food products, attitudes towards nutrition information on food labels differ by country (what is important for a person from Poland may not be as important for a person from Italy). In addition, participants from different countries understand and trust nutrition claims differently. Participants from the Netherlands and the UK appeared to understand nutrition claims better than participants from France, followed by those from Poland and Italy. In terms of trust, over 40% of Italian participants thought that nutrition claims on food products are reliable, while only 20% of British participants had the same opinion. To the experts’ surprise, no differences in nutrition knowledge were observed between men and women.

“The NU-AGE conference was a great success and allowed us to share the most recent results of the project as well as decide on the next steps and future work,” concluded Franceschi.


What Lies beneath West Antarctica?

May 3rd, 2016

By Alton Parrish.

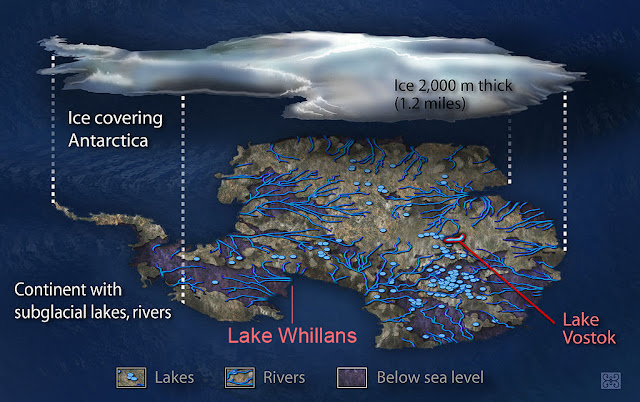

Three recent publications by early career researchers at three different institutions across the country provide the first look into the biogeochemistry, geophysics and geology of Subglacial Lake Whillans, which lies 800 meters (2,600 feet) beneath the West Antarctic Ice Sheet.

The findings stem from the Whillans Ice Stream Subglacial Access Research Drilling (WISSARD) project funded by the National Science Foundation (NSF).

Collectively, the researchers describe a wetland-like area beneath the ice. Subglacial Lake Whillans is primarily fed by ice melt, but also contains small amounts of seawater from ancient marine sediments on the lake bed. The lake waters periodically drain through channels to the ocean, but with insufficient energy to carry much sediment.

The new insights will not only allow scientists to better understand the biogeochemistry and mechanics of the lake itself, but will also allow them to use that information to improve models of how Antarctic subglacial lake systems interact with the ice above and sediment below. These models will help assess the contribution that subglacial lakes may have to the flow of water from the continent to the ocean, and therefore to sea-level rise.

In recent decades, researchers, primarily using airborne radar and satellite laser observations, have discovered that a continental system of rivers and lakes — some similar in size to North America’s Great Lakes — exists beneath the miles-thick Antarctic ice sheet. These findings represent some of the very first methodical descriptions of one of those lakes based on actual sampling of water and sediments.

In January 2013, the WISSARD project successfully drilled through the ice sheet to reach Subglacial Lake Whillans, retrieving water and sediment samples from a body of water that had been isolated from direct contact with the atmosphere for many thousands of years. The team used a customized, clean hot-water drill to collect their samples without contaminating the pristine environment.

WISSARD was preceded by ongoing field research that began as early as 2007 to place this individual lake in context with the larger subglacial water system. Those investigations and the sampling of Subglacial Lake Whillans were funded, and the complex logistics provided, by the NSF-managed U.S. Antarctic Program.

Some of the initial analyses of the samples taken from the lake are highlighted in the recent papers, published in three different journals by three scientists whose graduate work was funded, at least in part, through the WISSARD project. They used an array of biogeochemical, geophysical and geological methods to provide unique insights into the dynamics of the subglacial system.

In a paper published in Geophysical Research Letters, lead author Matthew Siegfried, of the Scripps Institution of Oceanography at the University of California, San Diego, and his colleagues report that Global Positioning System (GPS) data gathered over a period of five years indicate that periodic drainage of the lake can increase velocity at the base of the ice sheet and speed up movement of the ice by as much as four percent in episodic bursts, each of which can last for several months.

The authors suggest that these short-term dynamics need to be better understood to help refine prediction of future, long-term ice sheet changes.

In a second paper, published in Geology, lead author Alexander Michaud, of Montana State University, and his colleagues (including two other Montana State WISSARD-trained students, graduate student Trista Vick-Majors and undergraduate student Will van Gelder) used data taken from a 38-centimeter (15-inch) long core of lake sediment to characterize the water chemistry in the lake and its sediments.

Their findings indicate that lake water comes primarily from melting at the base of the ice sheet covering the lake, with a minor contribution from seawater, which was trapped in sediments beneath the ice sheet during the last interglacial period, when the Antarctic ice sheet had retreated. This ancient, isolated reservoir of ocean water continues to affect the biogeochemistry of this lake system. This new finding contrasts with previous studies from neighboring ice streams, where water extracted from subglacial sediments did not appear to have a discernable marine signature.

In the third paper, published in the journal Earth and Planetary Science Letters, lead author Timothy Hodson of Northern Illinois University and his colleagues examined another sediment core taken from the lake to discover more about the relationship between the ice sheet, subglacial hydrology and underlying sediments.

Their findings show that even though floods pass through the lake from time to time, the flow is not powerful enough to erode extensive drainage channels, like the rivers that drain much of the Earth’s surface. Rather, the environment beneath this portion of the ice sheet is somewhat similar to a wetland within a coastal plain, where bodies of water tend to be broad and shallow and where water flows gradually.

Together, these new publications highlight an environment where geology, hydrology, biology and glaciology all interact to create a dynamic subglacial system, which can have global impacts.

Helen Amanda Fricker, a WISSARD principal investigator and a professor of geophysics at Scripps, who initially discovered Subglacial Lake Whillans in 2007 from satellite data, said: “It is amazing to think that we did not know that this lake even existed until a decade ago. It is exciting to see such a rich dataset from the lake, and these new data are helping us understand how lakes function as part of the ice-sheet system.”

Understanding and quantifying this, and similar, systems, she added, requires training a new generation of scientists who can cross disciplinary boundaries, as exemplified by the WISSARD project.


Lock-Pick Malware Hacks Smart Home Systems, Gets the PIN Code for the Front Door and More

May 3rd, 2016

By Alton Parrish.

Smart homes may not be so smart yet.

Cybersecurity researchers at the University of Michigan were able to hack into the leading “smart home” automation system and essentially get the PIN code to a home’s front door.

Their “lock-pick malware app” was one of four attacks that the cybersecurity researchers leveled at an experimental set-up of Samsung’s SmartThings, a top-selling Internet of Things platform for consumers. The work is believed to be the first platform-wide study of a real-world connected home system. The researchers didn’t like what they saw.

“At least today, with the one public IoT software platform we looked at, which has been around for several years, there are significant design vulnerabilities from a security perspective,” said Atul Prakash, U-M professor of computer science and engineering. “I would say it’s okay to use as a hobby right now, but I wouldn’t use it where security is paramount.”

Earlence Fernandes, a doctoral student in computer science and engineering who led the study, said that “letting it control your window shades is probably fine.”

“One way to think about it is if you’d hand over control of the connected devices in your home to someone you don’t trust and then imagine the worst they could do with that and consider whether you’re okay with someone having that level of control,” he said.

Regardless of how safe individual devices are or claim to be, new vulnerabilities form when hardware like electronic locks, thermostats, ovens, sprinklers, lights and motion sensors are networked and set up to be controlled remotely. That’s the convenience these systems offer. And consumers are interested in that.

As a testament to SmartThings’ growing use, its Android companion app that lets you manage your connected home devices remotely has been downloaded more than 100,000 times. SmartThings’ app store, where third-party developers can contribute SmartApps that run in the platform’s cloud and let users customize functions, holds more than 500 apps.

The researchers performed a security analysis of the SmartThings programming framework and, to show the impact of the flaws they found, conducted four successful proof-of-concept attacks.

They demonstrated a SmartApp that eavesdropped on someone setting a new PIN code for a door lock, and then sent that PIN in a text message to a potential hacker. The SmartApp, which they called a “lock-pick malware app,” was disguised as a battery level monitor and only expressed the need for that capability in its code.

As an example, they showed that an existing, highly rated SmartApp could be remotely exploited to virtually make a spare door key by programming an additional PIN into the electronic lock. The exploited SmartApp was not originally designed to program PIN codes into locks.

They showed that one SmartApp could turn off “vacation mode” in a separate app that lets you program the timing of lights, blinds, etc., while you’re away to help secure the home.

They demonstrated that a fire alarm could be made to go off by any SmartApp injecting false messages.

How is all this possible? The security loopholes the researchers uncovered fall into a few categories. One common problem is that the platform grants its SmartApps too much access to devices and to the messages those devices generate. The researchers call this “over-privilege.”

“The access SmartThings grants by default is at a full device level, rather than any narrower,” Prakash said. “As an analogy, say you give someone permission to change the lightbulb in your office, but the person also ends up getting access to your entire office, including the contents of your filing cabinets.”

More than 40 percent of the nearly 500 apps they examined were granted capabilities the developers did not specify in their code. That’s how the researchers could eavesdrop on setting of lock PIN codes.
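The over-privilege problem described above can be illustrated with a toy model. This is a minimal sketch, not the SmartThings API: the `Device` class, the `visible_events` function, and the capability names `battery` and `pin_code` are all hypothetical and chosen only to mirror the battery-monitor example in the article.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


# Hypothetical model (not the SmartThings API): a device exposes several
# capabilities, and each capability produces its own stream of events.
@dataclass
class Device:
    name: str
    events: Dict[str, List[str]] = field(default_factory=dict)

    def emit(self, capability: str, message: str) -> None:
        self.events.setdefault(capability, []).append(message)


def visible_events(device: Device, requested: Set[str], device_level: bool) -> List[str]:
    """Return the events an app is able to read.

    device_level=True mimics a coarse grant: asking for one capability
    exposes every event the device produces.
    device_level=False mimics a narrower, capability-scoped grant.
    """
    if device_level:
        return [m for msgs in device.events.values() for m in msgs]
    return [m for cap in requested for m in device.events.get(cap, [])]


lock = Device("front-door lock")
lock.emit("battery", "battery=87%")
lock.emit("pin_code", "codeChanged pin=4321")

requested = {"battery"}  # all that the "battery monitor" app declares
coarse = visible_events(lock, requested, device_level=True)
scoped = visible_events(lock, requested, device_level=False)

print("device-level grant:", coarse)      # includes the PIN event
print("capability-level grant:", scoped)  # battery events only
```

With the coarse grant, an app that only declares a need for battery data can still read the PIN-change event, which is the kind of leak the lock-pick demonstration relied on.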

The researchers also found that it is possible for app developers to deploy an authentication method called OAuth incorrectly. This flaw, in combination with SmartApps being over-privileged, allowed the hackers to program their own PIN code into the lock–to make their own secret spare key.

Finally, the “event subsystem” on the platform is insecure. This is the stream of messages devices generate as they’re programmed and carry out those instructions. The researchers were able to inject erroneous events to trick devices. That’s how they managed the fire alarm and flipped the switch on vacation mode.

These results have implications for all smart home systems, and even the broader Internet of Things.

“The bottom line is that it’s not easy to secure these systems,” Prakash said. “There are multiple layers in the software stack and we found vulnerabilities across them, making fixes difficult.”

The researchers told SmartThings about these issues in December 2015 and the company is working on fixes. The researchers rechecked a few weeks ago if a lock’s PIN code could still be snooped and reprogrammed by a potential hacker, and it still could. In a statement, SmartThings officials say they’re continuing to explore “long-term, automated, defensive capabilities to address these vulnerabilities.” They’re also analyzing old and new apps in an effort to ensure that appropriate authentication is put in place, among other steps.

Jaeyeon Jung, with Microsoft Research, also contributed to this work. The researchers will present a paper on the findings, titled “Security Analysis of Emerging Smart Home Applications,” May 24 at the IEEE Symposium on Security and Privacy in San Jose.


Clashing with Einstein: Spacetime Becomes Granular below the Planck Scale

May 2nd, 2016

By Alton Parrish.

Our experience of space-time is that of a continuous object, without gaps or discontinuities, just as it is described by classical physics. For some quantum gravity models, however, the texture of space-time is “granular” at tiny scales (below the so-called Planck scale, 10⁻³³ cm), as if it were a variable mesh of solids and voids (or a complex foam). One of the great problems of physics today is to understand the passage from a continuous to a discrete description of spacetime: is there an abrupt change or is there a gradual transition? Where does the change occur?

The separation between one world and the other creates problems for physicists: for example, how can we describe gravity – explained so well by classical physics – according to quantum mechanics? Quantum gravity is in fact a field of study in which no consolidated and shared theories exist as yet. There are, however, “scenarios”, which offer possible interpretations of quantum gravity subject to different constraints, and which await experimental confirmation or confutation.

One of the problems to be solved in this respect is that if space-time is granular beyond a certain scale it means that there is a “basic scale”, a fundamental unit that cannot be broken down into anything smaller, a hypothesis that clashes with Einstein’s theory of special relativity.

Imagine holding a ruler in one hand: according to special relativity, to an observer moving in a straight line at a constant speed (close to the speed of light) relative to you, the ruler would appear shorter. But what happens if the ruler has the length of the fundamental scale? For special relativity, the ruler would still appear shorter than this unit of measurement. Special relativity is therefore clearly incompatible with the introduction of a basic graininess of spacetime. Suggesting the existence of this basic scale, say the physicists, means violating Lorentz invariance, the fundamental tenet of special relativity.
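The ruler argument can be made concrete with the standard length-contraction formula of special relativity. This is a textbook result, not something taken from the study discussed here, and the symbol $\ell_{\min}$ for the hypothetical fundamental length is introduced only for illustration:

$$L = L_0 \sqrt{1 - \frac{v^2}{c^2}}$$

Here $L_0$ is the ruler's length at rest and $v$ is the observer's speed. For an observer moving at $v = 0.8c$, the ruler appears to have length $0.6\,L_0$. If $L_0 = \ell_{\min}$, then $L < \ell_{\min}$ for any $v > 0$, so, at least on this simple reading, a smallest possible length cannot be the same for all inertial observers.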

So how can the two be reconciled? Physicists can either hypothesize violations of Lorentz invariance, but have to satisfy very strict constraints (and this has been the preferred approach so far), or they must find a way to avoid the violation and find a scenario that is compatible with both granularity and special relativity. This scenario is in fact implemented by some quantum gravity models such as String Field Theory and Causal Set Theory. The problem to be addressed, however, was how to test their predictions experimentally given that the effects of these theories are much less apparent than are those of the models that violate special relativity.

One solution to this impasse has now been put forward by Stefano Liberati, SISSA professor, and colleagues in their latest publication. The study was conducted with the participation of researchers from the LENS in Florence (Francesco Marin and Francesco Marino) and from the INFN in Padua (Antonello Ortolan). Other SISSA scientists taking part in the study, in addition to Liberati, were PhD student Alessio Belenchia and postdoc Dionigi Benincasa. The research was funded by a grant of the John Templeton Foundation.

“We respect Lorentz invariance, but everything comes at a price, which in this case is the introduction of non-local effects”, comments Liberati. The scenario studied by Liberati and colleagues in fact salvages special relativity but introduces the possibility that physics at a certain point in space-time can be affected by what happens not only in proximity to that point but also at regions very far from it. “Clearly we do not violate causality nor do we presuppose information that travels faster than light”, points out the scientist. “We do, however, introduce a need to know the global structure so as to understand what’s going on at a local level”.

From theory to facts

There’s something else that makes Liberati and colleagues’ model almost unique, and no doubt highly precious: it is formulated in such a way as to make experimental testing possible. “To develop our reasoning we worked side by side with the experimental physicists of the Florence LENS. We are in fact already working on developing the experiments”. With these measurements, Liberati and colleagues may be able to identify the boundary, or transition zone, where space-time becomes granular and physics non-local.

“At LENS they’re now building a quantum harmonic oscillator: a silicon chip weighing a few micrograms which, after being cooled to temperatures close to absolute zero, is illuminated with a laser light and starts to oscillate harmonically,” explains Liberati. “Our theoretical model accommodates the possibility of testing non-local effects on quantum objects having a non-negligible mass”. This is an important aspect: a theoretical scenario that accounts for quantum effects without violating special relativity also implies that these effects at our scales must necessarily be very small (otherwise we would already have observed them). “In order to test them, we need to be able to observe them in some way or other. According to our model, it is possible to see the effects in ‘borderline’ objects, that is, objects that are undeniably quantum objects but having a size where the mass – i.e., the ‘charge’ associated with gravity (as electrical charge is associated with electrical field) – is still substantial.”

“On the basis of the proposed model, we formulated predictions about how the system would oscillate”, says Liberati. “Two predictions, to be precise: one function that describes the system without non-local effects and one that describes it with non-local effects”. The model is particularly robust since, as Liberati explains, the difference in the pattern described in the two cases cannot be generated by environmental influences on the oscillator.

“So it’s a ‘win-win’ situation: if we don’t see the effect, we can raise the bar on the energies at which to look for the transition. Above all, the experiments being prepared should be able to push the constraints on the non-locality scale to the Planck scale. In this case, we go as far as to exclude these scenarios with non-locality. And this in itself would be a good result, as we would be cutting down the number of possible theoretical scenarios”, concludes Liberati. “If on the other hand we were to observe the effect, well, in that case we would be confirming the existence of non-local effects, thus paving the way for an altogether new physics.”


New Study: Fossil Fuels Could Be Phased Out Worldwide In A Decade

May 2nd, 2016

By Alton Parrish.

The worldwide reliance on burning fossil fuels to create energy could be phased out in a decade, according to an article published by a major energy think tank in the UK.

Professor Benjamin Sovacool, Director of the Sussex Energy Group at the University of Sussex, believes that the next great energy revolution could take place in a fraction of the time of major changes in the past.


But it would take a collaborative, interdisciplinary, multi-scalar effort to get there, he warns. And that effort must learn from the trials and tribulations of previous energy systems and technology transitions.

In a paper published in the peer-reviewed journal Energy Research & Social Science, Professor Sovacool analyses energy transitions throughout history and argues that looking only towards the past can often paint an unnecessarily bleak picture.

Moving from wood to coal in Europe, for example, took between 96 and 160 years, whereas electricity took 47 to 69 years to enter into mainstream use.

But this time the future could be different, he says – the scarcity of resources, the threat of climate change and vastly improved technological learning and innovation could greatly accelerate a global shift to a cleaner energy future.

The study highlights numerous examples of speedier transitions that are often overlooked by analysts. For example, Ontario completed a shift away from coal between 2003 and 2014; a major household energy programme in Indonesia took just three years to move two-thirds of the population from kerosene stoves to LPG stoves; and France’s nuclear power programme saw supply rocket from four per cent of the electricity supply market in 1970 to 40 per cent in 1982.

Each of these cases has in common strong government intervention coupled with shifts in consumer behaviour, often driven by incentives and pressure from stakeholders.

Professor Sovacool says:

“The mainstream view of energy transitions as long, protracted affairs, often taking decades or centuries to occur, is not always supported by the evidence.

“Moving to a new, cleaner energy system would require significant shifts in technology, political regulations, tariffs and pricing regimes, and the behaviour of users and adopters.

“Left to evolve by itself – as it has largely been in the past – this can indeed take many decades. A lot of stars have to align all at once.

“But we have learnt a sufficient amount from previous transitions that I believe future transformations can happen much more rapidly.”

In sum, although the study suggests that the historical record can be instructive in shaping our understanding of macro and micro energy transitions, it need not be predictive.


Robots Successfully Tend Tree Nursery

April 30th, 2016

By Alton Parrish.

Very specific conditions are needed for a tiny seed to grow into a mighty tree. Providing these conditions helps to preserve biodiversity, as plants produced from cuttings are essentially clones. EU-funded researchers have developed an innovative propagation unit where plantlets can thrive, along with tailor-made growth protocols for many species.

The Zephyr project has developed a new, zero-impact growth chamber for forest plants: a sustainable controlled environment where tender shoots can thrive under the watchful “eyes” of a robotic nursery assistant. Key components of the new system include a rotating set of trays under an array of LED lamps, a robotic arm equipped with a camera, and wireless microsensors that keep tabs on the plants.

The project is due to end in November 2015, and the partners are currently putting the final touches to their system. Standard growth chambers based on their prototype could be available within a year, says project manager Carlo Polidori, and customised units designed to meet specific requirements will also be on offer.

New growth, firmly rooted in research

Many of the partners in the Zephyr consortium are SMEs that had already collaborated in earlier EU-funded projects, shaping some of the components on which the proposed system relies. Further crucial parts, such as the stereoscopic camera, were developed elsewhere, and Zephyr contributed the microsensors.

“The real innovation in Zephyr is putting all these things together to produce a very competitive, highly automated unit,” says Polidori. In contrast to other growth chambers used in silviculture, Zephyr’s prototype uses neither pesticides nor fertilisers, he notes.

It is also greener, requiring far less water, soil, energy and space, he adds. Moisture is recycled, plants are grown in individual pots containing the optimal amount of substrate, and instead of the 10 overhead lamps required by comparable systems, it relies on a mere 3, which are powered by solar panels. The combination of wireless microsensors and camera inspection means that conditions in the chamber can be monitored remotely.

More bark for the buck

One of the Zephyr growth chamber’s particular strengths is that it provides uniform conditions, Polidori notes. In the current, “static” chambers, treelings remain in the same spot for large stretches of time, and some are thus nearer to key components, such as lamps, than others.

In the prototype unit, all seedlings in a batch benefit from the same amounts of light, moisture and warmth, he adds. Seedlings are placed on revolving trays, taking turns in the best spots. The system thus produces plantlets of consistent quality, with the strong roots they will need to survive out in the wild.

And, better yet, it can produce them just in time, Polidori adds. Seedlings can thus be made available at the very beginning of the planting season, giving them plenty of time to get established before the days get short and cold.

In addition to the actual chamber, Zephyr has produced growth protocols for a wide variety of species. This guidance notably details the type of soil required for individual species, obviating the need for fertilisers, Polidori explains. The partners also use specific spectra to boost plant growth.

It’s a compelling system, but it’s not yet out of the woods. As a next step, the partners intend to develop the prototype into a reasonably priced standard module, which according to Polidori could be coming to a forest near you by late 2016. The prototype, he explains, contains particularly sophisticated versions of some components, meeting research needs that aren’t relevant to production environments. Customised units including such parts will, however, be available to clients with specific requirements.

As a further advance, the partners are considering an innovative business model for the commercialisation of their system. Known as a “distributed company”, this arrangement will enable the entities involved to cooperate without setting up a new company, Polidori notes. Zephyr may thus break new ground not just for plant propagation, but also for the marketing of knowledge-based products and services, stimulating growth in more ways than one.


Winds Gusting To 43,000 Miles Per Second Created by Mysterious Binary Systems

April 30th, 2016

By Alton Parrish.

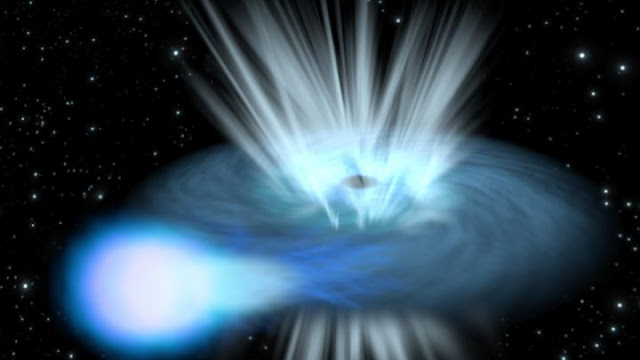

Two black holes in nearby galaxies have been observed devouring their companion stars at a rate exceeding classically understood limits, and in the process, kicking out matter into surrounding space at astonishing speeds of around a quarter the speed of light.

The researchers, from the University of Cambridge, used data from the European Space Agency’s (ESA) XMM-Newton space observatory to reveal for the first time strong winds gusting at very high speeds from two mysterious sources of x-ray radiation. The discovery, published in the journal Nature, confirms that these sources conceal a compact object pulling in matter at extraordinarily high rates.

Artist’s impression depicting a compact object – either a black hole or a neutron star – feeding on gas from a companion star in a binary system.

When observing the Universe at x-ray wavelengths, the celestial sky is dominated by two types of astronomical objects: supermassive black holes, sitting at the centres of large galaxies and ferociously devouring the material around them, and binary systems, consisting of a stellar remnant – a white dwarf, neutron star or black hole – feeding on gas from a companion star.

In both cases, the gas forms a swirling disc around the compact and very dense central object. Friction in the disc causes the gas to heat up and emit light at different wavelengths, with a peak in x-rays.

But an intermediate class of objects was discovered in the 1980s and is still not well understood. Ten to a hundred times brighter than ordinary x-ray binaries, these sources are nevertheless too faint to be linked to supermassive black holes, and in any case, are usually found far from the centre of their host galaxy.

“We think these so-called ‘ultra-luminous x-ray sources’ are special binary systems, sucking up gas at a much higher rate than an ordinary x-ray binary,” said Dr Ciro Pinto from Cambridge’s Institute of Astronomy, the paper’s lead author. “Some of these sources host highly magnetised neutron stars, while others might conceal the long-sought-after intermediate-mass black holes, which have masses around one thousand times the mass of the Sun. But in the majority of cases, the reason for their extreme behavior is still unclear.”

Pinto and his colleagues collected several days’ worth of observations of three ultra-luminous x-ray sources, all in nearby galaxies less than 22 million light-years from the Milky Way. The data was obtained over several years with the Reflection Grating Spectrometer on XMM-Newton, which allowed the researchers to identify subtle features in the spectrum of the x-rays from the sources.

In all three sources, the scientists were able to identify x-ray emission from gas in the outer portions of the disc surrounding the central compact object, slowly flowing towards it.

But two of the three sources – known as NGC 1313 X-1 and NGC 5408 X-1 – also show clear signs of x-rays being absorbed by gas that is streaming away from the central source at 70,000 kilometres per second – almost a quarter of the speed of light.
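The figures quoted above can be cross-checked with a short unit conversion. This is only a sketch of the arithmetic; the variable names are illustrative, and the 70,000 km/s value is the one reported in the article.

```python
KM_PER_MILE = 1.609344   # international mile, exact by definition
C_KM_S = 299_792.458     # speed of light in km/s, exact by definition

wind_km_s = 70_000.0     # outflow speed quoted in the article

wind_mi_s = wind_km_s / KM_PER_MILE   # convert km/s to miles/s
fraction_of_c = wind_km_s / C_KM_S    # compare with the speed of light

print(f"{wind_mi_s:,.0f} miles per second")
print(f"{fraction_of_c:.3f} of the speed of light")
```

The result is a little over 43,000 miles per second and roughly 0.23 of the speed of light, consistent with both the headline and the “almost a quarter” description.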

The irregular galaxy NGC 5408 viewed by the NASA/ESA Hubble Space Telescope. The galaxy is located some 16 million light-years away and hosts a very bright source of X-rays, NGC 5408 X-1.

NGC 5408 X-1 is an ultra-luminous X-ray source – a binary system consisting of a stellar remnant that is feeding on gas from a companion star at an especially high rate.

Scientists using ESA’s XMM-Newton have discovered gas streaming away at a quarter of the speed of light from NGC 5408 X-1 and another bright X-ray binary, NGC 1313 X-1, confirming that these sources conceal a compact object accreting matter at extraordinarily high rates.

“This is the first time we’ve seen winds streaming away from ultra-luminous x-ray sources,” said Pinto. “And the very high speed of these outflows is telling us something about the nature of the compact objects in these sources, which are frantically devouring matter.”

While the hot gas is pulled inwards by the central object’s gravity, it also shines brightly, and the pressure exerted by the radiation pushes it outwards. This is a balancing act: the greater the mass, the faster it draws the surrounding gas; but this also causes the gas to heat up faster, emitting more light and increasing the pressure that blows the gas away.

There is a theoretical limit to how much matter can be pulled in by an object of a given mass, known as the Eddington limit. The limit was first calculated for stars by astronomer Arthur Eddington, but it can also be applied to compact objects like black holes and neutron stars.

Eddington’s calculation refers to an ideal case in which both the matter being accreted onto the central object and the radiation being emitted by it do so equally in all directions.
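For a sense of scale, the idealised limit can be evaluated numerically. The sketch below uses the standard textbook expression for the Eddington luminosity, L = 4πGMm_p c / σ_T, which assumes spherical symmetry and fully ionised hydrogen. The formula, the rounded constants, and the function name are assumptions added here and are not taken from the Nature paper.

```python
import math

# Physical constants in SI units (rounded values)
G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
M_PROTON = 1.6726e-27  # proton mass, kg
C = 2.998e8            # speed of light, m/s
SIGMA_T = 6.652e-29    # Thomson scattering cross-section, m^2
M_SUN = 1.989e30       # solar mass, kg


def eddington_luminosity_watts(mass_in_suns: float) -> float:
    """Eddington luminosity for spherical accretion of ionised hydrogen.

    Outward radiation pressure on electrons balances the inward pull of
    gravity on protons when L = 4*pi*G*M*m_p*c / sigma_T.
    """
    mass_kg = mass_in_suns * M_SUN
    return 4.0 * math.pi * G * mass_kg * M_PROTON * C / SIGMA_T


for m in (1.0, 10.0, 1000.0):
    print(f"{m:>7.0f} solar masses: {eddington_luminosity_watts(m):.2e} W")
```

Because the limit scales linearly with mass, a source far brighter than this estimate for a stellar-mass object must either be more massive or break the idealised assumptions, for example by accreting through a thick disc as described in the next paragraph.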

But the sources studied by Pinto and his collaborators are potentially being fed through a disc which has been puffed up due to internal pressures arising from the incredible rates of material passing through it. These thick discs can naturally exceed the Eddington limit and can even trap the radiation in a cone, making these sources appear brighter when we look straight at them. As the thick disc moves material further from the black hole’s gravitational grasp it also gives rise to very high-speed winds like the ones observed by the Cambridge researchers.

“By observing x-ray sources that are radiating beyond the Eddington limit, it is possible to study their accretion process in great detail, investigating by how much the limit can be exceeded and what exactly triggers the outflow of such powerful winds,” said Norbert Schartel, ESA XMM-Newton Project Scientist.

The nature of the compact objects hosted at the core of the two sources observed in this study is, however, still uncertain.

Based on the x-ray brightness, the scientists suspect that these mighty winds are driven from accretion flows onto either neutron stars or black holes, the latter with masses of several to a few dozen times that of the Sun.

To investigate further, the team is still scrutinising the data archive of XMM-Newton, searching for more sources of this type, and is also planning future observations, in x-rays as well as at optical and radio wavelengths.

“With a broader sample of sources and multi-wavelength observations, we hope to finally uncover the physical nature of these powerful, peculiar objects,” said Pinto.


The Riddle Of The Missing Stars

April 29th, 2016

By Alton Parrish.

Thanks to the NASA/ESA Hubble Space Telescope, some of the most mysterious cosmic residents have just become even more puzzling.

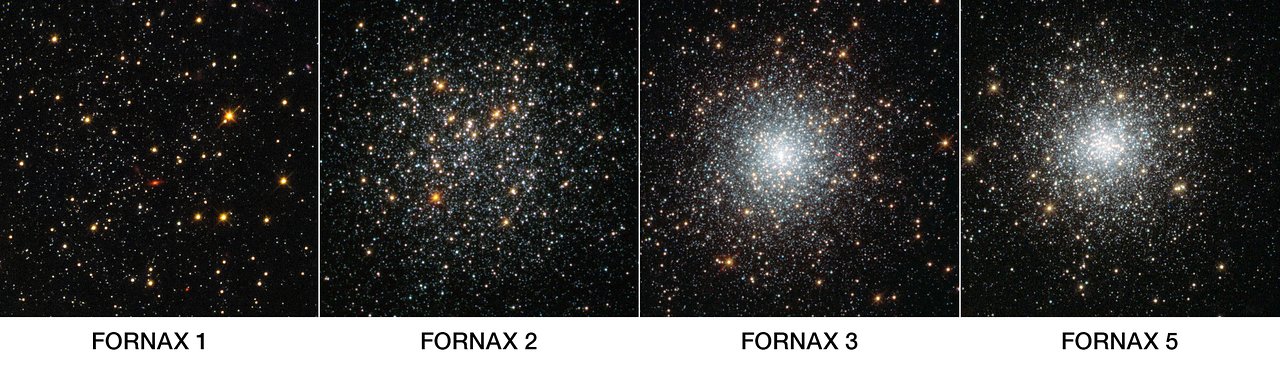

This NASA/ESA Hubble Space Telescope image shows four globular clusters in the dwarf galaxy Fornax.

New observations of globular clusters in a small galaxy show they are very similar to those found in the Milky Way, and so must have formed in a similar way. One of the leading theories on how these clusters form predicts that globular clusters should only be found nestled in among large quantities of old stars. But these old stars, though rife in the Milky Way, are not present in this small galaxy, and so, the mystery deepens.

Globular clusters — large balls of stars that orbit the centres of galaxies, but can lie very far from them — remain one of the biggest cosmic mysteries. They were once thought to consist of a single population of stars that all formed together. However, research has since shown that many of the Milky Way’s globular clusters had far more complex formation histories and are made up of at least two distinct populations of stars.

Of these populations, around half the stars are a single generation of normal stars that were thought to form first, and the other half form a second generation of stars, which are polluted with different chemical elements. In particular, the polluted stars contain up to 50-100 times more nitrogen than the first generation of stars.

The proportion of polluted stars found in the Milky Way’s globular clusters is much higher than astronomers expected, suggesting that a large chunk of the first generation star population is missing. A leading explanation for this is that the clusters once contained many more stars but a large fraction of the first generation stars were ejected from the cluster at some time in its past.

This explanation makes sense for globular clusters in the Milky Way, where the ejected stars could easily hide among the many similar, old stars in the vast halo, but the new observations, which look at this type of cluster in a much smaller galaxy, call this theory into question.

Astronomers used Hubble’s Wide Field Camera 3 (WFC3) to observe four globular clusters in a small nearby galaxy known as the Fornax Dwarf Spheroidal galaxy.

“We knew that the Milky Way’s clusters were more complex than was originally thought, and there are theories to explain why. But to really test our theories about how these clusters form we needed to know what happened in other environments,” says Søren Larsen of Radboud University in Nijmegen, the Netherlands, lead author of the new paper. “Before now we didn’t know whether globular clusters in smaller galaxies had multiple generations or not, but our observations show clearly that they do!”

The astronomers’ detailed observations of the four Fornax clusters show that they also contain a second polluted population of stars and indicate that not only did they form in a similar way to one another, their formation process is also similar to clusters in the Milky Way. Specifically, the astronomers used the Hubble observations to measure the amount of nitrogen in the cluster stars, and found that about half of the stars in each cluster are polluted at the same level that is seen in the Milky Way’s globular clusters.

This high proportion of polluted second generation stars means that the Fornax globular clusters’ formation should be covered by the same theory as those in the Milky Way.

Based on the number of polluted stars in these clusters they would have to have been up to ten times more massive in the past, before kicking out huge numbers of their first generation stars and reducing to their current size. But, unlike the Milky Way, the galaxy that hosts these clusters doesn’t have enough old stars to account for the huge number that were supposedly banished from the clusters.

“If these kicked-out stars were there, we would see them — but we don’t!” explains Frank Grundahl of Aarhus University in Denmark, co-author on the paper. “Our leading formation theory just can’t be right. There’s nowhere that Fornax could have hidden these ejected stars, so it appears that the clusters couldn’t have been so much larger in the past.”

This finding means that a leading theory on how these mixed generation globular clusters formed cannot be correct and astronomers will have to think once more about how these mysterious objects, in the Milky Way and further afield, came to exist.


Smart Hand: Using Your Skin as a Touch Screen

April 28th, 2016

By Alton Parrish.

Using your skin as a touchscreen has been brought a step closer after UK scientists successfully created tactile sensations on the palm using ultrasound sent through the hand.

The University of Sussex-led study – funded by the Nokia Research Centre and the European Research Council – is the first to find a way for users to feel what they are doing when interacting with displays projected on their hand.

This solves one of the biggest challenges for technology companies who see the human body, particularly the hand, as the ideal display extension for the next generation of smartwatches and other smart devices.

Current ideas rely on vibrations or pins, which both need contact with the palm to work, interrupting the display.

However, this new innovation, called SkinHaptics, sends sensations to the palm from the other side of the hand, leaving the palm free to display the screen.

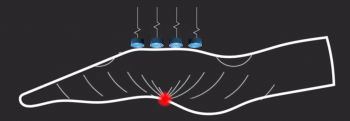

The device uses ‘time-reversal’ processing to send ultrasound waves through the hand. This technique is effectively like ripples in water but in reverse – the waves become more targeted as they travel through the hand, ending at a precise point on the palm.
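The focusing idea can be illustrated with a small Python sketch. It is not the SkinHaptics implementation: it assumes a uniform medium, an idealised impulse, a commonly quoted average sound speed for soft tissue, and a hypothetical row of four transducers on the back of the hand. Each transducer "records" when an impulse from the target point would reach it, then replays that recording reversed in time, so the element that heard the impulse last fires first and all wavefronts meet at the target together.

```python
import math

# Assumption: a commonly quoted average speed of sound in soft tissue, in m/s.
SPEED_OF_SOUND_TISSUE = 1540.0


def reversed_firing_times(transducers, target, speed=SPEED_OF_SOUND_TISSUE):
    """Firing time (s) for each transducer after time-reversing its recording.

    An impulse emitted at `target` reaches transducer i after travel[i].
    Replaying the recordings backwards means the transducer that received
    the impulse last fires first (time 0) and the nearest one fires last.
    """
    travel = [math.dist(p, target) / speed for p in transducers]
    window = max(travel)  # length of the recording window
    return [window - t for t in travel]


def arrival_times(transducers, point, firing_times, speed=SPEED_OF_SOUND_TISSUE):
    """Time at which each transducer's wavefront reaches `point`."""
    return [
        fired + math.dist(p, point) / speed
        for p, fired in zip(transducers, firing_times)
    ]


def spread(times):
    """Gap between the earliest and latest arrival; 0 means perfect focus."""
    return max(times) - min(times)


# Hypothetical geometry in metres: four transducers on the back of the hand
# (y = 0) and a target on the palm side, 25 mm away through the hand.
transducers = [(-0.015, 0.0), (-0.005, 0.0), (0.005, 0.0), (0.015, 0.0)]
target = (0.004, 0.025)
elsewhere = (-0.010, 0.025)

firing = reversed_firing_times(transducers, target)
on_target_spread = spread(arrival_times(transducers, target, firing))
off_target_spread = spread(arrival_times(transducers, elsewhere, firing))

print(f"arrival spread at target:    {on_target_spread:.3e} s")
print(f"arrival spread 14 mm away:   {off_target_spread:.3e} s")
```

At the target the wavefronts arrive at effectively the same instant and add up, while at the other palm location they arrive spread out in time, which is why the sensation is felt at one precise point.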

It draws on a rapidly growing field of technology called haptics, which is the science of applying touch sensation and control to interaction with computers and technology.

Professor Sriram Subramanian, who leads the research team at the University of Sussex, says that technologies will inevitably need to engage other senses, such as touch, as we enter what designers are calling an ‘eye-free’ age of technology.

He says: “Wearables are already big business and will only get bigger. But as we wear technology more, it gets smaller and we look at it less, and therefore multisensory capabilities become much more important.

“If you imagine you are on your bike and want to change the volume control on your smartwatch, the interaction space on the watch is very small. So companies are looking at how to extend this space to the hand of the user.

“What we offer people is the ability to feel their actions when they are interacting with the hand.”

Professor Sriram Subramanian is a Professor of Informatics at the University of Sussex where he leads the Interact Lab and is a member of the Creative Technology Group.

The findings were presented at the IEEE Haptics Symposium 2016 in Philadelphia, USA, by the study’s co-author Dr Daniel Spelmezan, a research assistant in the Interact Lab. The symposium concluded Monday 11 April 2016.


Physicians Say Climate Change Will Have Devastating Health Effects Across the World

April 28th, 2016

By Alton Parrish.

Climate change will have devastating consequences for public and individual health unless aggressive, global action is taken now to curb greenhouse gas emissions, the American College of Physicians (ACP) says in a new policy paper published today in Annals of Internal Medicine.

“The American College of Physicians urges physicians to help combat climate change by advocating for effective climate change adaptation and mitigation policies, helping to advance a low-carbon health care sector, and by educating communities about potential health dangers posed by climate change,” said ACP President Wayne J. Riley, MD, MPH, MBA, MACP. “We need to take action now to protect the health of our community’s most vulnerable members — including our children, our seniors, people with chronic illnesses, and the poor — because our climate is already changing and people are already being harmed.”

Lho Kruet Village.

ACP cites higher rates of respiratory and heat-related illnesses, increased prevalence of diseases passed by insects, water-borne diseases, food and water insecurity and malnutrition, and behavioral health problems as potential health effects of climate change. The elderly, the sick, and the poor are especially vulnerable.

As clinicians, physicians have a role in combating climate change, especially as it relates to human health, ACP says. ACP calls on the health care sector to implement environmentally sustainable and energy efficient practices and prepare for the impacts of climate change to ensure continued operations during periods of elevated patient demand. The health care sector is ranked second-highest in energy use, after the food industry, spending about $9 billion annually on energy costs.

Health care system mitigation focus areas include transportation, energy conservation/efficiency, alternative energy generation, green building design, waste disposal and management, reducing food waste, and water conservation.

“Office-based physicians and their staffs can also play a role by taking action to achieve energy and water efficiency, using renewable energy, expanding recycling programs, and using low-carbon or zero-carbon transportation,” Dr. Riley said.

ACP encourages physicians to become educated about climate change, its effect on human health, and how to respond to future challenges. ACP recommends that medical schools and continuing medical education providers incorporate climate change-related coursework into curricula.

“ACP has 18 international chapters that span the globe,” said Dr. Riley. “This paper was written not only to support advocacy for changes by the U.S. government to mitigate climate change, but to provide our international chapters and internal medicine colleagues with policies and analysis that they can use to advocate with their own governments, colleagues, and the public, and for them to advocate for changes to reduce their own health systems’ impact.”

The paper was developed by ACP’s Health and Public Policy Committee, which is charged with addressing issues that affect the health care of the U.S. The committee reviewed available studies, reports, and surveys on climate change and its relation to human health. The recommendations are based on reviewed literature and input from ACP’s Board of Governors, Board of Regents, additional ACP councils, and nonmember experts in the field.


New 5D Storage Can Store 360 Terabytes of Data on Tiny Disc for Billions of Years

April 27th, 2016

By Alton Parrish.

Scientists at the University of Southampton have made a major step forward in the development of digital data storage that is capable of surviving for billions of years.

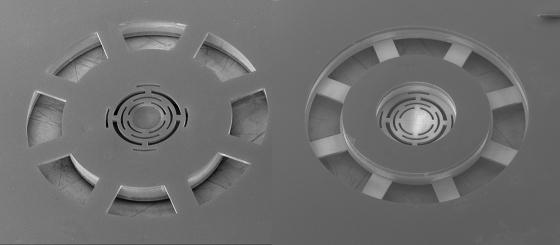

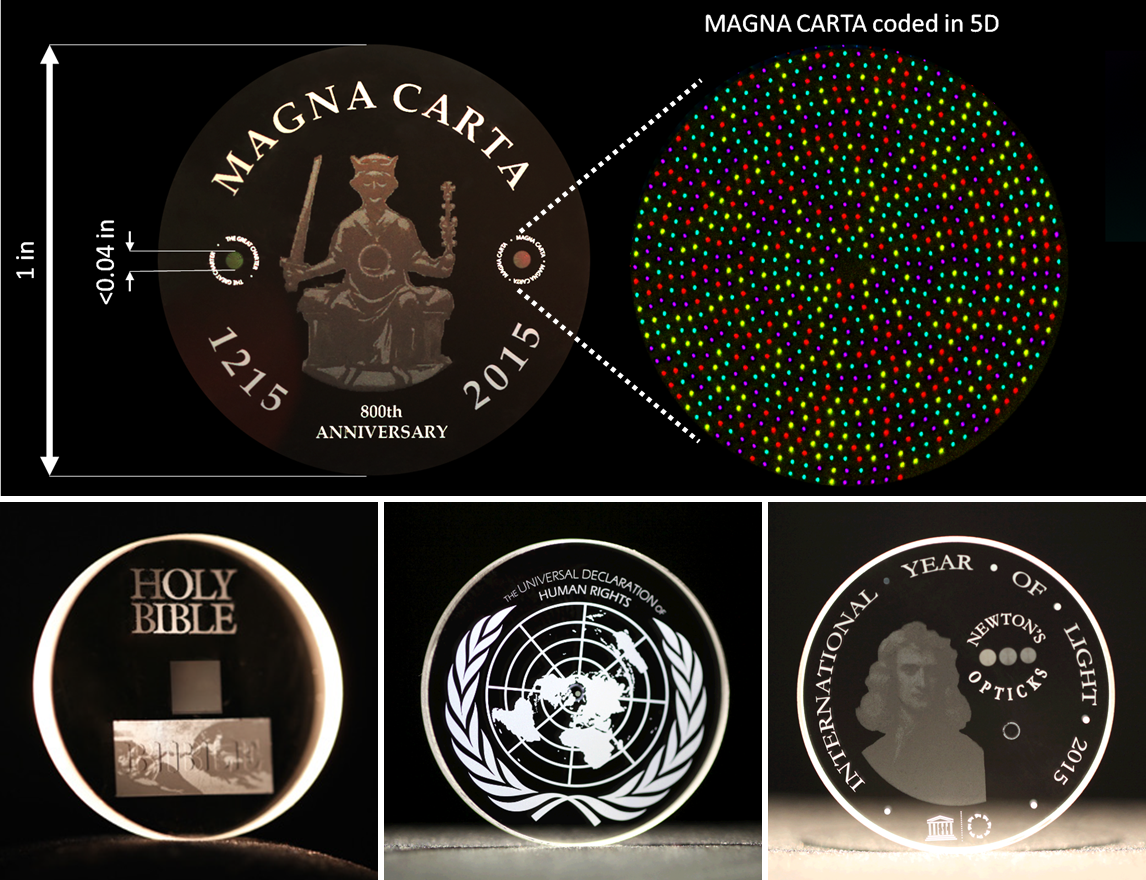

Using nanostructured glass, scientists from the University’s Optoelectronics Research Centre (ORC) have developed the recording and retrieval processes of five dimensional (5D) digital data by femtosecond laser writing.

The storage offers unprecedented properties including 360 TB/disc data capacity, thermal stability up to 1,000°C and virtually unlimited lifetime at room temperature (13.8 billion years at 190°C), opening a new era of eternal data archiving. As a very stable and safe form of portable memory, the technology could be highly useful for organisations with big archives, such as national archives, museums and libraries, to preserve their information and records.

The technology was first experimentally demonstrated in 2013 when a 300 kb digital copy of a text file was successfully recorded in 5D.

Now, major documents from human history such as the Universal Declaration of Human Rights (UDHR), Newton’s Opticks, Magna Carta and the King James Bible have been saved as digital copies that could survive the human race. A copy of the UDHR encoded to 5D data storage was recently presented to UNESCO by the ORC at the International Year of Light (IYL) closing ceremony in Mexico.

The documents were recorded using an ultrafast laser, which produces extremely short and intense pulses of light. The file is written in three layers of nanostructured dots separated by five micrometres (five millionths of a metre).

The self-assembled nanostructures change the way light travels through glass, modifying the polarisation of light, which can then be read by a combination of an optical microscope and a polariser, similar to that found in Polaroid sunglasses.

Dubbed the ‘Superman memory crystal’ because the glass memory has been compared to the “memory crystals” used in the Superman films, the technology records data via self-assembled nanostructures created in fused quartz. The information encoding is realised in five dimensions: the size and orientation in addition to the three dimensional position of these nanostructures.
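To make the five dimensions concrete, here is a toy Python sketch of such an encoding. It is illustrative only and not the ORC's actual scheme: the four orientations, two size levels, and the grid layout are assumptions chosen so that each dot carries three bits, while the three layers and the five micrometre spacing come from the description above.

```python
# Assumptions for illustration: 4 orientations (2 bits) and 2 size levels
# (1 bit) per dot. The real recording parameters may differ.
ORIENTATIONS_DEG = (0, 45, 90, 135)
SIZE_LEVELS = (0, 1)
BITS_PER_DOT = 3

LAYERS = 3             # from the article: three layers of dots
LAYER_SPACING_UM = 5   # from the article: layers five micrometres apart


def encode(data: bytes, grid_width: int = 8):
    """Turn bytes into dots of the form (x, y, z_um, size, angle_deg)."""
    bits = "".join(f"{byte:08b}" for byte in data)
    bits += "0" * (-len(bits) % BITS_PER_DOT)  # pad to a whole number of dots
    dots = []
    for n in range(len(bits) // BITS_PER_DOT):
        chunk = bits[n * BITS_PER_DOT:(n + 1) * BITS_PER_DOT]
        cell, layer = divmod(n, LAYERS)       # fill all layers of a cell first
        y, x = divmod(cell, grid_width)       # then move along the row
        size = SIZE_LEVELS[int(chunk[0])]
        angle = ORIENTATIONS_DEG[int(chunk[1:], 2)]
        dots.append((x, y, layer * LAYER_SPACING_UM, size, angle))
    return dots


def decode(dots, n_bytes: int) -> bytes:
    """Recover bytes from dots; position alone fixes the reading order."""
    ordered = sorted(dots, key=lambda d: (d[1], d[0], d[2]))  # y, x, depth
    bits = "".join(
        f"{SIZE_LEVELS.index(size)}{ORIENTATIONS_DEG.index(angle):02b}"
        for (_, _, _, size, angle) in ordered
    )
    return bytes(int(bits[i:i + 8], 2) for i in range(0, n_bytes * 8, 8))


message = b"UDHR"
dots = encode(message)
recovered = decode(list(reversed(dots)), len(message))  # order does not matter
print(len(dots), "dots ->", recovered)
```

Three of the dimensions say where a dot sits and the other two say what it stores, so a reader that measures every dot's position, size and orientation can rebuild the file regardless of the order in which the dots are scanned.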

Professor Peter Kazansky, from the ORC, says: “It is thrilling to think that we have created the technology to preserve documents and information and store it in space for future generations. This technology can secure the last evidence of our civilisation: all we’ve learnt will not be forgotten.”

The researchers will present their research at the photonics industry’s renowned SPIE—The International Society for Optical Engineering Conference in San Francisco, USA this week. The invited paper, ‘5D Data Storage by Ultrafast Laser Writing in Glass’ will be presented on Wednesday 17 February.

The team are now looking for industry partners to further develop and commercialise this ground-breaking new technology.

Universal Declaration of Human Rights recorded into 5D optical data


Pollution Makes Storm Clouds Last Longer, Days Colder And Nights Warmer

April 27th, 2016

By Alton Parrish.

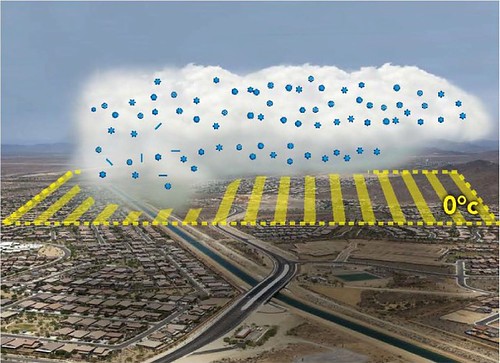

A new study shows why pollution results in larger, deeper and longer lasting storm clouds, leading to colder days and warmer nights

A new study reveals how pollution causes thunderstorms to leave behind larger, deeper, longer lasting clouds. Appearing in the Proceedings of the National Academy of Sciences on November 26, the results settle a long-standing debate and reveal how pollution plays into climate warming. The work can also provide a gauge for the accuracy of weather and climate models.

Researchers had thought that pollution causes larger and longer-lasting storm clouds by making thunderheads draftier through a process known as convection. But atmospheric scientist Jiwen Fan and her colleagues show that pollution instead makes clouds linger by decreasing the size and increasing the lifespan of cloud and ice particles. The difference affects how scientists represent clouds in climate models.

“This study reconciles what we see in real life to what computer models show us,” said Fan of the Department of Energy’s Pacific Northwest National Laboratory. “Observations consistently show taller and bigger anvil-shaped clouds in storm systems with pollution, but the models don’t always show stronger convection. Now we know why.”