Posts by AltonParrish:

Researchers at Disney show soft sides in 3-D printer

May 5th, 2015

By Disney.

A team from Disney Research and Carnegie Mellon University has devised a 3-D printer that layers together laser-cut sheets of fabric to form soft, squeezable objects such as bunnies, doll clothing and phone cases. These objects can have complex geometries and incorporate circuitry that makes them interactive.

“Today’s 3-D printers can easily create custom metal, plastic, and rubber objects,” said Jim McCann, associate research scientist at Disney Research Pittsburgh. “But soft fabric objects, like plush toys, are still fabricated by hand. Layered fabric printing is one possible method to automate the production of this class of objects.”

3D printed objects from the layered fabric 3D printer: (a) printed fabric Stanford bunny, (b) printed Japanese sunny doll with two materials, (c) printed touch sensor, (d) printed cellphone case with an embedded conductive fabric coil for wireless power reception.

Credit: Disney Research Pittsburgh

The fabric printer is similar in principle to laminated object manufacturing, which takes sheets of paper or metal that have each been cut into a 2-D shape and then bonds them together to form a 3-D object. Fabric presents particular cutting and handling challenges, however, which the Disney team has addressed in the design of its printer.

The layered-fabric printer will be described at the Association for Computing Machinery’s annual Conference on Human Factors in Computing Systems, CHI 2015, April 18-23 in Seoul, South Korea, where the report has received an honorable mention for a Best Paper award. In addition to McCann, the team included Huaishu Peng, a Ph.D. student in information science at Cornell University, and Scott Hudson and Jen Mankoff, both faculty members in Carnegie Mellon’s Human-Computer Interaction Institute.

Last year at CHI, Hudson presented a soft 3-D object printer he developed at Disney Research that deposits layers of needle-felted yarn. The layered-fabric printing method, by contrast, can produce thicker, more squeezable objects.

The new printer forms precise but soft and deformable 3D objects from layers of off-the-shelf fabric, with one sheet of fabric forming each layer of the object. The printer cuts this sheet along the 2D contour of the layer using a laser cutter and then bonds it to previously printed layers using a heat-sensitive adhesive. Surrounding fabric in each layer is temporarily retained to provide a removable support structure for layers printed above it. This process is repeated to build up a 3D object layer by layer. The printer can automatically feed two separate fabric types into a single print, which allows specially cut layers of conductive fabric to be embedded in the soft prints. Using this capability, the team demonstrates 3D models with touch sensing built into a soft print in one complete printing process, and a simple LED display that uses a conductive fabric coil for wireless power reception.

The latest soft printing apparatus includes two fabrication surfaces – an upper cutting platform and a lower bonding platform. Fabric is fed from a roll into the device, where a vacuum holds the fabric up against the upper cutting platform while a laser cutting head moves below. The laser cuts a rectangular piece out of the fabric roll, then cuts the layer’s desired 2-D shape or shapes within that rectangle. This second set of cuts is left purposefully incomplete so that the shapes receive support from the surrounding fabric during the fabrication process.

Once the cutting is complete, the bonding platform is raised up to the fabric and the vacuum is shut off to release the fabric. The platform is lowered and a heated bonding head is deployed, heating and pressing the fabric against previous layers. The fabric is coated with a heat-sensitive adhesive, so the bonding process is similar to a person using a hand iron to apply non-stitched fabric ornamentation onto a costume or banner.

Once the process is complete, the surrounding support fabric is torn away by hand to reveal the 3-D object.

The researchers demonstrated this technique by using 32 layers of 2-millimeter-thick felt to create a 2 ½-inch bunny. The process took about 2 ½ hours.

“The layers in the bunny print are evident because the bunny is relatively small compared to the felt we used to print it,” McCann said. “It’s a trade-off — with thinner fabric, or a larger bunny, the layers would be less noticeable, but the printing time would increase.”
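The trade-off McCann describes can be checked with a little arithmetic. The sketch below uses only the figures reported above (32 layers, 2 mm felt, about 2 ½ hours). The 1 mm fabric and the assumption that each layer takes the same time regardless of thickness are illustrative guesses, not numbers from the researchers.

```python
import math

MM_PER_INCH = 25.4


def layers_needed(object_height_mm: float, fabric_thickness_mm: float) -> int:
    """Number of fabric sheets needed to reach the requested height (rounded up)."""
    return math.ceil(object_height_mm / fabric_thickness_mm)


def estimated_print_minutes(layer_count: int, minutes_per_layer: float) -> float:
    """Print time under the ASSUMPTION that every layer takes the same time."""
    return layer_count * minutes_per_layer


# Figures reported in the article.
layers = 32
thickness_mm = 2.0
total_minutes = 2.5 * 60

height_mm = layers * thickness_mm            # stacked height of the bunny
height_in = height_mm / MM_PER_INCH          # about 2.5 inches, as reported
minutes_per_layer = total_minutes / layers   # average time spent per layer

# Hypothetical: the same bunny printed from 1 mm fabric (not from the article).
thin_layers = layers_needed(height_mm, 1.0)
thin_minutes = estimated_print_minutes(thin_layers, minutes_per_layer)

print(f"height: {height_mm:.0f} mm = {height_in:.2f} in")
print(f"average time per layer: {minutes_per_layer:.2f} min")
print(f"1 mm fabric: {thin_layers} layers, about {thin_minutes / 60:.1f} h")
```

Under these assumptions, halving the fabric thickness doubles both the layer count and the print time, which is the trade-off in the quote.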

Two types of material can be used to create objects by feeding one roll of fabric into the machine from left to right, while a second roll of a different material is fed front to back. If one of the materials is conductive, the equivalent of wiring can be incorporated into the device. The researchers demonstrated the possibilities by building a fabric starfish that serves as a touch sensor, as well as a fabric smartphone case with an antenna that can harvest enough energy from the phone to light an LED.

The feel of a fabricated object can be manipulated in the fabrication process by adding small interior cuts that make it easy to bend the object in one direction, while maintaining stiffness in the perpendicular direction.

Astronomers Unveil the Farthest Galaxy

May 5th, 2015

By Yale University.

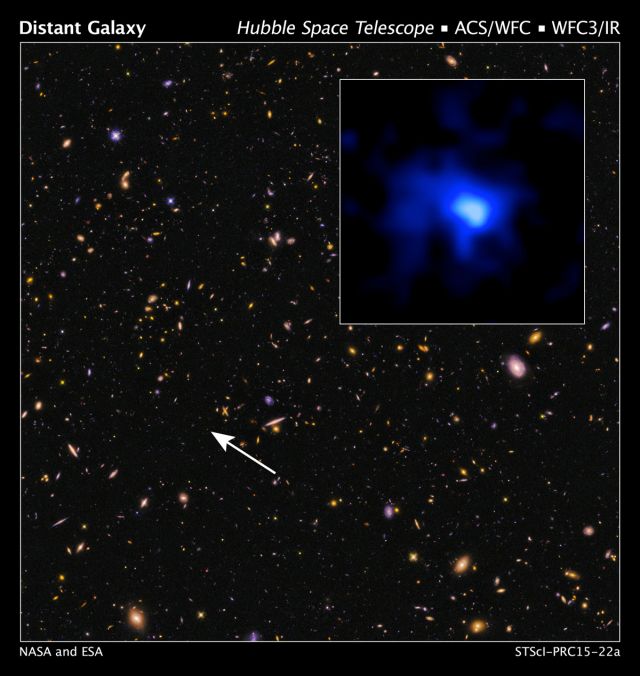

An international team of astronomers led by Yale University and the University of California-Santa Cruz has pushed back the cosmic frontier of galaxy exploration to a time when the universe was only 5% of its present age.

The team discovered an exceptionally luminous galaxy more than 13 billion years in the past and determined its exact distance from Earth using the powerful MOSFIRE instrument on the W.M. Keck Observatory’s 10-meter telescope, in Hawaii. It is the most distant galaxy currently measured.

The galaxy EGS-zs8-1 sets a new distance record. It was discovered in images from the Hubble Space Telescope’s CANDELS survey.

Credit: NASA, ESA, P. Oesch and I. Momcheva (Yale University), and the 3D-HST and HUDF09/XDF teams

The galaxy, EGS-zs8-1, was originally identified based on its particular colors in images from NASA’s Hubble and Spitzer space telescopes. It is one of the brightest and most massive objects in the early universe.

Age and distance are vitally connected in any discussion of the universe. The light we see from our Sun takes just eight minutes to reach us, while the light from distant galaxies we see via today’s advanced telescopes travels for billions of years before it reaches us — so we’re seeing what those galaxies looked like billions of years ago.

“It has already built more than 15% of the mass of our own Milky Way today,” said Pascal Oesch, a Yale astronomer and lead author of a study published online May 5 in Astrophysical Journal Letters. “But it had only 670 million years to do so. The universe was still very young then.” The new distance measurement also enabled the astronomers to determine that EGS-zs8-1 is still forming stars rapidly, about 80 times faster than our galaxy.
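The "5% of its present age" figure follows from the 670 million years quoted by Oesch. The short calculation below assumes a present-day age of the universe of about 13.8 billion years. That value is not stated in the article and is used here only as a commonly cited estimate.

```python
# Assumed present-day age of the universe in billions of years (not from the article).
AGE_OF_UNIVERSE_GYR = 13.8

# Age of the universe when the observed light left EGS-zs8-1, from the article.
age_at_emission_gyr = 0.670

# Fraction of the present age, and how long the light has been travelling.
fraction_of_present_age = age_at_emission_gyr / AGE_OF_UNIVERSE_GYR
lookback_time_gyr = AGE_OF_UNIVERSE_GYR - age_at_emission_gyr

print(f"fraction of present age: {fraction_of_present_age:.1%}")
print(f"lookback time: {lookback_time_gyr:.2f} billion years")
```

With this assumed age, the fraction comes out just under 5% and the lookback time just over 13 billion years, consistent with both figures in the article.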

Only a handful of galaxies currently have accurate distances measured in this very early universe. “Every confirmation adds another piece to the puzzle of how the first generations of galaxies formed in the early universe,” said Pieter van Dokkum, the Sol Goldman Family Professor of Astronomy and chair of Yale’s Department of Astronomy, who is second author of the study. “Only the largest telescopes are powerful enough to reach to these large distances.”

The MOSFIRE instrument allows astronomers to efficiently study several galaxies at the same time. Measuring galaxies at extreme distances and characterizing their properties will be a major goal of astronomy over the next decade, the researchers said.

The new observations establish EGS-zs8-1 at a time when the universe was undergoing an important change: The hydrogen between galaxies was transitioning from a neutral state to an ionized state. “It appears that the young stars in the early galaxies like EGS-zs8-1 were the main drivers for this transition, called reionization,” said Rychard Bouwens of the Leiden Observatory, co-author of the study.

Taken together, the new Keck Observatory, Hubble, and Spitzer observations also pose new questions. They confirm that massive galaxies already existed early in the history of the universe, but they also show that those galaxies had very different physical properties from what is seen around us today. Astronomers now have strong evidence that the peculiar colors of early galaxies — seen in the Spitzer images — originate from a rapid formation of massive, young stars, which interacted with the primordial gas in these galaxies.

The observations underscore the exciting discoveries that are possible when NASA’s James Webb Space Telescope is launched in 2018, note the researchers. In addition to pushing the cosmic frontier to even earlier times, the telescope will be able to dissect the galaxy light of EGS-zs8-1 seen with the Spitzer telescope and provide astronomers with more detailed insights into its gas properties.

“Our current observations indicate that it will be very easy to measure accurate distances to these distant galaxies in the future with the James Webb Space Telescope,” said co-author Garth Illingworth of the University of California-Santa Cruz. “The result of JWST’s upcoming measurements will provide a much more complete picture of the formation of galaxies at the cosmic dawn.”

Cannabis Consumers Show Greater Susceptibility to False Memories

April 26th, 2015

By Universidad de Barcelona.

A new study published in the American journal with the highest impact factor worldwide, Molecular Psychiatry, reveals that consumers of cannabis are more prone to experiencing false memories.

The study was conducted by researchers from the Human Neuropsychopharmacology group at the Biomedical Research Institute of Hospital de Sant Pau (www.iibsantpau.cat) and from Universitat Autònoma de Barcelona, in collaboration with the Brain Cognition and Plasticity group of the Bellvitge Institute for Biomedical Research (IDIBELL – University of Barcelona).

The image shows the brain activation pattern which permits ruling out a stimulus as a false memory. In the control group, the activations are much more intense and extensive than in the group of cannabis consumers.

Credit: Universitat Autònoma de Barcelona

One of the known consequences of consuming this drug is the memory problems it can cause. Chronic consumers show more difficulties than the general population in retaining new information and recovering memories. The new study also reveals that the chronic use of cannabis causes distortions in memory, making it easier for imaginary or false memories to appear.

On occasions, the brain can remember things that never happened. Our memory consists of a malleable process which is created progressively and therefore is subject to distortions or even false memories. These memory “mistakes” are seen more frequently in several neurological and psychiatric disorders, but can also be observed in the healthy population, and become more common as we age.

Some of the most common false memories we have are of situations from our childhood that we believe we remember because the people around us have described them to us over and over again. Maintaining adequate control over the “veracity” of our memories is a complex cognitive task which allows us to have our own sense of reality and also shapes our behaviour, based on past experiences.

In the study published in the journal Molecular Psychiatry, researchers from Sant Pau and Bellvitge compared a group of chronic consumers of cannabis to a healthy control group while they worked on learning a series of words. After a few minutes they were once again shown the original words, together with new words which were either semantically related or unrelated.

All participants were asked to identify the words belonging to the original list. Cannabis consumers believed they had already seen the semantically related new words more often than participants in the control group did. By using magnetic resonance imaging, researchers discovered that cannabis consumers showed lower activation in areas of the brain related to memory processes and to the general control of cognitive resources.

The study found memory deficiencies despite the fact that participants had stopped consuming cannabis one month before participating in the study. Although they had not consumed the drug in a month, the more a participant had used cannabis throughout his or her life, the lower the level of activity in the hippocampus, a structure key to storing memories.

The results show that cannabis consumers are more vulnerable to memory distortions, even weeks after they stop consuming the drug. This suggests that cannabis has a prolonged effect on the brain mechanisms which allow us to differentiate between real and imaginary events. These memory mistakes can cause problems in legal cases, for example, because of the weight that the testimonies of witnesses and victims can carry. From a clinical viewpoint, the results also suggest that chronic use of cannabis could worsen problems with age-related memory loss.

NailO: Wireless mouse worn on thumb

April 26th, 2015

By MIT.

Researchers at the MIT Media Laboratory are developing a new wearable device that turns the user’s thumbnail into a miniature wireless track pad.

They envision that the technology could let users control wireless devices when their hands are full — answering the phone while cooking, for instance. It could also augment other interfaces, allowing someone texting on a cellphone, say, to toggle between symbol sets without interrupting his or her typing. Finally, it could enable subtle communication in circumstances that require it, such as sending a quick text to a child while attending an important meeting.

A new wearable device, NailO, turns the user’s thumbnail into a miniature wireless track pad. Here, it works as an X-Y coordinate touch pad for a smartphone.

The researchers describe a prototype of the device, called NailO, in a paper they’re presenting next week at the Association for Computing Machinery’s Computer-Human Interaction conference in Seoul, South Korea.

According to Cindy Hsin-Liu Kao, an MIT graduate student in media arts and sciences and one of the new paper’s lead authors, the device was inspired by the colorful stickers that some women apply to their nails. “It’s a cosmetic product, popular in Asian countries,” says Kao, who is Taiwanese. “When I came here, I was looking for them, but I couldn’t find them, so I’d have my family mail them to me.”

Indeed, the researchers envision that a commercial version of their device would have a detachable membrane on its surface, so that users could coordinate surface patterns with their outfits. To that end, they used capacitive sensing — the same kind of sensing the iPhone’s touch screen relies on — to register touch, since it can tolerate a thin, nonactive layer between the user’s finger and the underlying sensors.

Designed in the MIT Media Lab, NailO is a thumbnail-mounted wireless track pad that controls digital devices.

Video: Melanie Gonick/MIT

Instant access

As the site for a wearable input device, however, the thumbnail has other advantages: It’s a hard surface with no nerve endings, so a device affixed to it wouldn’t impair movement or cause discomfort. And it’s easily accessed by the other fingers — even when the user is holding something in his or her hand.

“It’s very unobtrusive,” Kao explains. “When I put this on, it becomes part of my body. I have the power to take it off, so it still gives you control over it. But it allows this very close connection to your body.”

To build their prototype, the researchers needed to find a way to pack capacitive sensors, a battery, and three separate chips — a microcontroller, a Bluetooth radio chip, and a capacitive-sensing chip — into a space no larger than a thumbnail. “The hardest part was probably the antenna design,” says Artem Dementyev, a graduate student in media arts and sciences and the paper’s other lead author. “You have to put the antenna far enough away from the chips so that it doesn’t interfere with them.”

Kao and Dementyev are joined on the paper by their advisors, principal research scientist Chris Schmandt and Joe Paradiso, an associate professor of media arts and sciences. Dementyev and Paradiso focused on the circuit design, while Kao and Schmandt concentrated on the software that interprets the signal from the capacitive sensors, filters out the noise, and translates it into movements on screen.

For their initial prototype, the researchers built their sensors by printing copper electrodes on sheets of flexible polyester, which allowed them to experiment with a range of different electrode layouts. But in ongoing experiments, they’re using off-the-shelf sheets of electrodes like those found in some track pads.

Slimming down

They’ve also been in discussion with battery manufacturers — traveling to China to meet with several of them — and have identified a technology that they think could yield a battery that fits in the space of a thumbnail, but is only half a millimeter thick. A special-purpose chip that combines the functions of the microcontroller, radio, and capacitive sensor would further save space.

At such small scales, however, energy efficiency is at a premium, so the device would have to be deactivated when not actually in use. In the new paper, the researchers also report the results of a usability study that compared different techniques for turning it off and on. They found that requiring surface contact with the operator’s finger for just two or three seconds was enough to guard against inadvertent activation and deactivation.
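The hold-to-toggle behavior described above can be sketched in a few lines. This is not the NailO firmware. The sample format, the `toggle_events` helper, and the 2.0-second threshold (the article says "two or three seconds") are assumptions made for illustration.

```python
from typing import Iterable, List, Optional, Tuple

# Assumed hold time in seconds; the article reports "two or three seconds".
HOLD_SECONDS = 2.0


def toggle_events(
    samples: Iterable[Tuple[float, bool]],
    hold_seconds: float = HOLD_SECONDS,
) -> List[Tuple[float, bool]]:
    """Return (time, new_active_state) each time a sustained touch toggles the device.

    `samples` is a hypothetical stream of (timestamp_seconds, is_touching) readings.
    A touch shorter than `hold_seconds` is ignored, and one continuous touch
    toggles the state at most once.
    """
    active = False
    touch_start: Optional[float] = None
    fired = False
    events: List[Tuple[float, bool]] = []
    for t, touching in samples:
        if not touching:
            # Finger lifted: forget the current touch.
            touch_start = None
            fired = False
            continue
        if touch_start is None:
            touch_start = t
        if not fired and t - touch_start >= hold_seconds:
            active = not active
            fired = True
            events.append((t, active))
    return events


samples = [
    (0.0, True), (0.5, True), (1.0, False),                  # brief brush: ignored
    (3.0, True), (4.0, True), (5.0, True), (5.5, False),     # 2 s hold: turns on
    (8.0, True), (9.0, True), (10.0, True), (11.0, False),   # 2 s hold: turns off
]
events = toggle_events(samples)
print(events)
```

Requiring a sustained touch trades a small delay for protection against accidental brushes, which is the balance the usability study was probing.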

“Keyboards and mice — still — are not going away anytime soon,” says Steve Hodges, who leads the Sensors and Devices group at Microsoft Research in Cambridge, England. “But more and more that’s being complemented by use of our devices and access to our data while we’re on the move. I’ve got desktop, I’ve got a mobile phone, but that’s still not enough. Different ways of displaying and controlling devices while we’re on the go are, I believe, going to be increasingly important.”

“Is it the case that we’ll all be walking around with digital fingernails in five years’ time?” Hodges asks. “Maybe it is. Most likely, we’ll have a little ecosystem of these input devices. Some will be audio based, which is completely hands free. But there are a lot of cases where that’s not going to be appropriate. NailO is interesting because it’s thinking about much more subtle interactions, where gestures or speech input are socially awkward.”

9/11 leaves legacy of chronic ill health symptoms among medical rescuers

April 17th, 2015

By Alton Parrish.

The health of 2,281 New York City Fire Department emergency medical services workers deployed to the scene of the World Trade Center attacks was tracked over a period of 12 years, from the date of the incident on September 11, 2001 to the end of December 2013.

The researchers looked at the mental and physical health conditions that have been certified as being linked to the aftermath of the incident under the James Zadroga 9/11 Health and Compensation Act of 2010.

Between 2001 and 2013, the cumulative incidence of acid reflux disease (GERD) was just over 12% while obstructive airways disease (OAD), which includes bronchitis and emphysema, was just under 12%. The cumulative incidences of rhinosinusitis and cancer were 10.6% and 3.1%, respectively.

Validated screening tests were used to gauge the prevalence of mental health conditions: this was 16.7% for probable depression; 7% for probable post-traumatic stress disorder (PTSD); and 3% for probable harmful alcohol use.

Compared with the workers who did not attend the aftermath of the World Trade Center attacks, those who arrived earliest on the scene were at greatest risk for nearly all the health conditions analysed.

They were almost four times as likely to have acid reflux and rhinosinusitis, seven times as likely to have probable PTSD, and twice as likely to have probable depression.

And the more intense the experience was at the time, the greater was the risk of a diagnosis of acid reflux, obstructive airways disease, or rhinosinusitis, and of testing positive for PTSD, depression, and harmful drinking.

The degree of ill health among workers attending the scene was generally lower than that of a demographically similar group of New York City firefighters, probably because of the differences in tasks performed at the World Trade Center site, suggest the authors.

The findings of a substantial amount of ill health underscore the need for continued monitoring and treatment of emergency medical services workers who helped the victims of the World Trade Center attacks, they conclude.

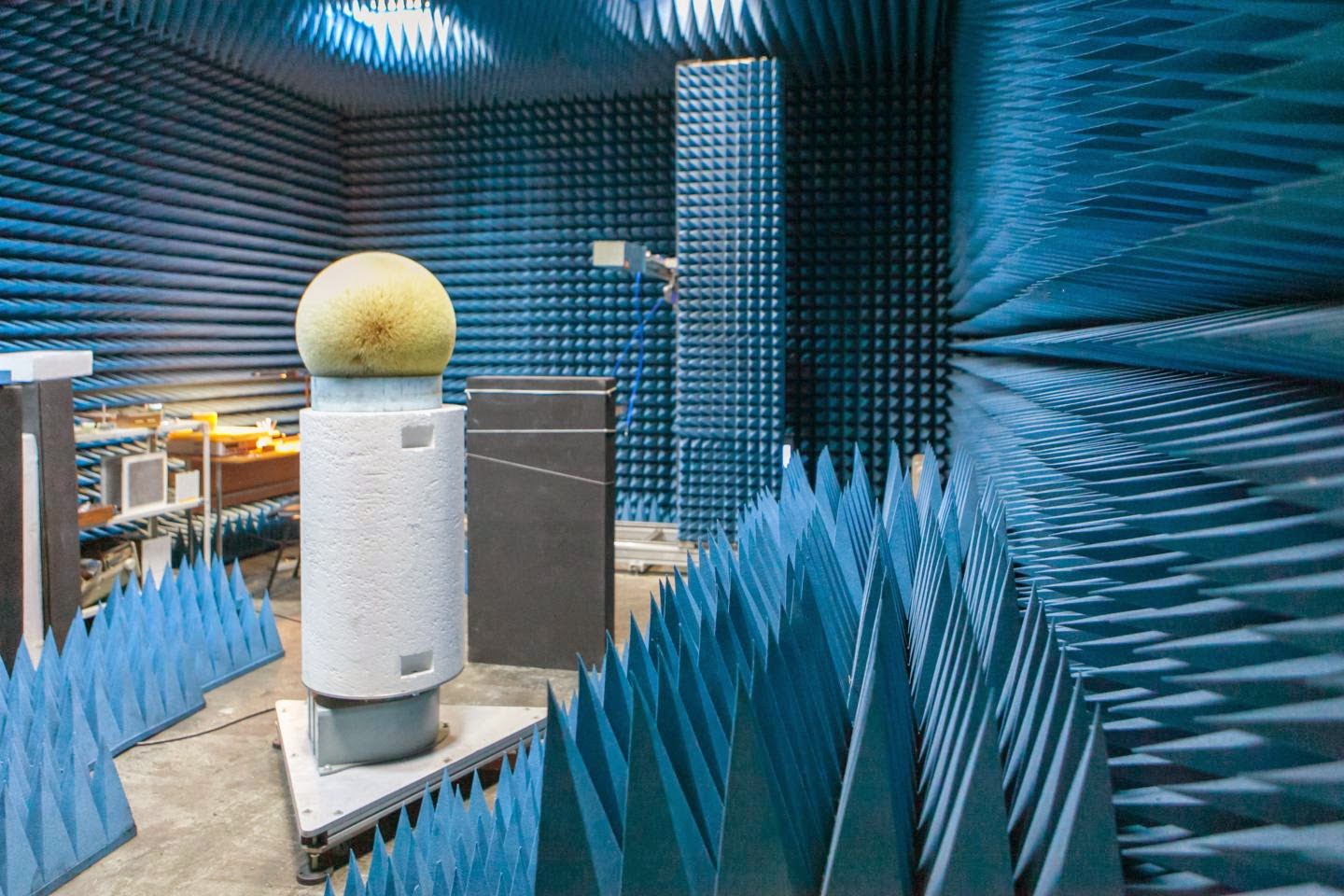

Invisible objects created without meta-material cloaking

April 17th, 2015

By ITMO University.

The scientists studied light scattering from a glass cylinder filled with water. In essence, such an experiment represents a two-dimensional analog of the classical problem of scattering from a homogeneous sphere (Mie scattering), the solution to which has been known for almost a century. However, this classical problem contains unusual physics that manifests itself when materials with high values of refractive index are involved. In the study, the scientists used ordinary water, whose refractive index can be regulated by changing its temperature.

As it turned out, a high refractive index is associated with two scattering mechanisms: resonant scattering, which is related to the localization of light inside the cylinder, and non-resonant scattering, which is characterized by a smooth dependence on the wave frequency. The interaction between these mechanisms gives rise to what are known as Fano resonances. The researchers discovered that at certain frequencies, waves scattered via the resonant and non-resonant mechanisms have opposite phases and cancel each other out, thus making the object invisible.
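The cancellation can be illustrated with the textbook Fano lineshape, in which the scattered intensity is proportional to (q + ε)² / (1 + ε²). Here ε is a dimensionless detuning from the resonance and q is the Fano asymmetry parameter. The sketch below is a generic illustration with an arbitrarily chosen q = 1, not the group's calculation for the water-filled cylinder.

```python
def fano_lineshape(eps: float, q: float) -> float:
    """Normalized Fano profile (q + eps)^2 / (1 + eps^2).

    eps: dimensionless detuning from the resonance frequency.
    q:   Fano asymmetry parameter (ratio of resonant to non-resonant response).
    """
    return (q + eps) ** 2 / (1.0 + eps ** 2)


# Illustrative value only; the article does not give q for the experiment.
q = 1.0

# Scan the detuning from -5 to +5 in steps of 0.1.
detunings = [x / 10 for x in range(-50, 51)]
profile = [fano_lineshape(eps, q) for eps in detunings]

# Find where the scattered intensity is smallest.
min_index = min(range(len(profile)), key=profile.__getitem__)
eps_at_min = detunings[min_index]

print(f"scattering minimum at eps = {eps_at_min}, intensity = {profile[min_index]}")
```

The profile drops to exactly zero at ε = −q, the detuning at which the resonant and non-resonant contributions are equal in magnitude and opposite in phase.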

The work led to the first experimental observation of an invisible homogeneous object by means of scattering cancellation. Importantly, the developed technique made it possible to switch from visibility to invisibility regimes at the same frequency of 1.9 GHz by simply changing the temperature of the water in the cylinder from 90 °C to 50 °C.

“Our theoretical calculations were successfully tested in microwave experiments. What matters is that the invisibility idea we implemented in our work can be applied to other electromagnetic wave ranges, including to the visible range. Materials with corresponding refractive index are either long known or can be developed at will,” said Mikhail Rybin, first author of the paper and senior researcher at the Metamaterials Laboratory at ITMO University.

Credit: Scientific Reports

The subject of invisibility came into prominence with the development of metamaterials, artificially designed structures with optical properties that are not encountered in nature. Metamaterials are capable of changing the direction of light in exotic ways, including making light curve around the cloaked object. Nevertheless, coating layers based on metamaterials are extremely hard to fabricate and are not compatible with many other invisibility ideas. The method developed by the group is based on a new understanding of scattering processes and surpasses existing methods in simplicity and cost-effectiveness.

Microwave weapon can neutralize aircraft electrical systems

April 5th, 2015

By S.A.R.A.

Scientific Applications & Research Associates, Inc. (SARA) (Huntington Beach, CA) has developed a compact, lightweight, steerable, high-power microwave weapon. The aimable weapon uses a unique antenna to produce a highly focused beam of energy that strikes a target and neutralizes electrically driven systems such as those found in missiles, airplanes and automobiles, with a low impact on human life.

The antenna can be carried on a truck or aircraft along with a feed mechanism. The antennas can fold into a storage configuration for rapid transport and then expand into an upright stance to shoot a pencil-thin beam of high-energy microwave radiation at a moving target.

The antenna shoots high-power microwave energy quickly and in a controllable fashion, without the use of traditional large and cumbersome vacuum waveguides. The high-energy pulsed microwave beam can be used against a moving target to neutralize its electrical control systems without simultaneously exposing the pilot or other human cargo to unhealthy radiation.

The microwave antenna is unmatched in the rapidity and swath of its beam steering and can be used for directed energy weapons (DEW) engagements with moving targets both on the ground and in the air.

The highly steerable antenna is the first of its kind to use twist-reflector and transreflector technology in a high-power microwave (HPM) capable configuration.

The power for the microwave beam is first generated by an internal combustion or jet engine that feeds a pulse-forming network or Marx bank, which in turn drives a high-power microwave (HPM) generator, such as a super-reltron, relativistic magnetron, virtual cathode oscillator, or relativistic klystron, to produce the microwave radiation that is channeled through the waveguide to the feed horn.

The research and development efforts that produced the technology were funded by the US Army Research Laboratory (ARL). In May 2003, the weapon earned US Patent # 6,559,807.

In the patent, inventor Robert A. Koslover says it was determined that a high-peak-power microwave transmission, on the order of more than 100 megawatts (MW) of power, confined to a very tight beam (“pencil beam”) using an L-band antenna, lightweight (less than 250 kg) and compact enough to be deployed on a land vehicle or an air platform, could find wide use in intercepting a target and degrading or neutralizing the electronic control monitoring systems and directional control systems in such targets as flying missiles and piloted aircraft as a means of rendering them ineffective without injuring human life.

In other situations, civil authorities may find use for the device to neutralize the electrical system and computer-driven controls of an automobile or other motor vehicle thereby eliminating the need for extended car chase situations by police authorities that often result in destruction of property and severe injury or death to participants and members of the public.

Exploding head syndrome: Common in young people

April 5th, 2015

By WSU.

The study also found that more than one-third of those who had exploding head syndrome also experienced isolated sleep paralysis, a frightening experience in which one cannot move or speak when waking up. People with this condition will literally dream with their eyes wide open. The study is the largest of its kind, with 211 undergraduate students interviewed by psychologists or graduate students trained in recognizing the symptoms of exploding head syndrome and isolated sleep paralysis. The results appear online in the Journal of Sleep Research. Based on smaller, less rigorous studies, some researchers have hypothesized that exploding head syndrome is a rare condition found mostly in people older than 50.

“I didn’t believe the clinical lore that it would only occur in people in their 50s,” said Sharpless. “That didn’t make a lot of biological sense to me.” He started to think exploding head syndrome was more widespread last year when he reviewed the scientific literature on the disorder for the journal Sleep Medicine Reviews. In that report he concluded the disorder was a largely overlooked phenomenon that warranted a deeper look. The disorder tends to come as one is falling asleep. Researchers suspect it stems from problems with the brain shutting down. When the brain goes to sleep, it’s like a computer shutting down, with motor, auditory and visual neurons turning off in stages. But instead of shutting down properly, the auditory neurons are thought to fire all at once, Sharpless said.

“That’s why you get these crazy-loud noises that you can’t explain, and they’re not actual noises in your environment,” he said. The same part of the brain, the brainstem’s reticular formation, appears to be involved in isolated sleep paralysis as well, which could account for why some people experience both maladies, he said.

They can be extremely frightening. Exploding head syndrome can last just a few seconds but can lead some people to believe that they’re having a seizure or a subarachnoid hemorrhage, said Sharpless. “Some people have worked these scary experiences into conspiracy theories and mistakenly believe the episodes are caused by some sort of directed-energy weapon,” he said.

In fact, both exploding head syndrome and isolated sleep paralysis have been misinterpreted as unnatural events. The waking dreams of sleep paralysis can make for convincing hallucinations, which might account for why some people in the Middle Ages would be convinced they saw demons or witches. “In 21st century America, you have aliens,” said Sharpless. “For this scary noise you hear at night when there’s nothing going on in your environment, well, it might be the government messing with you.”

Some people are so put off by the experience that they don’t even tell their spouse, he said. “They may think they’re going crazy and they don’t know that a good chunk of the population has had the exact same thing,” he said. Neither disorder has a well-established treatment yet, though researchers have tried different drugs that may be promising, said Sharpless, co-author of the upcoming book, “Sleep Paralysis: Historical, Psychological, and Medical Perspectives.”

Robot model for infant learning shows bodily posture may affect memory

March 26th, 2015

By Indiana University.

An Indiana University cognitive scientist and collaborators have found that posture is critical in the early stages of acquiring new knowledge.

The study, conducted by Linda Smith, a professor in the IU Bloomington College of Arts and Sciences’ Department of Psychological and Brain Sciences, in collaboration with a roboticist from England and a developmental psychologist from the University of Wisconsin-Madison, offers a new approach to studying the way “objects of cognition,” such as words or memories of physical objects, are tied to the position of the body.

A robot is taught to distinguish between two objects as part of the research on the effect of body posture on infant learning.

Credit: Photo by University of Plymouth

“This study shows that the body plays a role in early object name learning, and how toddlers use the body’s position in space to connect ideas,” Smith said. “The creation of a robot model for infant learning has far-reaching implications for how the brains of young people work.”

The research, “Posture Affects How Robots and Infants Map Words to Objects,” was published today in PLOS ONE, an open-access, peer-reviewed online journal.

Using both robots and infants, researchers examined the role bodily position played in the brain’s ability to “map” names to objects. They found that consistency of the body’s posture and spatial relationship to an object as an object’s name was shown and spoken aloud was critical to successfully connecting the name to the object.

The new insights stem from the field of epigenetic robotics, in which researchers are working to create robots that learn and develop like children, through interaction with their environment. Morse, the roboticist on the team, applied Smith’s earlier research to creating a learning robot in which cognitive processes emerge from the physical constraints and capacities of its body.

“A number of studies suggest that memory is tightly tied to the location of an object,” Smith said. “None, however, have shown that bodily position plays a role or that, if you shift your body, you could forget.”

To reach these conclusions, the study’s authors conducted a series of experiments, first with Morse’s robots, which were programmed to map the name of an object to the object through shared association with a posture, then with children age 12 to 18 months.

In one experiment, a robot was first shown an object situated to its left, then a different object to the right; the process was then repeated several times to create an association between the objects and the robot’s two postures. Then, with no objects in place, the robot’s view was directed to the location of the object on the left, and the robot was given a command that elicited the same posture as the earlier viewing of the object. Next, the two objects were presented in the same locations without naming, after which the two objects were presented in different locations as their names were repeated. This caused the robot to turn and reach toward the object now associated with the name.

The robot consistently indicated a connection between the object and its name during 20 repeats of the experiment. But in subsequent tests where the target and another object were placed in both locations — so as to not be associated with a specific posture — the robot failed to recognize the target object. When replicated with infants, there were only slight differences in the results: The infant data, like that of the robot, implicated the role of posture in connecting names to objects.

“These experiments may provide a new way to investigate the way cognition is connected to the body, as well as new evidence that mental entities, such as thoughts, words and representations of objects, which seem to have no spatial or bodily components, first take shape through spatial relationship of the body within the surrounding world,” Smith said.

Smith’s research has long focused on creating a framework for understanding cognition that differs from the traditional view, which separates physical actions such as handling objects or walking up a hill from cognitive actions such as learning language or playing chess.

Additional research is needed to determine whether this study’s results apply to infants only, or more broadly to the relationship between the brain, the body and memory, she added. The study may also provide new approaches to research on developmental disorders in which difficulties with motor coordination and cognitive development are well-documented but poorly understood.

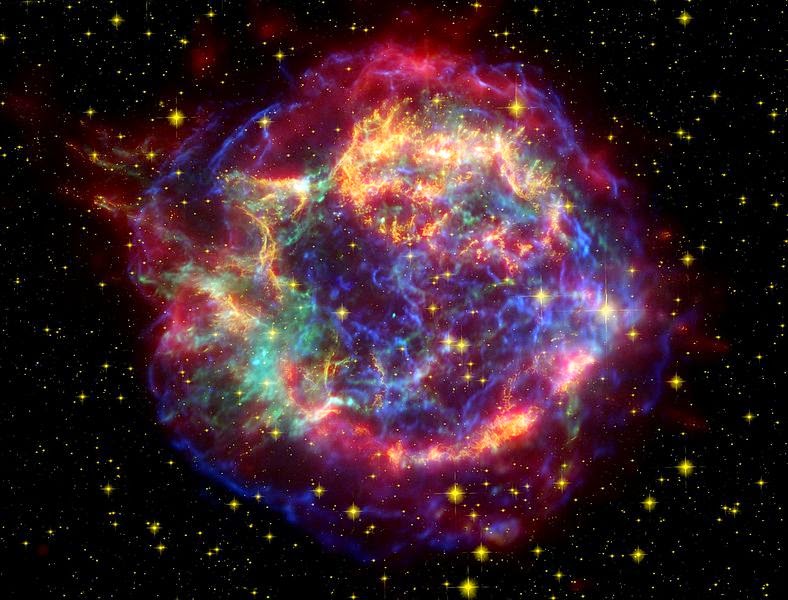

Milky way’s center harbors supernova ‘dust factory’

March 26th, 2015

By Alton Parrish.

“Dust itself is very important because it’s the stuff that forms stars and planets, like the sun and Earth, respectively, so to know where it comes from is an important question,” said lead author Ryan Lau, Cornell postdoctoral associate for astronomy, in research published March 19 in Science Express. “Our work strongly reinforces the theory that supernovae are producing the dust seen in galaxies of the early universe,” he said.

Lau explains that one of astronomy’s big questions is why galaxies – forming as recently as 1 billion years after the Big Bang – contain so much dust. The leading theory is that supernovae – stars that explode at the end of their lives – contain large amounts of metal-enriched material that, in turn, harbors key ingredients of dust, like silicon, iron and carbon.

The astronomers examined Sagittarius A East, a 10,000-year-old supernova remnant near the center of our galaxy. Lau said that when a supernova explodes, the materials in its center expand and form dust. This has been observed in several young supernova remnants – such as the famed SN1987A and Cassiopeia A.

Credit: ESA/Hubble

The astronomers captured the observations via FORCAST (the Faint Object Infrared Camera for the SOFIA Telescope) aboard SOFIA (the Stratospheric Observatory for Infrared Astronomy), a modified Boeing 747 and a joint project of NASA, the German Aerospace Center and the Universities Space Research Association. It is the world’s largest airborne astronomical observatory. Currently, no space-based telescope can observe at far-infrared wavelengths, and ground-based telescopes are unable to observe light at these wavelengths due to the Earth’s atmosphere.

Joining Lau on this research, “Old Supernova Dust Factory Revealed at the Galactic Center,” are co-authors Terry Herter, Cornell professor of astronomy and principal scientific investigator on FORCAST; Mark Morris, University of California, Los Angeles; Zhiyuan Li, Nanjing University, China; and Joe Adams, NASA Ames Research Center.

Mars: Aurora and mysterious dust cloud

March 21st, 2015

By Alton Parrish.

NASA’s Mars Atmosphere and Volatile Evolution (MAVEN) spacecraft has observed two unexpected phenomena in the Martian atmosphere: an unexplained high-altitude dust cloud and aurora that reaches deep into the Martian atmosphere.

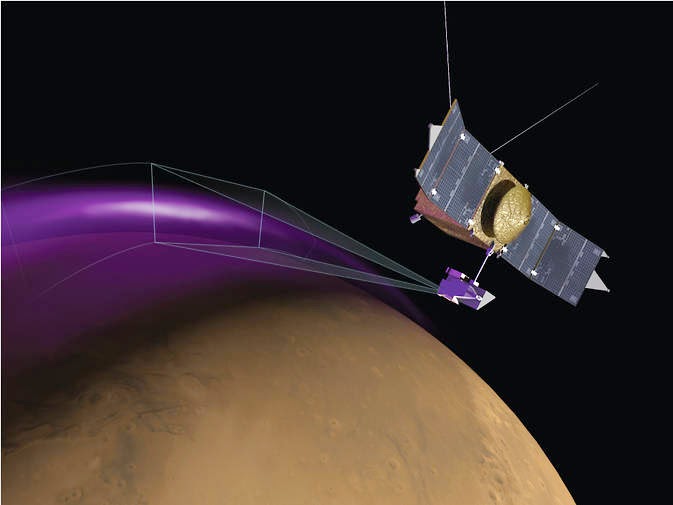

Artist’s conception of MAVEN’s Imaging UltraViolet Spectrograph (IUVS) observing the “Christmas Lights Aurora” on Mars. MAVEN observations show that aurora on Mars is similar to Earth’s “Northern Lights” but has a different origin.

“If the dust originates from the atmosphere, this suggests we are missing some fundamental process in the Martian atmosphere,” said Laila Andersson of the University of Colorado’s Laboratory for Atmospheric and Space Physics (CU LASP), Boulder, Colorado.

The cloud was detected by the spacecraft’s Langmuir Probe and Waves (LPW) instrument, and has been present the whole time MAVEN has been in operation. It is unknown if the cloud is a temporary phenomenon or something long lasting. The cloud density is greatest at lower altitudes. However, even in the densest areas it is still very thin. So far, no indication of its presence has been seen in observations from any of the other MAVEN instruments.

Possible sources for the observed dust include dust wafted up from the atmosphere; dust coming from Phobos and Deimos, the two moons of Mars; dust moving in the solar wind away from the sun; or debris orbiting the sun from comets. However, no known process on Mars can explain the appearance of dust in the observed locations from any of these sources.

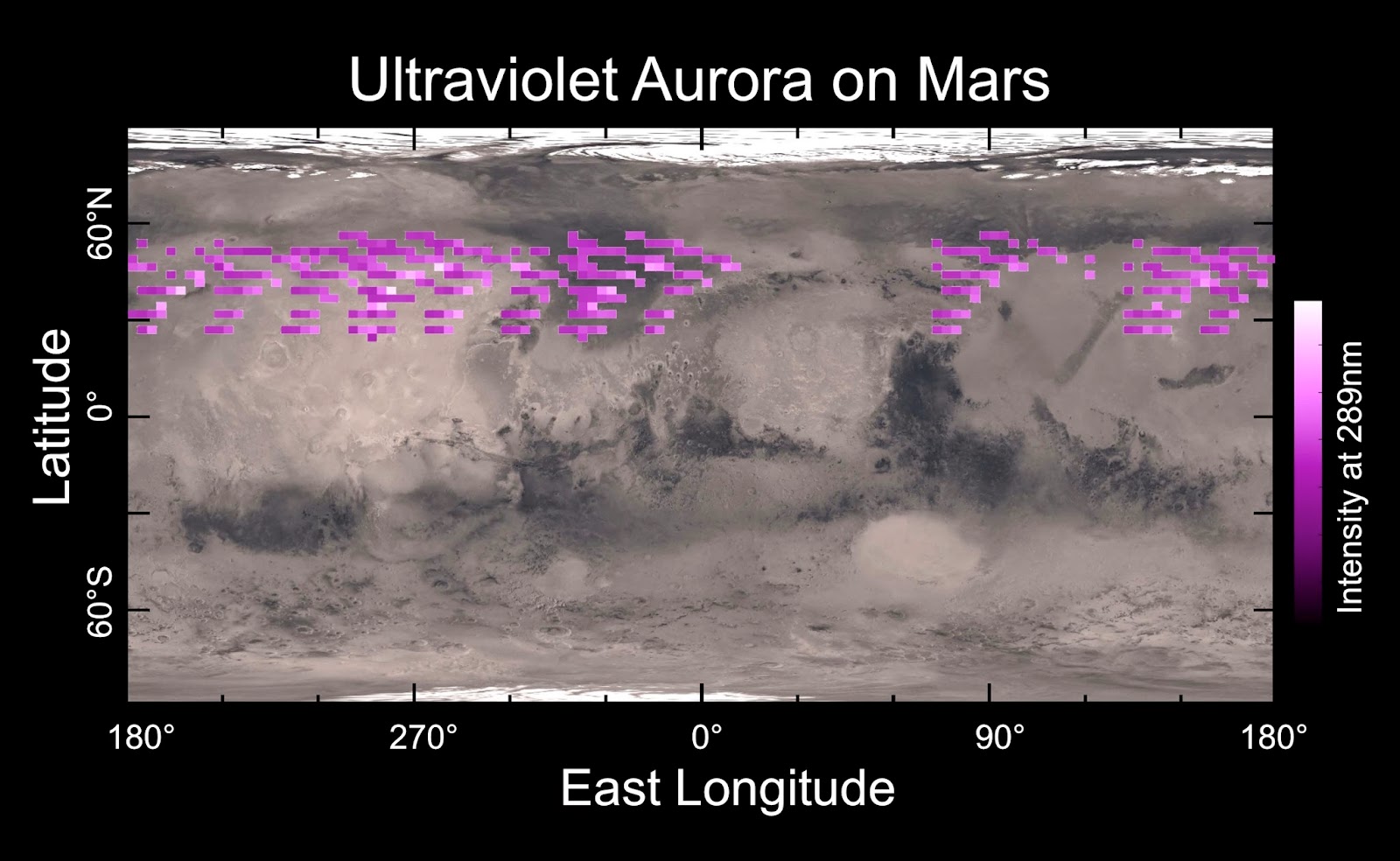

A map of IUVS’s auroral detections in December 2014 overlaid on Mars’ surface. The map shows that the aurora was widespread in the northern hemisphere, not tied to any geographic location. The aurora was detected in all observations during a 5-day period.

Image Credit: University of Colorado

MAVEN’s Imaging Ultraviolet Spectrograph (IUVS) observed what scientists have named “Christmas lights.” For five days just before Dec. 25, MAVEN saw a bright ultraviolet auroral glow spanning Mars’ northern hemisphere. Aurora, known on Earth as northern or southern lights, are caused by energetic particles like electrons crashing down into the atmosphere and causing the gas to glow.

“What’s especially surprising about the aurora we saw is how deep in the atmosphere it occurs – much deeper than at Earth or elsewhere on Mars,” said Arnaud Stiepen, IUVS team member at the University of Colorado. “The electrons producing it must be really energetic.”

The source of the energetic particles appears to be the sun. MAVEN’s Solar Energetic Particle instrument detected a huge surge in energetic electrons at the onset of the aurora. Billions of years ago, Mars lost a global protective magnetic field like Earth has, so solar particles can directly strike the atmosphere. The electrons producing the aurora have about 100 times more energy than you get from a spark of house current, so they can penetrate deeply in the atmosphere.

The findings are being presented at the 46th Lunar and Planetary Science Conference in The Woodlands, Texas.

MAVEN was launched to Mars on Nov. 18, 2013, to help solve the mystery of how the Red Planet lost most of its atmosphere and much of its water. The spacecraft arrived at Mars on Sept. 21, 2014, and is four months into its one-Earth-year primary mission.

“The MAVEN science instruments all are performing nominally, and the data coming out of the mission are excellent,” said Bruce Jakosky of CU LASP, Principal Investigator for the mission.

MAVEN is part of the agency’s Mars Exploration Program, which includes the Opportunity and Curiosity rovers and the Mars Odyssey and Mars Reconnaissance Orbiter spacecraft currently orbiting the planet.

NASA’s Mars Exploration Program seeks to characterize and understand Mars as a dynamic system, including its present and past environment, climate cycles, geology and biological potential. In parallel, NASA is developing the human spaceflight capabilities needed for its journey to Mars or a future round-trip mission to the Red Planet in the 2030s.

MAVEN’s principal investigator is based at the University of Colorado’s Laboratory for Atmospheric and Space Physics, and NASA’s Goddard Space Flight Center in Greenbelt, Maryland, manages the MAVEN project. Partner institutions include Lockheed Martin, the University of California at Berkeley, and NASA’s Jet Propulsion Laboratory.

Robots are the future of farming

March 21st, 2015

By Alton Parrish.

Scientists and industry representatives at the forefront of the next agricultural machine age met at Queensland University of Technology (QUT) last week for the first scientific workshop of the National University Network for Precision Agriculture Systems (March 10-12).

QUT robotics Professor Tristan Perez said since the 1960s, increased use of agrochemicals, significant advances in crop and animal genetics, agricultural mechanisation and improved management practices have been at the core of increased productivity and would continue to provide future incremental improvements.

Professor Tristan Perez says large, expensive, single tractors could be replaced with a team of more cost effective robots.

Credit: QUT

“We are starting to see automation in agriculture for single processes such as animal and crop drone remote monitoring, robotic weed management, autonomous irrigation.

“However, we envisage that the integration of these technologies together with a systems view of the farming enterprise will trigger the next wave of productive innovation in agriculture.

“Farmers will have to access data and technologies from which to extract information that will assist in management decisions.”

Professor Perez said the agricultural landscape would rapidly change due to low-cost and portable ICT infrastructure and robotics.

“The farm of the future will involve multiple lightweight, small, autonomous, energy efficient machines (AgBots) operating collectively to weed, fertilise and control pests and diseases, while collecting vast amounts of data to enable better management decision making,” Professor Perez said.

“There is enormous potential for AgBots to be combined with sensor networks and drones to provide a farmer with large amounts of data, which can then be combined with mathematical models and novel statistical techniques (big data analytics) to extract key information for management decisions, not only on when to apply herbicides, pesticides and fertilizers but how much to use.”

Professor Perez said weed and pest management in crops was a serious problem for farmers, and that large, expensive, single tractors could be replaced with a team of more cost-effective robots that could weed on the spot and perform other farming operations 24 hours a day.

He said there was no reason why agricultural robots would be confined to broadacre and horticulture farming crops—AgBots could also be of great value within the livestock industry.

He said that with the world population projected to increase from seven billion plus in 2015 to nearly nine billion by 2050, it was essential to find ways to increase yield, and maintaining the status quo was no longer an option.

“Farmers are keen to explore this new agricultural frontier which has the potential to ensure their long-term sustainability by enabling them to deliver high-premium produce,” Professor Perez said.

QUT’s AgBot II prototype is 3 metres wide, 2 metres long and 1.4 metres high, with all dimensions adjustable. Equipped with cameras, sensors and software, AgBot is designed to work in autonomous groups to navigate, detect and classify weeds and manage them either chemically or mechanically, as well as apply fertilizer for site-specific crop management. AgBot II is currently static but will begin field trials in June 2015.

He said researchers from nine Australian universities as well as farmers and industry representatives attended the National University Network for Precision Agriculture Systems Science-Industry workshop at QUT from 10 to 12 March. At this workshop, key research and development projects were discussed that could serve as a springboard for agriculture into the digital age.

How chameleons change colors

March 11th, 2015

By University of Geneva.

Many chameleons have the remarkable ability to exhibit complex and rapid color changes during social interactions. A collaboration of scientists within the Sections of Biology and Physics of the Faculty of Science from the University of Geneva (UNIGE), Switzerland, unveils the mechanisms that regulate this phenomenon.

In a study published in Nature Communications, the team led by professors Michel Milinkovitch and Dirk van der Marel demonstrates that the changes take place via the active tuning of a lattice of nanocrystals present in a superficial layer of dermal cells called iridophores.

These are male panther chameleons (Furcifer pardalis) photographed in Madagascar.

The researchers also reveal the existence of a deeper population of iridophores with larger and less ordered crystals that reflect the infrared light. The organisation of iridophores into two superimposed layers constitutes an evolutionary novelty and it allows the chameleons to rapidly shift between efficient camouflage and spectacular display, while providing passive thermal protection.

Male chameleons are popular for their ability to change colorful adornments depending on their behaviour. While the mechanisms responsible for a transformation towards a darker skin are known, those that regulate the transition from a lively color to another vivid hue remained mysterious. Some species, such as the panther chameleon, are able to carry out such a change within one or two minutes to court a female or face a competing male.

Blue, a structural color of the chameleon

Besides brown, red and yellow pigments, chameleons and other reptiles display so-called structural colors. “These colors are generated without pigments, via a physical phenomenon of optical interference. They result from interactions between certain wavelengths and nanoscopic structures, such as tiny crystals present in the skin of the reptiles”, says Michel Milinkovitch, professor at the Department of Genetics and Evolution at UNIGE. These nanocrystals are arranged in layers that alternate with cytoplasm, within cells called iridophores. The structure thus formed allows a selective reflection of certain wavelengths, which contributes to the vivid colors of numerous reptiles.

To determine how the transition from one flashy color to another one is carried out in the panther chameleon, the researchers of two laboratories at UNIGE worked hand in hand, combining their expertise in both quantum physics and in evolutionary biology. “We discovered that the animal changes its colors via the active tuning of a lattice of nanocrystals. When the chameleon is calm, the latter are organised into a dense network and reflect the blue wavelengths. In contrast, when excited, it loosens its lattice of nanocrystals, which allows the reflection of other colors, such as yellows or reds”, explain the physicist Jérémie Teyssier and the biologist Suzanne Saenko, co-first authors of the article. This constitutes a unique example of an auto-organised intracellular optical system controlled by the chameleon.

Crystals as a heat shield

The scientists also demonstrated the existence of a second deeper layer of iridophores. “These cells, which contain larger and less ordered crystals, reflect a substantial proportion of the infrared wavelengths”, states Michel Milinkovitch. This forms an excellent protection against the thermal effects of high exposure to solar radiation in low-latitude regions.

The organisation of iridophores in two superimposed layers constitutes an evolutionary novelty: it allows the chameleons to rapidly shift between efficient camouflage and spectacular display, while providing passive thermal protection.

In their future research, the scientists will explore the mechanisms that explain the development of an ordered nanocrystal lattice within iridophores, as well as the molecular and cellular mechanisms that allow chameleons to control the geometry of this lattice.

The blind can read with new finger mounted device

March 11th, 2015

By MIT.

Researchers at the MIT Media Laboratory have built a prototype of a finger-mounted device with a built-in camera that converts written text into audio for visually impaired users. The device provides feedback — either tactile or audible — that guides the user’s finger along a line of text, and the system generates the corresponding audio in real time.

Researchers at the MIT Media Lab have created a finger-worn device with a built-in camera that can convert text to speech for the visually impaired.

“You really need to have a tight coupling between what the person hears and where the fingertip is,” says Roy Shilkrot, an MIT graduate student in media arts and sciences and, together with Media Lab postdoc Jochen Huber, lead author on a new paper describing the device. “For visually impaired users, this is a translation. It’s something that translates whatever the finger is ‘seeing’ to audio. They really need a fast, real-time feedback to maintain this connection. If it’s broken, it breaks the illusion.”

Huber will present the paper at the Association for Computing Machinery’s Computer-Human Interface conference in April. His and Shilkrot’s co-authors are Pattie Maes, the Alexander W. Dreyfoos Professor in Media Arts and Sciences at MIT; Suranga Nanayakkara, an assistant professor of engineering product development at the Singapore University of Technology and Design, who was a postdoc and later a visiting professor in Maes’ lab; and Meng Ee Wong of Nanyang Technological University in Singapore.

The paper also reports the results of a usability study conducted with vision-impaired volunteers, in which the researchers tested several variations of their device. One included two haptic motors, one on top of the finger and the other beneath it. The vibration of the motors indicated whether the subject should raise or lower the tracking finger.

Another version, without the motors, instead used audio feedback: a musical tone that increased in volume if the user’s finger began to drift away from the line of text. The researchers also tested the motors and musical tone in conjunction. There was no consensus among the subjects, however, on which types of feedback were most useful. So in ongoing work, the researchers are concentrating on audio feedback, since it allows for a smaller, lighter-weight sensor.

Bottom line

The key to the system’s real-time performance is an algorithm for processing the camera’s video feed, which Shilkrot and his colleagues developed. Each time the user positions his or her finger at the start of a new line, the algorithm makes a host of guesses about the baseline of the letters. Since most lines of text include letters whose bottoms descend below the baseline, and because skewed orientations of the finger can cause the system to confuse nearby lines, those guesses will differ. But most of them tend to cluster together, and the algorithm selects the median value of the densest cluster.

That value, in turn, constrains the guesses that the system makes with each new frame of video, as the user’s finger moves to the right, which reduces the algorithm’s computational burden.
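The baseline step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: the function names `estimate_baseline` and `constrain`, the gap threshold `max_gap`, the search `window` and the sample pixel values are all hypothetical, and "densest cluster" is taken here to mean simply the cluster with the most guesses.

```python
from statistics import median


def estimate_baseline(guesses, max_gap=2.0):
    """Return the median of the largest cluster of baseline guesses.

    guesses: candidate baseline y-positions in pixels (hypothetical units).
    max_gap: assumed threshold; sorted neighbours closer than this
             are treated as members of the same cluster.
    """
    if not guesses:
        raise ValueError("need at least one baseline guess")
    ordered = sorted(guesses)
    clusters = [[ordered[0]]]
    for y in ordered[1:]:
        if y - clusters[-1][-1] <= max_gap:
            clusters[-1].append(y)   # close enough: same cluster
        else:
            clusters.append([y])     # gap too large: start a new cluster
    densest = max(clusters, key=len)
    return median(densest)


def constrain(guesses, baseline, window=5.0):
    """Keep only the guesses within an assumed window of the last estimate."""
    return [y for y in guesses if abs(y - baseline) <= window]


# Made-up guesses: most agree, two come from a nearby line, one from a descender.
guesses = [101.0, 100.5, 99.8, 100.2, 112.0, 111.5, 87.0, 100.9]
baseline = estimate_baseline(guesses)

# On the next video frame, guesses far from the estimate are discarded early.
next_frame = constrain([87.0, 100.2, 104.0, 112.0], baseline)
```

Discarding distant guesses before clustering is one plausible way the earlier estimate could reduce the work done on each new frame.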

Given its estimate of the baseline of the text, the algorithm also tracks each individual word as it slides past the camera. When it recognizes that a word is positioned near the center of the camera’s field of view — which reduces distortion — it crops just that word out of the image. The baseline estimate also allows the algorithm to realign the word, compensating for distortion caused by oddball camera angles, before passing it to open-source software that recognizes the characters and translates recognized words into synthesized speech.

In the work reported in the new paper, the algorithms were executed on a laptop connected to the finger-mounted devices. But in ongoing work, Marcel Polanco, a master’s student in computer science and engineering, and Michael Chang, an undergraduate computer science major participating in the project through MIT’s Undergraduate Research Opportunities Program, are developing a version of the software that runs on an Android phone, to make the system more portable.

The researchers have also discovered that their device may have broader applications than they’d initially realized. “Since we started working on that, it really became obvious to us that anyone who needs help with reading can benefit from this,” Shilkrot says. “We got many emails and requests from organizations, but also just parents of children with dyslexia, for instance.”

“It’s a good idea to use the finger in place of eye motion, because fingers are, like the eye, capable of quickly moving with intention in x and y and can scan things quickly,” says George Stetten, a physician and engineer with joint appointments at Carnegie Mellon’s Robotics Institute and the University of Pittsburgh’s Bioengineering Department, who is developing a finger-mounted device that gives visually impaired users information about distant objects. “I am very impressed with what they do.”

Arsenic in baby formula, breast feeding safer

February 28th, 2015

By Dartmouth College.

Baby formula poses higher arsenic risk to newborns than breast milk, shows Dartmouth study

In the first U.S. study of urinary arsenic in babies, Dartmouth College researchers found that formula-fed infants had higher arsenic levels than breast-fed infants, and that breast milk itself contained very low arsenic concentrations.

Credit: Dartmouth College

The findings appear Feb. 23 online in the journal Environmental Health Perspectives. A PDF is available on request.

The researchers measured arsenic in home tap water, urine from 72 six-week-old infants and breast milk from nine women in New Hampshire. Urinary arsenic was 7.5 times lower for breast-fed than formula-fed infants. The highest tap water arsenic concentrations far exceeded the arsenic concentrations in powdered formulas, but for the majority of the study’s participants, both the powder and water contributed to exposure.

“This study’s results highlight that breastfeeding can reduce arsenic exposure even at the relatively low levels of arsenic typically experienced in the United States,” says lead author Professor Kathryn Cottingham. “This is an important public health benefit of breastfeeding.”

Arsenic occurs naturally in bedrock and is a common global contaminant of well water. It causes cancers and other diseases, and early-life exposure has been associated with increased fetal mortality, decreased birth weight and diminished cognitive function. The Environmental Protection Agency has set a maximum contaminant level for public drinking water, but private well water is not subject to regulation and is the primary water source in many rural parts of the United States.

“We advise families with private wells to have their tap water tested for arsenic,” says senior author Professor Margaret Karagas, principal investigator at Dartmouth’s Children’s Environmental Health and Disease Prevention Research Center. Added study co-lead author Courtney Carignan: “We predict that population-wide arsenic exposure will increase during the second part of the first year of life as the prevalence of formula-feeding increases.”

Radio chip for the ‘internet of things’

February 28th, 2015

By MIT.

A circuit developed at MIT that reduces power leakage when transmitters are idle could greatly extend battery life.

At this year’s Consumer Electronics Show in Las Vegas, the big theme was the “Internet of things” — the idea that everything in the human environment, from kitchen appliances to industrial equipment, could be equipped with sensors and processors that can exchange data, helping with maintenance and the coordination of tasks.

Realizing that vision, however, requires transmitters that are powerful enough to broadcast to devices dozens of yards away but energy-efficient enough to last for months — or even to harvest energy from heat or mechanical vibrations.

“A key challenge is designing these circuits with extremely low standby power, because most of these devices are just sitting idling, waiting for some event to trigger a communication,” explains Anantha Chandrakasan, the Joseph F. and Nancy P. Keithley Professor in Electrical Engineering at MIT. “When it’s on, you want to be as efficient as possible, and when it’s off, you want to really cut off the off-state power, the leakage power.”

This week, at the Institute of Electrical and Electronics Engineers’ International Solid-State Circuits Conference, Chandrakasan’s group will present a new transmitter design that reduces off-state leakage 100-fold. At the same time, it provides adequate power for Bluetooth transmission, or for the even longer-range 802.15.4 wireless-communication protocol.

“The trick is that we borrow techniques that we use to reduce the leakage power in digital circuits,” Chandrakasan explains. The basic element of a digital circuit is a transistor, in which two electrical leads are connected by a semiconducting material, such as silicon. In their native states, semiconductors are not particularly good conductors. But in a transistor, the semiconductor has a second wire sitting on top of it, which runs perpendicularly to the electrical leads. Sending a positive charge through this wire — known as the gate — draws electrons toward it. The concentration of electrons creates a bridge that current can cross between the leads.

But while semiconductors are not naturally very good conductors, neither are they perfect insulators. Even when no charge is applied to the gate, some current still leaks across the transistor. It’s not much, but over time, it can make a big difference in the battery life of a device that spends most of its time sitting idle.

Going negative

Chandrakasan — along with Arun Paidimarri, an MIT graduate student in electrical engineering and computer science and first author on the paper, and Nathan Ickes, a research scientist in Chandrakasan’s lab — reduces the leakage by applying a negative charge to the gate when the transmitter is idle. That drives electrons away from the electrical leads, making the semiconductor a much better insulator.

Of course, that strategy works only if generating the negative charge consumes less energy than the circuit would otherwise lose to leakage. In tests conducted on a prototype chip fabricated through the Taiwan Semiconductor Manufacturing Company’s research program, the MIT researchers found that their circuit spent only 20 picowatts of power to save 10,000 picowatts in leakage.
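The trade-off in those two figures can be checked with simple arithmetic. The short Python sketch below uses only the numbers quoted above; the variable names are illustrative.

```python
# Figures quoted in the article, in picowatts.
PUMP_COST_PW = 20          # power spent generating the negative charge
LEAKAGE_SAVED_PW = 10_000  # leakage power avoided while idle

# Net benefit: leakage avoided minus the cost of avoiding it.
net_saving_pw = LEAKAGE_SAVED_PW - PUMP_COST_PW

# How many picowatts are saved for each picowatt spent.
payback_ratio = LEAKAGE_SAVED_PW / PUMP_COST_PW

print(f"net saving: {net_saving_pw} pW, payback: {payback_ratio:.0f}x")
```

On these figures the charge pump costs a small fraction of what it saves, which is the condition the strategy depends on.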

To generate the negative charge efficiently, the MIT researchers use a circuit known as a charge pump, which is a small network of capacitors — electronic components that can store charge — and switches. When the charge pump is exposed to the voltage that drives the chip, charge builds up in one of the capacitors. Throwing one of the switches connects the positive end of the capacitor to the ground, causing a current to flow out the other end. This process is repeated over and over. The only real power drain comes from throwing the switch, which happens about 15 times a second.

Turned on

To make the transmitter more efficient when it’s active, the researchers adopted techniques that have long been a feature of work in Chandrakasan’s group. Ordinarily, the frequency at which a transmitter can broadcast is a function of its voltage. But the MIT researchers decomposed the problem of generating an electromagnetic signal into discrete steps, only some of which require higher voltages. For those steps, the circuit uses capacitors and inductors to increase voltage locally. That keeps the overall voltage of the circuit down, while still enabling high-frequency transmissions.

What those efficiencies mean for battery life depends on how frequently the transmitter is operational. But if it can get away with broadcasting only every hour or so, the researchers’ circuit can reduce power consumption 100-fold.

Historic Indian sword was masterfully crafted

February 21st, 2015

By Alton Parrish.

The master craftsmanship behind Indian swords was highlighted when scientists and conservationists from Italy and the UK joined forces to study a curved single-edged sword called a shamsheer. The study, led by Eliza Barzagli of the Institute for Complex Systems and the University of Florence in Italy, is published in Springer’s journal Applied Physics A – Materials Science & Processing.

This is a 75-centimeter-long shamsheer from the late 18th or early 19th century made in India (Wallace Collection, London).

Credit: Dr. Alan Williams/Wallace Collection

The 75-centimeter-long sword from the Wallace Collection in London was made in India in the late eighteenth or early nineteenth century. The design is of Persian origin, from where it spread across Asia and eventually gave rise to a family of similar weapons called scimitars being forged in various Southeast Asian countries.

Two different approaches were used to examine the shamsheer: the classical one (metallography) and a non-destructive technique (neutron diffraction). This allowed the researchers to test the differences and complementarities of the two techniques.

The sword in question first underwent metallographic tests at the laboratories of the Wallace Collection to ascertain its composition. Samples to be viewed under the microscope were collected from already damaged sections of the weapon. The sword was then sent to the ISIS pulsed spallation neutron source at the Rutherford Appleton Laboratory in the UK. Two non-invasive neutron diffraction techniques were used to shed further light on the processes and materials behind its forging.

“Ancient objects are scarce, and the most interesting ones are usually in an excellent state of conservation. Because it is unthinkable to apply techniques with a destructive approach, neutron diffraction techniques provide an ideal solution to characterize archaeological specimens made from metal when we cannot or do not want to sample the object,” said Barzagli, explaining why different methods were used.

It was established that the steel used is quite pure. Its high carbon content of at least one percent shows it is made of wootz steel. This type of crucible steel was historically used in India and Central Asia to make high-quality swords and other prestige objects. Its band-like pattern is caused when a mixture of iron and carbon crystallizes into cementite. This forms when craftsmen allow cast pieces of metal (called ingots) to cool down very slowly before forging them carefully at low temperatures.

Barzagli’s team reckons that the craftsman of this particular sword allowed the blade to cool in the air, rather than plunging it into a liquid of some sort. Results explaining the item’s composition also lead the researchers to presume that the particular sword was probably used in battle.

Craftsmen often enhanced the characteristic “watered silk” pattern of wootz steel by doing micro-etching on the surface. Barzagli explains that through overcleaning some of these original ‘watered’ surfaces have since been obscured, or removed entirely. “A non-destructive method able to identify which of the shiny surface blades are actually of wootz steel is very welcome from a conservative point of view,” she added.

Got the marijuana munchies? How the brain flips the hunger switch

February 21st, 2015

By Yale University.

Lead author Tamas Horvath and his colleagues set out to monitor the brain circuitry that promotes eating by selectively manipulating the cellular pathway that mediates marijuana’s action on the brain, using transgenic mice.

Image via Everything About Weed

“It’s like pressing a car’s brakes and accelerating instead,” he said. “We were surprised to find that the neurons we thought were responsible for shutting down eating, were suddenly being activated and promoting hunger, even when you are full. It fools the brain’s central feeding system.”

In addition to helping explain why you become extremely hungry when you shouldn’t be, Horvath said, the new findings could provide other benefits, like helping cancer patients who often lose their appetite during treatment.

Researchers have long known that using cannabis is associated with increased appetite even when you are full. It is also well known that activating the cannabinoid receptor 1 (CB1R) can contribute to overeating. A group of nerve cells called pro-opiomelanocortin (POMC) neurons are considered key drivers of reducing eating when full.

“This event is key to cannabinoid-receptor-driven eating,” said Horvath, who points out that the feeding behavior driven by these neurons is just one mode of action that involves CB1R signaling. “More research is needed to validate the findings.” Whether this primitive mechanism is also key to getting “high” on cannabis is another question the Horvath lab is aiming to address.

Ultrasound technology made to measure

February 11th, 2015

By Alton Parrish.

The range of uses for ultrasound is gigantic; the applied technologies are just as diverse. Researchers are now covering a wide range of applications with a new modular system: From sonar systems to medical ultrasound technologies and all the way to the high frequency range – such as for materials testing.

Modular ultrasound platform for medical applications.

Ultrasound technologies make visible what remains hidden from our naked eyes: Physicians study tissue changes in our bodies with the aid of sonography; submarines use sonar systems to get their bearings in the darkness of the deep sea; and for materials and components testing, ultrasound provides a non-destructive alternative to costly technologies that are not real-time capable. Depending on the application, a variety of technologies can be used.

“Complete systems are typically developed, based on unique customer specifications. Within this context, that only allows them to be used for a very limited area, however, the development expenditure is really quite high,” explains Steffen Tretbar of the Fraunhofer Institute for Biomedical Engineering IBMT in St. Ingbert.

Tretbar and his team therefore are taking a new path: The scientists have developed a multichannel ultrasound platform that uses a modular configuration so that it can be adapted to a set of applications that are entirely different from each other, such as real-time treatment monitoring. “This way, we can both quickly respond to customer requests for the widest array of applications, and also offer money-saving solutions,” says Tretbar.

The system uses basic components, like the main board, power supply, and control software, that always stay the same. “Then we put application specific components – the front-end boards – into this main board, like with a building-block system,” explains Tretbar.

To adapt the platform to an application, the frequency range of the ultrasound waves is the key setting to adjust. Sonar systems typically operate in the low-frequency range (from the kilohertz range to about two MHz). This admittedly does not yield a high spatial resolution in the images; however, you can “see” up to several hundred meters deep.

Medicine is different: here the physician needs images with the highest possible resolution. For this purpose, the sound waves do not have to travel long distances, but instead just penetrate a couple of centimeters into the body. For this reason, medical ultrasound typically operates within a frequency range of 2 to 20 MHz. Very high frequencies, up to the 100 MHz range, enable resolutions in the µm range, e.g., for materials testing or the imaging of small animals that is needed with the development of new technologies. The researchers developed corresponding front-end boards for all three areas.
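The trade-off between frequency and resolution follows from the wavelength, which is the speed of sound divided by the frequency and sets a rough scale for the finest detail an image can resolve. A minimal Python sketch, assuming a textbook sound speed of about 1,540 m/s in soft tissue (a value not given in the article):

```python
# Assumed textbook value for soft tissue; not taken from the article.
SPEED_OF_SOUND_TISSUE_M_S = 1540.0


def wavelength_m(frequency_hz, speed_m_s=SPEED_OF_SOUND_TISSUE_M_S):
    """Wavelength in meters of a sound wave: speed divided by frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency_hz must be positive")
    return speed_m_s / frequency_hz


# Frequencies named in the article: upper sonar limit, medical range, high end.
for label, f_hz in [("2 MHz", 2e6), ("20 MHz", 20e6), ("100 MHz", 100e6)]:
    micrometers = wavelength_m(f_hz) * 1e6
    print(f"{label:>8}: wavelength of about {micrometers:.1f} micrometers")
```

At 2 MHz the wavelength is just under a millimeter; at 100 MHz it drops to roughly 15 µm, consistent with the µm-range resolutions mentioned above. Higher frequencies are also absorbed more strongly, which is why they suit shallow targets only.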

Swift interfaces to the computer

In order to fine-tune the system, you merely need to configure the software accordingly. “We have realized very fast interfaces to the PC. This way, we can control the systems in real time, enable very swift signal processing with repeat rates in the kHz range, and simply implement new software algorithms that have been adapted for various applications,” explains Tretbar. Another advantage of the ultrasound platform: Scientists can refer back to not only classic image data, but also to the unprocessed raw signals of each element in the ultrasound array. This allows them to develop completely new technologies.

The various modules are ready for deployment; primarily corporations from the medical field have signaled their interest in such developments. To turn the technology into concrete products, the experts from IBMT offer two approaches: either they provide software interfaces to the ultrasound system that are integrated directly into the customer’s system, or they integrate the customer’s application into the software of the ultrasound system and then realize a software product for the entire application.

As part of the research platform, IBMT’s development expertise covers all technology components – from ultrasound transducers and new ultrasound technologies to complete systems and their certification or approval as a medical product.

Comments Off on Ultrasound technology made to measure

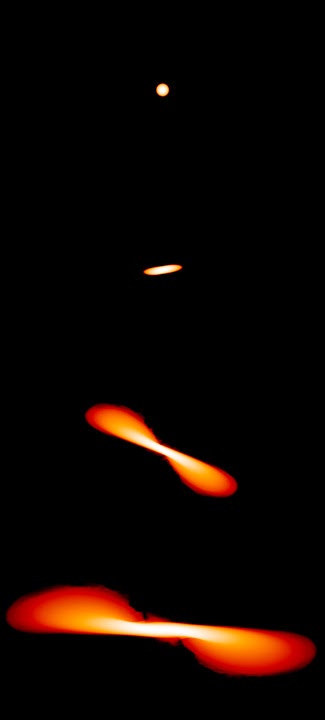

Black-hole chokes swallowing a star

February 10th, 2015

By University of Texas.

A five-year analysis of an event captured by a tiny telescope at McDonald Observatory and followed up by telescopes on the ground and in space has led astronomers to believe they witnessed a giant black hole tear apart a star. The work is published this month in The Astrophysical Journal.

On Jan. 21, 2009, the ROTSE IIIb telescope at McDonald caught the flash of an extremely bright event. The telescope’s wide field of view takes pictures of large swathes of sky every night, looking for newly exploding stars as part of the ROTSE Supernova Verification Project (RSVP). Software then compares successive photos to find bright “new” objects in the sky — transient events such as the explosion of a star or a gamma-ray burst.

With a magnitude of -22.5, this 2009 event was as bright as the “superluminous supernovae” (a new category of the brightest stellar explosions known) that the ROTSE team discovered at McDonald in recent years. The team nicknamed the 2009 event “Dougie,” after a character in the cartoon “South Park.” (Its technical name is ROTSE3J120847.9+430121.)
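To get a feel for that number: each step of 5 magnitudes corresponds to a factor of 100 in brightness, and smaller (more negative) values are brighter. A quick Python sketch, assuming the -22.5 is an absolute magnitude and comparing it with the sun's absolute visual magnitude of about 4.83 (a standard reference value not given in the article):

```python
# Assumed reference value: the sun's absolute visual magnitude.
SUN_ABSOLUTE_MAGNITUDE = 4.83


def luminosity_ratio(magnitude, reference_magnitude=SUN_ABSOLUTE_MAGNITUDE):
    """Brightness of an object relative to a reference, from absolute magnitudes.

    Uses the standard relation: a difference of 5 magnitudes is a factor of 100,
    so the ratio is 10 ** (0.4 * (reference - object)).
    """
    return 10 ** (0.4 * (reference_magnitude - magnitude))


dougie_vs_sun = luminosity_ratio(-22.5)
print(f"Dougie at peak was about {dougie_vs_sun:.2e} times as bright as the sun")
```

Under those assumptions, Dougie at its peak outshone the sun by a factor of roughly 85 billion.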

The team thought Dougie might be a supernova and set about looking for its host galaxy, which would be too faint for ROTSE to see. They found that a sky survey had mapped a faint red galaxy at Dougie’s location. They used one of the giant Keck telescopes in Hawaii to pinpoint its distance: 3 billion light-years.

These deductions meant Dougie had a home — but just what was he? To narrow it down from four possibilities, they studied Dougie with the orbiting Swift telescope and the giant Hobby-Eberly Telescope at McDonald, and they made computer models. These models showed how Dougie’s light would behave if created by different physical processes. The astronomers then compared the different theoretical Dougies to their telescope observations of the real thing.

“When we discovered this new object, it looked similar to supernovae we had known already,” said lead author Jozsef Vinko of the University of Szeged in Hungary. “But when we kept monitoring its light variation, we realized that this was something nobody really saw before.”

Team member J. Craig Wheeler, leader of the supernova group at The University of Texas at Austin, said they got the idea they might be witnessing a “tidal disruption event,” in which the enormous gravity of a black hole pulls on one side of a star harder than the other side, creating tides that rip the star apart.

“These especially large tides can be strong enough that you pull the star out into a noodle” shape, said Wheeler. The star “doesn’t fall directly into the black hole,” Wheeler said. “It might form a disk first. But the black hole is destined to swallow most of that material.”

Astronomers have seen black holes swallow stars about a dozen times before, but this one is special even in that rare company: It’s not going down easily.

Models by team members James Guillochon of Harvard University and Enrico Ramirez-Ruiz of the University of California at Santa Cruz showed that the disrupted stellar matter was generating so much radiation that it pushed back on the infall. The black hole was choking on the rapidly infalling matter.

Based on the characteristics of the light from Dougie and their deductions of the star’s original mass, the team has determined that Dougie started out as a star like our sun before being ripped apart.

Their observations of the host galaxy, coupled with Dougie’s behavior, led them to surmise that the galaxy’s central black hole has the “rather modest” mass of about a million suns, Wheeler said.
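The figures above allow a rough estimate of where the star was torn apart. A common order-of-magnitude formula puts the tidal disruption radius at the star's radius times the cube root of the black-hole-to-star mass ratio. The Python sketch below applies it to a sun-like star and a black hole of a million solar masses; the formula and the physical constants are standard textbook values, not taken from the team's models:

```python
# Standard physical constants (SI units), rounded textbook values.
G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8            # speed of light, m/s
SOLAR_MASS_KG = 1.989e30
SOLAR_RADIUS_M = 6.957e8
AU_M = 1.496e11        # astronomical unit, m


def tidal_radius_m(bh_mass_kg, star_mass_kg, star_radius_m):
    """Order-of-magnitude tidal disruption radius: R_star * (M_bh / M_star)^(1/3)."""
    return star_radius_m * (bh_mass_kg / star_mass_kg) ** (1.0 / 3.0)


def schwarzschild_radius_m(mass_kg):
    """Event-horizon radius of a non-rotating black hole: 2 G M / c^2."""
    return 2.0 * G * mass_kg / C ** 2


bh_mass = 1e6 * SOLAR_MASS_KG   # "about a million suns", per the article
r_t = tidal_radius_m(bh_mass, SOLAR_MASS_KG, SOLAR_RADIUS_M)
r_s = schwarzschild_radius_m(bh_mass)

print(f"tidal radius:         {r_t:.2e} m ({r_t / AU_M:.2f} AU)")
print(f"Schwarzschild radius: {r_s:.2e} m")
print(f"ratio r_t / r_s:      {r_t / r_s:.1f}")
```

With these inputs the star is disrupted at a little under half an astronomical unit from the black hole, about two dozen times the radius of the event horizon, so the debris is pulled apart well outside the hole before most of it falls in.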

Delving into Dougie’s behavior has unexpectedly resulted in learning more about small, distant galaxies, Wheeler said, musing “Who knew this little guy had a black hole?”

The paper’s lead author, Jozsef Vinko, began the project while on sabbatical at The University of Texas at Austin. The team also includes Robert Quimby of San Diego State University, who started the search for supernovae using ROTSE IIIb and discovered the category of superluminous supernovae while a graduate student at The University of Texas at Austin.
