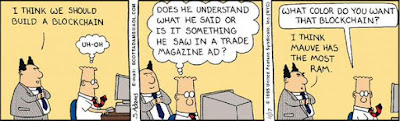

Dilbert – by Scott Adams

Interest in blockchain is at a fever pitch lately. This is in large part due to the eye-popping price dynamics of Bitcoin–the original bad-boy cryptocurrency–which everyone knows is powered by blockchain…whatever that is. But no matter. Given that even big players like Goldman Sachs are getting into the act (check out their super slick presentation here: Blockchain–The New Technology of Trust), maybe it’s time to figure out what all the fuss is about. What follows is based on a slide deck I recently presented at a Blockchain Panel at the Olin School of Business (I will link to the video as soon as it becomes available).

Things are a little confusing out there, I think, in part because not enough care is taken in defining terms before assessing pros and cons. And when terms are defined, they sometimes include desired outcomes as a part of their definition. For example, blockchain is often described as consisting of (among other things) an immutable ledger. This is like defining the Titanic to be an unsinkable ship.

So what do people mean when they bandy about the term blockchain? I recently had a chance to learn about the project from a corporate perspective as represented by Ed Corno of IBM (see IBM Blockchain), the other member of the panel I mentioned above. From Ed’s slide deck we have the following definition:

Blockchain: a shared, replicated, permissioned ledger with consensus, provenance, immutability and finality.

Well, if this is what blockchain is, then maybe I want one too! The issue I have with this definition (apart from the fact that it confounds descriptive elements with desired outcomes) is that it glosses over what I consider to be an important defining characteristic of blockchain: the consensus mechanism. Loosely speaking, there are two ways to achieve consensus. One is reputation-based (trust) and the other is game-based (trustless).

I’m not 100% sure, but I believe the corporate versions of blockchain are likely to stick to the standard model of reputation-based accounting. In this case, the efficiency gains of “blockchain” boil down to the gains associated with making databases more synchronized across trading partners, more cryptographically secure, more visible, more complete, etc. In short, there is nothing revolutionary or radical going on here — it’s just the usual advancement of the technology and methods associated with the on-going problem of database management. Labeling the endeavor blockchain is alright, I guess. It certainly makes for good marketing!

On the other hand, game-based blockchains–like the one that powers Bitcoin–are, in my view, potentially more revolutionary. But before I explain why I think this, I want to step back a bit and describe my bird’s eye view of what’s happening in this space.

A Database of Individual Action Histories

The type of information that concerns us here is not what one might label “knowledge,” say, as in the recipe for a nuclear bomb. The information in question relates more to a set of events that have happened in the past, in particular, events relating to individual actions. Consider, for example, “David washed your car two days ago.” This type of information is intrinsically useless in the sense that it is not usable in any productive manner. In addition to work histories like this, the same is true of customer service histories, delivery/receipt histories, credit histories, or any performance-related history. And yet, people value such information. It forms the bedrock of reputation and perhaps even of identity. As such, it is frequently used as a form of currency.

Why is intrinsically useless history of this form valued? A monetary theorist may tell you it’s because of a lack of commitment or a lack of trust (see Evil is the Root of All Money). If people could be relied upon to make good on their promises a priori, their track records would largely be irrelevant from an economic perspective. A good reputation is a form of capital. It is valued because it persuades creditors (believers) that more reputable agencies are more likely to make good on their promises. We keep our money in a bank not because we think bankers are angels, but because we believe the long-term franchise value of banking exceeds the short-run benefit a bank would derive from appropriating our funds. (Well, that’s the theory, at least. Admittedly, it doesn’t work perfectly.)

Note something important here. Because histories are just information, they can be created “out of thin air.” And, indeed, this is the fundamental source of the problem: people have an incentive to fabricate or counterfeit individual histories (their own and perhaps those of others) for a personal gain that comes at the expense of the community. No society can thrive, let alone survive, if its members have to worry excessively about others taking credit for their own personal contributions to the broader community. I’m writing this blog post in part (well, perhaps mainly) because I’m hoping to get credit for it.

Since humans (like bankers) are not angels, what is wanted is an honest and immutable database of histories (defined over a set of actions that are relevant for the community in question). Its purpose is to eliminate false claims of sociable behavior (acts which are tantamount to counterfeiting currency). Imagine too eliminating the frustration of discordant records. How much time is wasted in trying to settle “he said/she said” claims inside and outside of law courts? The ultimate goal, of course, is to promote fair and efficient outcomes. We may not want something like this creepy Santa Claus technology, but something similar defined over a restricted domain for a given application would be nice.

Organizing History

Let e(t) denote a set of events, or actions (relevant to the community in question), performed by an individual at date t = 0,1,2,… An individual history at date t is denoted

h(t-1) = { e(t-1), e(t-2), …, e(0) }, t = 1,2,3,…

Aggregating over individual events, we can let E(t) denote the set of individual actions at date t, and let H(t-1) denote the communal history, that is, the set of individual histories of people belonging to the community in question:

H(t-1) = { E(t-1), E(t-2), … , E(0) }, t = 1,2,3,…

Observe that E(t) can be thought of as a “block” of information (relating to a set of actions taken by members of the community at date t). If this is so, then H(t-1) consists of time-stamped blocks of information connected in sequence to form a chain of blocks. In this sense, any database consisting of a complete history of (community-relevant) events can be thought of as a “blockchain.”

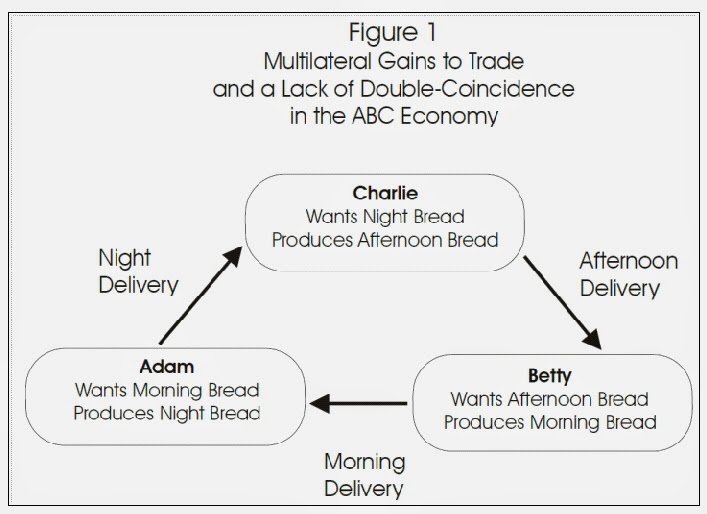
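To make the bookkeeping concrete, here is a minimal Python sketch of the notation above. The class names `Block` and `History`, and the sample events, are illustrative assumptions only. Each block holds E(t), the list of blocks is H(t-1), and an individual history h(t-1) is recovered by filtering the chain for one person.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Block:
    """E(t): the community-relevant actions recorded at date t."""
    date: int
    events: tuple  # pairs of (person, action)


@dataclass
class History:
    """H(t-1): the blocks E(0), ..., E(t-1), kept in date order."""
    blocks: list = field(default_factory=list)

    def append(self, events):
        # The next block is stamped with the next date in sequence.
        block = Block(date=len(self.blocks), events=tuple(events))
        self.blocks.append(block)
        return block

    def individual_history(self, person):
        """h(t-1) for one person: (date, action) pairs, most recent first."""
        return [
            (block.date, action)
            for block in reversed(self.blocks)
            for (who, action) in block.events
            if who == person
        ]


H = History()
H.append([("David", "washed car"), ("Ann", "delivered parcel")])  # E(0)
H.append([("Ann", "repaid loan")])                                # E(1)
H.append([("David", "wrote blog post")])                          # E(2)

print(H.individual_history("David"))
# [(2, 'wrote blog post'), (0, 'washed car')]
```

Nothing in this sketch makes the record honest or immutable. It only organizes events into time-stamped blocks, which is the point of the paragraph above: any complete, ordered history already has this shape.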

Note that there are other ways of organizing history. For example, consider a cash-based economy where people are anonymous and let e(t) denote acquisitions of cash (if positive) or expenditures of cash (if negative). Then an individual’s cash balance at the beginning of date t is given by h(t-1) = e(t-1) + e(t-2) + … + e(0). This is the sense in which “money is memory.” Measuring a person’s worth by how much money they have serves as a crude summary statistic of the net contributions they’ve made to society in the past (assuming they did not steal or counterfeit the money, of course). Another way to organize history is to specify h(t-1) = { e(t-1) }. This is the “what have you done for me lately?” model of remembering favors. The possibilities are endless. But an essential component of blockchain is that it contains a complete history of all community-relevant events. (We could perhaps generalize to truncated histories if data storage is a problem.)
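The three ways of organizing history just described can be put side by side in a short Python sketch. The function names and the sample cash flows are made up for illustration.

```python
def full_history(events):
    """h(t-1) = {e(t-1), ..., e(0)}: keep everything, most recent first."""
    return list(reversed(events))


def cash_balance(events):
    """h(t-1) = e(t-1) + ... + e(0): money as a one-number summary."""
    return sum(events)


def lately(events):
    """h(t-1) = {e(t-1)}: remember only the most recent event."""
    return events[-1:]


# Hypothetical cash flows e(0), e(1), e(2), e(3): receipts > 0, spending < 0.
e = [10, -3, 5, -4]

print(full_history(e))  # [-4, 5, -3, 10]
print(cash_balance(e))  # 8
print(lately(e))        # [-4]
```

The cash balance and the "lately" record both throw information away: many different histories produce the same balance of 8. Only the first function keeps the complete record.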

Database Management Systems (DBMS) and the Read/Write Privilege

Alright then, suppose that a given community (consisting of people, different divisions within a firm, different firms in a supply chain, etc.) wants to manage a chained-block of histories H(t-1) over time. How is this to be done?

Along with a specification of what is to constitute the relevant information to be contained in the database, any DBMS will have to specify parameters restricting:

1. The Read Privilege (who, what, and how);

2. The Write Privilege (who, what, and how).

That is, who gets to read and write history? Is the database to be completely open, like a public library? Or will some information be held in locked vaults, accessible only with permission? And if by permission, how is this to be granted? By a trusted person, by algorithm, or in some other manner? Even more important is the question of who gets to write history. As I explained earlier, the possibility for manipulation along this dimension is immense. How do we guard against attempts to fabricate history?
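As a rough illustration of these two parameters, the sketch below models a ledger whose read and write privileges are set when it is created. The `Ledger` class and the participant names are hypothetical; a real DBMS would handle this through its own permission system.

```python
class Ledger:
    """A toy ledger with explicit read and write privileges."""

    def __init__(self, readers=None, writers=()):
        self._blocks = []
        # readers=None means the ledger is open to everyone, like a library.
        self._readers = None if readers is None else set(readers)
        self._writers = set(writers)

    def read(self, who):
        if self._readers is not None and who not in self._readers:
            raise PermissionError(f"{who} may not read this ledger")
        return tuple(self._blocks)

    def write(self, who, block):
        if who not in self._writers:
            raise PermissionError(f"{who} may not write to this ledger")
        self._blocks.append(block)


# Open read privilege, write privilege delegated to one trusted party.
ledger = Ledger(readers=None, writers={"historian"})
ledger.write("historian", "E(0)")

try:
    ledger.write("mallory", "fabricated E(1)")
    rejected = False
except PermissionError:
    rejected = True

print(rejected)               # True
print(ledger.read("anyone"))  # ('E(0)',)
```

The "who" is answered here by simple membership sets. The "what" and "how" questions in the list above are left out of this sketch entirely.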

Historically, in “small” communities (think traditional hunter-gatherer societies) this was accomplished more or less automatically. There are no strangers in a small, isolated village and communal monitoring is relatively easy. Brave deeds and foul acts alike, unobserved by some or even most, rapidly become common knowledge. This is true even of the small communities we belong to today (at work, in clubs, families, friends, etc.). Kocherlakota (1996) labels H(t-1) in this scenario “societal memory.” I like to think of it as a virtual database of individual histories living in a distributed ledger of brains talking to each other in a P2P fashion, with additions to, and maintenance of, the shared history determined through a consensus mechanism. In this primitive DBMS, read and write privileges are largely open, the latter being subject to consensus. It all sounds so...blockchainy.

While the primitive “blockchain” described above works well enough for small societies, it doesn’t scale very well. Today, the traditional local networks of human brains have been augmented (and to some extent replaced) by local and global networks of computers capable of communicating over the Internet. Achieving rapid consensus in a large heterogeneous community characterized by vast flows of information is a rather daunting task.

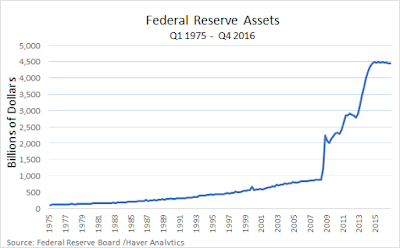

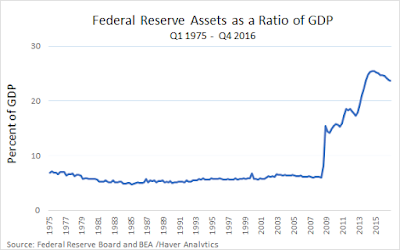

The “solution” to this problem has largely taken the form of proprietary databases with highly restricted read privileges managed by trusted entities who are delegated the write privilege. The double-spend problem for digital money, for example, is solved by delegating the record-keeping task to a bank, located within a banking system, performing debit/credit operations on a set of proprietary ledgers connected to a central hub (a clearing agency) typically managed by a central bank.
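A minimal sketch of the trusted-ledger approach to double spending follows. The `Bank` class and the account names are assumptions for illustration, and the clearing hub that connects several banks is left out. The key feature is that only the bank writes balances, so a second attempt to spend the same funds is simply refused.

```python
class Bank:
    """Trusted record-keeper: the only party allowed to write balances."""

    def __init__(self, balances):
        self._balances = dict(balances)

    def balance(self, account):
        return self._balances.get(account, 0)

    def transfer(self, payer, payee, amount):
        """Debit the payer and credit the payee; refuse if funds are short."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balance(payer) < amount:
            return False
        self._balances[payer] -= amount
        self._balances[payee] = self.balance(payee) + amount
        return True


bank = Bank({"alice": 5})
first = bank.transfer("alice", "bob", 5)
second = bank.transfer("alice", "carol", 5)  # tries to spend the same 5 again

print(first, second)                                 # True False
print(bank.balance("bob"), bank.balance("carol"))    # 5 0
```

The check itself costs almost nothing. What it requires is that everyone trusts the bank to apply it faithfully.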

The Problem and the Blockchain Solution

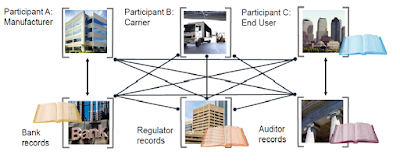

Depending on your perspective, the system that has evolved to date is either (if you were born before 1980) a great improvement over how things operated when we were young, or (if you were born after 1980) a hopelessly tangled hodgepodge of networks that have trouble communicating with each other and are intolerably vulnerable to data breaches (see figure below, courtesy Ed Corno of IBM).

The solution to this present state of affairs is presented as blockchain (defined earlier), which Ed depicts in the following way:

Well sure, this looks like a more organized way to keep the books and clear up communication channels, though the details concerning how consensus is achieved in this system remain a little hazy to me. As I mentioned earlier, I’m guessing that it’ll be based on some reputation-based mechanism. But if this is the case, then why can’t we depict the solution in the following way?

That is, gather all the agents and agencies interacting with each other, forming them into a more organized community, but keep it based on the traditional client-server (or hub-and-spoke) model. In the center, we have the set of trusted “historians” (bankers, accountants, auditors, database managers, etc.) who are granted the write-privilege. Communications between members may be intermediated either by historians or take place in a P2P manner with the historians listening in. The database can consist of the chain-blocked sets of information (blockchain) H(t-1) described above. The parameters governing the read-privilege can be determined beforehand by the needs of the community. The database could be made completely open–which is equivalent to rendering it shared. And, of course, multiple copies of the database can be made as often as is deemed necessary.

The point I’m making is, if we’re ultimately going to depend on reputation-based consensus mechanisms, then we need no new innovation (like blockchain) to organize a database. While I’m no expert in the field of database management, it seems to me that standard protocols, for example, in the form of SQL Server 2017, can accommodate what is needed technologically and operationally (if anyone disagrees with me on this matter, please comment below).

Extending the Write Privilege: Game-Based Consensus

As explained above, extending the read-privilege is not a problem technologically. We are all free to publish our diaries online, creating a shared-distributed ledger of our innermost thoughts. Extending the write-privilege to unknown or untrusted parties, however, is an entirely different matter. Of course, this depends in part on the nature of the information to be stored. Wikipedia seems to work tolerably well. But it’s hard to use Wikipedia as currency. This is not the case with personal action histories. You don’t want other people writing your diary!

Well, fine, so you don’t trust “the Man.” What then? One alternative is to game the write privilege. The idea is to replace the trusted historian with a set of delegates drawn from the community (a set potentially consisting of the entire community). Next, have these delegates play a validation/consensus game designed in such a way that the equilibrium (say, Nash or some other solution concept) strategy profile chosen by each delegate at every date t = 1,2,3,… entails: (1) No tampering with recorded history H(t-1); and (2) Only true blocks E(t) are validated and appended to the ledger H(t-1).
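To give a feel for what such a game looks like, here is a deliberately crude Python sketch. The payoffs are invented for illustration and do not describe any real protocol: each of five delegates either reports honestly or tampers, a delegate is paid `reward` for siding with the strict majority, and a tampering majority also splits some `loot`. The code enumerates every strategy profile and keeps those from which no delegate gains by deviating alone (the Nash equilibria).

```python
from itertools import product

ACTIONS = ("honest", "tamper")


def payoff(i, profile, reward=1.0, loot=0.5):
    """Hypothetical payoff to delegate i under the given strategy profile."""
    votes_tamper = sum(action == "tamper" for action in profile)
    majority = "tamper" if votes_tamper * 2 > len(profile) else "honest"
    if profile[i] != majority:
        return 0.0  # minority reports earn nothing
    return reward + (loot if majority == "tamper" else 0.0)


def is_nash(profile):
    """True if no delegate gains by unilaterally switching action."""
    for i, current in enumerate(profile):
        for alternative in ACTIONS:
            if alternative == current:
                continue
            deviated = profile[:i] + (alternative,) + profile[i + 1:]
            if payoff(i, deviated) > payoff(i, profile):
                return False
    return True


n = 5
equilibria = [p for p in product(ACTIONS, repeat=n) if is_nash(p)]
for p in equilibria:
    print(p)
```

Under these made-up payoffs, all-honest is an equilibrium, but so is all-tamper. A well-designed game has to rule out the second one, and that is exactly the hard part.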

What we have done here is replace one type of faith with another. Instead of having faith in mechanisms that rely on personal reputations, we must now trust that the mechanism governing non-cooperative play in the validation/consensus game will deliver a unique equilibrium outcome with the desired properties. I think this is in part what people mean when I hear them say “trust the math.”

Well, trusting the math is one thing. Trusting in the outcome of a non-cooperative game is quite another matter. The relevant field in economics is called mechanism design. I’m not going to get into details here, but suffice it to say, it’s not so straightforward designing mechanisms with sure-fire good properties. Ironically, mechanisms like Bitcoin will have to build up trust the old-fashioned way–through positive user experience (much the same way most of us trust our vehicles to function, even if we have little idea how an internal combustion engine works).

Of course, the same holds true for games based on reputational mechanisms. The difference is, I think, that non-cooperative consensus games are intrinsically more costly to operate than their reputational counterparts. The proof-of-work game played by Bitcoin miners, for example, is made intentionally costly (to prevent DDoS attacks) even though validating the relevant transaction information is virtually costless if left in the hands of a trusted validator. And if a lack of transparency is the problem for trusted systems, this conceptually separate issue can be dealt with by extending the read-privilege communally.
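The cost asymmetry can be seen in a toy hash puzzle. This is a simplified stand-in written for illustration, not Bitcoin's actual block format or difficulty rule: a "miner" searches for a nonce whose SHA-256 digest starts with a given number of zero hex digits, while anyone can check the answer with a single hash.

```python
import hashlib


def digest(block: str, nonce: int) -> str:
    """SHA-256 of the block text combined with a candidate nonce."""
    return hashlib.sha256(f"{block}|{nonce}".encode()).hexdigest()


def mine(block: str, difficulty: int):
    """Brute-force search for a nonce whose digest has `difficulty` leading zeros."""
    target = "0" * difficulty
    nonce = 0
    while not digest(block, nonce).startswith(target):
        nonce += 1
    return nonce, nonce + 1  # winning nonce, number of hashes tried


def verify(block: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution takes one hash."""
    return digest(block, nonce).startswith("0" * difficulty)


block = "E(t): David washed your car"
nonce, attempts = mine(block, difficulty=3)

print(f"mining took {attempts} hashes; verifying takes 1")
print(verify(block, nonce, difficulty=3))  # True
```

Each extra leading zero multiplies the expected search effort by 16, while verification stays at one hash. The work is costly by design, which is the contrast with a trusted validator drawn in the paragraph above.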

Having said this, I think that depending on the circumstances and the application, the cost associated with a game-based consensus mechanism may be worth incurring. I think we have to remain agnostic on this matter for now and see how future developments unfold.

Blockchain: Powering DAOs

If Blockchain (with non-cooperative consensus) has a comparative advantage, where might it be? To me, the clear application is in supporting Decentralized Autonomous Organizations (DAOs). A DAO is basically a set of rules written as a computer program. Because it possesses no central authority or node, it can offer tailor-made “legal” systems unencumbered by prevailing laws and regulations, at least, insofar as transactions are limited to virtual fulfillments (e.g., debit/credit operations on a ledger).

Bitcoin is an example of a DAO, though the intermediaries that are associated with Bitcoin obviously are not. Ethereum is a platform that permits the construction of more sophisticated DAOs via the use of smart contracts. The comparative advantages of DAOs are that they permit: (1) a higher degree of anonymity; (2) permissionless access and use; and (3) commitment to contractual terms (smart contracts).
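The "commitment to contractual terms" idea can be sketched as a small program whose rules cannot be renegotiated once it is created. The example below is plain Python, not code for Ethereum or any real smart-contract platform. The `Escrow` class, the party names, and the `delivered` flag (which in practice would need some trusted source of information) are all assumptions for illustration.

```python
class Escrow:
    """Toy contract: pay the seller on delivery, refund the buyer after the deadline.

    The terms are fixed at creation; no method lets either party alter them.
    """

    def __init__(self, ledger, buyer, seller, price, deadline):
        if ledger.get(buyer, 0) < price:
            raise ValueError("buyer cannot fund the escrow")
        ledger[buyer] -= price  # funds are locked up front
        self._ledger = ledger
        self._buyer, self._seller = buyer, seller
        self._price, self._deadline = price, deadline
        self.settled = False

    def settle(self, now, delivered):
        """Apply the rules; return who was paid, or None if it is too early."""
        if self.settled:
            raise RuntimeError("already settled")
        if delivered:
            payee = self._seller
        elif now > self._deadline:
            payee = self._buyer
        else:
            return None
        self._ledger[payee] = self._ledger.get(payee, 0) + self._price
        self.settled = True
        return payee


ledger = {"buyer": 10, "seller": 0}
contract = Escrow(ledger, "buyer", "seller", price=4, deadline=5)

print(contract.settle(now=3, delivered=False))  # None
print(contract.settle(now=4, delivered=True))   # seller
print(ledger)                                   # {'buyer': 6, 'seller': 4}
```

Note that everything here is a debit or credit on a ledger, which matches the "virtual fulfillments" caveat above. Whether the car actually got washed is outside what the program can observe on its own.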

It’s not immediately clear to me what value these comparative advantages have for registered businesses. There may be a role for legally compliant smart contracts (a tricky business for international transactions). But perhaps the potential is much more than I can presently imagine. Time will tell.

Link to my past posts on the subject of Bitcoin and Blockchain.